NVIDIA H100 dominates the authoritative AI performance test, completing large model training based on GPT-3 in 11 minutes

On Tuesday local time, MLCommons, an open industry consortium for machine learning and artificial intelligence, released the latest results from two MLPerf benchmarks. In these tests of AI computing performance, NVIDIA's H100 GPU set new records in every category and was the only hardware platform to run all of the tests.

(Source: NVIDIA, MLCommons)

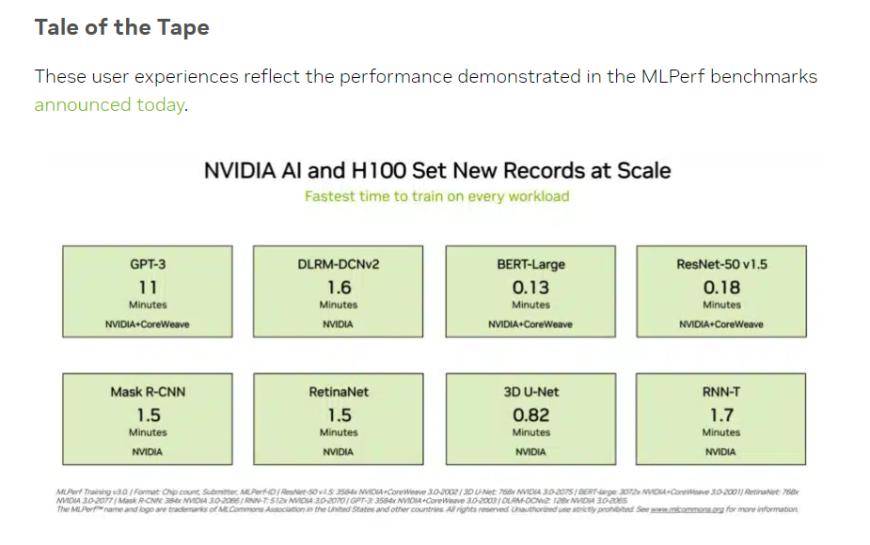

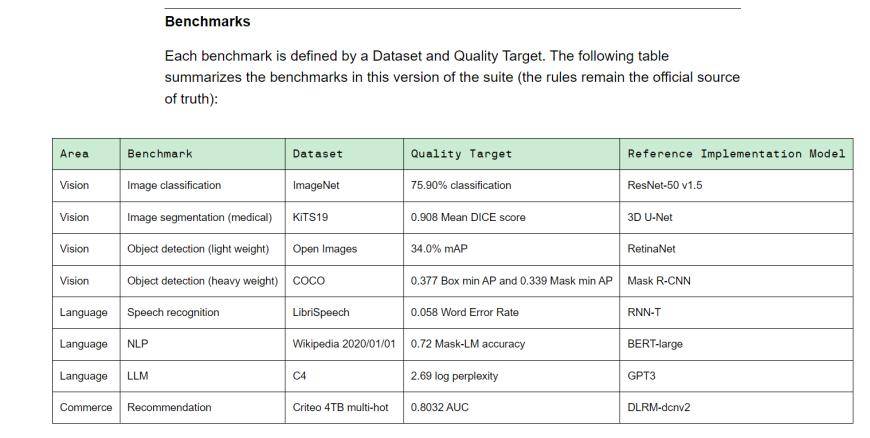

MLPerf is an AI benchmark suite developed by a consortium of academic, research-lab and industry members, and it is now an internationally recognized, authoritative benchmark for evaluating AI performance. Training v3.0 contains eight workloads: vision (image classification, biomedical image segmentation, and two object detection workloads), language (speech recognition, large language models, and natural language processing), and recommendation systems. Each result is the time a vendor's system takes to train a model on one of these tasks to a target quality level, so different vendors can be compared directly by elapsed time.

(Training v3.0 training benchmark, source: MLCommons)

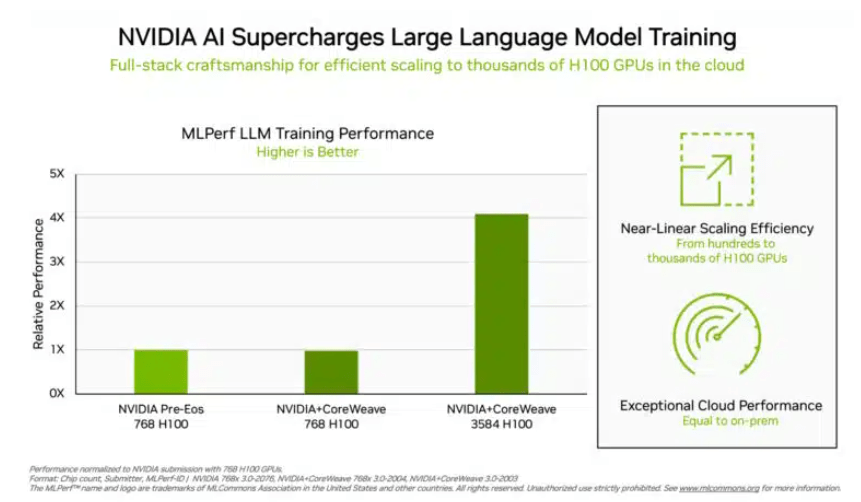

In the large language model training test, which investors follow most closely, the results submitted by NVIDIA and the GPU cloud platform CoreWeave set a demanding bar for the industry. Using 896 Intel Xeon 8462Y processors and 3,584 NVIDIA H100 GPUs, their system completed the GPT-3-based large language model training task in just 10.94 minutes.

Apart from NVIDIA, Intel was the only vendor with results in this test. A system built with 96 Xeon 8380 processors and 96 Habana Gaudi2 AI chips took 311.94 minutes to complete the same task. By comparison, an NVIDIA platform with 768 H100 GPUs took only 45.6 minutes.

(More chips mean shorter training times; source: NVIDIA)

Intel acknowledged that there is still room for improvement; in theory, adding more chips would shorten the training time. Jordan Plawner, Intel's senior director of AI products, told the media that Habana's results are expected to improve by 1.5x to 2x. Plawner declined to disclose the price of the Habana Gaudi2, saying only that the industry needs a second supplier of AI training chips and that the MLPerf results show Intel is able to fill that need.
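As a rough check on the idea that adding chips shortens training, the two NVIDIA H100 results quoted in this article (45.6 minutes on 768 GPUs and 10.94 minutes on 3,584 GPUs) can be compared. The sketch below is only an illustration: the function name `scaling_summary` is hypothetical, and it assumes "ideal" scaling means training time falls in exact proportion to GPU count. It ignores any other differences between the two submissions, such as host CPUs, interconnect or software.

```python
# Rough scaling check using the GPT-3 results quoted in the article.
# Assumption: ideal (linear) scaling means training time falls in
# proportion to the number of accelerators. Illustrative only.

def scaling_summary(t_small: float, n_small: int, t_large: float, n_large: int) -> dict:
    """Compare two time-to-train results for the same benchmark.

    t_small, t_large: training times in minutes
    n_small, n_large: number of accelerators used for each result
    """
    speedup = t_small / t_large           # how much faster the larger system finished
    ideal_speedup = n_large / n_small     # speedup if scaling were perfectly linear
    efficiency = speedup / ideal_speedup  # fraction of the ideal speedup achieved
    return {
        "speedup": speedup,
        "ideal_speedup": ideal_speedup,
        "efficiency": efficiency,
    }


if __name__ == "__main__":
    # H100 figures from the article: 768 GPUs -> 45.6 min, 3,584 GPUs -> 10.94 min
    result = scaling_summary(t_small=45.6, n_small=768, t_large=10.94, n_large=3584)
    for key, value in result.items():
        print(f"{key}: {value:.2f}")
```

With these inputs the sketch prints a speedup of 4.17 against an ideal 4.67, or roughly 89% of linear scaling. This supports the general point that more chips finish faster, while suggesting the gain per added chip is somewhat below proportional.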

In BERT-Large training, a workload more familiar to Chinese investors, NVIDIA and CoreWeave pushed the time down to just 0.13 minutes; with 64 GPUs, the result was 0.89 minutes. The Transformer architecture used in BERT is the foundation of today's mainstream large models.

Source: Financial Associated Press

The above is the detailed content of NVIDIA H100 dominates the authoritative AI performance test, completing large model training based on GPT-3 in 11 minutes. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1676

1676

14

14

1429

1429

52

52

1333

1333

25

25

1278

1278

29

29

1257

1257

24

24

How to Build MultiModal AI Agents Using Agno Framework?

Apr 23, 2025 am 11:30 AM

How to Build MultiModal AI Agents Using Agno Framework?

Apr 23, 2025 am 11:30 AM

While working on Agentic AI, developers often find themselves navigating the trade-offs between speed, flexibility, and resource efficiency. I have been exploring the Agentic AI framework and came across Agno (earlier it was Phi-

OpenAI Shifts Focus With GPT-4.1, Prioritizes Coding And Cost Efficiency

Apr 16, 2025 am 11:37 AM

OpenAI Shifts Focus With GPT-4.1, Prioritizes Coding And Cost Efficiency

Apr 16, 2025 am 11:37 AM

The release includes three distinct models, GPT-4.1, GPT-4.1 mini and GPT-4.1 nano, signaling a move toward task-specific optimizations within the large language model landscape. These models are not immediately replacing user-facing interfaces like

How to Add a Column in SQL? - Analytics Vidhya

Apr 17, 2025 am 11:43 AM

How to Add a Column in SQL? - Analytics Vidhya

Apr 17, 2025 am 11:43 AM

SQL's ALTER TABLE Statement: Dynamically Adding Columns to Your Database In data management, SQL's adaptability is crucial. Need to adjust your database structure on the fly? The ALTER TABLE statement is your solution. This guide details adding colu

New Short Course on Embedding Models by Andrew Ng

Apr 15, 2025 am 11:32 AM

New Short Course on Embedding Models by Andrew Ng

Apr 15, 2025 am 11:32 AM

Unlock the Power of Embedding Models: A Deep Dive into Andrew Ng's New Course Imagine a future where machines understand and respond to your questions with perfect accuracy. This isn't science fiction; thanks to advancements in AI, it's becoming a r

Rocket Launch Simulation and Analysis using RocketPy - Analytics Vidhya

Apr 19, 2025 am 11:12 AM

Rocket Launch Simulation and Analysis using RocketPy - Analytics Vidhya

Apr 19, 2025 am 11:12 AM

Simulate Rocket Launches with RocketPy: A Comprehensive Guide This article guides you through simulating high-power rocket launches using RocketPy, a powerful Python library. We'll cover everything from defining rocket components to analyzing simula

Google Unveils The Most Comprehensive Agent Strategy At Cloud Next 2025

Apr 15, 2025 am 11:14 AM

Google Unveils The Most Comprehensive Agent Strategy At Cloud Next 2025

Apr 15, 2025 am 11:14 AM

Gemini as the Foundation of Google’s AI Strategy Gemini is the cornerstone of Google’s AI agent strategy, leveraging its advanced multimodal capabilities to process and generate responses across text, images, audio, video and code. Developed by DeepM

Open Source Humanoid Robots That You Can 3D Print Yourself: Hugging Face Buys Pollen Robotics

Apr 15, 2025 am 11:25 AM

Open Source Humanoid Robots That You Can 3D Print Yourself: Hugging Face Buys Pollen Robotics

Apr 15, 2025 am 11:25 AM

“Super happy to announce that we are acquiring Pollen Robotics to bring open-source robots to the world,” Hugging Face said on X. “Since Remi Cadene joined us from Tesla, we’ve become the most widely used software platform for open robotics thanks to

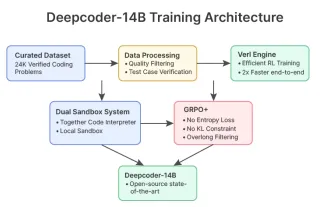

DeepCoder-14B: The Open-source Competition to o3-mini and o1

Apr 26, 2025 am 09:07 AM

DeepCoder-14B: The Open-source Competition to o3-mini and o1

Apr 26, 2025 am 09:07 AM

In a significant development for the AI community, Agentica and Together AI have released an open-source AI coding model named DeepCoder-14B. Offering code generation capabilities on par with closed-source competitors like OpenAI