Big breakthrough announced by the Chinese Academy of Sciences! At 1.5 to 10 times faster than Nvidia, will optical AI chips change the world? A leading concept stock hits consecutive daily limits


Optical computing has shown rapid development in the field of AI and has broad application prospects.

Recently, a team led by Researcher Li Ming and Academician Zhu Ninghua of the Microwave Optoelectronics Research Group at the State Key Laboratory of Integrated Optoelectronics, Institute of Semiconductors, Chinese Academy of Sciences, developed an ultra-highly integrated optical convolution processor. The results were published in Nature Communications under the title "Compact optical convolution processing unit based on multimode interference".

This marks a major breakthrough in optical computing in China. CITIC Construction Investment stated outright that the breakthrough has broad prospects in the field of AI. Optical computing is a technology that uses light waves as the carrier for information processing. It offers large bandwidth, low latency, and low power consumption, and it provides a computing architecture in which "transmission is computation and structure is function". It is therefore expected to avoid the "tidal" data transmission problem of the von Neumann computing paradigm, in which data must flow back and forth between memory and processor.
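For readers unfamiliar with the operation an optical convolution processor is meant to accelerate, the following is a minimal sketch of a 2D convolution in the sliding-window form used by neural networks. It is ordinary digital arithmetic written for illustration only and does not model the multimode-interference device from the paper; the function name `conv2d_valid`, the 4x4 input, and the 2x2 kernel are all assumptions chosen for the example.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding) and sum the element-wise products.

    Illustrative only: an optical processor would perform these
    multiply-accumulate steps as light propagates through the chip.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            # Multiply-accumulate over the window anchored at (i, j).
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        out.append(row)
    return out


# Hypothetical 4x4 "image" holding the values 0..15, row by row.
image = [[4 * r + c for c in range(4)] for r in range(4)]
# Hypothetical 2x2 kernel that subtracts the lower-right pixel from the upper-left one.
kernel = [[1.0, 0.0], [0.0, -1.0]]

result = conv2d_valid(image, kernel)
print(result)
```

Every output element costs one multiply-accumulate per kernel weight, which is why convolution dominates the workload of many AI models and is a natural target for specialized hardware.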

CITIC Construction Investment pointed out that optical computing has developed rapidly in the field of artificial intelligence in recent years and has broad application prospects. Companies such as Lightmatter and Lightelligence have launched new silicon photonic computing chips whose performance far exceeds that of current AI computing chips. According to data from Lightmatter, its Envise chip runs 1.5 to 10 times faster than Nvidia's A100 chip.

Can optical chips challenge Nvidia's AI chips? On Tuesday, AMD, Nvidia's biggest rival, showed off its upcoming MI300X AI chip, a GPU-only part that the company calls an accelerator. It can speed up the generative AI processing used by ChatGPT and other chatbots and can use up to 192GB of memory, whereas Nvidia's H100 chip supports only 120GB. Nvidia's dominance in this emerging market may be challenged.

Yueling shares quickly hit the daily limit

Beyond the news above, optical computing and optical chips are also tied to CPO (co-packaged optics), currently the hottest concept in the market. As artificial intelligence advances, demand for optical modules has grown rapidly, which benefits optical chips as their core component. Indium phosphide optical chips and components are the largest cost item in an optical module. Their performance directly determines the module's transmission rate, making them one of the core links in the optical communications industry chain.

Buoyed by multiple positive factors such as the CPO concept, optical chip stocks have recently begun to attract the market's favor. Optical chip concept stocks traded strongly in early trading today, with Shijia Photonics soaring nearly 15%. Shijia Photonics' main business covers three major segments: optical chips and devices, indoor optical cables, and cable materials. The company stated on the investor interaction platform on January 31 that its O-band LWDM high-power DFB laser chips have entered the sample delivery and sample order stage in the field of optical computing.

Yueling shares performed even more explosively, soaring to the daily limit in only about 5 minutes. At the morning close, the stock was trading at 11.45 yuan, up 9.99%, with nearly 100,000 lots of buy orders queued at the limit price. This is the stock's third daily limit within 4 trading days. According to available information, Zhongshi Optical Core, a company in which Yueling Co., Ltd. holds a stake, mainly develops and mass-produces indium phosphide-based compound semiconductor laser chips, with a focus on optical communication products. Zhongke Optical Core, a wholly-owned subsidiary of Zhongshi Optical Core, has a complete production line covering epitaxial growth, chip micro-nano processing, and device packaging. Its existing products include epitaxial wafers, chips, TO devices, butterfly devices, PON devices, and optical modules. It is a high-tech enterprise with fully independent intellectual property rights and the ability to independently design and mass-produce optical chips and devices.
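The quoted figures (11.45 yuan, up 9.99% rather than a round 10%) follow from how a limit-up price is rounded to the nearest 0.01 yuan. The sketch below works through that arithmetic under two assumptions that the article does not state: a 10% daily limit, as applies to ordinary main-board A-shares, and a previous close of 10.41 yuan, inferred backward from the quoted price and percentage. The helper names are hypothetical.

```python
from decimal import Decimal, ROUND_HALF_UP

# Assumption: 10% daily price limit for an ordinary main-board A-share.
LIMIT_RATE = Decimal("0.10")
CENT = Decimal("0.01")


def limit_up_price(prev_close: Decimal) -> Decimal:
    """Highest tradable price for the day, rounded to the nearest 0.01 yuan."""
    return (prev_close * (1 + LIMIT_RATE)).quantize(CENT, rounding=ROUND_HALF_UP)


def pct_change(prev_close: Decimal, price: Decimal) -> Decimal:
    """Percentage change versus the previous close, rounded to two decimals."""
    return ((price - prev_close) / prev_close * 100).quantize(CENT, rounding=ROUND_HALF_UP)


# Assumption: previous close inferred from the article's 11.45 yuan and +9.99%.
prev_close = Decimal("10.41")

price = limit_up_price(prev_close)      # 10.41 * 1.10 = 11.451, rounded to 11.45
change = pct_change(prev_close, price)  # (11.45 - 10.41) / 10.41 * 100

print(f"limit-up price: {price} yuan, change: {change}%")
```

Because the unrounded limit of 11.451 yuan is rounded down to 11.45, the displayed gain comes out slightly below 10%.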

There are only 4 high-quality high-growth stocks

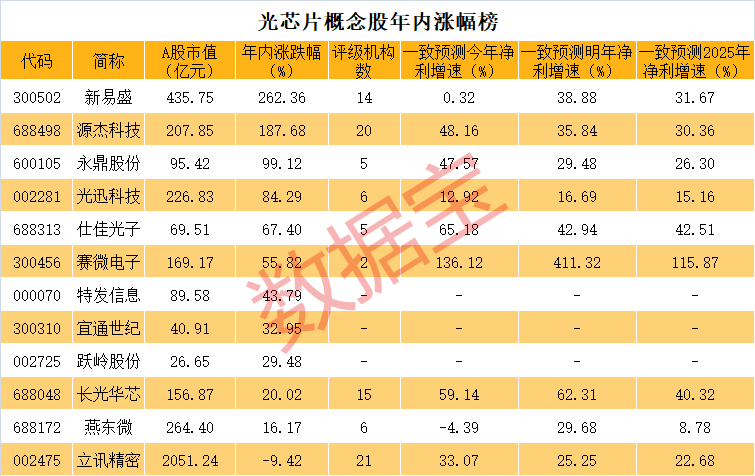

Few stocks in the A-share market are tied to the optical chip concept. According to incomplete statistics from Databao, there are 12 optical chip concept stocks in total, with a combined market value of more than 383.4 billion yuan. In terms of market performance, Xinyi Sheng has gained the most so far this year, up more than 262%, followed by Yuanjie Technology, up more than 187%.

Luxshare Precision, Yuanjie Technology, Changguang Huaxin, and Xinyi Sheng are each covered and rated by more than 10 institutions. In terms of growth, Yuanjie Technology, Changguang Huaxin, Shijia Photonics, and Sai Microelectronics are all forecast to post net profit growth of more than 30% this year, next year, and in 2025.
