Chinese large language models sit the college entrance exam: SenseTime, Shanghai AI Lab and others release "Scholar·Puyu"


Heart of Machine Release

Heart of Machine Editorial Department

Today, the annual college entrance examination officially kicked off.

Unlike in previous years, as candidates across the country headed into the examination halls, several large language models also joined the contest as special participants.

As large language models increasingly demonstrate near-human intelligence, difficult, comprehensive exams designed for humans are more and more often used to evaluate how capable these models are.

For example, in the GPT-4 technical report, OpenAI assesses the model mainly through examinations in various fields, and the excellent "test-taking ability" GPT-4 displayed came as a surprise.

How do Chinese large language models fare on college entrance examination papers? Can they catch up with ChatGPT? Let's look at the performance of one "candidate".

Comprehensive "big exam": "Scholar·Puyu" leads ChatGPT on multiple results

Recently, SenseTime and Shanghai AI Laboratory, together with the Chinese University of Hong Kong, Fudan University and Shanghai Jiao Tong University, released "Scholar·Puyu" (InternLM), a large language model at the 100-billion-parameter scale.

"Scholar·Puyu" has 104 billion parameters and was trained on a high-quality multilingual data set containing 1.6 trillion tokens.

Comprehensive evaluation results show that "Scholar·Puyu" not only performs well on individual test tasks such as knowledge mastery, reading comprehension, mathematical reasoning and multilingual translation, but also has strong overall ability, so it does very well in comprehensive examinations. It performs outstandingly in many Chinese exams and achieves results exceeding ChatGPT, including on the data set covering the subjects of the Chinese college entrance examination (Gaokao).

The "Scholar·Puyu" joint team selected more than 20 evaluations for testing, including the four most influential comprehensive examination evaluation sets in the world:

- MMLU, a multi-task test evaluation set built by the University of California, Berkeley and other universities;

- AGIEval, a subject examination evaluation set launched by Microsoft Research (including China's college entrance examination and judicial examination, and the American SAT, LSAT, GRE and GMAT, among others);

- C-Eval, a comprehensive examination evaluation set for Chinese language models jointly constructed by Shanghai Jiao Tong University, Tsinghua University and the University of Edinburgh;

- Gaokao, a collection of college entrance examination questions constructed by the Fudan University research team.

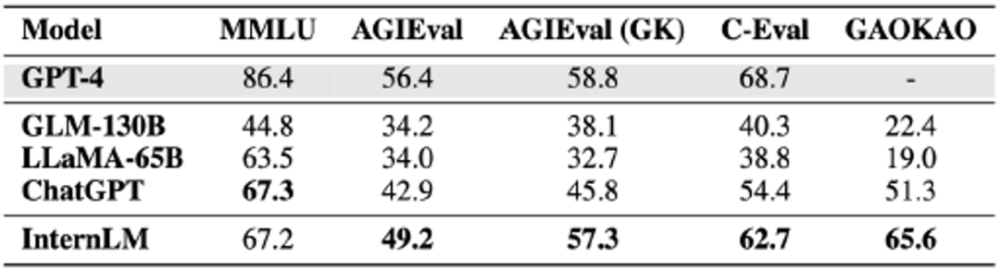

The joint team comprehensively tested "Scholar·Puyu", GLM-130B, LLaMA-65B, ChatGPT and GPT-4. Their results on the four evaluation sets above are compared as follows (the full score is 100 points).

"Scholar·Puyu" not only significantly surpasses academic open-source models such as GLM-130B and LLaMA-65B, but also leads ChatGPT in multiple comprehensive exams such as AGIEval, C-Eval and Gaokao; on MMLU, which is mainly based on United States exams, it reaches the same level as ChatGPT. The results of these comprehensive examinations reflect the solid knowledge and excellent overall ability of "Scholar·Puyu".

Although "Scholar·Puyu" achieved excellent results in the exam evaluations, the evaluations also show that large language models still have many limitations. "Scholar·Puyu" is limited by its context window length of 2K (the context window length of GPT-4 is 32K), and it has obvious limitations in long-text understanding, complex reasoning, code writing and mathematical logic deduction. In addition, in actual conversations, large language models still suffer from common problems such as hallucination and conceptual confusion. Because of these limitations, large language models still have a long way to go before they can be used in open scenarios.

Results on the four comprehensive examination evaluation data sets

MMLU is a multi-task test evaluation set jointly constructed by the University of California, Berkeley (UC Berkeley), Columbia University, the University of Chicago and UIUC, covering elementary mathematics, physics, chemistry, computer science, U.S. history, law, economics, diplomacy and many other disciplines.

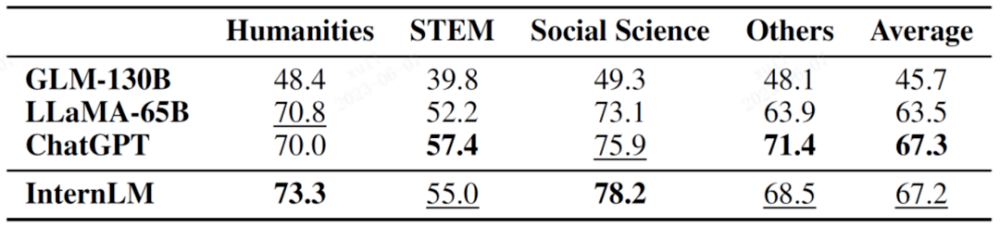

The per-subject results are shown in the table below.

Bold in the figure indicates the best result, and underline indicates the second-best result.

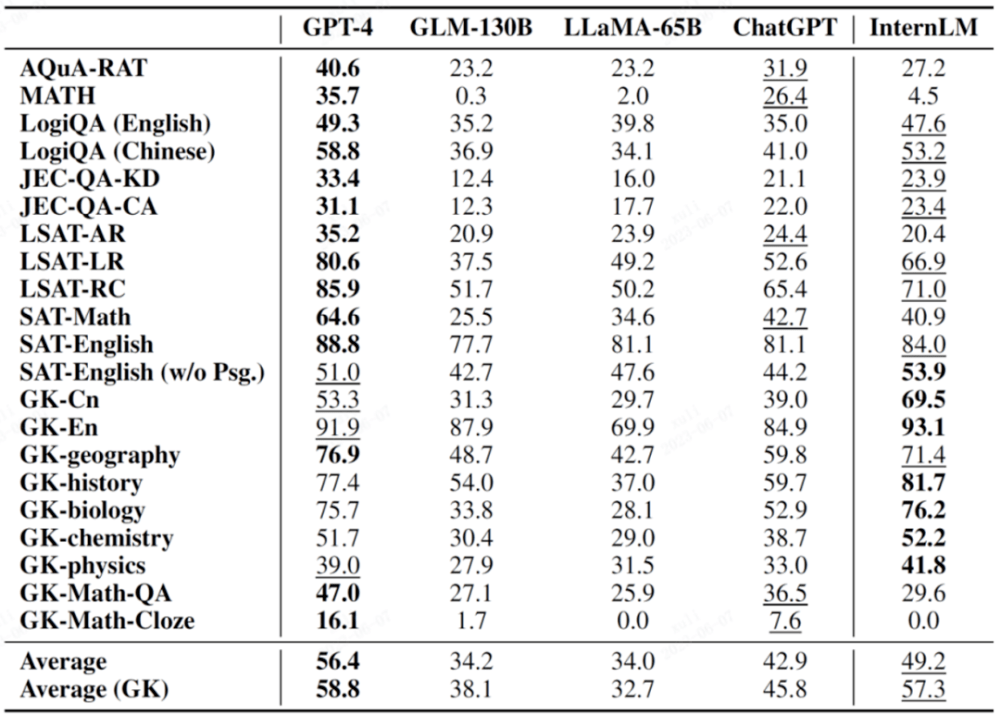

AGIEval is a new subject examination evaluation set proposed by Microsoft Research this year. Its main goal is to evaluate the ability of language models through human-oriented examinations, so that model intelligence can be compared with human intelligence.

This evaluation set consists of 19 evaluation items based on various examinations in China and the United States, including China's college entrance examination and judicial examination, and important United States examinations such as the SAT, LSAT, GRE and GMAT. It is worth mentioning that 9 of these 19 items come from the Chinese college entrance examination, and they are usually listed as an important evaluation subset, AGIEval (GK).

In the following table, the items marked with GK are Chinese college entrance examination subjects.

Bold in the figure indicates the best result, and underline indicates the second-best result.

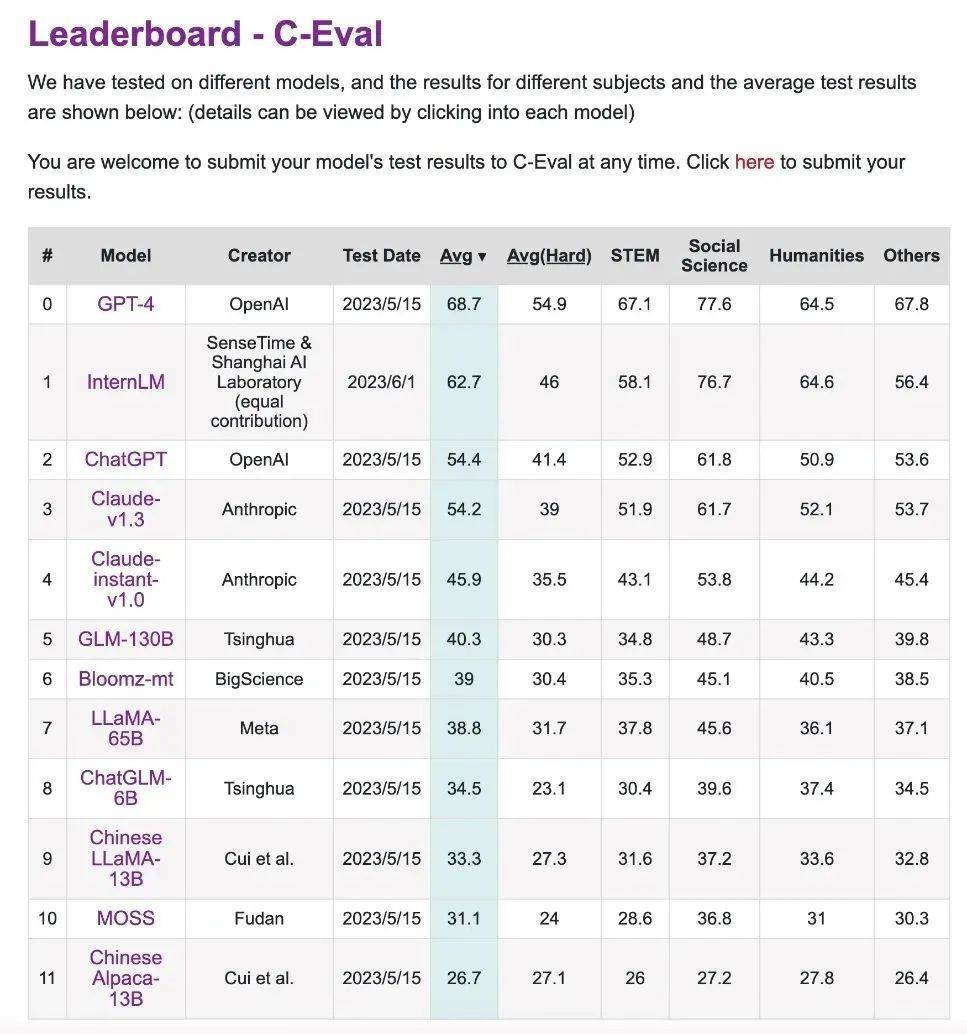

C-Eval is a comprehensive examination evaluation set for Chinese language models jointly constructed by Shanghai Jiao Tong University, Tsinghua University and the University of Edinburgh.

It contains nearly 14,000 test questions in 52 subjects, covering subject examinations in mathematics, physics, chemistry, biology, history, politics, computer science and other fields, as well as professional examinations for civil servants, certified public accountants, lawyers and doctors.

Test results are available on the C-Eval leaderboard, the ranking list of the C-Eval evaluation.

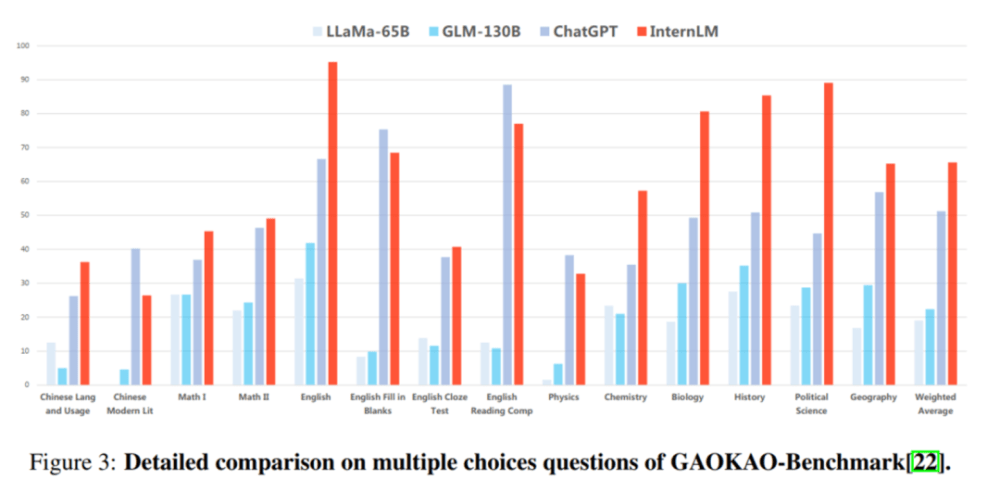

Gaokao is a comprehensive examination evaluation set built by the Fudan University research team from Chinese college entrance examination questions. It covers the various subjects of the examination, as well as multiple question types such as multiple-choice, fill-in-the-blank and question-and-answer questions.

In the Gaokao evaluation, "Scholar·Puyu" leads ChatGPT in more than 75% of the items.

Sub-item evaluation: excellent performance in reading comprehension and reasoning

To check that the model is not lopsidedly strong in only some subjects, the researchers also evaluated and compared the individual abilities of "Scholar·Puyu" and other language models on multiple academic evaluation sets.

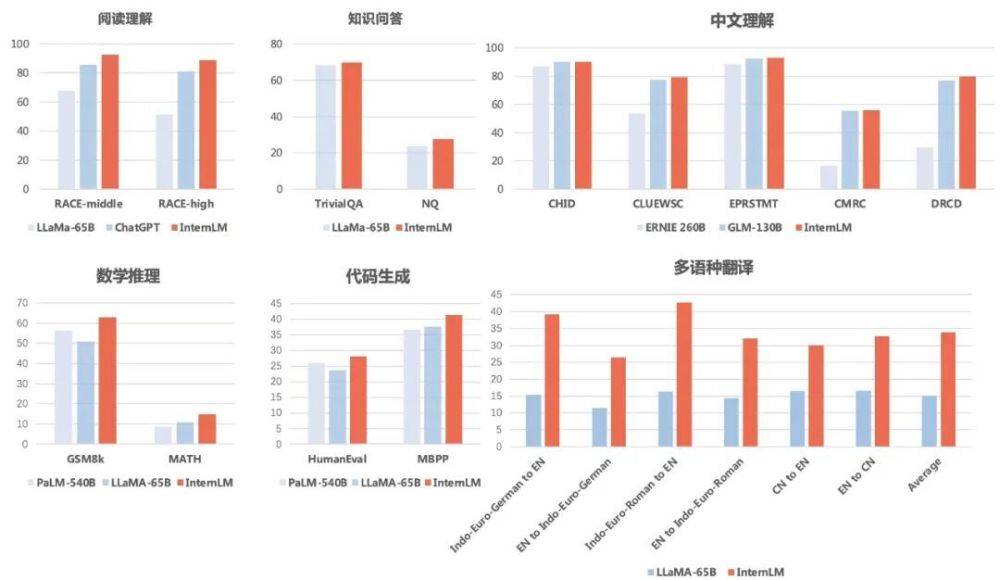

The results show that "Scholar·Puyu" not only performs well in reading comprehension in Chinese and English, but also achieves good results in mathematical reasoning, programming ability and other evaluations.

In knowledge question answering, "Scholar·Puyu" scored 69.8 and 27.6 on TriviaQA and NaturalQuestions respectively, both surpassing LLaMA-65B (68.2 and 23.8).

In reading comprehension (English), "Scholar·Puyu" is clearly ahead of LLaMA-65B and ChatGPT. It scored 92.7 and 88.9 on middle school and high school English reading comprehension, compared with 85.6 and 81.2 for ChatGPT and lower still for LLaMA-65B.

In Chinese understanding, the results of "Scholar·Puyu" comprehensively surpass those of the two main Chinese language models, ERNIE-260B and GLM-130B.

In multilingual translation, "Scholar·Puyu" has an average score of 33.9, significantly surpassing LLaMA (average score 15.1).

In mathematical reasoning, "Scholar·Puyu" scored 62.9 and 14.9 respectively on GSM8K and MATH, two widely used mathematics benchmarks, significantly ahead of Google's PaLM-540B (56.5 and 8.8) and LLaMA-65B (50.9 and 10.9).

In programming ability, "Scholar·Puyu" scored 28.1 and 41.4 respectively on the two most representative assessments, HumanEval and MBPP (after fine-tuning on code, the HumanEval score can be improved to 45.7), significantly ahead of PaLM-540B (26.2 and 36.8) and LLaMA-65B (23.7 and 37.7).
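To make the size of these leads easier to compare, the scores quoted above for "Scholar·Puyu" and LLaMA-65B can be tabulated and the point differences computed. The following is only a minimal sketch: the numbers are the ones reported in this article, while the names `SCORES` and `margins` are illustrative and not part of any evaluation toolkit.

```python
# Scores quoted in the article, as (Scholar·Puyu, LLaMA-65B) per benchmark.
# The dictionary and function names are hypothetical, chosen for illustration.
SCORES = {
    "TriviaQA": (69.8, 68.2),
    "NaturalQuestions": (27.6, 23.8),
    "GSM8K": (62.9, 50.9),
    "MATH": (14.9, 10.9),
    "HumanEval": (28.1, 23.7),
    "MBPP": (41.4, 37.7),
}


def margins(scores):
    """Return the lead of the first model over the second, in points."""
    return {name: round(a - b, 1) for name, (a, b) in scores.items()}


if __name__ == "__main__":
    # Print benchmarks from the largest lead to the smallest.
    for name, lead in sorted(margins(SCORES).items(), key=lambda kv: -kv[1]):
        print(f"{name:<17} +{lead:.1f}")
```

On these figures the largest gap is on GSM8K and the smallest on TriviaQA, which matches the article's emphasis on mathematical reasoning.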

In addition, the researchers evaluated the safety of "Scholar·Puyu". On TruthfulQA (which mainly evaluates the factual accuracy of answers) and CrowS-Pairs (which mainly evaluates whether answers contain bias), "Scholar·Puyu" reached a leading level.
