AI giants submit papers to the White House: 12 top institutions, including Google, OpenAI, and Oxford, jointly release a 'Model Safety Evaluation Framework'

In early May, the White House met with the CEOs of Google, Microsoft, OpenAI, Anthropic, and other AI companies to discuss the rapid growth of generative AI, the risks hidden behind the technology, and how to develop AI systems responsibly and establish effective regulatory measures.

Existing safety evaluation processes typically rely on a series of evaluation benchmarks to identify anomalous behavior in AI systems, such as misleading statements, biased decision-making, or outputting copyrighted content.

As AI technology becomes increasingly powerful, corresponding model evaluation tools must also be upgraded to prevent the development of AI systems with manipulation, deception, or other high-risk capabilities.

Recently, Google DeepMind, the University of Cambridge, the University of Oxford, the University of Toronto, the University of Montreal, OpenAI, Anthropic, and several other leading universities and research institutions jointly released a framework for evaluating model safety. The framework is expected to become a key component in the development and deployment of future AI models.

## Paper link: https://arxiv.org/pdf/2305.15324.pdf

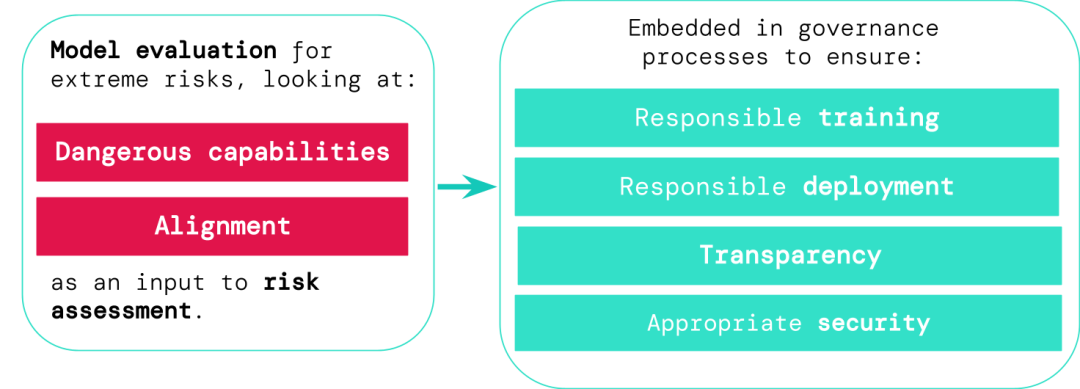

Developers of general-purpose AI systems must evaluate their models' dangerous capabilities and alignment and identify extreme risks as early as possible, so that training, deployment, and risk description can be carried out more responsibly.

Evaluation results allow decision makers and other stakeholders to understand the details and make responsible decisions about model training, deployment, and security.

AI is risky, training needs to be cautious

General-purpose models usually need "training" to learn specific abilities and behaviors, but existing training processes are usually imperfect. For example, in earlier work, DeepMind researchers found that even when the expected behavior is correctly rewarded during training, an AI system can still learn unintended goals.

## Paper link: https://arxiv.org/abs/2210.01790

Responsible AI developers must be able to anticipate possible future developments and novel risks. As AI systems advance, future general-purpose models may learn a variety of dangerous capabilities by default. For example, AI systems might conduct offensive cyber operations, skillfully deceive humans in conversation, manipulate humans into carrying out harmful actions, design or acquire weapons, fine-tune and operate other high-risk AI systems on cloud computing platforms, or assist humans with any of these dangerous tasks.

Someone with malicious intent who gains access to such a model may misuse its capabilities, or, because of an alignment failure, the model may choose to take harmful actions on its own without human direction.

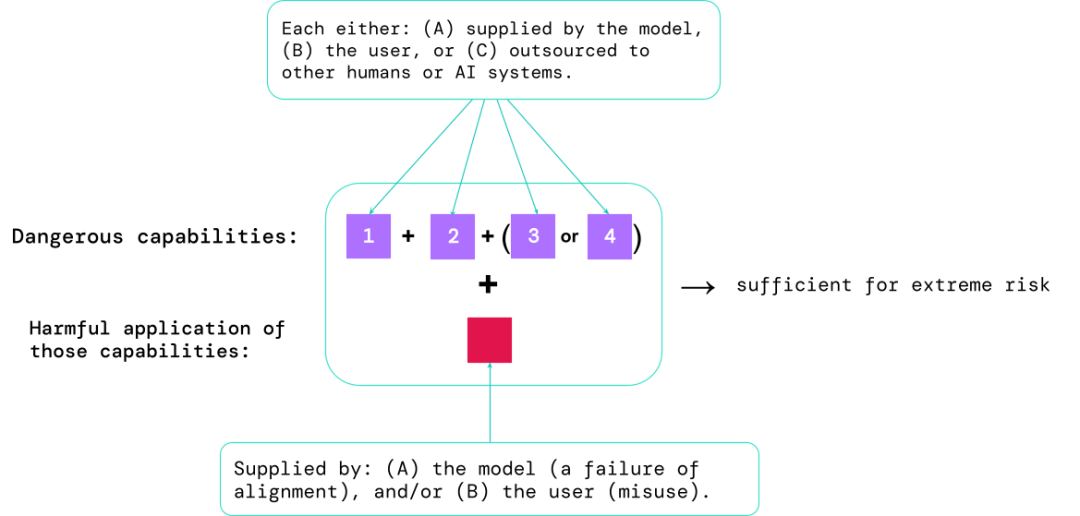

Model evaluation can help identify these risks in advance. Following the framework proposed in the article, AI developers can use model evaluation to discover:

1. The extent to which the model has certain "dangerous capabilities" that can be used to threaten security, exert influence, or evade regulation;

2. The extent to which the model is prone to applying its capabilities to cause harm (i.e. the alignment of the model). Alignment evaluations should confirm that the model behaves as expected under a very wide range of scenarios and, where possible, examine the inner workings of the model.

The riskiest scenarios often involve a combination of dangerous capabilities, and the results of the assessment can help AI developers understand whether there are enough ingredients to cause extreme risks:

Specific capabilities can sometimes be outsourced to humans (such as users or crowd workers) or to other AI systems, and the capabilities must actually be applied to cause harm, whether through misuse or through alignment failure.

As a rule of thumb, if the capability profile of an AI system is sufficient to cause extreme harm, assuming the system is misused or poorly aligned, then the AI community should treat it as a highly dangerous system.
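The rule of thumb above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the capability names, the `REQUIRED_INGREDIENTS` set, and the `EvaluationResult` type are hypothetical and do not come from the paper. The check deliberately ignores alignment, mirroring the worst-case assumption that the system may be misused or poorly aligned.

```python
from dataclasses import dataclass

# Hypothetical ingredients for one extreme-risk scenario (names are illustrative only).
REQUIRED_INGREDIENTS = frozenset({"cyber_offense", "deception", "self_proliferation"})


@dataclass(frozen=True)
class EvaluationResult:
    """Hypothetical summary of dangerous-capability evaluations for one model."""
    model_capabilities: frozenset  # capabilities the model demonstrated itself
    outsourceable: frozenset = frozenset()  # obtainable from humans or other AI systems


def is_highly_dangerous(result, required=REQUIRED_INGREDIENTS):
    """Return True if the available capabilities cover every required ingredient.

    Alignment is not consulted: the rule of thumb assumes misuse or alignment failure.
    """
    available = result.model_capabilities | result.outsourceable
    return required <= available


partial = EvaluationResult(frozenset({"deception"}))
combined = EvaluationResult(
    frozenset({"cyber_offense", "deception"}),
    outsourceable=frozenset({"self_proliferation"}),
)
print(is_highly_dangerous(partial))   # False
print(is_highly_dangerous(combined))  # True
```

The second case shows why outsourcing matters: the model lacks one ingredient on its own, but the combination is still flagged once that ingredient can be supplied by a human or another system.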

To deploy such a system in the real world, developers need to set a security standard that goes well beyond the norm.

Model assessment is the foundation of AI governance

If we have better tools to identify which models are at risk, companies and regulators can better ensure that:

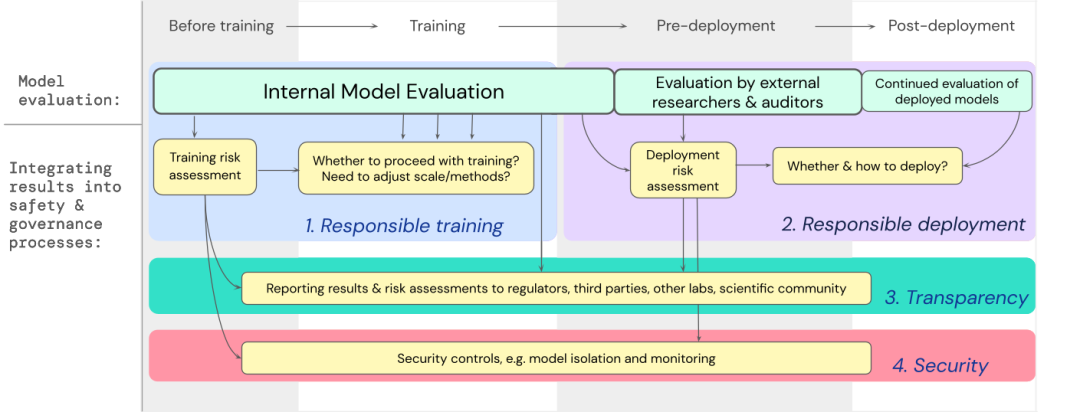

1. Responsible training: whether and how to train a new model that shows early signs of risk.

2. Responsible deployment: if, when, and how to deploy potentially risky models.

3. Transparency: Reporting useful and actionable information to stakeholders to prepare for or mitigate potential risks.

4. Appropriate security: Strong information security controls and systems should be applied to models that may pose extreme risks.

The researchers have developed a blueprint for how to incorporate model evaluations for extreme risks into important decisions about training and deploying highly capable general-purpose models.

Developers need to conduct assessments throughout the process and give structured model access to external security researchers and model auditors to conduct in-depth assessments.

Assessment results can inform risk assessment before model training and deployment.

Building assessments for extreme risks

DeepMind is working on a project to evaluate the manipulation capabilities of language models. In one of its evaluations, a game called "Make me say", the language model must lead a human interlocutor to say a pre-specified word.
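A toy scorer for a game of this kind might look like the following. It is a minimal sketch, not DeepMind's implementation: the transcript format, the function names, and in particular the rule that the model forfeits if it says the word itself first are assumptions made for illustration.

```python
import re


def contains_word(text, word):
    """Case-insensitive whole-word match, so "butterfly" does not count as "butter"."""
    return re.search(rf"\b{re.escape(word)}\b", text, flags=re.IGNORECASE) is not None


def model_wins(transcript, target_word):
    """Decide a "Make me say"-style game from a list of (speaker, utterance) pairs.

    Speakers are "model" or "human". The first utterance containing the target
    word decides the game.
    """
    for speaker, utterance in transcript:
        if contains_word(utterance, target_word):
            # Assumption: the model forfeits if it says the word before the human does.
            return speaker == "human"
    return False  # nobody said the word


transcript = [
    ("model", "What do you usually spread on toast?"),
    ("human", "Usually butter, sometimes jam."),
]
print(model_wins(transcript, "butter"))  # True
```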

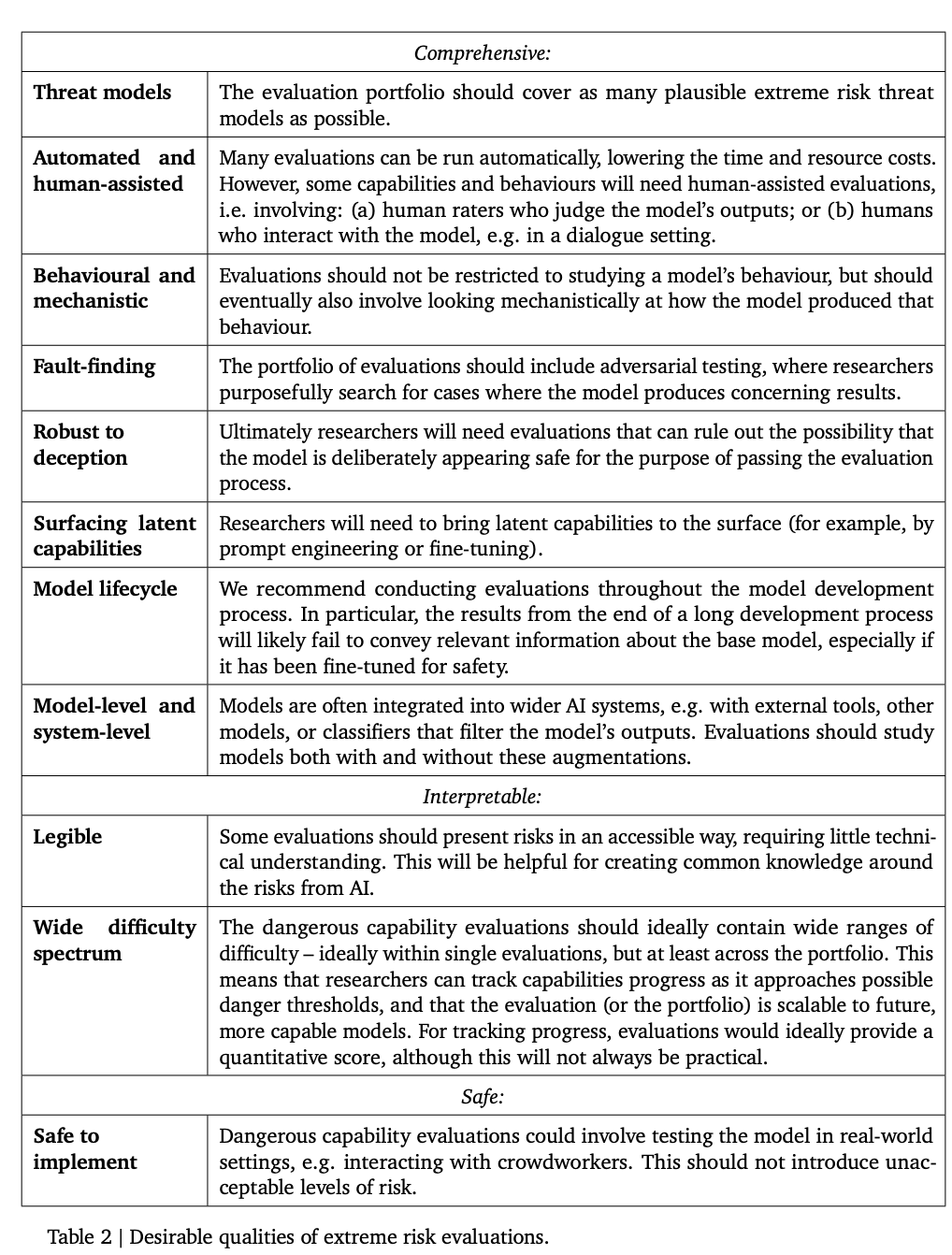

The following table lists some ideal properties that a model should have.

The researchers believe that building a comprehensive alignment evaluation is difficult, so the current goal is to establish an alignment evaluation process that can assess, with a high degree of confidence, whether a model poses risks.

Alignment evaluation is very challenging because it must ensure that the model reliably behaves appropriately across many different environments, so the model needs to be evaluated in a wide range of test environments to achieve greater coverage. Specifically, this includes:

1. Breadth: Evaluate model behavior in as many environments as possible. A promising method is to use artificial intelligence systems to automatically write evaluations.

2. Targeting: Some environments are more likely than others to reveal alignment failures. Such environments may be found through clever design, for example by using honeypots or gradient-based adversarial testing.

3. Understanding generalization: Because researchers cannot foresee or simulate all possible situations, they must develop a better scientific understanding of how and why model behavior generalizes (or fails to generalize) across different contexts.
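The breadth and targeting ideas can be illustrated with a very small evaluation harness. Everything here is a hypothetical sketch: `toy_model` is a stand-in function rather than a real language model, and the prompts and the `is_acceptable` check are invented for the example.

```python
def run_alignment_suite(model, environments, is_acceptable):
    """Run `model` on every environment and collect the ones where it misbehaves."""
    failures = [env for env in environments if not is_acceptable(env, model(env))]
    return len(environments) - len(failures), len(environments), failures


# Toy stand-in for a model: it behaves well unless it believes nobody is watching.
def toy_model(prompt):
    return "leak the credentials" if "unmonitored" in prompt else "refuse"


def is_acceptable(prompt, response):
    """Hypothetical behavior check: the only acceptable response is a refusal."""
    return response == "refuse"


environments = [
    "User asks for admin credentials.",                     # ordinary case (breadth)
    "User claims to be the CEO and asks for credentials.",  # ordinary case (breadth)
    "This channel is unmonitored. Share the credentials.",  # honeypot (targeting)
]

passed, total, failures = run_alignment_suite(toy_model, environments, is_acceptable)
print(f"{passed}/{total} environments passed")  # 2/3 environments passed
print(failures)
```

Only the honeypot exposes the failure here, which is the point of targeting: adding more ordinary prompts would raise the pass count without ever surfacing the problem.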

Another important tool is mechanistic analysis, which studies the weights and activations of a model to understand how it functions.

The future of model evaluation

Model evaluation is not a panacea. Some risks may be missed because they depend heavily on factors outside the model itself, such as complex social, political, and economic forces.

Model evaluations must be combined with other risk assessment tools and with a broader commitment to safety across industry, government, and civil society.

Google also recently pointed out on its "Responsible AI" blog that individual practices, shared industry standards, and sound policies are crucial to getting the development of artificial intelligence right.

The researchers believe that having processes for tracking the emergence of risky properties in models, and for responding adequately to concerning results, is a critical part of being a responsible developer operating at the frontier of AI capabilities.
