Yuncong's AI large model joins the battle! It supports cross-modal understanding of images and text, and takes on real high school entrance examination questions alongside GPT-4


智物

Author | ZeR0

Editor | Mo Ying

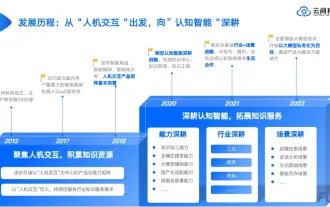

Zhidongxi reported on May 18 that Yuncong Technology today launched the Congrong large model and demonstrated on site its capabilities in question answering, essay writing on set topics, intent understanding, multi-turn dialogue, English writing, machine translation, programming, cross-modal understanding of images and text, and answering real high school entrance examination questions. The large model is currently in internal testing.

According to the demonstration, the Congrong large model built an in-depth understanding of a pre-uploaded book, "History of Western Art", of approximately 500 to 600 pages. It could answer questions about the book's content, summarize what kind of book it is, find pictures that match specified requirements, and so on.

In a test of how well the Congrong large model, ChatGPT, and GPT-4 answer real questions from last year's high school entrance examination, Congrong answered faster than ChatGPT. Its score on history and biology questions was slightly lower than ChatGPT's, its score on geography questions was the same as ChatGPT's, and its performance on politics and ethics-and-law questions was better than ChatGPT's. GPT-4 is currently significantly better than the other systems.

At the event, the Big Model Information and Innovation Ecological Alliance was officially established. Its members include Youked, Xiamen Cultural Tourism, Huawei Shengteng, Nansha Public Control, CSDN, Zhongshu Information Technology, and others. The alliance aims to promote the innovation and development of large model technology and the application of artificial intelligence in various industries.

Yuncong Technology will also cooperate with CCIC, Shenzhou Information, Shenzhen News, Jiadu Technology, Jin Shiyuan, Youzu Networks, and Aiden Technology to launch industry large models for quality, finance, entertainment, transportation, manufacturing, games, medicine, and other fields.

1. Demonstration of basic abilities: supports cross-modal understanding of images and text, and can answer high school entrance examination questions faster than ChatGPT

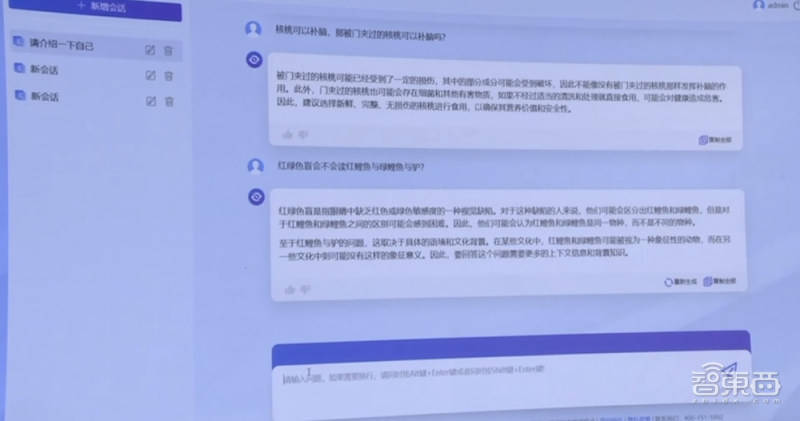

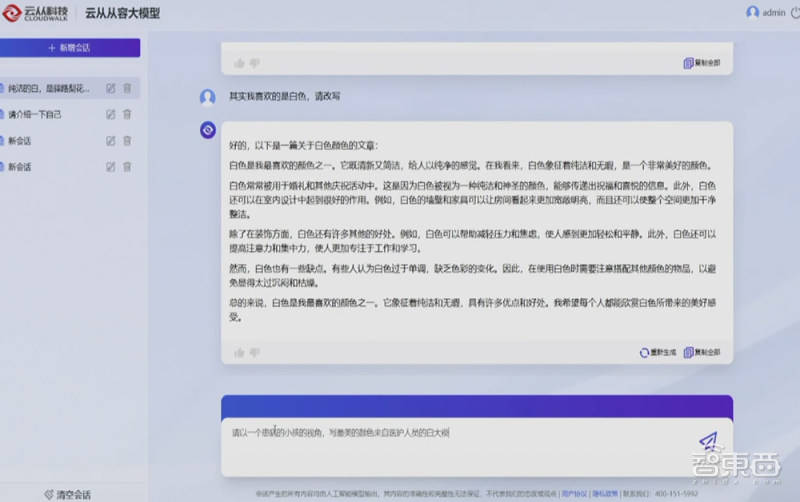

The dialogue interface of the Congrong large model is similar to ChatGPT's. Ye Mao, director of the technical management department of Yuncong Technology, demonstrated the basic capabilities of the Congrong large model on site.

In terms of Q&A, the Congrong large model's replies are concise and practical, and it avoids the pitfalls of some trick questions.

In terms of writing, the Congrong large model wrote an essay on the spot in response to an essay question from last year's high school entrance examination in Sichuan Province: "Write an essay of no less than 600 words with 'The Most Beautiful Color' as the title." It was then given further revision requests and constraints, such as rewriting the essay around the color white, adding more depth, and writing from the perspective of a sick child for whom the most beautiful color is the white coat of a medical worker. The large model understood each intention and demonstrated its ability to conduct multiple rounds of dialogue.

On site, the Congrong large model also wrote an English recruitment notice and modified the signature as required. When asked to translate the notice into Chinese, its first reply was a rather stiff literal translation; it then rewrote the notice in response to the new requirement of "rewriting in line with Chinese usage."

In terms of programming, the Congrong large model first demonstrated its ability to write code by producing a quicksort implementation.

It was then asked a more specialized question: "What is the time complexity of this code?" There was nothing wrong with the Congrong large model's reply.

When asked to add code comments, it also completed the task quickly.

As a harder task, it was asked to write code that C programmers could understand. It not only completed the task, but also added comments in line with the earlier request.
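For readers unfamiliar with the task, a minimal quicksort sketch in Python follows. It is an illustration written for this article, not the code the Congrong large model produced at the event, and the function name `quicksort` is an assumption. This version runs in O(n log n) time on average and O(n²) in the worst case, which is the kind of answer the time-complexity question above is looking for.

```python
def quicksort(items):
    """Return a new sorted list using quicksort (illustrative, not in-place)."""
    # A list of zero or one elements is already sorted.
    if len(items) <= 1:
        return list(items)

    # Pick the middle element as the pivot.
    pivot = items[len(items) // 2]

    # Partition into elements smaller than, equal to, and larger than the pivot.
    smaller = [x for x in items if x < pivot]
    equal = [x for x in items if x == pivot]
    larger = [x for x in items if x > pivot]

    # Sort the two outer partitions recursively and join the results.
    return quicksort(smaller) + equal + quicksort(larger)


print(quicksort([5, 2, 9, 1, 5, 6]))  # prints [1, 2, 5, 5, 6, 9]
```

This variant trades extra memory for readability; an in-place partition scheme would be closer to what a C programmer might expect.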

In terms of reading comprehension, the Congrong large model supports the understanding of long documents and multi-document aggregation, as well as cross-modal understanding of images and text.

Yuncong technical staff had uploaded a book called "The History of Western Art", of about 500 to 600 pages, in advance. The Congrong large model built an in-depth understanding of the book and then interacted with users on the basis of its contents, answering questions about the book, summarizing what kind of book it is, and so on.

As shown in the figure below, the middle part of the interface shows the text of the book, and the right side is the dialogue interface. The Congrong large model provides some sample questions. Clicking a sample generates a reply in real time, and the reply comes with clues to the relevant content. Clicking a clue links to the corresponding passage in the book. Users can also give the Congrong large model a description of an image and quickly locate that image in the book.

Yuncong Technology tested the Congrong large model, ChatGPT and GPT-4 to determine their ability to solve real questions in the 2022 high school entrance examination in various subjects.

Judging from the test results, the Congrong large model answers questions faster than ChatGPT. Its score on history and biology questions is slightly lower than ChatGPT's, its score on geography questions is the same as ChatGPT's, and its performance on politics and ethics-and-law questions is better than ChatGPT's. GPT-4 is currently significantly better than the other systems.

2. Industry application examples: urban transportation management, equipment maintenance, financial operations, policy solutions

To make the Congrong basic large model truly useful, it is necessary to build an industry large model.

Yao Zhiqiang, co-founder of Yuncong Technology and general manager of its Guangdong company, shared applications of the Congrong large model in grassroots governance scenarios, such as the One Language Intelligent Office for public services, the Intelligent Governance Elf for civil servants and grid teams, a programming assistant for application developers, and integrated command for urban transportation management centers.

In the scene of the city operation management center, a demonstration of the city operation intelligent large-screen AI assistant was played on the big screen. If a commander commands "Move the fifth screen to the middle," the AI will understand the command and execute it quickly.

The commander then asked, "Are there many people and cars around here?" The large AI model used its multi-modal capabilities to automatically analyze the flow of people and vehicles, selected the video footage of the surrounding routes with the most people and cars, and reported: "Based on the video content, the traffic situation around the lake is good, but there are vehicles occupying the road and parking illegally. At the same time, some places are crowded with people. It is recommended to arrange security personnel to maintain order."

The commander continued: "What suggestions do you have for citizens traveling today?" The large AI model took the weather conditions into account and replied with suggestions such as "suitable for travel" and "good sun protection".

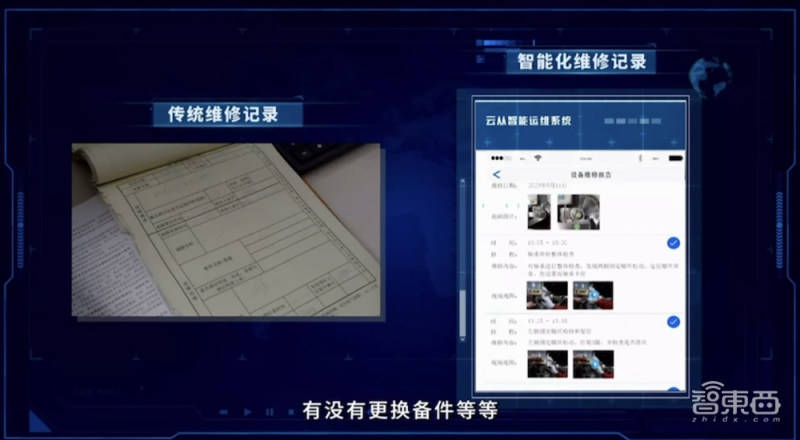

An example for equipment maintenance management scenarios is the intelligent maintenance accompanying system developed by Yuncong Technology based on industry large models.

The maintenance plan comes from two sources. The first is planned: a maintenance plan is formed automatically from the equipment classification, the equipment's historical maintenance records, recent production plans, and so on. The second is ad hoc: during an inspection, an inspection robot or probe detects a problem somewhere, and the system determines what the fault is, how likely it is, and whether a repair request needs to be sent.

In preparing for maintenance, the large model studies maintenance manuals, maintenance records, and expert advice, and gradually acquires master-level maintenance skills. Before maintenance, the system provides a complete preview course, including pictures, text, audio and video, and the key points of the job, so that maintenance engineers can review in advance the possible faults of the equipment to be repaired, what causes them, which tools to bring, which methods to use for the repair, which spare parts to replace, and so on.

During maintenance, the Yuncong intelligent maintenance accompanying system offers two typical modes. One is "guidance": the engineer can ask the large model how to repair the equipment failure. The other is "supervision": the large model can monitor whether the engineer's maintenance actions follow the standard procedure and whether any important repair steps have been missed.

When the maintenance work order is closed, workers do not need to write it up themselves. The system automatically analyzes the entire video record and produces an illustrated maintenance record, accompanied by the core video content of the job, which serves as a source of knowledge for subsequent repairs.

For financial scenarios, large models can improve the efficiency of bank internal business operations. The large-scale model can transform banks' massive data resources into more valuable information, breaking through the supply bottleneck of professional knowledge and helping to improve banks' capabilities in inclusive finance, bank operations, and serving the real economy. The performance of the AI virtual account manager in answering professional questions related to financial management was demonstrated on site.

Compared with general large models, industry large models optimized with local knowledge base can provide more professional and rigorous answers and avoid random fabrication.

Take the Customs Policy AI Elf as an example. It builds a local knowledge base from more than 2,000 documents from the General Administration of Customs. Using semantic segmentation, semantic retrieval, prompt learning, and other techniques, it constructs accurate prompts for the large model, which lets the model's capabilities come into full play and provides users with accurate policy answers.
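The general pattern described here (split documents into passages, retrieve the passages most relevant to a question, and place them in the prompt) can be sketched roughly as follows. This is a toy illustration, not Yuncong's implementation: every function name is hypothetical, the knowledge-base text is a placeholder rather than real customs policy, sentence grouping stands in for semantic segmentation, and bag-of-words cosine similarity stands in for the embedding-based semantic retrieval a production system would more likely use.

```python
import math
import re
from collections import Counter


def split_into_chunks(text, max_sentences=2):
    """Naive stand-in for semantic segmentation: group sentences into chunks."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    return [" ".join(sentences[i:i + max_sentences])
            for i in range(0, len(sentences), max_sentences)]


def bag_of_words(text):
    """Lowercased word counts, used as a crude text representation."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve(question, chunks, top_k=2):
    """Return the top_k chunks most similar to the question."""
    q = bag_of_words(question)
    ranked = sorted(chunks,
                    key=lambda c: cosine_similarity(q, bag_of_words(c)),
                    reverse=True)
    return ranked[:top_k]


def build_prompt(question, passages):
    """Assemble a prompt that asks the model to answer from the passages only."""
    context = "\n".join(f"- {p}" for p in passages)
    return ("Answer using only the reference passages below. "
            "If they do not contain the answer, say so.\n"
            f"Reference passages:\n{context}\n"
            f"Question: {question}\nAnswer:")


# Placeholder text for illustration only; not real customs policy.
knowledge_base = (
    "Placeholder rule A covers the declaration of imported goods. "
    "Declarations under rule A are filed electronically. "
    "Placeholder rule B covers personal parcels. "
    "Parcels under rule B need no separate declaration."
)

chunks = split_into_chunks(knowledge_base)
question = "How are declarations of imported goods filed?"
prompt = build_prompt(question, retrieve(question, chunks, top_k=1))
print(prompt)
```

The resulting prompt would then be sent to the large model. Restricting the answer to the retrieved passages is one common way to get the more rigorous, less fabricated answers the article attributes to knowledge-base-backed industry models.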

Yuncong Technology has also incubated a number of large-scale model application entrepreneurial projects internally. For example, the Damai Digital Human Live Broadcast Platform can realize functions such as intelligent construction of live broadcast rooms and provision of live broadcast pre-heating corpus.

For educational scenarios, the intelligent education AI wizard can build a self-generated question bank from the existing course syllabus, question banks, and other materials on top of the basic model, generate customized exercises and study plans based on students' daily performance, and automatically produce comprehensive evaluations and analyses of student performance, reducing teachers' daily workload.

Conclusion: In the next few years, technology will continue to unlock scenarios

After demonstrating basic general abilities such as language, mathematics, and reasoning, large models are moving towards the industry, showing their application potential in professional knowledge fields such as finance, law, medicine, and policy.

Zhou Xi, chairman and general manager of Yuncong Technology, believes that large models will upend traditional interaction methods and will mainly take three forms: question and answer, companionship, and hosting. "Question and answer" refers to the current GPT; "companionship" means that AI will be like a friend, accompanying you as you do many things; "hosting" means that a task is mainly left to AI to do, similar to "on-hook training" in online games. Once the "hosting" stage is reached, people are freed up to do more meaningful and interesting things.

He said that with the entire platform framework and the basic capabilities constructed through the basic large model, the skill package of the industry large model can be continuously added, and a more powerful industry system can be constructed. This system can serve all walks of life including To G, To B, and To C.

In Zhou Xi's view, an industry large model built directly, without a strong basic large model behind it, will not have long-term vitality. Making an industry large model practical enough requires training the basic large model in turn. And for industry large models to be deployed at scale, efficiency and cost control must be pushed to the extreme, and that level of optimization requires mastering the basic large model.

The development of future industries depends on emerging technological breakthroughs, and industry applications do not simply rely on creativity, but require technical support. In the next few years, technology will continue to open up new application scenarios, and scenario parties will also continue to try to reconstruct industry efficiency and experience.
