Hallucination? Musk's TruthGPT can't handle it either! OpenAI co-founder says it's complicated

Last month, Musk loudly called for a six-month pause on the development of super-powerful AI.

Before long, Musk could no longer sit still and officially announced an AI platform called TruthGPT.

Musk has said that TruthGPT will be a "maximum truth-seeking AI" that tries to understand the nature of the universe.

He argued that an AI that cares about understanding the universe is unlikely to wipe out humanity, because we are an interesting part of the universe.

However, so far no language model has solved the problem of hallucination.

Recently, an OpenAI co-founder explained why TruthGPT's lofty ideal is so hard to realize.

Is the TruthGPT ideal a pipe dream?

The TruthGPT that Musk’s X.AI wants to build is an honest language model.

In doing so, it is aimed squarely at ChatGPT.

AI systems such as ChatGPT have repeatedly produced classic cases of hallucination, such as factually wrong output, and have even been reported to favor certain political positions.

Although ChatGPT gives users more control over language models when solving problems, hallucination remains a core problem that OpenAI, Google, and Musk's AI company will all have to deal with.

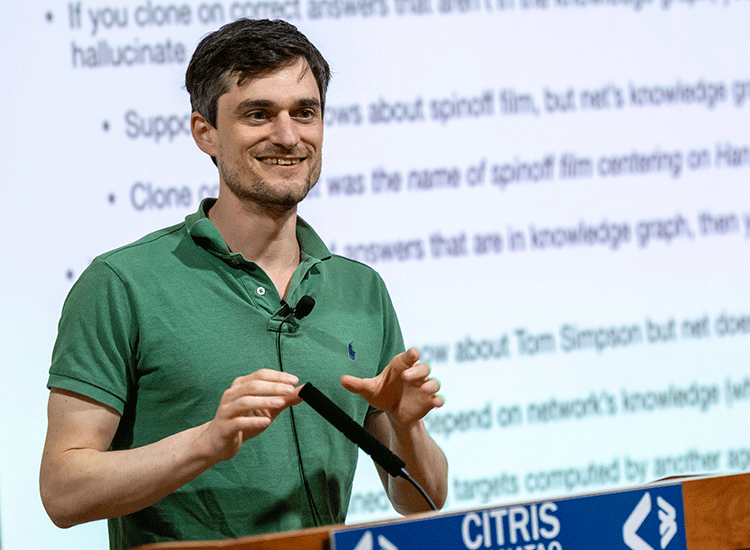

OpenAI co-founder and researcher John Schulman discusses these challenges and how to deal with them in his talk "RL and Truthfulness – Towards TruthGPT".

Why do hallucinations happen?

According to Schulman, hallucinations can be roughly divided into two types:

1. "Pattern completion" behavior: the language model fails to express its own uncertainty, fails to question a false premise in the prompt, or keeps building on a mistake it made earlier.

2. The model guesses incorrectly.

Since a language model encodes something like a knowledge graph of facts from its training data within its network, fine-tuning can be understood as learning a function that operates on that knowledge graph and outputs token predictions.

For example, a fine-tuning dataset might contain the question "What is the genre of Star Wars?" and the answer "Science Fiction."

If this information is already in the original training data, i.e. it is part of the knowledge graph, the model does not learn new information; instead it learns a behavior: outputting the correct answer. This kind of fine-tuning is also called "behavior cloning".

The problem arises when the fine-tuning dataset contains a question such as "What is the name of the Han Solo spin-off movie?" and the answer "Solo" is not part of the original training data (and therefore not part of the knowledge graph). In that case, the network learns to answer even though it does not know the answer.

Fine-tuning on answers that are actually correct but absent from the knowledge graph therefore teaches the network to make up answers, that is, to hallucinate. Conversely, training on incorrect answers teaches the network to withhold information.
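
To make this mechanism concrete, here is a minimal Python sketch. It rests on a loud assumption: that the facts the pretrained model already "knows" could be looked up as a plain dictionary (`known_facts`, hypothetical). In a real network that knowledge is implicit in the weights, which is exactly why dataset authors cannot check it. The function `audit` (also hypothetical) labels each fine-tuning pair by the behavior it would likely teach, following the argument above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Example:
    """One question/answer pair in a fine-tuning dataset."""
    question: str
    answer: str


def audit(dataset: list[Example], known_facts: dict[str, str]) -> list[tuple[str, str]]:
    """Label each example by the behavior fine-tuning on it would likely teach.

    known_facts is a HYPOTHETICAL stand-in for the model's knowledge graph:
    question -> answer the pretrained model already holds.
    """
    labels = []
    for ex in dataset:
        if ex.question not in known_facts:
            # The model has no basis for the answer, so it learns to produce
            # an answer anyway: this is what teaches hallucination.
            labels.append((ex.question, "teaches guessing"))
        elif known_facts[ex.question] == ex.answer:
            # Consistent with what the model knows: pure behavior cloning.
            labels.append((ex.question, "behavior cloning"))
        else:
            # The label contradicts what the model knows, so it learns to
            # suppress (withhold) its own knowledge.
            labels.append((ex.question, "teaches withholding"))
    return labels


known = {"What is the genre of Star Wars?": "Science fiction"}
data = [
    Example("What is the genre of Star Wars?", "Science fiction"),
    Example("What is the name of the Han Solo spin-off movie?", "Solo"),
    Example("What is the genre of Star Wars?", "Western"),
]
for question, label in audit(data, known):
    print(f"{label:20} {question}")
```

In practice the `known_facts` lookup is the piece nobody has, which is why the problem is hard to fix by curating data alone.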

Ideally, then, behavior cloning should always be grounded in what the network already knows. But the human workers who create or evaluate the dataset, for example for instruction tuning, usually cannot tell what that knowledge is.

According to Schulman, the same problem arises when fine-tuning datasets are generated by other models, as in the Alpaca recipe.

He predicted that smaller networks with smaller knowledge graphs, trained on ChatGPT's output, would not only learn to answer questions and follow instructions but would also learn to hallucinate more often.

How does OpenAI combat hallucinations?

First, for short questions, language models can in most cases predict whether they know the answer, and they can also express uncertainty.

Therefore, Schulman said, the fine-tuning dataset must teach the model how to express uncertainty, how to handle prompts with false premises, and how to acknowledge its own errors.

Examples of these situations should be included in the data so the model can learn from them.

But models are still poorly trained on timing: they do not know when to use these behaviors.

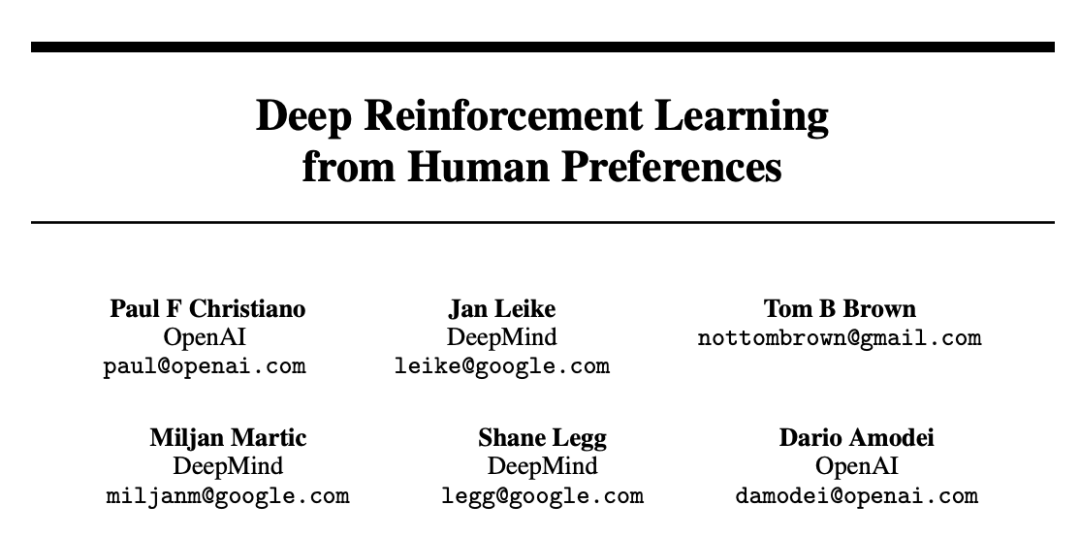

Schulman said that this is where reinforcement learning (RL) comes into play, for example reinforcement learning from human feedback (RLHF).

With RL, the model can learn "behavior boundaries", that is, which behavior to use in which situation.
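
One way to picture a behavior boundary is a toy reward scheme. The numbers here are an illustrative assumption, not OpenAI's actual reward: a correct answer earns +1, a wrong answer costs 4, and saying "I don't know" earns 0. Under that scheme, answering only pays off when the model's probability of being right, p, satisfies p * 1 - (1 - p) * 4 > 0, i.e. p > 4/5. The sketch below computes that boundary.

```python
def expected_reward(p_correct: float, reward_right: float = 1.0, penalty_wrong: float = 4.0) -> float:
    """Expected reward of answering; abstaining ("I don't know") is assumed to earn 0."""
    return p_correct * reward_right - (1.0 - p_correct) * penalty_wrong


def answer_threshold(reward_right: float = 1.0, penalty_wrong: float = 4.0) -> float:
    """Confidence above which answering beats abstaining.

    p*R - (1 - p)*W > 0  <=>  p > W / (R + W)
    """
    return penalty_wrong / (reward_right + penalty_wrong)


def best_action(p_correct: float, reward_right: float = 1.0, penalty_wrong: float = 4.0) -> str:
    """The behavior a reward-maximizing policy would pick at this confidence level."""
    if expected_reward(p_correct, reward_right, penalty_wrong) > 0:
        return "answer"
    return "abstain"


print(answer_threshold())  # 0.8
for p in (0.95, 0.7):
    print(p, best_action(p))
```

Raising `penalty_wrong` pushes the boundary toward more abstention. RL optimizes against whatever trade-off the reward encodes, which is why the shape of the reward matters so much.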

Another difficulty is getting the model to retrieve and cite sources.

So, given behavior cloning and RLHF, why does ChatGPT still hallucinate?

The reason lies in the difficulty of the problem itself.

While the methods above work well for short questions and answers, other problems arise in the long-form settings common in ChatGPT.

For one thing, an answer is rarely completely wrong; in most cases, correct and incorrect content are mixed together.

In the extreme case, it may be a single error in 100 lines of code.

In other cases, the information is not wrong in the traditional sense but misleading. Therefore, in a system like ChatGPT, it is difficult to measure the quality of the output in terms of information content or correctness.

Yet such a measure is essential for RL algorithms meant to train complex behavior boundaries.

Currently, OpenAI relies on RLHF's ranking-based reward model, which can predict which of two answers is better but gives no useful signal about how much better, more informative, or more correct that answer is.
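
Why a ranking carries no "how much better" signal is visible in the pairwise (Bradley-Terry style) loss commonly used to train reward models from human comparisons: the reward model assigns scalar scores to the preferred and rejected answers, and the loss is -log(sigmoid(r_chosen - r_rejected)). The sketch below is a generic illustration of that loss in plain Python, not OpenAI's code. Each human label only records which answer won, and the loss depends only on the score difference.

```python
import math


def pairwise_loss(r_chosen: float, r_rejected: float) -> float:
    """-log(sigmoid(r_chosen - r_rejected)), computed in a numerically stable way."""
    diff = r_chosen - r_rejected
    if diff >= 0:
        return math.log1p(math.exp(-diff))
    # For negative diff, rewrite as -diff + log(1 + e^diff) to avoid overflow.
    return -diff + math.log1p(math.exp(diff))


def preference_prob(r_a: float, r_b: float) -> float:
    """Modeled probability that answer A is preferred over answer B (stable sigmoid)."""
    d = r_a - r_b
    if d >= 0:
        return 1.0 / (1.0 + math.exp(-d))
    e = math.exp(d)
    return e / (1.0 + e)


# A human label is binary ("A beat B"): it says nothing about the margin,
# and shifting both scores by the same constant leaves the loss unchanged.
print(pairwise_loss(2.0, 1.0) == pairwise_loss(102.0, 101.0))  # True
print(round(pairwise_loss(0.0, 0.0), 4))  # 0.6931, i.e. log 2: no preference learned yet
```

Whether answer A was slightly better or far better than B, the training example looks the same: (A, B, "A preferred"). That is the missing signal described above.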

Schulman said this approach cannot give the model the feedback it needs to learn fine-grained behavior boundaries, and such fine-grained boundaries may be the way to solve hallucination.

The process is further complicated by human error in RLHF labeling.

Therefore, although Schulman regards RL as one of the important ways to reduce hallucinations, he believes that there are still many unresolved problems.

Besides the open question of what the reward model must look like to guide correct behavior, RLHF currently relies solely on human judgment.

This may make it harder for models to generate new knowledge, because predictions about the future are sometimes less convincing to the humans judging them.

However, Schulman believes that generating knowledge is the next important step for language models. He also considers the theoretical formulation of problems such as predicting the future and deriving rules of inference to be the next class of open problems that urgently need to be solved.

One possible solution, Schulman said, is to use other AI models to train language models.

OpenAI also considers this approach highly relevant to AI alignment.

ChatGPT Architect

John Schulman, the architect of ChatGPT, co-founded OpenAI back in 2015, while he was still a PhD student.

In an interview, Schulman explained why he joined OpenAI:

I wanted to do AI research, and I thought OpenAI had an ambitious mission: it was committed to building artificial general intelligence.

Although talking about AGI seemed a little crazy at the time, I thought it was reasonable to start thinking about it, and I wanted to be somewhere that talking about AGI was acceptable.

In addition, according to Schulman, OpenAI's idea of bringing reinforcement learning from human feedback (RLHF) to ChatGPT can be traced back to 2017.

At that time, Schulman was already at OpenAI, and OpenAI published the paper "Deep Reinforcement Learning from Human Preferences", which described this method.

Paper: https://arxiv.org/pdf/1706.03741.pdf

The OpenAI safety team worked on this because they wanted to align models with human preferences: to make the models actually listen to humans and try to do what humans want.

After GPT-3 finished training, Schulman decided to join this line of work because he saw the potential of the whole research direction.

When asked about his first reaction to using ChatGPT, Schulman said he was largely unmoved.

Many will remember that when ChatGPT came out last year, it instantly blew people's minds.

Inside OpenAI, however, nobody was excited about ChatGPT. The released version was built on the weaker GPT-3.5, while colleagues were already playing with GPT-4, a more powerful and smarter model that had already been trained.

As for the next frontier of AI, Schulman said that as AI keeps improving on harder tasks, the question becomes what humans should do: which tasks humans can have more influence over, and how they can get more done with the help of large models.
