How to carry out big data management and data warehouse design in PHP?

As the Internet and web applications have grown, data management and data warehouse design have become important parts of web development. PHP is widely used for web development, so how do you approach big data management and data warehouse design in a PHP application? This article covers the main techniques in turn.

1. Big data management

- Database selection and optimization

PHP applications commonly use relational databases such as MySQL, PostgreSQL, and SQLite. For big data management, choose a database that can store large volumes of data and sustain fast reads and writes. Choosing a good database is only the first step; it also needs to be tuned for the workload.

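Database optimization can start from several angles, for example:

(1) Choose a suitable storage engine. In MySQL, for example, the usual choice is between InnoDB and MyISAM.

(2) Place frequently used columns toward the front of the table definition, although the effect of column order is usually small compared with selecting only the columns a query needs.

(3) Avoid unnecessary JOIN operations, since each additional join adds work to a query.

(4) Add indexes on the columns used to filter, join, and sort; a suitable index can greatly improve query speed.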

- Database and table sharding

Processing massive amounts of data efficiently is one of the persistent difficulties in big data management. Database and table sharding (splitting data into sub-databases and sub-tables) spreads the data across multiple databases and tables so that each query touches less data.

Sharding comes in two forms: vertical and horizontal. Vertical sharding divides a database into several sub-databases by table, for example according to how frequently each table is used, so the sub-databases hold different tables and have little direct relationship to each other. Horizontal sharding splits the rows of a single table across multiple databases according to a rule, such as a hash or a range of a key; the shards share the same schema and together make up the complete table.
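As a minimal sketch of horizontal sharding, the function below routes a user ID to one of several shards with a simple modulo rule. The function name `shardFor`, the DSN strings, and the `orders_NN` table naming scheme are assumptions made for illustration, not part of any library.

```php
<?php

/**
 * Pick the shard for a user ID with modulo routing (horizontal sharding).
 * The DSN list and the table naming scheme are hypothetical.
 */
function shardFor(int $userId, array $shardDsns): array
{
    $count = count($shardDsns);
    if ($count === 0 || $userId < 0) {
        throw new InvalidArgumentException('Need at least one shard and a non-negative ID.');
    }

    // The same user ID always maps to the same shard.
    $index = $userId % $count;

    return [
        'index' => $index,
        'dsn'   => $shardDsns[$index],
        'table' => sprintf('orders_%02d', $index),
    ];
}

// Hypothetical connection strings, one per shard.
$shards = [
    'mysql:host=db0.example.internal;dbname=shop',
    'mysql:host=db1.example.internal;dbname=shop',
    'mysql:host=db2.example.internal;dbname=shop',
    'mysql:host=db3.example.internal;dbname=shop',
];

$target = shardFor(1234567, $shards);
echo $target['index'], ' ', $target['table'], PHP_EOL; // prints "3 orders_03"
```

Modulo routing is easy to reason about, but changing the number of shards remaps most keys, so existing rows have to be moved when shards are added.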

- Data caching

Data caching is an important way to improve data processing efficiency. In PHP, caching systems such as Memcached and Redis can keep frequently accessed data in memory so that it is read directly from memory instead of hitting the database on every request. Browser caching can additionally store static resources locally, which cuts network transfer time and speeds up responses.
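The read path described above is often called the cache-aside pattern. The sketch below shows it with a small in-memory class standing in for Memcached or Redis so that it runs without any server; `ArrayCache`, `remember`, and the loader closure are hypothetical names, and a real application would replace them with calls to its cache client and database.

```php
<?php

/** Minimal in-memory stand-in for a cache such as Redis or Memcached (hypothetical). */
final class ArrayCache
{
    private $items = [];

    /** Return the cached value, or null on a miss or after expiry. */
    public function get(string $key)
    {
        $entry = $this->items[$key] ?? null;
        if ($entry === null || $entry['expires'] < time()) {
            unset($this->items[$key]);
            return null;
        }
        return $entry['value'];
    }

    public function set(string $key, $value, int $ttl): void
    {
        $this->items[$key] = ['value' => $value, 'expires' => time() + $ttl];
    }
}

/** Cache-aside read: try the cache first, fall back to the loader on a miss. */
function remember(ArrayCache $cache, string $key, int $ttl, callable $loader)
{
    $value = $cache->get($key);
    if ($value !== null) {
        return $value; // cache hit: no database access
    }
    $value = $loader(); // cache miss: load from the slow source
    $cache->set($key, $value, $ttl);
    return $value;
}

$cache   = new ArrayCache();
$dbCalls = 0;

// Hypothetical loader; a real one would run a database query.
$loadUser = function () use (&$dbCalls): array {
    $dbCalls++;
    return ['id' => 42, 'name' => 'Alice'];
};

$first  = remember($cache, 'user:42', 60, $loadUser);
$second = remember($cache, 'user:42', 60, $loadUser);
echo $second['name'], ' loaded with ', $dbCalls, ' database call(s)', PHP_EOL;
// prints "Alice loaded with 1 database call(s)"
```

Because a miss is signalled with null, this sketch cannot cache null values; the time-to-live keeps stale entries from living forever.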

2. Data warehouse design

- Dimensional model and fact table

Data warehouse design is the core of big data management, and the dimensional model and the fact table are its two most important concepts.

Dimension tables describe each dimension of the business, such as time, region, and product. Fact tables record the measured data, such as sales or page visits. Joining fact tables to different dimensions enables flexible queries and multidimensional analysis.
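To make the relationship between the two kinds of table concrete, here is a small sketch that uses PHP arrays in place of database tables. All table names, keys, and figures are made up for illustration; in a real warehouse the same result would come from a SQL join with GROUP BY.

```php
<?php

// Dimension tables, keyed by surrogate ID (sample data is made up).
$dimRegion  = [1 => ['name' => 'North'], 2 => ['name' => 'South']];
$dimProduct = [10 => ['name' => 'Laptop'], 11 => ['name' => 'Phone']];

// Fact table: each row holds foreign keys into the dimensions plus a measure.
$factSales = [
    ['region_id' => 1, 'product_id' => 10, 'amount' => 1200],
    ['region_id' => 1, 'product_id' => 11, 'amount' => 800],
    ['region_id' => 2, 'product_id' => 10, 'amount' => 1500],
    ['region_id' => 2, 'product_id' => 11, 'amount' => 300],
    ['region_id' => 2, 'product_id' => 11, 'amount' => 200],
];

/** Sum the measure grouped by one dimension (the equivalent of a join plus GROUP BY). */
function totalBy(array $facts, string $foreignKey, array $dimension): array
{
    $totals = [];
    foreach ($facts as $row) {
        // Resolve the foreign key to the dimension's descriptive label.
        $label = $dimension[$row[$foreignKey]]['name'];
        $totals[$label] = ($totals[$label] ?? 0) + $row['amount'];
    }
    return $totals;
}

print_r(totalBy($factSales, 'region_id', $dimRegion));   // North => 2000, South => 2000
print_r(totalBy($factSales, 'product_id', $dimProduct)); // Laptop => 2700, Phone => 1300
```

The same fact rows answer both questions; only the dimension used for grouping changes.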

- ETL

Data warehouse design covers more than storage; it also requires extracting, transforming, and loading data (ETL).

ETL consists of three steps: extract, transform, and load. Extraction obtains the required data from the source systems; transformation covers cleaning, format conversion, data integration, and similar operations; loading writes the transformed data into the target system.
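The three steps can be sketched as a small pipeline of PHP generators. This is only an illustration under stated assumptions: the CSV layout, the `d/m/Y` source date format, and the function names `extractRows`, `transformRows`, and `loadRows` are invented here, and a plain array stands in for the warehouse table.

```php
<?php

/** Extract: yield one associative row per CSV line (the header gives the keys). */
function extractRows(string $csv): Generator
{
    $lines  = preg_split('/\R/', trim($csv));
    $header = str_getcsv(array_shift($lines), ',', '"', '\\');
    foreach ($lines as $line) {
        $fields = str_getcsv($line, ',', '"', '\\');
        if (count($fields) !== count($header)) {
            continue; // skip blank or malformed lines
        }
        yield array_combine($header, $fields);
    }
}

/** Transform: trim text, normalise dates to Y-m-d, cast amounts, drop invalid rows. */
function transformRows(iterable $rows): Generator
{
    foreach ($rows as $row) {
        $date   = DateTimeImmutable::createFromFormat('!d/m/Y', trim($row['date']));
        $amount = trim($row['amount']);
        if ($date === false || !is_numeric($amount)) {
            continue; // cleaning step: skip rows that cannot be parsed
        }
        yield [
            'date'   => $date->format('Y-m-d'),
            'region' => strtoupper(trim($row['region'])),
            'amount' => (float) $amount,
        ];
    }
}

/** Load: append rows to the target; an array stands in for a warehouse table. */
function loadRows(iterable $rows, array &$target): int
{
    $count = 0;
    foreach ($rows as $row) {
        $target[] = $row;
        $count++;
    }
    return $count;
}

// Made-up source data; the second data row has an invalid amount.
$csv = <<<CSV
date,region,amount
05/01/2024, north ,120.50
06/01/2024,south,abc
07/01/2024,South,80
CSV;

$warehouse = [];
$loaded    = loadRows(transformRows(extractRows($csv)), $warehouse);
echo $loaded, ' rows loaded', PHP_EOL; // prints "2 rows loaded"
```

Generators keep only one row in memory at a time, which matters once the source is a large file or a database cursor instead of a short string.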

- OLAP

Online analytical processing (OLAP) is a multidimensional data analysis technology that makes it easy to aggregate, analyze, and query data. The most common OLAP structure is the multidimensional data cube.

A multidimensional data cube is a data structure built by combining dimension tables with fact tables. Each axis of the cube represents a dimension, and each cell holds a measure from the fact table. Operations such as slicing (fixing one dimension to a value), rotating (pivoting the axes), and rolling up or drilling down between levels of detail produce different views of the data, which supports multidimensional analysis and reporting.
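Two of the usual cube operations, slicing and rolling up, can be sketched in plain PHP over a handful of fact rows. The data and the function names `sliceCube` and `rollUp` are made up for illustration; a dedicated OLAP engine would precompute and index these aggregates instead of scanning rows.

```php
<?php

// Fact rows already joined with their dimension attributes (sample data is made up).
$rows = [
    ['year' => 2023, 'region' => 'North', 'product' => 'Laptop', 'sales' => 100],
    ['year' => 2023, 'region' => 'South', 'product' => 'Laptop', 'sales' => 150],
    ['year' => 2023, 'region' => 'North', 'product' => 'Phone',  'sales' => 70],
    ['year' => 2024, 'region' => 'North', 'product' => 'Laptop', 'sales' => 120],
    ['year' => 2024, 'region' => 'South', 'product' => 'Phone',  'sales' => 90],
];

/** Slice: fix one dimension to a single value. */
function sliceCube(array $rows, string $dimension, $value): array
{
    return array_values(array_filter(
        $rows,
        function (array $row) use ($dimension, $value): bool {
            return $row[$dimension] === $value;
        }
    ));
}

/** Roll-up: aggregate the measure over the chosen dimensions only. */
function rollUp(array $rows, array $dimensions, string $measure): array
{
    $totals = [];
    foreach ($rows as $row) {
        // Build a composite key such as "North|Laptop" from the kept dimensions.
        $parts = [];
        foreach ($dimensions as $dimension) {
            $parts[] = $row[$dimension];
        }
        $key = implode('|', $parts);
        $totals[$key] = ($totals[$key] ?? 0) + $row[$measure];
    }
    return $totals;
}

// Roll up to yearly totals: 2023 => 320, 2024 => 210
print_r(rollUp($rows, ['year'], 'sales'));

// Slice on year 2023, then view by region and product:
// North|Laptop => 100, South|Laptop => 150, North|Phone => 70
print_r(rollUp(sliceCube($rows, 'year', 2023), ['region', 'product'], 'sales'));
```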

In short, big data management and data warehouse design are important parts of PHP applications. Choosing an appropriate database, sharding databases and tables, caching data, and applying ETL and OLAP can improve both data processing efficiency and the accuracy of queries and analysis.


Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1669

1669

14

14

1428

1428

52

52

1329

1329

25

25

1273

1273

29

29

1256

1256

24

24

Read CSV files and perform data analysis using pandas

Jan 09, 2024 am 09:26 AM

Read CSV files and perform data analysis using pandas

Jan 09, 2024 am 09:26 AM

Pandas is a powerful data analysis tool that can easily read and process various types of data files. Among them, CSV files are one of the most common and commonly used data file formats. This article will introduce how to use Pandas to read CSV files and perform data analysis, and provide specific code examples. 1. Import the necessary libraries First, we need to import the Pandas library and other related libraries that may be needed, as shown below: importpandasaspd 2. Read the CSV file using Pan

Introduction to data analysis methods

Jan 08, 2024 am 10:22 AM

Introduction to data analysis methods

Jan 08, 2024 am 10:22 AM

Common data analysis methods: 1. Comparative analysis method; 2. Structural analysis method; 3. Cross analysis method; 4. Trend analysis method; 5. Cause and effect analysis method; 6. Association analysis method; 7. Cluster analysis method; 8 , Principal component analysis method; 9. Scatter analysis method; 10. Matrix analysis method. Detailed introduction: 1. Comparative analysis method: Comparative analysis of two or more data to find the differences and patterns; 2. Structural analysis method: A method of comparative analysis between each part of the whole and the whole. ; 3. Cross analysis method, etc.

How to build a fast data analysis application using React and Google BigQuery

Sep 26, 2023 pm 06:12 PM

How to build a fast data analysis application using React and Google BigQuery

Sep 26, 2023 pm 06:12 PM

How to use React and Google BigQuery to build fast data analysis applications Introduction: In today's era of information explosion, data analysis has become an indispensable link in various industries. Among them, building fast and efficient data analysis applications has become the goal pursued by many companies and individuals. This article will introduce how to use React and Google BigQuery to build a fast data analysis application, and provide detailed code examples. 1. Overview React is a tool for building

11 basic distributions that data scientists use 95% of the time

Dec 15, 2023 am 08:21 AM

11 basic distributions that data scientists use 95% of the time

Dec 15, 2023 am 08:21 AM

Following the last inventory of "11 Basic Charts Data Scientists Use 95% of the Time", today we will bring you 11 basic distributions that data scientists use 95% of the time. Mastering these distributions helps us understand the nature of the data more deeply and make more accurate inferences and predictions during data analysis and decision-making. 1. Normal Distribution Normal Distribution, also known as Gaussian Distribution, is a continuous probability distribution. It has a symmetrical bell-shaped curve with the mean (μ) as the center and the standard deviation (σ) as the width. The normal distribution has important application value in many fields such as statistics, probability theory, and engineering.

How to use ECharts and php interfaces to implement data analysis and prediction of statistical charts

Dec 17, 2023 am 10:26 AM

How to use ECharts and php interfaces to implement data analysis and prediction of statistical charts

Dec 17, 2023 am 10:26 AM

How to use ECharts and PHP interfaces to implement data analysis and prediction of statistical charts. Data analysis and prediction play an important role in various fields. They can help us understand the trends and patterns of data and provide references for future decisions. ECharts is an open source data visualization library that provides rich and flexible chart components that can dynamically load and process data by using the PHP interface. This article will introduce the implementation method of statistical chart data analysis and prediction based on ECharts and php interface, and provide

Machine learning and data analysis using Go language

Nov 30, 2023 am 08:44 AM

Machine learning and data analysis using Go language

Nov 30, 2023 am 08:44 AM

In today's intelligent society, machine learning and data analysis are indispensable tools that can help people better understand and utilize large amounts of data. In these fields, Go language has also become a programming language that has attracted much attention. Its speed and efficiency make it the choice of many programmers. This article introduces how to use Go language for machine learning and data analysis. 1. The ecosystem of machine learning Go language is not as rich as Python and R. However, as more and more people start to use it, some machine learning libraries and frameworks

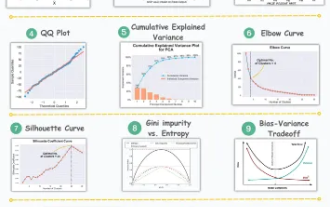

11 Advanced Visualizations for Data Analysis and Machine Learning

Oct 25, 2023 am 08:13 AM

11 Advanced Visualizations for Data Analysis and Machine Learning

Oct 25, 2023 am 08:13 AM

Visualization is a powerful tool for communicating complex data patterns and relationships in an intuitive and understandable way. They play a vital role in data analysis, providing insights that are often difficult to discern from raw data or traditional numerical representations. Visualization is crucial for understanding complex data patterns and relationships, and we will introduce the 11 most important and must-know charts that help reveal the information in the data and make complex data more understandable and meaningful. 1. KSPlotKSPlot is used to evaluate distribution differences. The core idea is to measure the maximum distance between the cumulative distribution functions (CDF) of two distributions. The smaller the maximum distance, the more likely they belong to the same distribution. Therefore, it is mainly interpreted as a "system" for determining distribution differences.

What are the recommended data analysis websites?

Mar 13, 2024 pm 05:44 PM

What are the recommended data analysis websites?

Mar 13, 2024 pm 05:44 PM

Recommended: 1. Business Data Analysis Forum; 2. National People’s Congress Economic Forum - Econometrics and Statistics Area; 3. China Statistics Forum; 4. Data Mining Learning and Exchange Forum; 5. Data Analysis Forum; 6. Website Data Analysis; 7. Data analysis; 8. Data Mining Research Institute; 9. S-PLUS, R Statistics Forum.