Li Mu and Kuaishou veteran Li Yan reportedly turn to large models after leaving their jobs, as ChatGPT sets off an AI startup boom

Recently, one prominent industry figure after another has joined the large-model race.

Yesterday, the news that Li Mu had left Amazon to work on large models spread across social networks like a thunderclap.

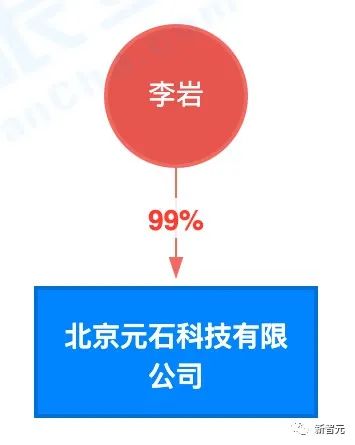

Following this, it was revealed today that the company Li Yan, formerly a core figure in Kuaishou's AI work, founded in 2022 after leaving Kuaishou is also building large models.

Clearly, now that ChatGPT has shown the world what AI applications can look like, competition among Chinese companies at the AI model layer has begun to intensify.

Kuaishou veteran Li Yan starts a company focused on multimodality

Li Yan established the AI company "Yuanshi Technology" in the second half of 2022, mainly to develop multimodal large models.

Li Yan was an early Kuaishou employee, with an employee number of around 75, and a core figure in the research and development of Kuaishou's AI technology.

In November 2015, with the support of Su Hua, then CEO of Kuaishou, Li Yan established the company's first internal deep learning department, the DL (Deep Learning) group, with the goal of building algorithmic models to identify video content that violates laws and regulations.

Subsequently, Kuaishou's needs for video content understanding grew. In 2016, Li Yan renamed the team from the DL group to the MMU (Multimedia Understanding) group. In addition to solving security and compliance issues, the group also began developing algorithmic models for other modalities such as voice, text, and images.

At the 2018 CNCC conference, Li Yan emphasized the importance of multimodal model technology in a speech titled "Multimodal Content Production and Understanding":

- Change the way humans and computers interact

- Make information distribution more efficient

Take the short videos we often watch as an example. In addition to multimodal information such as visuals, audio, and text, user behavior is yet another modality of data.

In this way, the video itself and the user's behavior together constitute a very complex multimodal problem.

The purpose of multimodal research is to make human-computer interaction more and more natural and comfortable.

However, multimodal research is quite difficult.

On the one hand, it must address the semantic gap within a single modality and the heterogeneity gap that arises when jointly modeling data from different modalities; on the other hand, it must also solve the missing-data problem caused by the difficulty of constructing multimodal datasets.

At that time, much academic research was still focused on single-modal problems, but Li Yan firmly believed that multimodality would become a more valuable research direction in the future.

His experience at Kuaishou gave Li Yan a deep understanding of the AI ecosystem around short video. In 2021, he chose to leave Kuaishou.

In the second half of 2022, he established Yuanshi Technology. 36Kr has exclusively confirmed that Yuanshi Technology's main focus is the research and development of multimodal large models.

Mentor and student of one mind: writing a book, starting a company, and starting another

Yesterday, the news that Li Mu had apparently joined a large-model startup instantly flooded social media feeds.

According to the public account "Dear Data", Alex Smola, the "father of parameter server", left Amazon in February this year and founded an artificial intelligence company called Boson.ai.

Little information about the new company is available, and its official page is still under construction.

Link: https://boson.ai/

What is certain is that the company will work on large-model projects.

Alex's LinkedIn profile also says: "We are doing something big. If you are interested in scalable foundation models, please contact me."

It is worth noting that Li Mu, chief scientist at Amazon, has also contributed code on the company's GitHub page.

It is therefore speculated that Li Mu has joined Boson.ai to start a business with his mentor.

So far, however, his homepage has not been updated.

Li Mu and Alex Smola had previously founded a data analysis algorithm company called Marianas Labs in 2016.

At that time, Li Mu served as CTO and co-founder.

In his essay "Five Years of a PhD", Li Mu recalled that the popularity of deep learning at the time had led to a wave of high-priced acquisitions of startups.

Alex raised several hundred thousand in angel investment and worked on the company with him for a while. Alex wrote the crawlers and Li Mu ran the models, and the company was later sold to 1-Page, a small public company.

The mentor and student first met at Carnegie Mellon University (CMU).

In September 2012, Li Mu went to CMU for further studies, studying under Alex Smola.

At that time, Alex was still working at Google and had no funding, so he placed Li Mu under Dave Andersen. As a result, Li Mu had two advisors, one working on machine learning and the other on distributed systems.

During his first half year at CMU, Li Mu met with each of his two advisors for an hour every week.

The two advisors had very different styles. Alex thinks very quickly, so it was hard to keep up with his pace, and Li Mu had to prepare thoroughly before explaining his ideas to him.

Dave, by contrast, offered fewer ideas of his own but helped Li Mu understand a problem thoroughly.

Under the guidance of his two advisors, Li Mu grew rapidly.

In his second year at CMU, while Yu Kai and others were working on deep learning, Li Mu also joined this research boom.

Driven by his interest in distributed deep learning frameworks, he chose to collaborate with Chen Tianqi and use CXXNet as a starting point for deep learning projects.

While writing the xgboost distributed launch script together, the two discovered that the file-reading code could be shared across multiple projects.

To avoid reinventing the wheel, Li Mu and Chen Tianqi created an organization called DMLC on GitHub, and later went on to create MXNet, which became a great success.

In July 2016, Alex joined Amazon. At the same time, Li Mu brought MXNet with him and joined Amazon as a part-time employee, choosing to stay on after graduation.

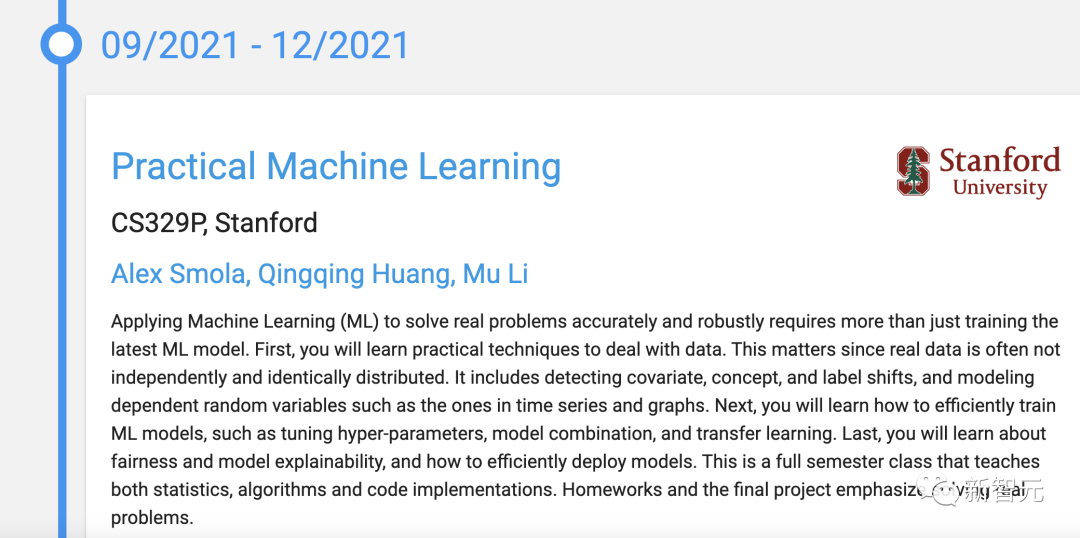

In 2019, the mentor and student also lectured together at UC Berkeley.

In 2021, the two also taught "Practical Machine Learning" together at Stanford University.

It is worth mentioning that the book "Dive into Deep Learning" (published in Chinese as "Hands-On Deep Learning") was co-written by Li Mu; Aston Zhang, who holds a PhD in computer science from the University of Illinois at Urbana-Champaign; and Li Mu's mentor Alex.

The book has been very popular since its release. Since Li Mu is one of the authors of MXNet, the book was also written using the MXNet framework.

Who else is on the road to large models?

Multimodality is the direction Li Yan has long wanted to pursue. Li Mu's decision to follow his mentor into a startup may have been influenced to some extent by the popularity of ChatGPT.

Competition among Chinese companies at the AI model layer has begun to intensify. The large-model race is now crowded with players of every kind: tech giants, industry heavyweights, returnees and big-company executives, small startups pivoting into the field, professors, and onlookers just along for the ride.

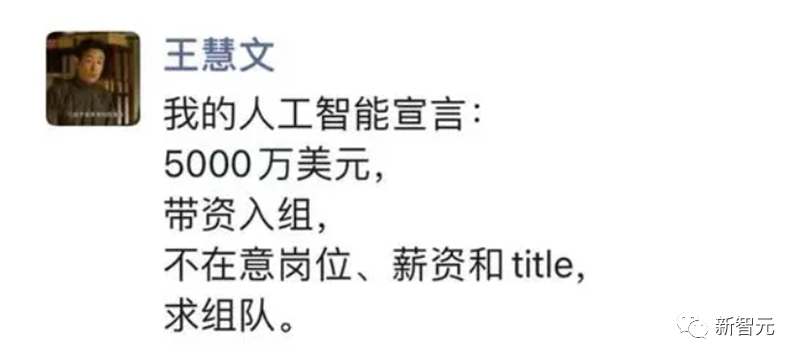

On February 13, Wang Huiwen, who had been retired from Meituan for two years, returned to the public eye with an "AI Hero List", saying that he would put in 50 million US dollars of his own money and "bring funding to the team", adding: "I don't care about the position, salary, or title. I want to form a team."

First Wang Huiwen raised the price of admission for a large-model startup to 50 million US dollars; then Li Zhifei, founder of Mobvoi (Chumenwenwen), officially announced that he was entering the large-model race.

Li Zhifei had led a team to train the large model UCLAL in 2020.

In addition, former Sogou CEO Wang Xiaochuan also made a low-key announcement that he was about to enter the battle to build "China's OpenAI", and confirmed to 36Kr that preparations were moving quickly.

On February 26, Zhou Bowen, the founder and chief scientist of Xianyuan Technology, also posted a message announcing that he was recruiting partners to build a Chinese version of ChatGPT together.

The recent surge in demand shows that the potential market for generative AI products in China is surprisingly large.

The explosion of ChatGPT means that the singularity has arrived. It has triggered deeper, more fundamental changes: the new generation of AI will integrate the physical world and the information world to form a closed loop of knowledge, computing, and reasoning.

In just two days, two prominent figures were revealed to have left their jobs to start large-model companies. The launch events previewed by China's tech giants are also expected within the next few months.

In this Chinese race to catch up on large AI models, which began at the start of the year, we may therefore soon see some players sprinting toward the finish line.
