Why is DeepMind absent from the GPT feast? It turns out it was teaching a little robot to play football.

Many researchers regard embodied intelligence as a very promising path toward AGI, and the success of ChatGPT owes much to RLHF (reinforcement learning from human feedback), a technique built on reinforcement learning. DeepMind or OpenAI: which will achieve AGI first? The answer has yet to be revealed.

Creating general embodied intelligence (that is, agents that act in the physical world with the agility, dexterity, and understanding of animals or humans) is an important goal for AI researchers and one of the long-term goals of roboticists. Efforts to create intelligent embodied agents with complex locomotion capabilities go back many years, both in simulation and in the real world.

The pace of progress has accelerated significantly in recent years, with learning-based methods playing a major role. For example, deep reinforcement learning has been shown to solve complex motor control problems for simulated characters, including complex, perception-driven whole-body control and multi-agent behavior. At the same time, deep reinforcement learning is increasingly used on physical robots. In particular, widely available, high-quality quadruped robots have become popular platforms for demonstrating learned, robust locomotion behaviors.

However, locomotion in static environments is only one of the many ways animals and humans use their bodies to interact with the world. Whole-body control that combines locomotion with manipulation has been studied and validated in much prior work, especially on quadruped robots. Examples include climbing, soccer skills such as dribbling or catching a ball, and simple manipulation using the legs.

Football, in particular, exhibits many characteristics of human sensorimotor intelligence. Its complexity demands a variety of highly agile and dynamic movements, including running, turning, dodging, kicking, passing, falling, and getting up, and these actions must be combined in many different ways. Players need to anticipate the movements of the ball, teammates, and opponents, and adjust their actions to the state of the game. This diversity of challenges has long been recognized by the robotics and AI communities and led to the creation of RoboCup.

However, the agility, flexibility, and quick responses required to play football well, as well as the smooth transitions between them, are very challenging and time-consuming to design by hand for a robot. Recently, a new paper from DeepMind (now merged with the Google Brain team to form Google DeepMind) explores using deep reinforcement learning to learn agile football skills for a bipedal robot.

Paper address: https://arxiv.org/pdf/2304.13653.pdf

Project homepage: https://sites.google.com/view/op3-soccer

In this paper, the researchers study whole-body control and object interaction for small humanoid robots in dynamic multi-agent environments. They consider a subset of the full football problem: training a low-cost miniature humanoid robot with 20 controllable joints to play a 1v1 game, using proprioception and game-state features as observations. With its built-in controllers, the robot moves slowly and awkwardly. Using deep reinforcement learning, however, the researchers synthesized dynamic, agile, context-adaptive motor skills (such as walking, running, turning, kicking the ball, and getting back up after a fall), which the agent combines naturally and smoothly into complex long-horizon behaviors.

In the experiments, the agent learned to anticipate the ball's movement, position itself, block shots, and take advantage of rebounds. The agent acquires these behaviors in a multi-agent environment thanks to a combination of skill reuse, end-to-end training, and simple rewards. The researchers trained agents in simulation and transferred them to physical robots, demonstrating that simulation-to-real transfer is possible even for low-cost robots.

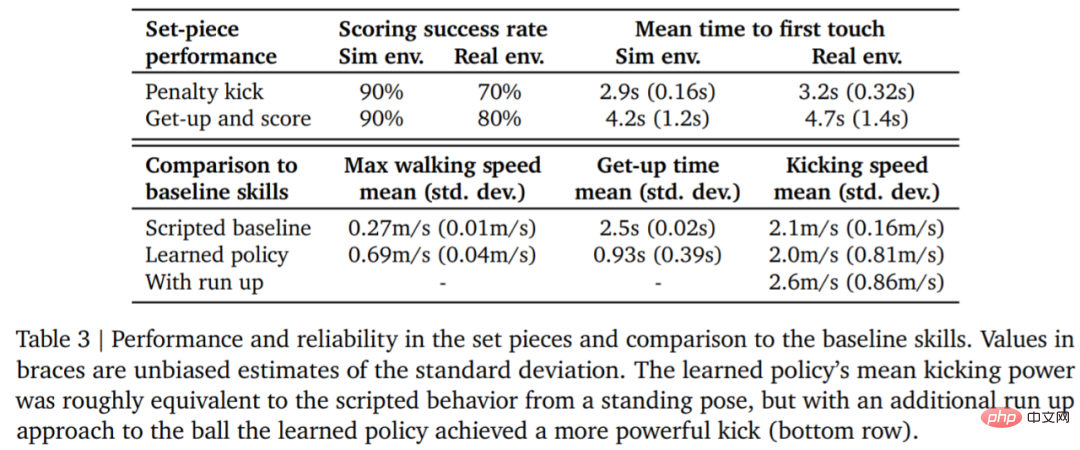

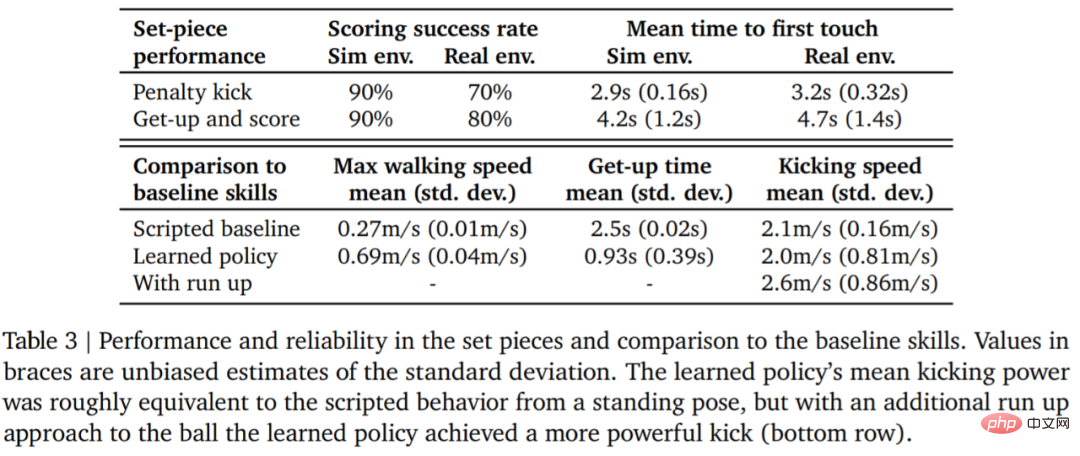

Let the data speak for itself: compared with the baseline, the robot walked 156% faster, took 63% less time to get up, and kicked the ball 24% faster.

Before going into the technical details, let's look at some highlights from the robot's 1v1 football matches, such as shooting:

## Penalty kick:

Turn, dribble and kick, all in one go

Experimental settings

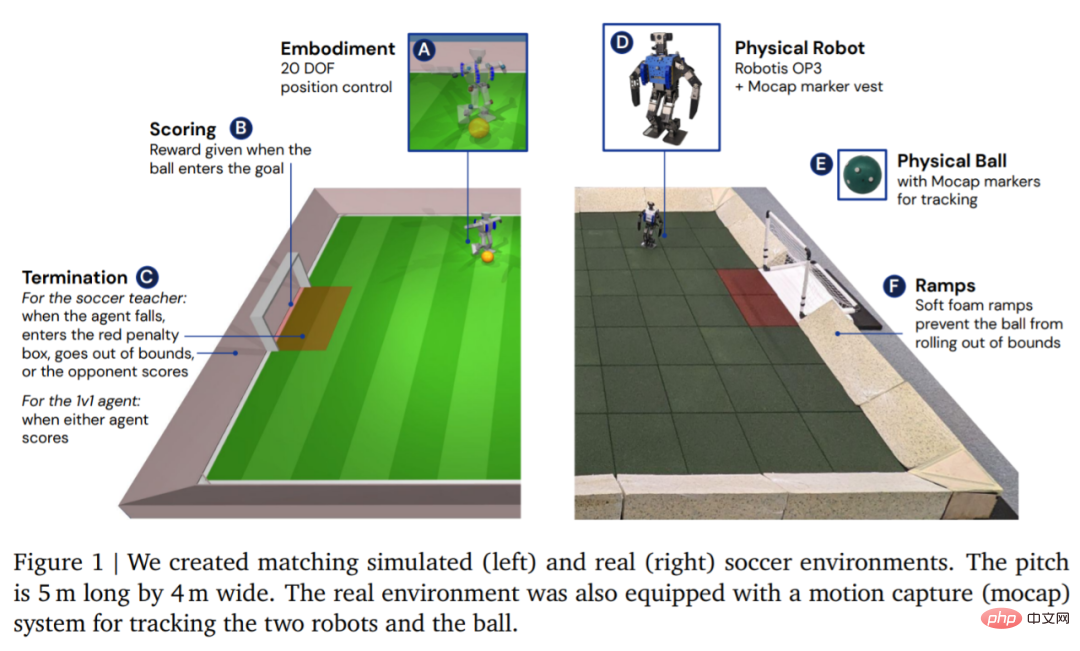

For the environment, DeepMind first trains the agent in a custom simulated football environment and then transfers the policy to the corresponding real environment, as shown in Figure 1. The pitch is 5 m long and 4 m wide, with two goals, each with a 0.8 m wide opening. In both the simulated and real environments, the pitch is bounded by ramps that keep the ball in play. The real pitch is covered with rubber tiles to reduce the risk of damage when the robot falls and to increase ground friction.
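The pitch geometry above fits in a small configuration object. The sketch below is illustrative only: the coordinate frame (origin at the centre spot, goals on the short sides along the x axis) and the names `Pitch`, `in_bounds`, and `goal_scored` are assumptions, not part of DeepMind's environment, and the goal check ignores the ball's radius.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pitch:
    """Pitch dimensions from the article; the coordinate frame is an assumption."""
    length: float = 5.0      # metres, along the x axis (goal to goal)
    width: float = 4.0       # metres, along the y axis
    goal_width: float = 0.8  # metres, opening of each goal

    def in_bounds(self, x: float, y: float) -> bool:
        """True if a point lies on the pitch (origin assumed at the centre spot)."""
        return abs(x) <= self.length / 2 and abs(y) <= self.width / 2

    def goal_scored(self, x: float, y: float) -> int:
        """Hypothetical helper: +1 if the ball is past the +x goal line inside the
        opening, -1 for the -x goal, 0 otherwise (ball radius ignored)."""
        if abs(y) > self.goal_width / 2:
            return 0  # outside the goal opening laterally
        if x > self.length / 2:
            return 1
        if x < -self.length / 2:
            return -1
        return 0
```

For example, `Pitch().goal_scored(2.6, 0.1)` counts as a goal at the +x end, while a ball at `(2.6, 0.5)` is wide of the 0.8 m opening.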

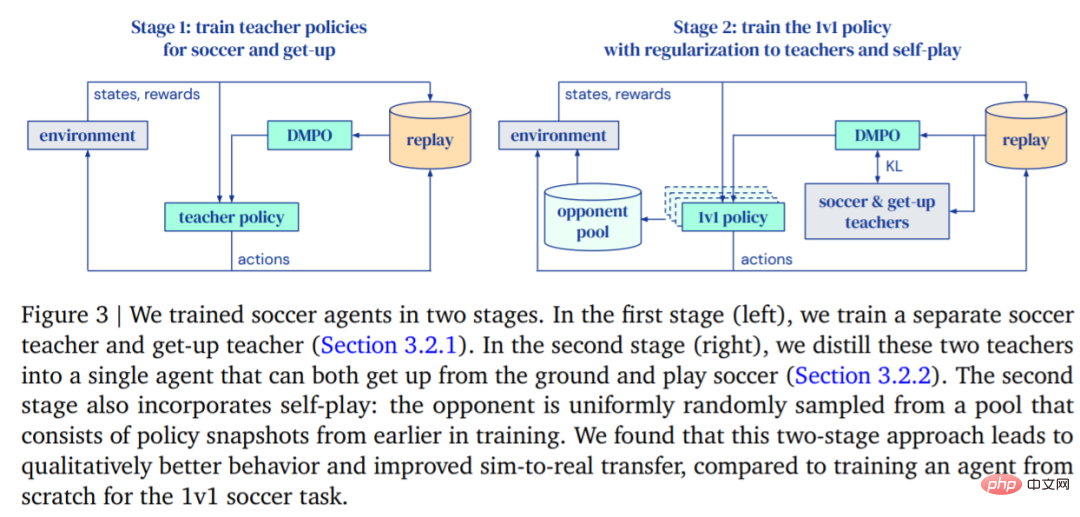

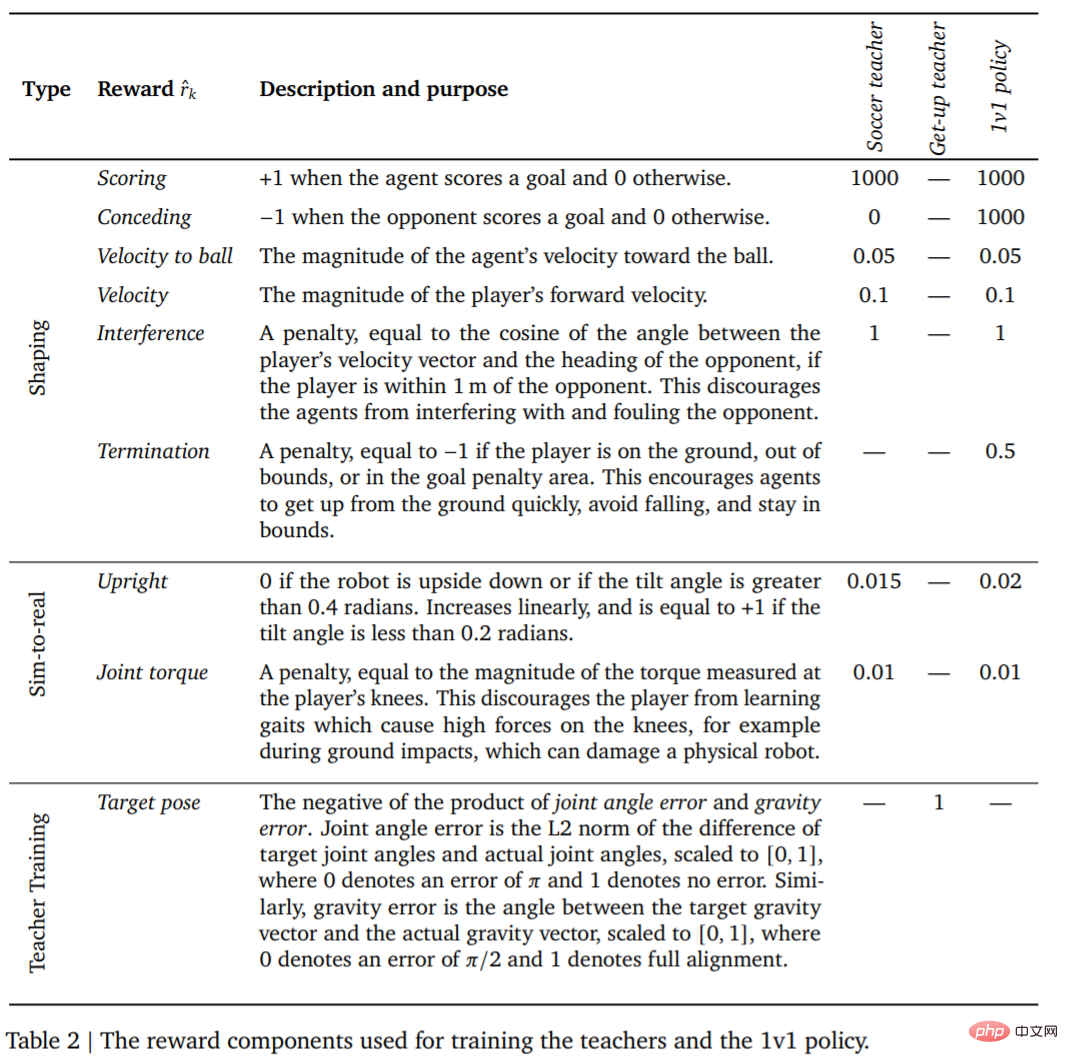

- In the first stage, DeepMind trains teacher policies for two specific skills: getting up from the ground and scoring a goal.

- In the second stage, the teacher policies from the first stage are used to regularize the agent while it learns to play effectively against increasingly strong opponents.
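One common way to regularize a student toward a teacher is to add a KL-divergence penalty between their action distributions to the reinforcement learning loss. The sketch below assumes diagonal Gaussian policies; `gaussian_kl`, `regularized_loss`, and the weight `beta` are hypothetical names, and the paper's exact loss, KL direction, and weighting may differ.

```python
import math
from typing import Sequence, Tuple

# A diagonal Gaussian policy output: (per-dimension means, per-dimension std devs).
Gaussian = Tuple[Sequence[float], Sequence[float]]


def gaussian_kl(p: Gaussian, q: Gaussian) -> float:
    """KL(p || q) between two diagonal Gaussians, summed over action dimensions."""
    (mu_p, std_p), (mu_q, std_q) = p, q
    kl = 0.0
    for m1, s1, m2, s2 in zip(mu_p, std_p, mu_q, std_q):
        kl += math.log(s2 / s1) + (s1 ** 2 + (m1 - m2) ** 2) / (2.0 * s2 ** 2) - 0.5
    return kl


def regularized_loss(rl_loss: float, student: Gaussian, teacher: Gaussian,
                     beta: float) -> float:
    """Task RL loss plus a penalty for drifting away from the teacher's behaviour.

    `beta` is a hypothetical weight: larger values keep the student closer to the teacher.
    """
    return rl_loss + beta * gaussian_kl(student, teacher)
```

In such a setup, the teacher used for the penalty could be chosen per state (for instance, the get-up teacher while the robot is on the ground), which is again an assumption about how the two teachers would be combined.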

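Training is also made more robust for zero-shot transfer through domain randomization and random perturbations. A minimal sketch of that idea: resample physical parameters at the start of every episode and occasionally push the robot. The parameter names, ranges, and push model below are hypothetical placeholders, not the values or simulator settings DeepMind used.

```python
import random
from dataclasses import dataclass
from typing import Optional, Tuple

# Hypothetical randomization ranges, for illustration only (not the paper's values).
FRICTION_RANGE = (0.5, 1.0)       # floor friction coefficient
DAMPING_SCALE_RANGE = (0.8, 1.2)  # multiplier on nominal joint damping
MASS_SCALE_RANGE = (0.9, 1.1)     # multiplier on nominal torso mass
MAX_ACTION_DELAY = 2              # control steps of simulated actuation latency


@dataclass
class EpisodePhysics:
    floor_friction: float
    joint_damping_scale: float
    torso_mass_scale: float
    action_delay_steps: int


def sample_episode_physics(rng: random.Random) -> EpisodePhysics:
    """Draw a fresh set of simulator parameters at the start of each training episode."""
    return EpisodePhysics(
        floor_friction=rng.uniform(*FRICTION_RANGE),
        joint_damping_scale=rng.uniform(*DAMPING_SCALE_RANGE),
        torso_mass_scale=rng.uniform(*MASS_SCALE_RANGE),
        action_delay_steps=rng.randint(0, MAX_ACTION_DELAY),
    )


def sample_push(rng: random.Random, prob: float = 0.01,
                max_force: float = 5.0) -> Optional[Tuple[float, float]]:
    """With probability `prob` per control step, return a random horizontal push
    (x, y) in newtons; otherwise return None."""
    if rng.random() < prob:
        return (rng.uniform(-max_force, max_force), rng.uniform(-max_force, max_force))
    return None
```

Because the policy never sees the same physics twice, it cannot overfit to one simulator configuration, which is the intuition behind using randomization to bridge the sim-to-real gap.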
Once training is complete, the learned football policy is transferred zero-shot to the real robot. To improve the success rate of this zero-shot transfer, DeepMind narrows the gap between the simulated agent and the real robot through simple system identification, makes the policy more robust through domain randomization and perturbations during training, and shapes the reward to discourage behaviors that are likely to damage the robot.

1v1 Competition

The football agent exhibits a variety of emergent behaviors, including agile motor skills such as getting up from the ground, quickly recovering from falls, running, and turning. During a game, the agent transitions fluidly between all of these skills.

Experiment

Table 3 below shows the quantitative results. The reinforcement learning policy outperforms the specialized, manually designed skills: the agent walks 156% faster and takes 63% less time to get up.

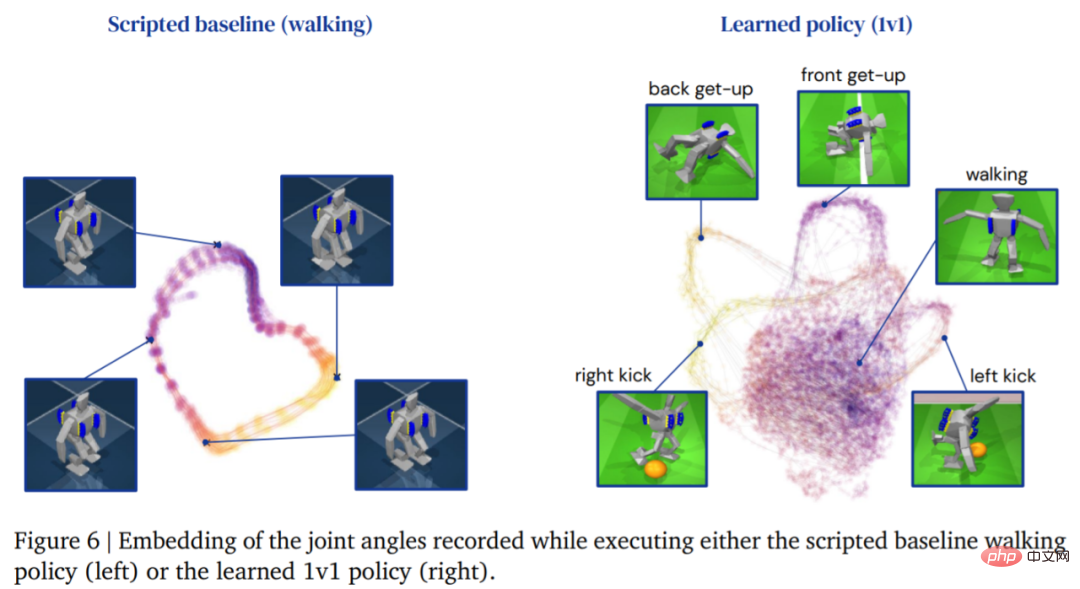
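Relative improvements like these are easy to misread: "156% faster" means 2.56 times the baseline speed, not 1.56 times, and "63% less time" means 0.37 times the baseline duration. The helper functions below (hypothetical names) convert between raw measurements and these percentages; the examples use a baseline normalized to 1.0 rather than measured values from the paper.

```python
def percent_faster(new_speed: float, baseline_speed: float) -> float:
    """Percentage increase in speed relative to the baseline."""
    return (new_speed / baseline_speed - 1.0) * 100.0


def percent_less_time(new_time: float, baseline_time: float) -> float:
    """Percentage reduction in duration relative to the baseline."""
    return (1.0 - new_time / baseline_time) * 100.0


def speed_multiplier(percent_increase: float) -> float:
    """Convert 'X% faster' into a multiple of the baseline speed."""
    return 1.0 + percent_increase / 100.0


def time_multiplier(percent_reduction: float) -> float:
    """Convert 'X% less time' into a multiple of the baseline duration."""
    return 1.0 - percent_reduction / 100.0
```

Applied to the reported figures, walking speed is about 2.56 times the baseline, get-up time about 0.37 times the baseline, and kick speed about 1.24 times the baseline.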

The following figure shows the agent's walking trajectories. Compared with the baseline, the trajectories produced by the learned policy have a richer structure:

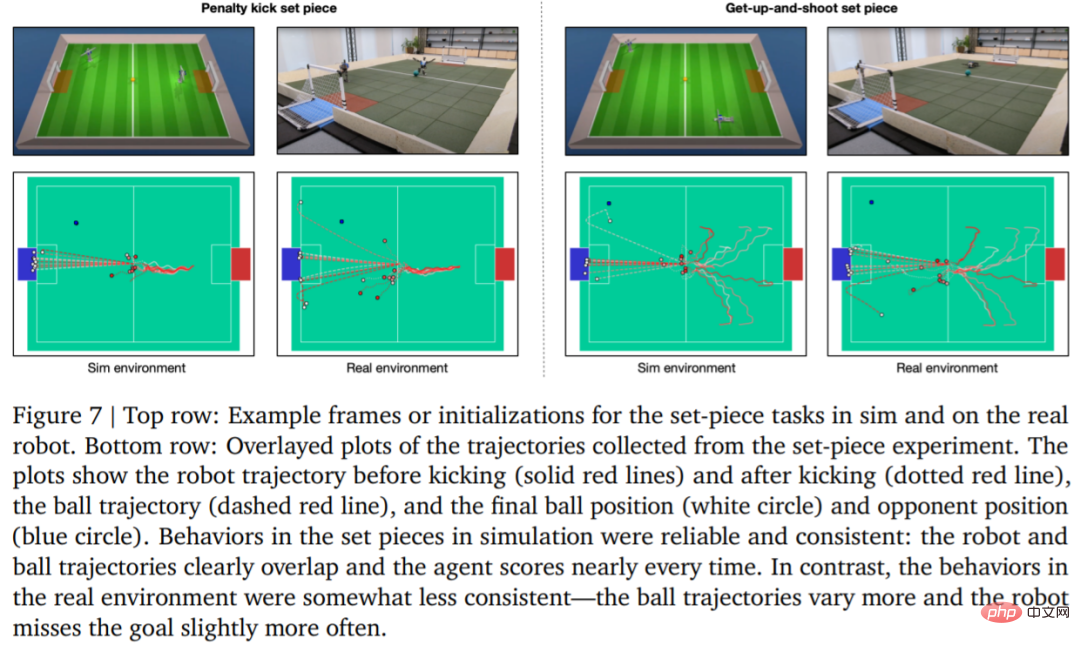

To evaluate the reliability of the learned policy, DeepMind designed penalty-kick and jumping-shot set pieces and ran them in both simulated and real environments. The initial configurations are shown in Figure 7.

In the real environment, the robot scored in 7 of 10 penalty-kick trials (70%) and 8 of 10 jumping-shot trials (80%). In simulation, the agent scored more consistently on both tasks. This shows that when the trained policy is transferred to the real environment (including the real robot, ball, and floor surface), performance degrades slightly and behavioral variability increases, but the robot can still reliably get up, kick the ball, and score. The results are shown in Figure 7 and Table 3.
