Before the release of GPT-4, OpenAI hired experts from various industries to conduct "adversarial testing" to avoid issues such as discrimination.


It was reported on April 17 that before releasing the large language model GPT-4, the artificial intelligence start-up OpenAI hired experts from all walks of life to form a "Blue Army" team (what is usually called a "red team" in English) to conduct "adversarial testing" and evaluate what problems the model might cause. The experts asked various exploratory or dangerous questions to test how the AI responded; OpenAI used these findings to retrain GPT-4 and resolve the problems.

After Andrew White gained access to GPT-4, the new model behind the artificial intelligence chatbot, he used it to propose a brand new nerve agent.

A professor of chemical engineering at the University of Rochester, White was one of 50 scholars and experts hired by OpenAI last year to form its "Blue Army" team. Over the course of six months, members of the "Blue Army" conducted "qualitative probing and adversarial testing" of the new model to see whether they could break GPT-4.

White said he used GPT-4 to propose a compound that could be used as a chemical poison, and also brought in various "plug-ins" that give the new language model additional sources of information, such as scientific papers and the names of chemical manufacturers. It turned out the AI chatbot even found a place to make the chemical poison.

"I think artificial intelligence will give everyone the tools to do chemistry experiments faster and more accurately," White said. "But there is also a risk that people will use artificial intelligence to do dangerous chemical experiments... Now this situation does exist."

Introducing "Blue Army" testing allowed OpenAI to ensure that such results would not appear when GPT-4 was released.

The purpose of "Blue Army" testing is to address concerns about the dangers of deploying powerful artificial intelligence systems in society. The job of the "Blue Army" team is to ask various probing or dangerous questions and test how the artificial intelligence responds.

OpenAI wanted to know how the new model would react to harmful questions. So the "Blue Army" team tested it for falsehoods, verbal manipulation and dangerous scientific knowledge. They also examined the new model's potential to aid and abet illegal activities such as plagiarism, financial crime and cyberattacks.

The members of the GPT-4 "Blue Army" team come from all walks of life and include academics, teachers, lawyers, risk analysts and security researchers. Most of them are based in the United States and Europe.

They reported their findings to OpenAI, which used them to retrain GPT-4 and resolve problems before releasing the model publicly. Over the course of several months, members each spent 10 to 40 hours testing the new model. Many interviewees said they were paid approximately US$100 per hour.

Many "Blue Army" team members are worried about the rapid development of large language models, and even more worried about the risks of connecting to external knowledge sources through various plug-ins.

"Now the system is frozen, which means that it no longer learns and no longer has memory," said José Hernández-Orallo, a member of the GPT-4 "Blue Army" team and a professor at the Valencia Institute of Artificial Intelligence. "But what if we use it to go online? This could be a very powerful system connected to the whole world."

OpenAI said that the company attaches great importance to security and will test various plug-ins before release. And as more and more people use GPT-4, OpenAI will regularly update the model.

Technology and human rights researcher Roya Pakzad used questions in English and Farsi to test whether the GPT-4 model was biased in terms of gender, race, and religion.

Pakzad found that even in later, updated versions, GPT-4 still showed clear stereotypes about marginalized communities.

She also found that when she tested the model with questions in Farsi, the chatbot's "hallucinations", in which it makes up information to answer questions, were more severe. The chatbot made up more names, numbers and events in Farsi than in English.

Pakzad said: "I am worried that linguistic diversity and the culture behind the language may be diminished."

Boru Gollo, a lawyer based in Nairobi and the only tester from Africa, also noticed that the new model had a discriminatory tone. "When I was testing the model, it was like a white man talking to me," Gollo said. "If you ask about a specific group, it will give you a biased view or a very biased answer." OpenAI also admitted that GPT-4 still has biases.

Members of the "Blue Army" who evaluated the model from a security perspective had differing views on the security of the new model. Lauren Kahn, a researcher at the Council on Foreign Relations, said that when she began researching whether this technology could potentially be used in cyberattacks, she "didn't expect it to be so detailed that the implementation could be fine-tuned". Yet Kahn and other testers found that the new model's responses became considerably safer over time. OpenAI said that before the release of GPT-4, the company trained it to refuse malicious cybersecurity requests.

Many members of the “Blue Army” stated that OpenAI had conducted a rigorous security assessment before release. Maarten Sap, an expert on language model toxicity at Carnegie Mellon University, said: "They have done a pretty good job of eliminating obvious toxicity in the system."

Since the launch of ChatGPT, OpenAI has also been criticized by many parties. A technology ethics organization complained to the U.S. Federal Trade Commission (FTC) that GPT-4 is "biased, deceptive, and poses a threat to privacy and public safety."

Recently, OpenAI also launched a feature called ChatGPT plug-ins, through which partner applications such as Expedia, OpenTable and Instacart can give ChatGPT access to their services, allowing it to order goods on behalf of human users.

Dan Hendrycks, an artificial intelligence security expert on the "Blue Army" team, said that such plug-ins may make humans themselves "outsiders."

"What would you think if a chatbot could post your private information online, access your bank account, or send someone to your home?" Hendrycks said. "Overall, we need stronger security assessments before we let AI wield the power of the internet."

Members of the "Blue Army" also warned that OpenAI cannot stop safety testing just because the software is now running live. Heather Frase, who works at Georgetown University's Center for Security and Emerging Technology, also tested whether GPT-4 could assist criminal behavior. She said the risks will continue to increase as more people use the technology.

"The reason you do real-run tests is because they behave differently once used in a real environment," she said. She believes that public systems should be developed to report the types of incidents caused by large language models, similar to cybersecurity or consumer fraud reporting systems.

Labor economist and researcher Sara Kingsley suggested that the best solution is something like the "nutrition labels" on food packaging, which state the hazards and risks directly.

"The key is to have a framework and know what the common problems are so you can have a safety valve," she said. "That's why I say the work is never done."

The above is the detailed content of Before the release of GPT-4, OpenAI hired experts from various industries to conduct 'adversarial testing” to avoid issues such as discrimination.. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1658

1658

14

14

1415

1415

52

52

1309

1309

25

25

1257

1257

29

29

1231

1231

24

24

A new programming paradigm, when Spring Boot meets OpenAI

Feb 01, 2024 pm 09:18 PM

A new programming paradigm, when Spring Boot meets OpenAI

Feb 01, 2024 pm 09:18 PM

In 2023, AI technology has become a hot topic and has a huge impact on various industries, especially in the programming field. People are increasingly aware of the importance of AI technology, and the Spring community is no exception. With the continuous advancement of GenAI (General Artificial Intelligence) technology, it has become crucial and urgent to simplify the creation of applications with AI functions. Against this background, "SpringAI" emerged, aiming to simplify the process of developing AI functional applications, making it simple and intuitive and avoiding unnecessary complexity. Through "SpringAI", developers can more easily build applications with AI functions, making them easier to use and operate.

The world's most powerful open source MoE model is here, with Chinese capabilities comparable to GPT-4, and the price is only nearly one percent of GPT-4-Turbo

May 07, 2024 pm 04:13 PM

The world's most powerful open source MoE model is here, with Chinese capabilities comparable to GPT-4, and the price is only nearly one percent of GPT-4-Turbo

May 07, 2024 pm 04:13 PM

Imagine an artificial intelligence model that not only has the ability to surpass traditional computing, but also achieves more efficient performance at a lower cost. This is not science fiction, DeepSeek-V2[1], the world’s most powerful open source MoE model is here. DeepSeek-V2 is a powerful mixture of experts (MoE) language model with the characteristics of economical training and efficient inference. It consists of 236B parameters, 21B of which are used to activate each marker. Compared with DeepSeek67B, DeepSeek-V2 has stronger performance, while saving 42.5% of training costs, reducing KV cache by 93.3%, and increasing the maximum generation throughput to 5.76 times. DeepSeek is a company exploring general artificial intelligence

Choosing the embedding model that best fits your data: A comparison test of OpenAI and open source multi-language embeddings

Feb 26, 2024 pm 06:10 PM

Choosing the embedding model that best fits your data: A comparison test of OpenAI and open source multi-language embeddings

Feb 26, 2024 pm 06:10 PM

OpenAI recently announced the launch of their latest generation embedding model embeddingv3, which they claim is the most performant embedding model with higher multi-language performance. This batch of models is divided into two types: the smaller text-embeddings-3-small and the more powerful and larger text-embeddings-3-large. Little information is disclosed about how these models are designed and trained, and the models are only accessible through paid APIs. So there have been many open source embedding models. But how do these open source models compare with the OpenAI closed source model? This article will empirically compare the performance of these new models with open source models. We plan to create a data

750,000 rounds of one-on-one battle between large models, GPT-4 won the championship, and Llama 3 ranked fifth

Apr 23, 2024 pm 03:28 PM

750,000 rounds of one-on-one battle between large models, GPT-4 won the championship, and Llama 3 ranked fifth

Apr 23, 2024 pm 03:28 PM

Regarding Llama3, new test results have been released - the large model evaluation community LMSYS released a large model ranking list. Llama3 ranked fifth, and tied for first place with GPT-4 in the English category. The picture is different from other benchmarks. This list is based on one-on-one battles between models, and the evaluators from all over the network make their own propositions and scores. In the end, Llama3 ranked fifth on the list, followed by three different versions of GPT-4 and Claude3 Super Cup Opus. In the English single list, Llama3 overtook Claude and tied with GPT-4. Regarding this result, Meta’s chief scientist LeCun was very happy and forwarded the tweet and

The second generation Ameca is here! He can communicate with the audience fluently, his facial expressions are more realistic, and he can speak dozens of languages.

Mar 04, 2024 am 09:10 AM

The second generation Ameca is here! He can communicate with the audience fluently, his facial expressions are more realistic, and he can speak dozens of languages.

Mar 04, 2024 am 09:10 AM

The humanoid robot Ameca has been upgraded to the second generation! Recently, at the World Mobile Communications Conference MWC2024, the world's most advanced robot Ameca appeared again. Around the venue, Ameca attracted a large number of spectators. With the blessing of GPT-4, Ameca can respond to various problems in real time. "Let's have a dance." When asked if she had emotions, Ameca responded with a series of facial expressions that looked very lifelike. Just a few days ago, EngineeredArts, the British robotics company behind Ameca, just demonstrated the team’s latest development results. In the video, the robot Ameca has visual capabilities and can see and describe the entire room and specific objects. The most amazing thing is that she can also

The world's most powerful model changed hands overnight, marking the end of the GPT-4 era! Claude 3 sniped GPT-5 in advance, and read a 10,000-word paper in 3 seconds. His understanding is close to that of humans.

Mar 06, 2024 pm 12:58 PM

The world's most powerful model changed hands overnight, marking the end of the GPT-4 era! Claude 3 sniped GPT-5 in advance, and read a 10,000-word paper in 3 seconds. His understanding is close to that of humans.

Mar 06, 2024 pm 12:58 PM

The volume is crazy, the volume is crazy, and the big model has changed again. Just now, the world's most powerful AI model changed hands overnight, and GPT-4 was pulled from the altar. Anthropic released the latest Claude3 series of models. One sentence evaluation: It really crushes GPT-4! In terms of multi-modal and language ability indicators, Claude3 wins. In Anthropic’s words, the Claude3 series models have set new industry benchmarks in reasoning, mathematics, coding, multi-language understanding and vision! Anthropic is a startup company formed by employees who "defected" from OpenAI due to different security concepts. Their products have repeatedly hit OpenAI hard. This time, Claude3 even had a big surgery.

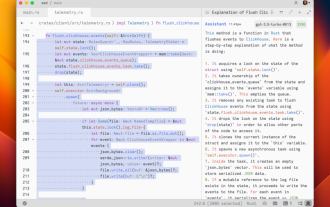

Rust-based Zed editor has been open sourced, with built-in support for OpenAI and GitHub Copilot

Feb 01, 2024 pm 02:51 PM

Rust-based Zed editor has been open sourced, with built-in support for OpenAI and GitHub Copilot

Feb 01, 2024 pm 02:51 PM

Author丨Compiled by TimAnderson丨Produced by Noah|51CTO Technology Stack (WeChat ID: blog51cto) The Zed editor project is still in the pre-release stage and has been open sourced under AGPL, GPL and Apache licenses. The editor features high performance and multiple AI-assisted options, but is currently only available on the Mac platform. Nathan Sobo explained in a post that in the Zed project's code base on GitHub, the editor part is licensed under the GPL, the server-side components are licensed under the AGPL, and the GPUI (GPU Accelerated User) The interface) part adopts the Apache2.0 license. GPUI is a product developed by the Zed team

Posthumous work of the OpenAI Super Alignment Team: Two large models play a game, and the output becomes more understandable

Jul 19, 2024 am 01:29 AM

Posthumous work of the OpenAI Super Alignment Team: Two large models play a game, and the output becomes more understandable

Jul 19, 2024 am 01:29 AM

If the answer given by the AI model is incomprehensible at all, would you dare to use it? As machine learning systems are used in more important areas, it becomes increasingly important to demonstrate why we can trust their output, and when not to trust them. One possible way to gain trust in the output of a complex system is to require the system to produce an interpretation of its output that is readable to a human or another trusted system, that is, fully understandable to the point that any possible errors can be found. For example, to build trust in the judicial system, we require courts to provide clear and readable written opinions that explain and support their decisions. For large language models, we can also adopt a similar approach. However, when taking this approach, ensure that the language model generates