A large model that can 'write' papers by itself, complete with formulas and references: the trial version is now online


In recent years, with the advancement of research in various subject areas, scientific literature and data have exploded, making it increasingly difficult for academic researchers to discover useful insights from large amounts of information. Usually, people use search engines to obtain scientific knowledge, but search engines cannot organize scientific knowledge autonomously.

Now, a research team from Meta AI has proposed Galactica, a new large-scale language model that can store, combine and reason about scientific knowledge.

- Paper address: https://galactica.org/static/paper.pdf

- Trial address: https://galactica.org/

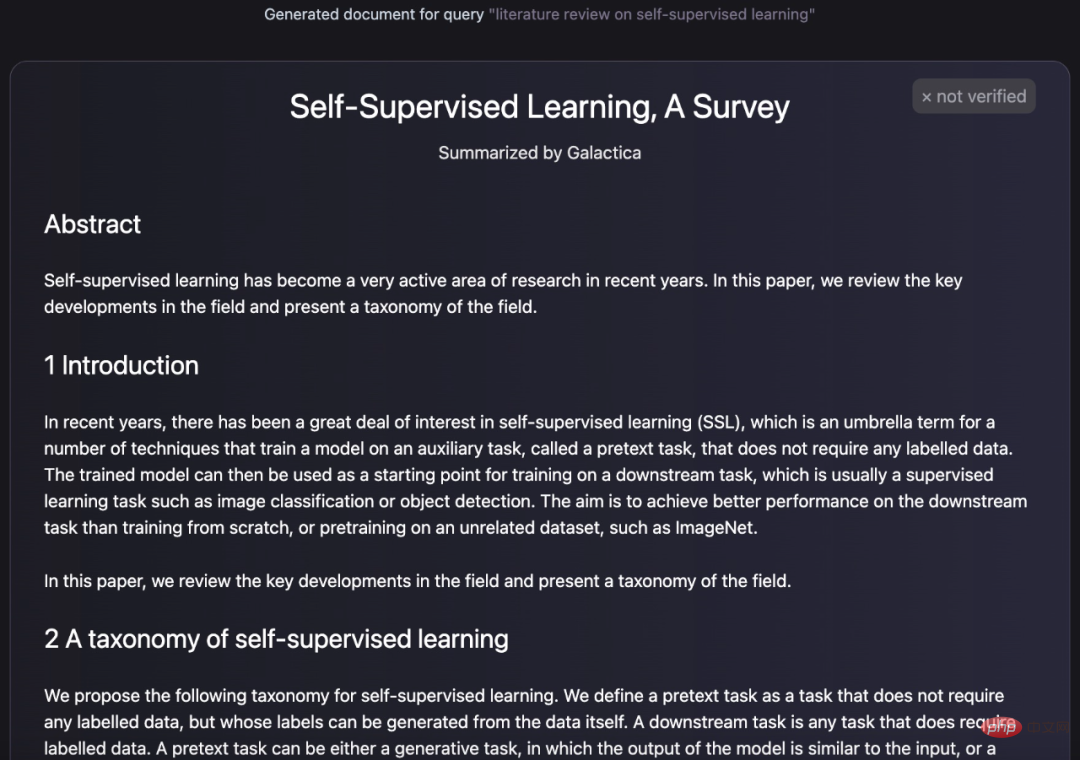

How powerful is the Galactica model? It can summarize the literature and write a review paper by itself:

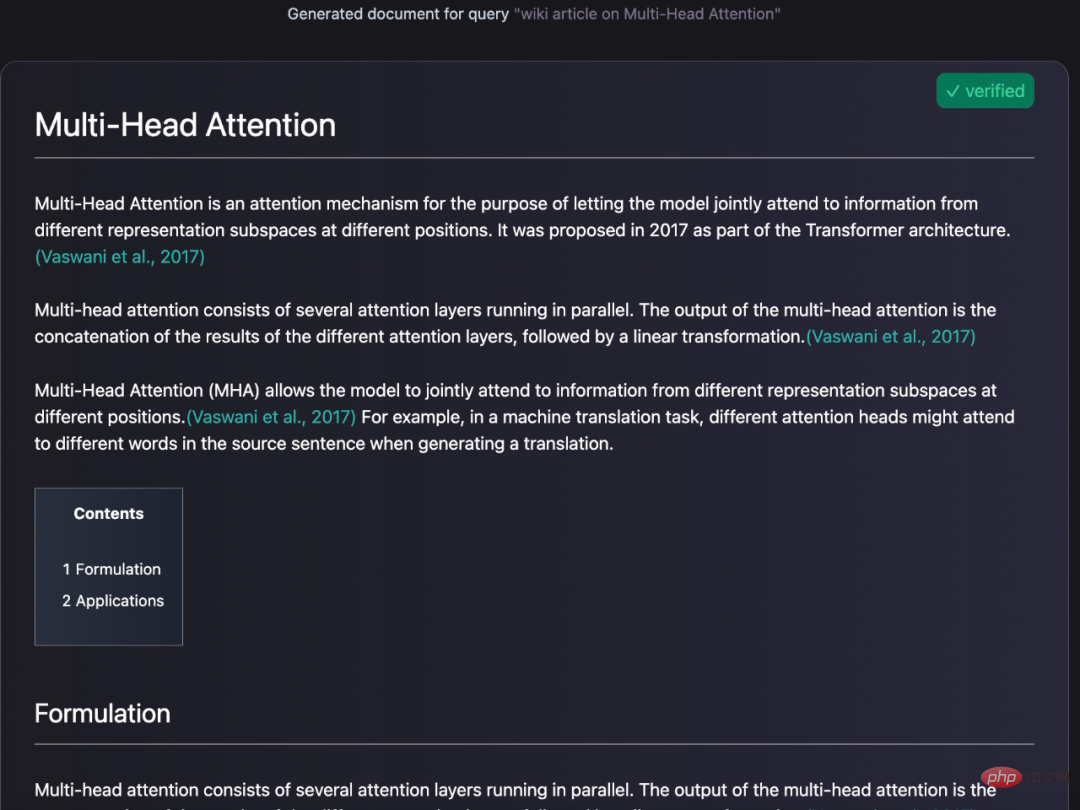

It can also generate an encyclopedia-style entry for a given term:

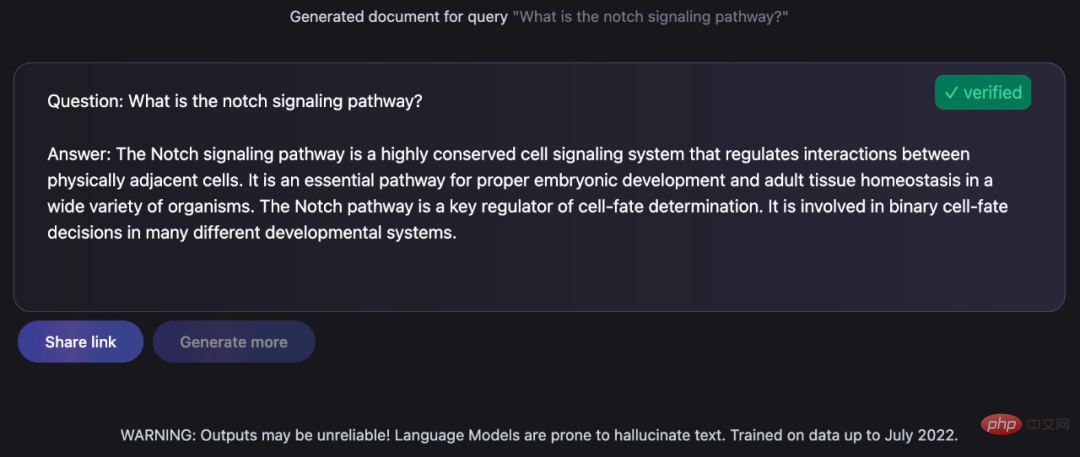

It can give knowledgeable answers to the questions asked:

These tasks are still challenging for humans, yet Galactica accomplishes them very well. Turing Award winner Yann LeCun also tweeted his praise:

Let’s take a look at the specific details of the Galactica model.

Model Overview

The Galactica model is trained on a large scientific corpus of papers, reference materials, knowledge bases and many other sources, including more than 48 million papers, textbooks and lecture notes, data on millions of compounds and proteins, scientific websites, encyclopedias and more. Unlike existing language models that rely on uncurated, web-crawled text, the corpus used to train Galactica is high quality and highly curated. The model was trained for multiple epochs without overfitting, and performance on upstream and downstream tasks improved with the use of repeated tokens.

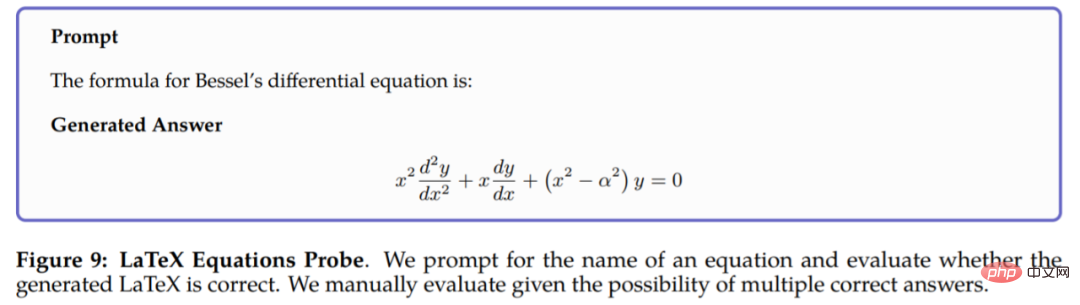

Galactica outperforms existing models on a range of scientific tasks. On technical knowledge probes such as LaTeX equations, Galactica scores 68.2% versus 49.0% for GPT-3. Galactica also excels at reasoning, significantly outperforming Chinchilla on the mathematical MMLU benchmark.

Galactica also outperforms BLOOM and OPT-175B on BIG-bench despite not being trained on a general corpus. Additionally, it sets new performance highs of 77.6% and 52.9% on downstream tasks such as PubMedQA and MedMCQA dev.

Simply put, the research encapsulates step-by-step reasoning in special tokens to mimic an internal working memory. This allows researchers to interact with the model using natural language, as shown below in Galactica’s trial interface.

It is worth mentioning that in addition to text generation, Galactica can also perform multi-modal tasks involving chemical formulas and protein sequences. This will contribute to the field of drug discovery.

Implementation details

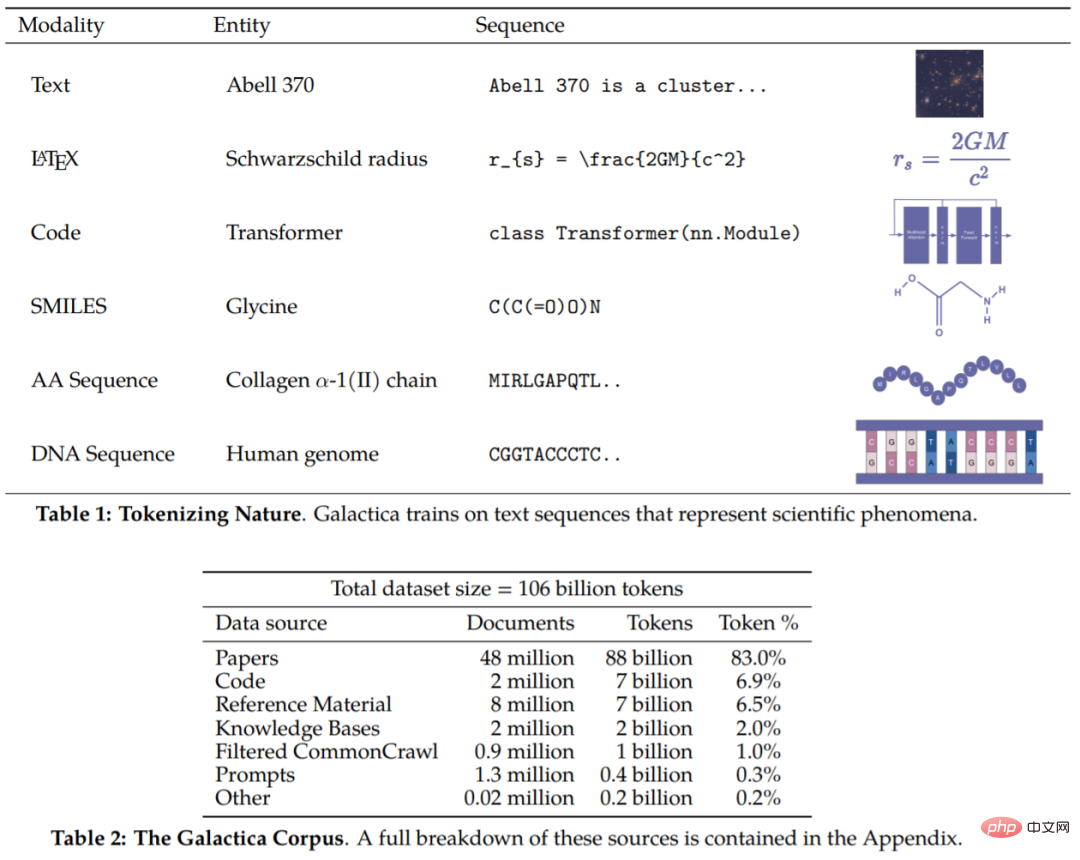

The corpus used in this work contains 106 billion tokens, drawn from papers, references, encyclopedias, and other scientific materials. It covers both natural language resources (papers, reference books) and sequences found in nature (protein sequences, chemical formulas). Details of the corpus are shown in Tables 1 and 2.

With the corpus in place, the next question is how to process the data. Tokenization design matters a great deal here. For example, if protein sequences are written in terms of amino acid residues, then character-based tokenization is appropriate. The study therefore applies specialized tokenization to each modality. Examples include (but are not limited to):

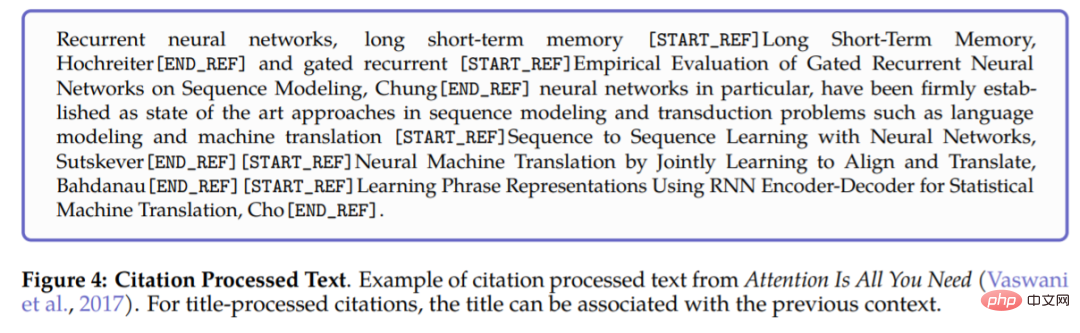

- Reference: Use special reference tokens [START_REF] and [END_REF] to wrap references;

- Stepwise reasoning: Use working memory tokens (`<work>` in the paper) to wrap step-by-step reasoning and simulate an internal working memory context;

- Numbers: Divide numbers into separate tokens. For example, 737612.62 → 7,3,7,6,1,2,.,6,2;

- SMILES formula: Wrap the sequence with [START_SMILES] and [END_SMILES] and apply character-based tokenization. Likewise, the study uses [START_I_SMILES] and [END_I_SMILES] to mark isomeric SMILES. For example: C(C(=O)O)N → C, (, C, (, =, O, ), O, ), N;

- DNA sequence: Apply character-based tokenization that treats each nucleotide base as a token, with [START_DNA] and [END_DNA] as the start and end tokens. For example, CGGTACCCTC → C, G, G, T, A, C, C, C, T, C.
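The number, SMILES and DNA rules above can be sketched in a few lines of Python. This is only an illustration of the splitting rules as described in this article, not Galactica's actual tokenizer; the function names are hypothetical, and the plain-text handling of the wrapper tokens is an assumption.

```python
def split_number(number_text: str) -> list[str]:
    """Split a number into one token per character (digits and the decimal point)."""
    return list(number_text)


def tokenize_wrapped(sequence: str, start: str, end: str) -> list[str]:
    """Character-level tokens for a sequence, wrapped in its start/end markers."""
    return [start, *sequence, end]


def tokenize_smiles(smiles: str) -> list[str]:
    # Hypothetical helper: one token per SMILES character.
    return tokenize_wrapped(smiles, "[START_SMILES]", "[END_SMILES]")


def tokenize_dna(dna: str) -> list[str]:
    # Hypothetical helper: one token per nucleotide base.
    return tokenize_wrapped(dna, "[START_DNA]", "[END_DNA]")


if __name__ == "__main__":
    print(split_number("737612.62"))
    print(tokenize_smiles("C(C(=O)O)N"))
    print(tokenize_dna("CGGTACCCTC"))
```

Keeping the wrapper tokens as single list elements, rather than splitting them into characters, mirrors the idea that they act as special tokens marking where each modality starts and ends.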

Figure 4 below shows an example of processing the references in a paper. References are handled with global identifiers, and the special tokens [START_REF] and [END_REF] mark where each reference occurs.
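As a rough sketch of this idea, the snippet below wraps a reference identifier in the two special tokens and recovers the wrapped identifiers from text. The identifier string and the function names are hypothetical placeholders; the exact form of Galactica's global identifiers is not given in this article.

```python
import re

# Matches anything wrapped in the reference tokens; non-greedy so that
# several references in one passage are captured separately.
REF_PATTERN = re.compile(r"\[START_REF\](.*?)\[END_REF\]")


def wrap_reference(identifier: str) -> str:
    """Wrap a reference identifier in the special reference tokens."""
    return f"[START_REF]{identifier}[END_REF]"


def cite(sentence: str, identifier: str) -> str:
    """Append a wrapped reference to the sentence that cites it."""
    return f"{sentence} {wrap_reference(identifier)}"


def extract_references(text: str) -> list[str]:
    """Return every identifier wrapped in reference tokens, in order."""
    return REF_PATTERN.findall(text)


if __name__ == "__main__":
    # The identifier below is a made-up placeholder, not a real citation.
    text = cite("Prior work studied this effect.", "Hypothetical Paper Title, Author")
    print(text)
    print(extract_references(text))
```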

After the dataset is processed, the next step is implementation. Galactica makes the following modifications to the Transformer architecture:

- GeLU activation: Use GeLU activation for models of all sizes;

- Context window: Use a context window of length 2048 for models of all sizes;

- No bias: Following PaLM, no biases are used in the dense kernels or layer norms;

- Learned positional embeddings: Learned positional embeddings are used for the model;

- Vocabulary: Use BPE to build a vocabulary containing 50k tokens.
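The choices listed above can be collected into a small configuration object. This is a minimal sketch for illustration only: the class and field names are hypothetical, "50k" is taken to mean 50,000, and the hidden size passed to the helper is an arbitrary example value rather than one from Table 5.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GalacticaStyleConfig:
    """Architecture choices as described in the article (names are hypothetical)."""

    activation: str = "gelu"              # GeLU activation for all model sizes
    context_length: int = 2048            # context window of 2048 tokens
    use_bias: bool = False                # no biases in dense kernels or layer norms
    positional_embedding: str = "learned" # learned positional embeddings
    vocab_size: int = 50_000              # BPE vocabulary of 50k tokens

    def embedding_parameters(self, d_model: int) -> int:
        """Parameters in the token table plus the learned position table."""
        token_table = self.vocab_size * d_model
        position_table = self.context_length * d_model
        return token_table + position_table


if __name__ == "__main__":
    config = GalacticaStyleConfig()
    # d_model=1024 is an arbitrary illustrative width, not a value from the paper.
    print(config)
    print(config.embedding_parameters(d_model=1024))
```

With learned positional embeddings, the position table adds `context_length * d_model` parameters, a small amount next to the token table for a 50k vocabulary.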

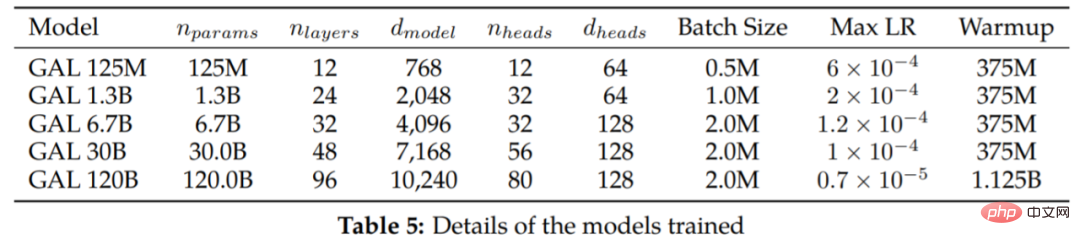

Table 5 lists models of different sizes and training hyperparameters.

Experiment

Repeated tokens are considered harmless

As Figure 6 shows, the validation loss continues to decrease over four epochs of training. The 120B-parameter model only starts to overfit at the beginning of the fifth epoch. This is unexpected, because existing research suggests that repeated tokens can harm performance. The study also found that the 30B and 120B models exhibit an epoch-wise double descent effect, where the validation loss plateaus (or rises) and then declines again. This effect becomes stronger with each epoch, most notably for the 120B model towards the end of training.

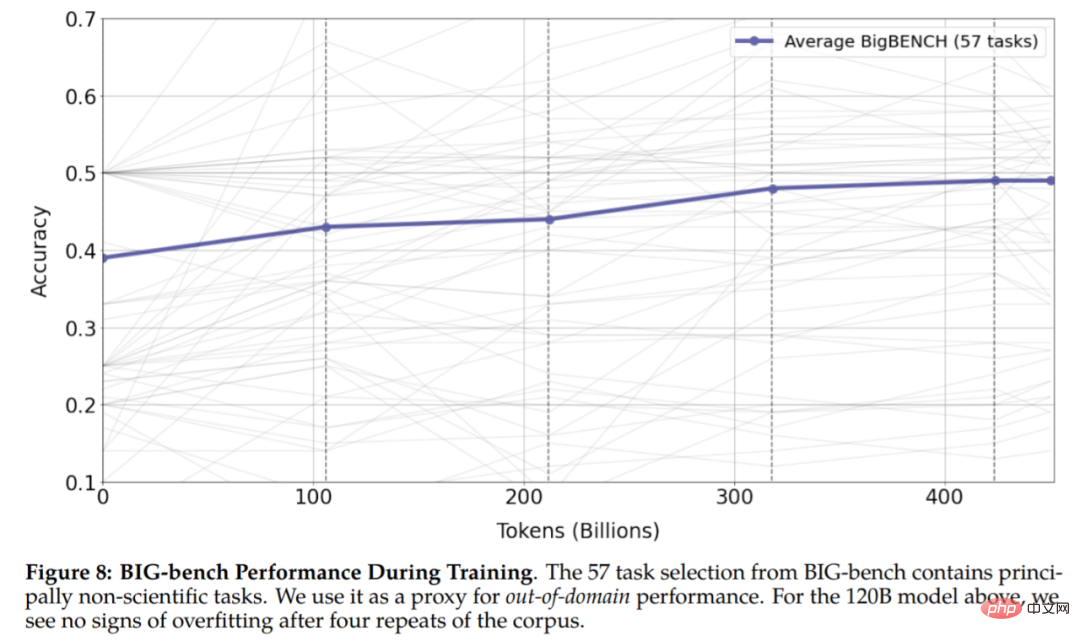

The results in Figure 8 show no sign of overfitting in the experiment, which suggests that repeated tokens can improve performance on both upstream and downstream tasks.

Other results

It’s too slow to type the formula, now use the prompt LaTeX can be generated:

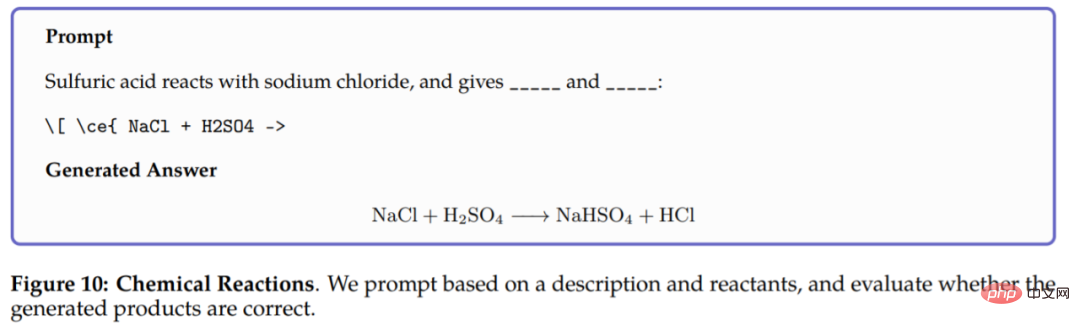

In a chemical reaction, Galactica is required to predict the product of the reaction in the chemical equation LaTeX. The model can be based only on the reactants. Making inferences, the results are as follows:

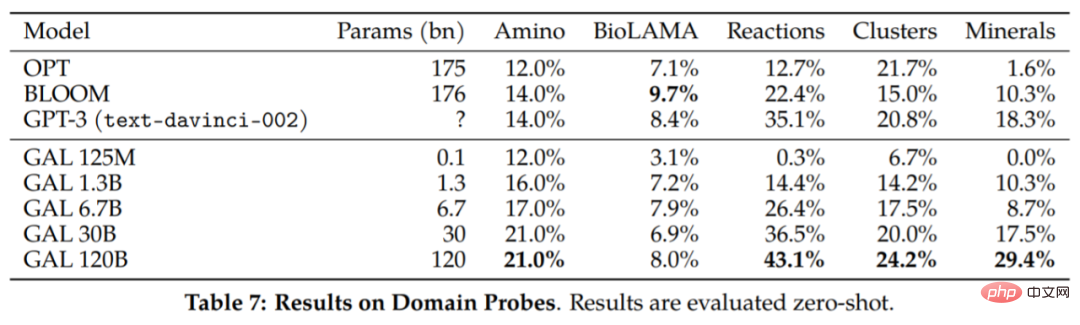

Some additional results are reported in Table 7:

Galactica's reasoning abilities: the model is first evaluated on the MMLU mathematics benchmark, and the results are reported in Table 8. Galactica performs strongly compared to the larger base models, and using `<work>` tokens appears to improve performance over Chinchilla, even for the smaller 30B Galactica model.

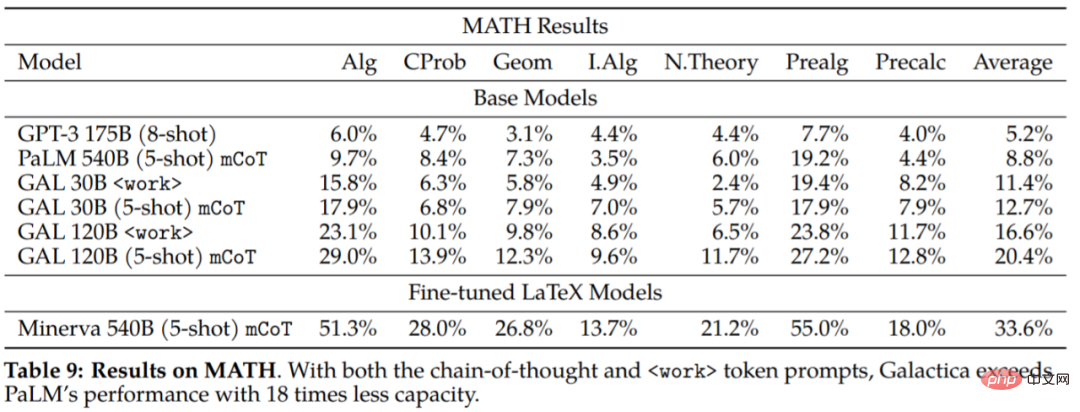

The study also evaluated the model on the MATH dataset to further explore Galactica’s reasoning capabilities:

The experimental results show that Galactica is much better than the base PaLM model with both chain-of-thought and working-memory-token prompts. This suggests that Galactica is a better choice for handling mathematical tasks.

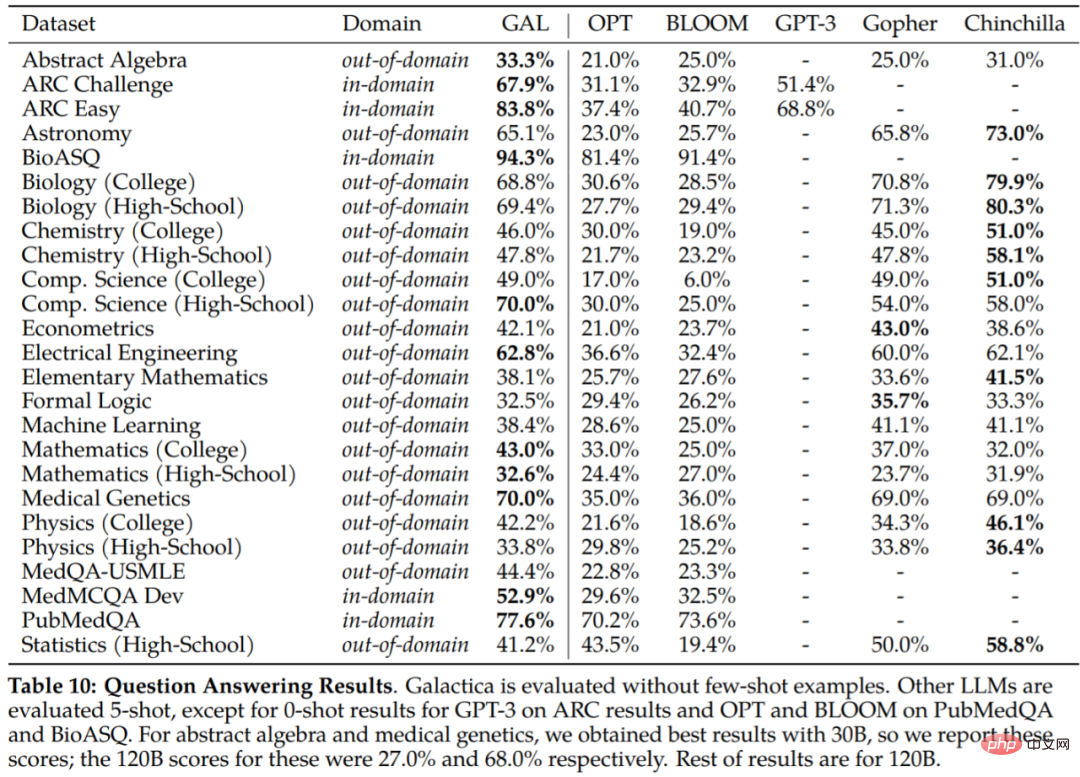

The evaluation results on downstream tasks are shown in Table 10. Galactica significantly outperforms other language models and outperforms a larger model (Gopher 280B) on most tasks. Results against Chinchilla are more mixed: Chinchilla appears to be stronger on a subset of tasks, particularly high school subjects and less mathematical, more memorization-intensive tasks. In contrast, Galactica tends to perform better on math and graduate-level tasks.

The study also evaluated Galactica’s ability to predict citations given an input context, an important test of its ability to organize scientific literature. The results are as follows:

For more experimental content, please refer to the original paper.
