A single GPU runs a ChatGPT-scale model, and ControlNet is another powerful tool for AI drawing


Contents

- Transformer models: an introduction and catalog

- High-throughput Generative Inference of Large Language Models with a Single GPU

- Temporal Domain Generalization with Drift-Aware Dynamic Neural Networks

- Large-scale physically accurate modeling of real proton exchange membrane fuel cell with deep learning

- A Comprehensive Survey on Pretrained Foundation Models: A History from BERT to ChatGPT

- Adding Conditional Control to Text-to-Image Diffusion Models

- EVA3D: Compositional 3D Human Generation from 2D Image Collections

- ArXiv Weekly Radiostation: more selected NLP, CV, and ML papers (with audio)

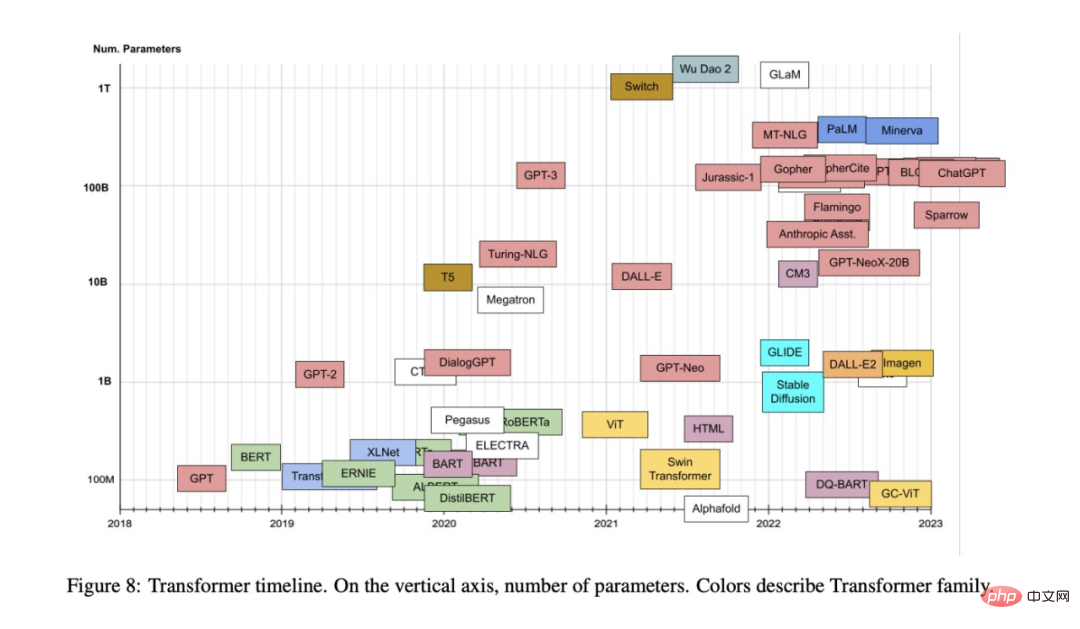

Paper 1: Transformer models: an introduction and catalog

- Author: Xavier Amatriain

- Paper address: https://arxiv.org/pdf/2302.07730.pdf

Abstract: Since it was proposed in 2017, the Transformer model has shown unprecedented strength in fields such as natural language processing and computer vision, and has triggered technological breakthroughs such as ChatGPT. Many variants of the original model have also been proposed.

As academia and industry keep proposing new models based on the Transformer attention mechanism, it can be difficult to keep an overview of this direction. A recent comprehensive article by Xavier Amatriain, head of AI product strategy at LinkedIn, may help solve this problem.

Recommendation: The goal of this article is to provide a relatively comprehensive yet simple catalog and classification, and to introduce the most important aspects and innovations of the Transformer model.

Paper 2: High-throughput Generative Inference of Large Language Models with a Single GPU

- Author: Ying Sheng et al

- Paper address: https://github.com/FMInference/FlexGen/blob/main/docs/paper.pdf

Traditionally, the high computational and memory requirements of large language model (LLM) inference have necessitated the use of multiple high-end AI accelerators. This study explores how to reduce the requirements of LLM inference to a consumer-grade GPU while still achieving practical performance. Recently, new research from Stanford University, UC Berkeley, ETH Zurich, Yandex, HSE University (the Higher School of Economics in Moscow), Meta, Carnegie Mellon University, and other institutions proposed FlexGen, a high-throughput generation engine for running LLMs with limited GPU memory. The figure below shows the design idea of FlexGen: it uses block scheduling to reuse weights and overlap I/O with computation, as shown in panel (b), while other baseline systems use inefficient row-by-row scheduling, as shown in panel (a).

Recommendation: Running a ChatGPT-scale model now takes only a single GPU: here is a method that promises a hundredfold speedup.
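To make the difference between row-by-row and block scheduling more concrete, here is a toy cost model in Python. It is only an illustrative sketch, not FlexGen's code: the function names, the idea of counting one "weight load" per layer fetch, and the `block_size` parameter are all assumptions made for this example, and the model ignores the overlap of I/O with computation.

```python
from math import ceil


def row_by_row_weight_loads(num_layers: int, num_batches: int) -> int:
    """Toy model (assumption): each batch walks through every layer on its
    own, so a layer's weights are fetched once per batch."""
    loads = 0
    for _batch in range(num_batches):
        for _layer in range(num_layers):
            loads += 1  # fetch this layer's weights for a single batch
    return loads


def block_weight_loads(num_layers: int, num_batches: int, block_size: int) -> int:
    """Toy model (assumption): batches are grouped into blocks, and within a
    block a layer's weights are fetched once and reused by every batch."""
    loads = 0
    num_blocks = ceil(num_batches / block_size)
    for _block in range(num_blocks):
        for _layer in range(num_layers):
            loads += 1  # one fetch, shared by all batches in the block
    return loads


if __name__ == "__main__":
    layers, batches, block = 4, 8, 4
    print(row_by_row_weight_loads(layers, batches))    # 32
    print(block_weight_loads(layers, batches, block))  # 8
```

In this simplified count, grouping 8 batches into blocks of 4 cuts the number of weight fetches from 32 to 8, which is the kind of weight reuse the paragraph above attributes to block scheduling.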

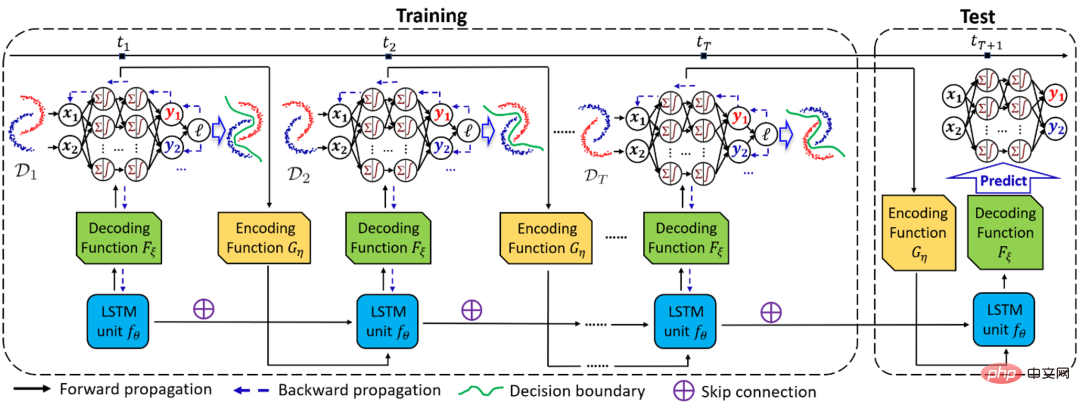

Paper 3: Temporal Domain Generalization with Drift-Aware Dynamic Neural Networks

- Author: Guangji Bai et al

- Paper address: https://arxiv.org/pdf/2205.10664.pdf

In domain generalization (DG) tasks, when the domain distribution changes continuously with the environment, accurately capturing that change and its effect on the model is a very important but extremely challenging problem. To this end, Professor Zhao Liang's team at Emory University proposed DRAIN, a temporal domain generalization framework based on Bayesian theory. It uses a recurrent network to learn the drift of the domain distribution along the time dimension, and combines dynamic neural networks with graph generation techniques to maximize the model's expressive power and to generalize and predict on unseen future domains. This work was selected as an ICLR 2023 Oral (top 5% among accepted papers). The following is a schematic diagram of the overall framework of DRAIN.

Recommendation: Powered by drift-aware dynamic neural networks, the new temporal domain generalization framework far outperforms existing domain generalization and adaptation methods.

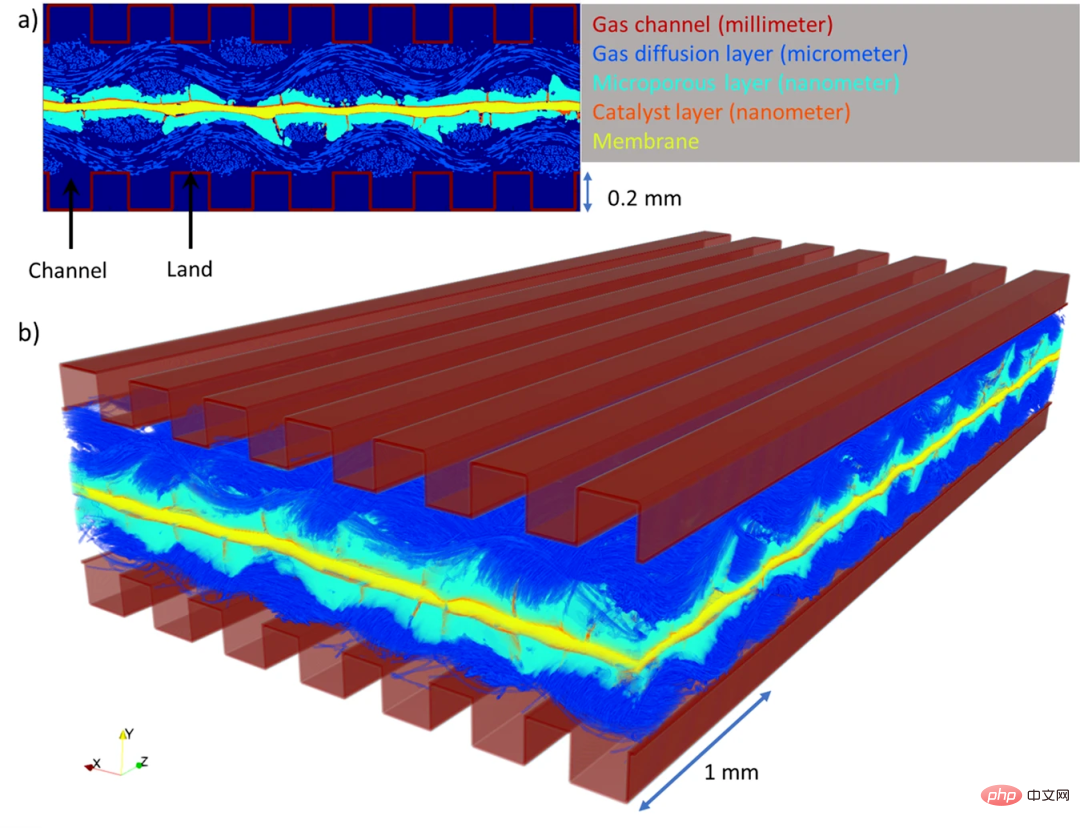

Paper 4: Large-scale physically accurate modeling of real proton exchange membrane fuel cell with deep learning

- Author: Ying Da Wang et al

- Paper address: https://www.nature.com/articles/s41467-023-35973-8

Abstract: To secure energy supplies and combat climate change, attention has shifted from fossil fuels to clean, renewable energy. Hydrogen, with its high energy density and clean, low-carbon properties, can play an important role in the energy transition. Hydrogen fuel cells, especially proton exchange membrane fuel cells (PEMFCs), are key to this green revolution thanks to their high energy conversion efficiency and zero-emission operation.

PEMFC converts hydrogen into electricity through an electrochemical process, with the only by-product of the reaction being pure water. However, PEMFCs can become inefficient if water cannot flow out of the cell properly and subsequently "floods" the system. Until now, it has been difficult for engineers to understand the precise manner in which water drains or accumulates inside fuel cells because they are so small and complex.
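For reference, the electrochemical process mentioned above can be written as the standard textbook half-reactions of a hydrogen fuel cell. These equations are general background and are not taken from the paper itself:

```latex
\begin{align}
\text{Anode:}\quad   & \mathrm{H_2} \rightarrow 2\,\mathrm{H^+} + 2\,e^- \\
\text{Cathode:}\quad & \tfrac{1}{2}\,\mathrm{O_2} + 2\,\mathrm{H^+} + 2\,e^- \rightarrow \mathrm{H_2O} \\
\text{Overall:}\quad & \mathrm{H_2} + \tfrac{1}{2}\,\mathrm{O_2} \rightarrow \mathrm{H_2O}
\end{align}
```

The water produced at the cathode is the by-product that has to drain out of the cell to avoid flooding.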

Recently, a research team from the University of New South Wales in Sydney developed a deep learning algorithm (DualEDSR) to improve understanding of the conditions inside a PEMFC. It can generate high-resolution modeling images from lower-resolution X-ray micro-computed tomography scans. The process has been tested on a single hydrogen fuel cell, allowing its interior to be accurately modeled and potentially improving its efficiency. The figure below shows the PEMFC domains generated in this study.

Recommendation: Deep learning enables large-scale, physically accurate modeling of the interior of fuel cells, helping to improve fuel cell performance.

Paper 5: A Comprehensive Survey on Pretrained Foundation Models: A History from BERT to ChatGPT

- Author: Ce Zhou et al

- Paper address: https://arxiv.org/pdf/2302.09419.pdf

Abstract: This nearly 100-page survey traces the evolution of pretrained foundation models, showing how ChatGPT became successful step by step.

Recommendation: From BERT to ChatGPT, a hundred-page survey traces the evolution of pretrained large models.

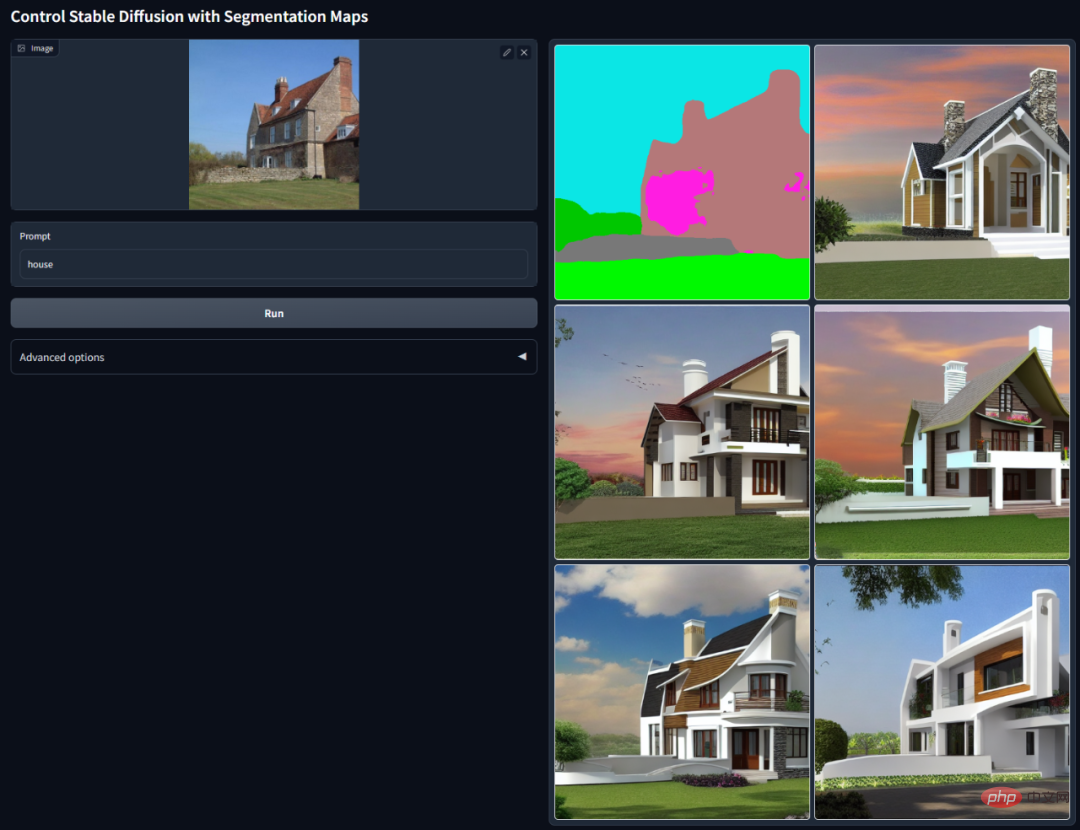

Paper 6: Adding Conditional Control to Text-to-Image Diffusion Models

- Author: Lvmin Zhang et al

- Paper address: https://arxiv.org/pdf/2302.05543.pdf

Abstract: This paper proposes ControlNet, an end-to-end neural network architecture that improves image-to-image generation by adding extra conditions to control diffusion models (such as Stable Diffusion). It can generate full-color images from line drawings, generate images with the same depth structure, and improve hand generation using hand keypoints.

Recommendation: AI deals an overwhelming blow to human painters: ControlNet is introduced into text-to-image generation, fully reusing depth and edge information.

Paper 7: EVA3D: Compositional 3D Human Generation from 2D Image Collections

- Author: Fangzhou Hong et al

- Paper address: https://arxiv.org/abs/2210.04888

Abstract: At ICLR 2023, the S-Lab team at the Nanyang Technological University-SenseTime Joint Research Center proposed EVA3D, the first method to learn high-resolution 3D human body generation from a collection of 2D images. Thanks to the differentiable rendering provided by NeRF, recent 3D generative models have achieved stunning results on rigid objects. However, for a more complex and deformable category such as the human body, 3D generation still poses great challenges.

This paper proposes an efficient compositional NeRF representation of the human body, achieving high-resolution (512x256) 3D human body generation without using a super-resolution model. EVA3D significantly outperforms existing solutions on four large-scale human body datasets, and the code has been open-sourced.

Recommendation: ICLR 2023 Spotlight | Imagine a 3D human body from 2D images, then dress it in any clothes and change its pose.

ArXiv Weekly Radiostation

Heart of Machine collaborates with ArXiv Weekly Radiostation, initiated by Chu Hang, Luo Ruotian, and Mei Hongyuan. Beyond the 7 Papers above, it selects more of this week's important papers, including 10 selected papers in each of the NLP, CV, and ML fields, and provides audio introductions to the paper abstracts. The details are as follows:

7 NLP Papers

This week’s 10 selected NLP papers are:

1. Active Prompting with Chain-of-Thought for Large Language Models. (from Tong Zhang)

2. Prosodic features improve sentence segmentation and parsing. (from Mark Steedman)

3. ProsAudit, a prosodic benchmark for self-supervised speech models. (from Emmanuel Dupoux)

4. Exploring Social Media for Early Detection of Depression in COVID-19 Patients. (from Jie Yang)

5. Federated Nearest Neighbor Machine Translation. (from Enhong Chen)

6. SPINDLE: Spinning Raw Text into Lambda Terms with Graph Attention. (from Michael Moortgat)

7. A Neural Span-Based Continual Named Entity Recognition Model. (from Qingcai Chen)

10 CV Papers

This week’s 10 selected CV papers are:

1. MERF: Memory-Efficient Radiance Fields for Real-time View Synthesis in Unbounded Scenes. (from Richard Szeliski, Andreas Geiger)

2. Designing an Encoder for Fast Personalization of Text-to-Image Models. (from Daniel Cohen-Or)

3. Teaching CLIP to Count to Ten. (from Michal Irani)

4. Evaluating the Efficacy of Skincare Product: A Realistic Short-Term Facial Pore Simulation. (from Weisi Lin)

5. Real-Time Damage Detection in Fiber Lifting Ropes Using Convolutional Neural Networks. (from Moncef Gabbouj)

6. Embedding Fourier for Ultra-High-Definition Low-Light Image Enhancement. (from Chen Change Loy)

7. Region-Aware Diffusion for Zero-shot Text-driven Image Editing. (from Changsheng Xu)

8. Side Adapter Network for Open-Vocabulary Semantic Segmentation. (from Xiang Bai)

9. VoxFormer: Sparse Voxel Transformer for Camera-based 3D Semantic Scene Completion. (from Sanja Fidler)

10. Object-Centric Video Prediction via Decoupling of Object Dynamics and Interactions. (from Sven Behnke)

10 ML Papers

This week’s 10 selected ML papers are:

1. normflows: A PyTorch Package for Normalizing Flows. (from Bernhard Schölkopf)

2. Concept Learning for Interpretable Multi-Agent Reinforcement Learning. (from Katia Sycara)

3. Random Teachers are Good Teachers. (from Thomas Hofmann)

4. Aligning Text-to-Image Models using Human Feedback. (from Craig Boutilier, Pieter Abbeel)

5. Change is Hard: A Closer Look at Subpopulation Shift. (from Dina Katabi)

6. AlpaServe: Statistical Multiplexing with Model Parallelism for Deep Learning Serving. (from Zhifeng Chen)

7. Diverse Policy Optimization for Structured Action Space. (from Hongyuan Zha)

8. The Geometry of Mixability. (from Robert C. Williamson)

9. Does Deep Learning Learn to Abstract? A Systematic Probing Framework. (from Nanning Zheng)

10. Sequential Counterfactual Risk Minimization. (from Julien Mairal)
