Why don't Tesla's humanoid robots look like humans? Understanding the impact of the uncanny valley effect on robotics companies

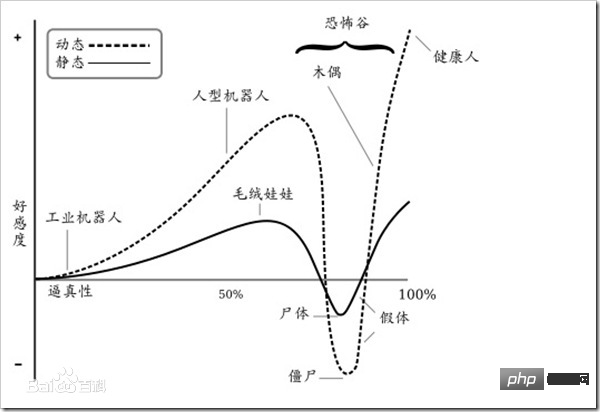

In 1970, robotics expert Masahiro Mori first described the "uncanny valley", a concept that has had a huge impact on the field of robotics. The uncanny valley effect describes the positive and negative reactions people show when they see human-like objects, especially robots.

The uncanny valley theory holds that the more human-like a robot looks and acts, the stronger our empathy for it becomes. At some point, however, when a robot or virtual character becomes very lifelike yet still not quite human, our brain's visual processing systems get confused, and we sink into a strongly negative emotional state toward it.

Mori's hypothesis states that because robots resemble humans in appearance and action, people feel positively toward them. Once the resemblance reaches a certain level, however, people's reactions suddenly turn extremely negative, even to the point of revulsion. At that stage, even slight differences from a real human become conspicuous and jarring, so the whole robot feels stiff and frightening, like facing the walking dead. If the resemblance keeps increasing until it matches the similarity between ordinary people, emotional reactions turn positive again, producing the kind of empathy people feel toward one another.

Where the uncanny valley effect really makes a difference, however, is in how people today interact with robots: it has been shown to change how we perceive humanoid robots. In a 2016 paper in Cognition, a leading international cognitive science journal, Maya Mathur and David Reichling described their research on human reactions to robot faces and digitally synthesized faces. They found an uncanny valley effect in these responses, and even found that it affects how trustworthy people judge robots and avatars to be.

Karl MacDorman, professor of human-computer interaction at Indiana University-Purdue University Indianapolis (IUPUI), said: "The impact of the uncanny valley effect on the design and development direction of robots is very obvious: it slows down the progress of robot development. The uncanny valley effect has become a rule that keeps robot designers from exploring a high degree of human likeness in human-computer interaction."

For MacDorman and other researchers, the uncanny valley effect must be addressed in order to accelerate the adoption of robots into society.

Being like humans creates more problems

To find out why, Dartmouth College researchers Christine Looser and Thalia Wheatley evaluated people's reactions to a series of simulated faces in 2010. The realism of these faces ranged from completely human to completely doll-like. The researchers found that participants perceived the simulated faces as human only when the faces were 65 percent or more human-like.

Companies developing robots are now incorporating such findings into their designs and taking proactive steps to keep the uncanny valley effect from hurting market acceptance of their robotic technologies. Alex Diel, a researcher at Cardiff University's School of Psychology who studies the uncanny valley, says one possible approach is to avoid it altogether.

"Many companies avoid the uncanny valley altogether by using mechanical looks and movements instead of human-like ones," Diel said. This means companies intentionally strip human-like features from robots, such as lifelike faces or eyes, or design their movements to be distinctly non-human.

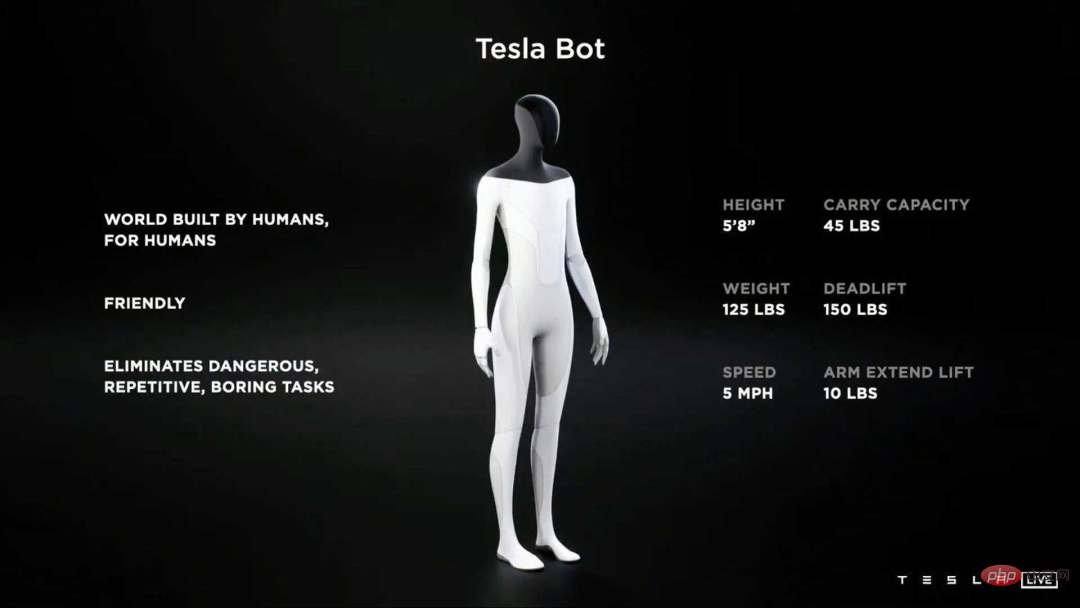

Diel cites the Tesla robot, a concept robot unveiled by the electric-car maker, as an example. Although it is a humanoid robot, it was not given a human face, which ensures that the brain's facial processing system does not treat it as a distorted version of a human face.

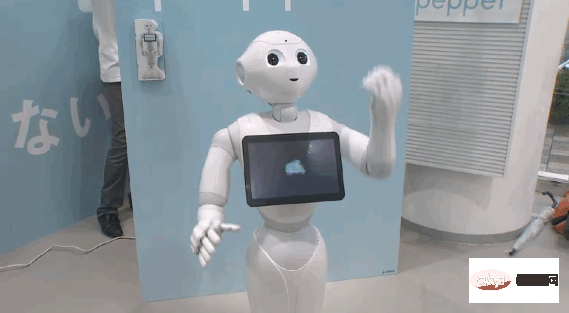

Another way to mitigate the uncanny valley effect is to give the robot a cartoon-like design that looks human and has a certain appeal but does not appear too real. Diel points to Pepper, a robot made by SoftBank Robotics, as one product that takes this route.

Neuropsychologist Sarah Weigelt, who studies the neural basis of visual perception at the Department of Rehabilitation Sciences at the University of Dortmund in Germany, said: "Cuteness is very useful. If something is cute, you won't be afraid of it; you'll want to interact with it."

If companies can't make a robot cute, they usually signal in other ways that it is not human. Weigelt said some companies do this by giving the robot a non-human skin color or by deliberately leaving mechanical parts of its body exposed. This removes any doubt about whether the unfamiliar object might be human, sidestepping the uncanny valley effect.

While companies work hard to avoid falling into the uncanny valley, they sometimes try to climb through the "valley" to the other side, making robots indistinguishable from humans. However, MacDorman said, this brings its own set of problems.

"As robots look more and more human, people's expectations of them rise. They expect interactions with machines to come close to the level of interactions between humans." MacDorman said that if robots fail to deliver this level of performance, it could harm a company's reputation and revenue.

The uncanny valley effect does more than hurt a company's market performance; it can also keep funding from reaching fledgling robotics companies. MacDorman worked in a robotics lab in Japan, where robots are widely accepted by society and government. In fact, robots are an integral part of Japanese society, even serving as caregivers in a country whose population is aging and shrinking.

Precisely because Japanese society is so accepting of robots, the uncanny valley effect is all the more dangerous there. MacDorman said anything that damages public perception of robots is anathema, and government agencies are reluctant to fund projects that come too close to the "uncanny valley".

Through the “Uncanny Valley”

However, the uncanny valley effect is not all doom and gloom. Some robotics applications don't need to worry about it at all.

Conor McGinn, CEO of Akara Robotics, said: "The companies that take the uncanny valley effect into account are usually those that build social robots." Akara Robotics builds cleaning robots for front-line workers in hospitals and other institutions.

"Robotics companies developing platforms for more practical tasks, such as autonomous food delivery or factory logistics, are unlikely to consider the uncanny valley effect," McGinn added. The latest data seem to support this view. According to the Association for Advancing Automation (A3), robot orders in North America rose 67% in the second quarter of 2021 compared with the second quarter of 2020. More than half of these orders came from companies other than the manufacturers that typically focus on robotics research and development.

There's good reason to believe that more human-like features can actually make social robots more effective. "When people anthropomorphize a robot, they rationalize its behavior from a human perspective," McGinn said. "Robot designers can take advantage of this tendency to make the robot's behavior easier for people to understand."

He gave the example of a company developing a robot receptionist. Adjustable facial expressions can sometimes push people into the uncanny valley, but they may also help signal to people approaching the receptionist that they have been noticed.

According to Hadas Kress-Gazit and colleagues, writing in the September 2021 issue of Communications, it is very important for social robots to follow social norms. However, the authors note, "one of the challenges is how to encode social norms and other behavioral constraints as formal constraints in robotic systems."

A threat to social robotics companies?

McGinn says it's possible that people will one day overcome the uncanny valley effect entirely. One argument holds that the next step should be to develop lifelike social robots that can communicate fluently with humans and follow social norms while retaining enough artificial features to avoid the uncanny valley.

MacDorman said popular commercial robots such as Sony's Aibo and SoftBank's Nao and Pepper can already achieve this goal. "They have the basic characteristics needed for a social robot - a torso, arms, a head with eyes and a mouth, and the ability to express emotions," he said. "This is enough for many applications."

Moreover, forgoing extreme realism can save a great deal of money. This trade-off between realism and cost can be seen as an economic problem that humanoid robots often run into. "Realistic robots are suited to high-cost, specialized settings, such as patient simulators in medical schools," MacDorman said. "The realism of the robots helps students train, so when they work on real people, they feel like they're already experts, and their performance improves."

That means we will see humanoid robots in high-cost areas despite the uncanny valley effect. Even so, MacDorman says, people may still find reasons not to accept these robots. For example, a robot may one day be indistinguishable from a human, yet a user may still reject it because it looks like someone they recently broke up with.

MacDorman said: "The uncanny valley effect reflects not only the relationship between human-machine similarity and acceptance, but also the relationship between the eeriness and coldness an object evokes and the degree to which people lose empathy for it." In other words, we should worry less about the uncanny valley effect itself and more about developing robots that form real connections with humans.

Over time, such hopes may well become reality. Diel says we will likely get used to robots whose features are as lifelike as those of the people who design them, though he admits that may take some time. First, robots will need to become more commonplace in our daily lives.

"Once these very lifelike robots become part of the norm, they will no longer seem to deviate from it," Diel said.

Weigelt agrees. She expects people's relationships with lifelike robots to change as such robots become more commonplace. "The uncanny valley effect will change in the future. The shudder we feel today may disappear."
