With only so much computing power, how can language model performance be improved? Google has a new idea

In recent years, language models (LMs) have become increasingly prominent in natural language processing (NLP) research and increasingly influential in practice. In general, scaling up model size has been shown to improve performance across a range of NLP tasks.

However, the challenges of scaling up are also obvious: training new, larger models requires a lot of compute. In addition, new models are often trained from scratch and cannot reuse the trained weights of previous models.

To address this problem, Google researchers explored two complementary methods that significantly improve the performance of existing language models without consuming much additional compute.

First, in the paper "Transcending Scaling Laws with 0.1% Extra Compute", the researchers introduced UL2R, a lightweight second stage of pre-training that uses a mixture-of-denoisers objective. UL2R improves performance across a range of tasks and even unlocks emergent performance on tasks that previously had near-random performance.

Paper link: https://arxiv.org/pdf/2210.11399.pdf

In addition, in "Scaling Instruction-Finetuned Language Models", we explore fine-tuning language models on a collection of datasets phrased as instructions, a process we call "Flan". This approach not only improves performance but also improves the language model's usability in responding to user input.

Paper link: https://arxiv.org/abs/2210.11416

Finally, Flan and UL2R can be combined as complementary techniques in a model called Flan-U-PaLM 540B, which outperforms the unadapted PaLM 540B model by 10% across a suite of challenging evaluation benchmarks.

Training of UL2R

Traditionally, most language models are pre-trained either on a causal language modeling objective, which trains the model to predict the next word in a sequence (e.g., GPT-3 or PaLM), or on a denoising objective, in which the model learns to recover the original sentence from a corrupted sequence of words (e.g., T5).

There are trade-offs between these language modeling objectives: causal language models are better at long-form generation, while models trained on a denoising objective are better for fine-tuning. However, in previous work the researchers showed that a mixture-of-denoisers objective that includes both objectives achieves better performance in both scenarios.

However, pre-training large language models from scratch on a different objective is computationally expensive. Therefore, we propose UL2 Repair (UL2R), an additional stage that continues pre-training with the UL2 objective and requires only a relatively small amount of compute.
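To make the difference between these objectives concrete, here is a minimal Python sketch of how one token sequence could be turned into (input, target) pairs for a causal LM objective, a T5-style span-corruption objective, and a prefix-LM objective, and how a mixture of denoisers could sample among them per example. The corruption rates, span lengths, sentinel names (`<extra_id_N>`), mode tags (`[NLU]`, `[NLG]`, `[S2S]`), and uniform sampling are illustrative assumptions, not the exact UL2 configuration used for UL2R.

```python
import random
from typing import List, Tuple

# (model input tokens, target tokens)
Example = Tuple[List[str], List[str]]


def causal_lm(tokens: List[str]) -> Example:
    """Causal LM (GPT-3/PaLM style): no separate input; the model learns to
    predict every token of the target from the tokens before it."""
    return [], list(tokens)


def span_corruption(tokens: List[str], rng: random.Random,
                    corrupt_rate: float = 0.15, span_len: int = 3) -> Example:
    """T5-style denoising: hide random spans behind sentinel tokens. The
    target lists each sentinel followed by the tokens it replaced.
    Assumes a non-empty token list."""
    n = len(tokens)
    n_noise = max(1, round(n * corrupt_rate))
    masked = [False] * n
    while sum(masked) < n_noise:
        start = rng.randrange(n)
        for i in range(start, min(n, start + span_len)):
            masked[i] = True

    inputs, targets, sentinel, i = [], [], 0, 0
    while i < n:
        if masked[i]:
            tok = f"<extra_id_{sentinel}>"
            sentinel += 1
            inputs.append(tok)
            targets.append(tok)
            while i < n and masked[i]:  # copy the whole hidden span
                targets.append(tokens[i])
                i += 1
        else:
            inputs.append(tokens[i])
            i += 1
    return inputs, targets


def prefix_lm(tokens: List[str], rng: random.Random) -> Example:
    """Sequential denoising (prefix LM): continue the text from a random split.
    Assumes at least two tokens."""
    split = rng.randrange(1, len(tokens))
    return tokens[:split], tokens[split:]


def mixture_of_denoisers(tokens: List[str], rng: random.Random) -> Example:
    """Pick one denoiser per example and prepend a mode tag to the input.
    Rates, span lengths, tags, and uniform sampling are illustrative."""
    mode = rng.choice(["R", "X", "S"])
    if mode == "R":    # regular: short spans, low corruption rate
        inp, tgt = span_corruption(tokens, rng, corrupt_rate=0.15, span_len=3)
        tag = "[NLU]"
    elif mode == "X":  # extreme: long spans, heavy corruption
        inp, tgt = span_corruption(tokens, rng, corrupt_rate=0.5, span_len=8)
        tag = "[NLG]"
    else:              # sequential: prefix-LM continuation
        inp, tgt = prefix_lm(tokens, rng)
        tag = "[S2S]"
    return [tag] + inp, tgt


if __name__ == "__main__":
    rng = random.Random(0)
    toks = "the quick brown fox jumps over the lazy dog".split()
    for _ in range(3):
        print(mixture_of_denoisers(toks, rng))
```

In this sketch the same raw text feeds every objective; only the way it is split into input and target changes, which is why a continued-training stage such as UL2R can reuse existing pre-training data.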

We apply UL2R to PaLM and call the resulting new language model U-PaLM.

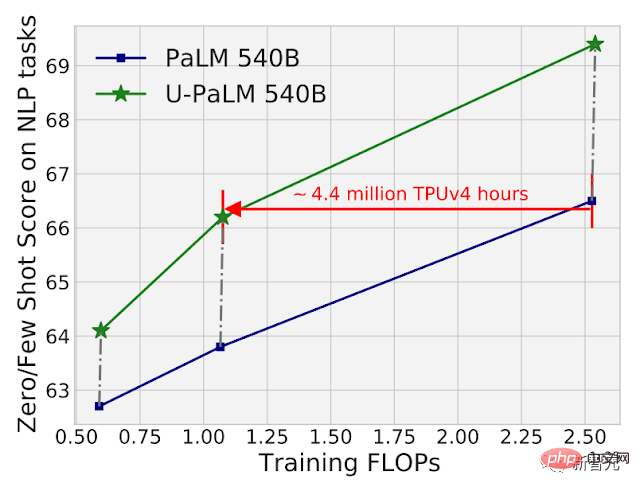

In our empirical evaluation, we found that with only a small amount of UL2 training, the model improved significantly.

For example, by applying UL2R to an intermediate checkpoint of PaLM 540B, the performance of the final PaLM 540B checkpoint can be reached using only half the compute. Applying UL2R to the final PaLM 540B checkpoint also brings substantial improvements.

Compute versus model performance of PaLM 540B and U-PaLM 540B on 26 NLP benchmarks. U-PaLM 540B continues training PaLM for a very small amount of compute but delivers a substantial gain in performance.

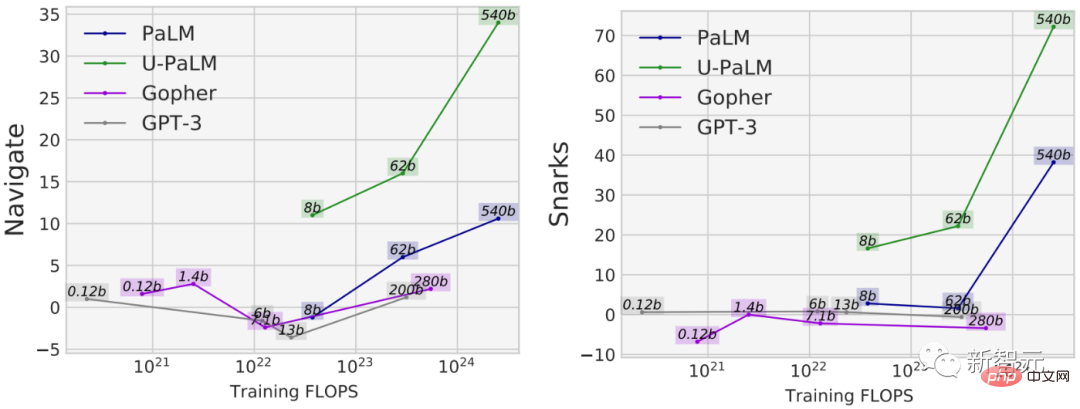

Another benefit of UL2R is that on some tasks it performs much better than models trained purely on a causal language modeling objective. For example, many BIG-Bench tasks exhibit so-called "emergent abilities", which are abilities that only appear in sufficiently large language models.

While the most common way to discover emergent abilities is by scaling up the model, UL2R can actually elicit emergent abilities without increasing model scale.

For example, on the Navigate task from BIG-Bench, which measures a model's ability to perform state tracking, all models except U-PaLM that were trained with fewer than 10^23 FLOPs achieve roughly random performance. Another example is BIG-Bench's Snarks task, which measures a model's ability to detect sarcastic language.

Both of these BIG-Bench tasks exhibit emergent task performance, and U-PaLM achieves that emergent performance at a smaller model scale thanks to the UL2R objective.

Instruction fine-tuning

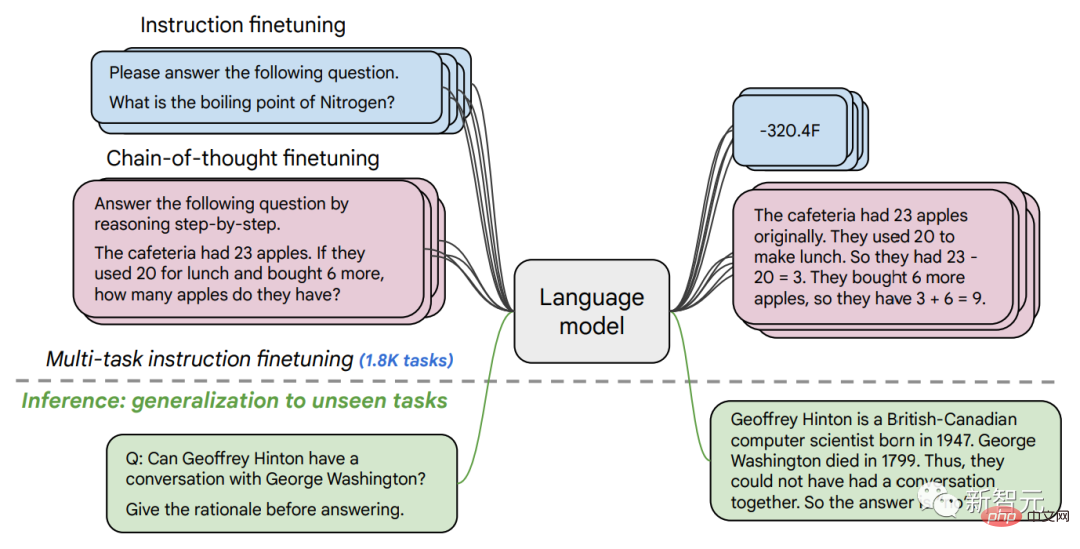

In the second paper, we explore instruction fine-tuning, which involves fine-tuning LMs on a collection of NLP datasets phrased as instructions.

In previous work, we applied instruction fine-tuning to a 137B-parameter model on 62 NLP tasks, such as answering a short question, classifying the sentiment of a movie review, or translating a sentence into Spanish.

In this work, we fine-tune a 540B-parameter language model on more than 1.8K tasks. Furthermore, previous work fine-tuned language models either with few-shot exemplars (e.g., MetaICL) or zero-shot without exemplars (e.g., FLAN, T0), whereas we fine-tune on a combination of both.

We also include chain-of-thought fine-tuning data, which enables the model to perform multi-step reasoning. We call our improved methodology "Flan", for fine-tuning language models.

It is worth noting that even when fine-tuning on 1.8K tasks, Flan uses only a small fraction of the compute required for pre-training (for PaLM 540B, Flan requires only 0.2% of the pre-training compute).

Language models are fine-tuned on 1.8K tasks phrased as instructions and evaluated on unseen tasks that are not included in fine-tuning. Fine-tuning is performed with and without exemplars (i.e., zero-shot and few-shot) and with and without chain of thought, enabling the model to generalize across a range of evaluation scenarios.
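As a rough illustration of the four fine-tuning formats described above (zero-shot or few-shot, each with or without chain of thought), the sketch below renders one task into (prompt, completion) training pairs. The `Exemplar` type, the "Q:/A:" template, and the "Let's think step by step." trigger phrase are hypothetical choices for illustration; the actual Flan templates vary by task and are not reproduced here.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Exemplar:
    question: str
    answer: str
    rationale: Optional[str] = None  # chain-of-thought explanation, if available


def render_answer(ex: Exemplar, cot: bool) -> str:
    """Answer text, optionally preceded by the step-by-step rationale."""
    if cot and ex.rationale:
        return f"{ex.rationale} So the answer is {ex.answer}."
    return ex.answer


def format_example(instruction: str, target: Exemplar,
                   shots: List[Exemplar], cot: bool) -> Tuple[str, str]:
    """Build one (prompt, completion) training pair.

    - shots == []  -> zero-shot; shots non-empty -> few-shot
    - cot == True  -> exemplars and completion include a rationale
    """
    parts = [instruction]
    parts += [f"Q: {s.question}\nA: {render_answer(s, cot)}" for s in shots]
    question = f"Q: {target.question}\nA:"
    if cot and not shots:
        question += " Let's think step by step."  # zero-shot CoT trigger
    parts.append(question)
    return "\n\n".join(parts), " " + render_answer(target, cot)


if __name__ == "__main__":
    shot = Exemplar("What is 2 + 3?", "5", "2 plus 3 equals 5.")
    task = Exemplar("What is 4 + 4?", "8", "4 plus 4 equals 8.")
    for shots in ([], [shot]):          # zero-shot, few-shot
        for cot in (False, True):       # without / with chain of thought
            prompt, completion = format_example(
                "Answer the arithmetic question.", task, shots, cot)
            print(prompt + completion, end="\n---\n")
```

Mixing all four variants of each task into one training set is what lets a single fine-tuned model handle both exemplar-free and exemplar-based prompts at inference time.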

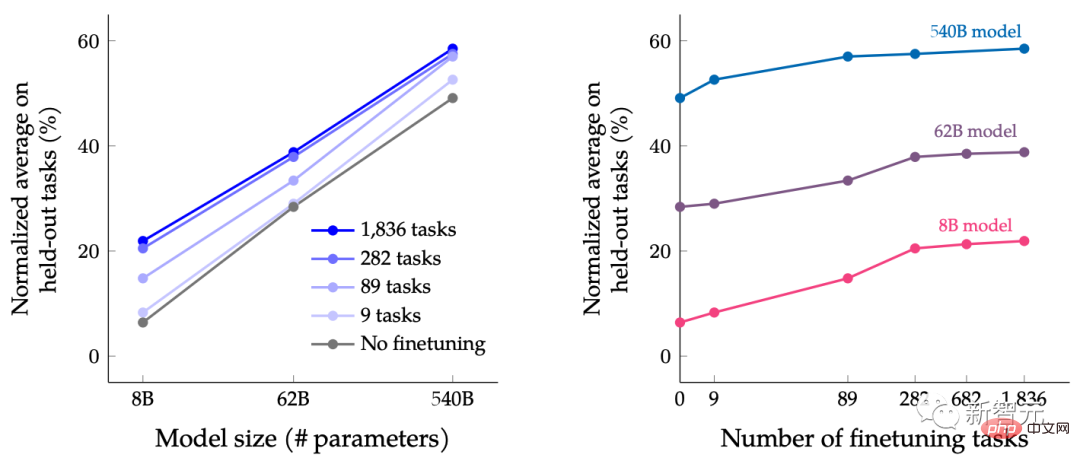

In this paper, we instruction-finetune LMs of a range of sizes to study the joint effect of scaling both the size of the language model and the number of fine-tuning tasks.

For example, for the PaLM family of language models, this includes the 8B, 62B, and 540B parameter sizes. We evaluate the models on four challenging benchmark suites (MMLU, BBH, TyDiQA, and MGSM) and find that both scaling the number of parameters and scaling the number of fine-tuning tasks improve performance on new, previously unseen tasks.

Scaling the model to 540B parameters and using 1.8K fine-tuning tasks both improve performance. The y-axis of the figure above is the normalized average over the four evaluation suites (MMLU, BBH, TyDiQA, and MGSM).
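The caption does not spell out how the normalized average is computed. As one plausible reading, the sketch below assumes each suite's score is first rescaled so that random-chance performance maps to 0 and a perfect score maps to 100, and the rescaled scores are then averaged with equal weight. The normalization scheme, the baseline values, and the function names are assumptions for illustration, not the paper's definition.

```python
from typing import Dict


def normalize(score: float, random_baseline: float, max_score: float = 100.0) -> float:
    """Rescale a score so random chance maps to 0 and a perfect score to 100.
    (Assumed normalization; the paper's exact definition may differ.)"""
    return 100.0 * (score - random_baseline) / (max_score - random_baseline)


def normalized_average(scores: Dict[str, float],
                       random_baselines: Dict[str, float]) -> float:
    """Equal-weight mean of per-suite normalized scores.
    Suites missing from `random_baselines` are assumed to have a 0 baseline."""
    normed = [normalize(s, random_baselines.get(name, 0.0))
              for name, s in scores.items()]
    return sum(normed) / len(normed)


if __name__ == "__main__":
    # Placeholder numbers for illustration only, NOT results from the paper.
    scores = {"MMLU": 62.5, "BBH": 50.0, "TyDiQA": 40.0, "MGSM": 30.0}
    baselines = {"MMLU": 25.0}  # MMLU is 4-way multiple choice; others assumed 0
    print(normalized_average(scores, baselines))  # prints 42.5
```

Normalizing against chance before averaging keeps a suite with a high random baseline, such as four-way multiple choice, from dominating the combined number.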

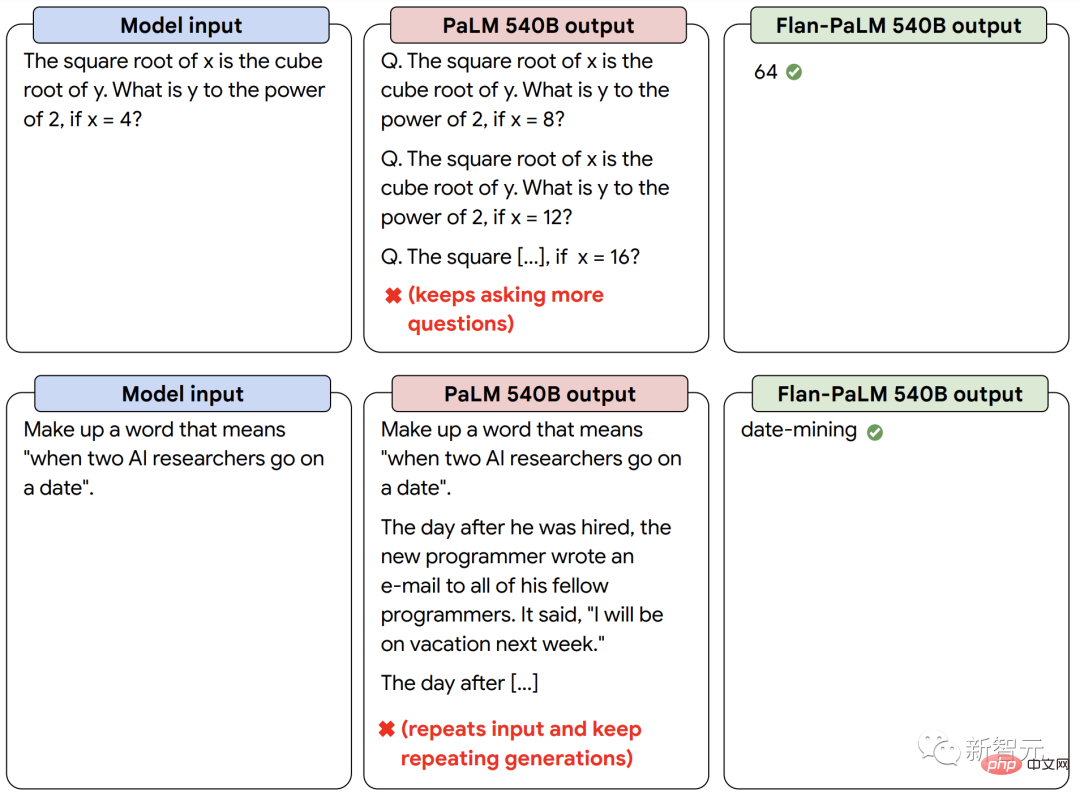

In addition to better performance, instruction-finetuned LMs can respond to user instructions at inference time without requiring few-shot exemplars or prompt engineering. This makes LMs more user-friendly across a range of inputs. For example, LMs without instruction fine-tuning sometimes repeat the input or fail to follow instructions, and instruction fine-tuning mitigates such errors.

Our instruction fine-tuned language model Flan-PaLM responds better to instructions than the PaLM model without instruction fine-tuning.

Combining strengths to achieve "1+1>2"

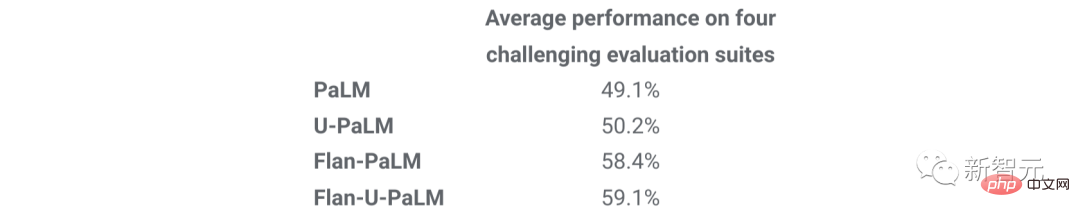

Finally, we show that UL2R and Flan can be combined to train the Flan-U-PaLM model.

Since Flan uses new data from NLP tasks and enables zero-shot instruction following, we apply Flan as the second method, after UL2R.

We again evaluate on the four benchmark suites and find that the Flan-U-PaLM model outperforms PaLM models with only UL2R (U-PaLM) or only Flan (Flan-PaLM). Furthermore, when combined with chain-of-thought prompting and self-consistency, Flan-U-PaLM achieves a new state of the art on the MMLU benchmark with a score of 75.4%.
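Self-consistency, as generally described, samples several chain-of-thought completions for the same question and returns the most frequent final answer. The sketch below shows only that voting step. `sample_fn` is a hypothetical stand-in for a temperature-sampled model call, and the "the answer is X" extraction pattern is an assumption about the output format.

```python
import re
from collections import Counter
from typing import Callable, Optional

# Assumed output format: the completion states "... the answer is X" or "(X)".
ANSWER_RE = re.compile(r"answer is\s*\(?([A-Za-z0-9]+)\)?")


def extract_answer(completion: str) -> Optional[str]:
    """Return the last 'answer is X' value found in a completion, or None."""
    matches = ANSWER_RE.findall(completion)
    return matches[-1] if matches else None


def self_consistency(sample_fn: Callable[[str], str], prompt: str,
                     n: int = 8) -> Optional[str]:
    """Sample n chain-of-thought completions and majority-vote on the answers.
    `sample_fn` stands in for a (hypothetical) temperature-sampled model call."""
    answers = (extract_answer(sample_fn(prompt)) for _ in range(n))
    votes = Counter(a for a in answers if a is not None)
    return votes.most_common(1)[0][0] if votes else None


if __name__ == "__main__":
    canned = iter([
        "3 + 4 = 7. So the answer is (B).",
        "3 + 4 = 8. So the answer is (C).",
        "Adding 3 and 4 gives 7. So the answer is (B).",
    ])
    print(self_consistency(lambda _: next(canned), "Q: ...", n=3))  # prints B
```

Voting over final answers rather than over full reasoning texts means different reasoning paths that reach the same answer reinforce each other.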

Compared with using only UL2R (U-PaLM) or only Flan (Flan-PaLM), combining UL2R and Flan (Flan-U-PaLM) leads to the best performance, measured as the normalized average over the four evaluation suites (MMLU, BBH, TyDiQA, and MGSM).

In general, UL2R and Flan are two complementary methods for improving pre-trained language models. UL2R adapts the LM to a mixture-of-denoisers objective using the same data, while Flan leverages training data from over 1.8K NLP tasks to teach the model to follow instructions.

As language models get larger, techniques like UL2R and Flan, which improve general performance without requiring heavy computation, may become increasingly attractive.
