New ideas for AI painting: Domestic open source new model with 5 billion parameters, achieving a leap in synthetic controllability and quality

- Paper address: https://arxiv.org/pdf/2302.09778v2.pdf

- Project address: https://github.com/damo-vilab/composer

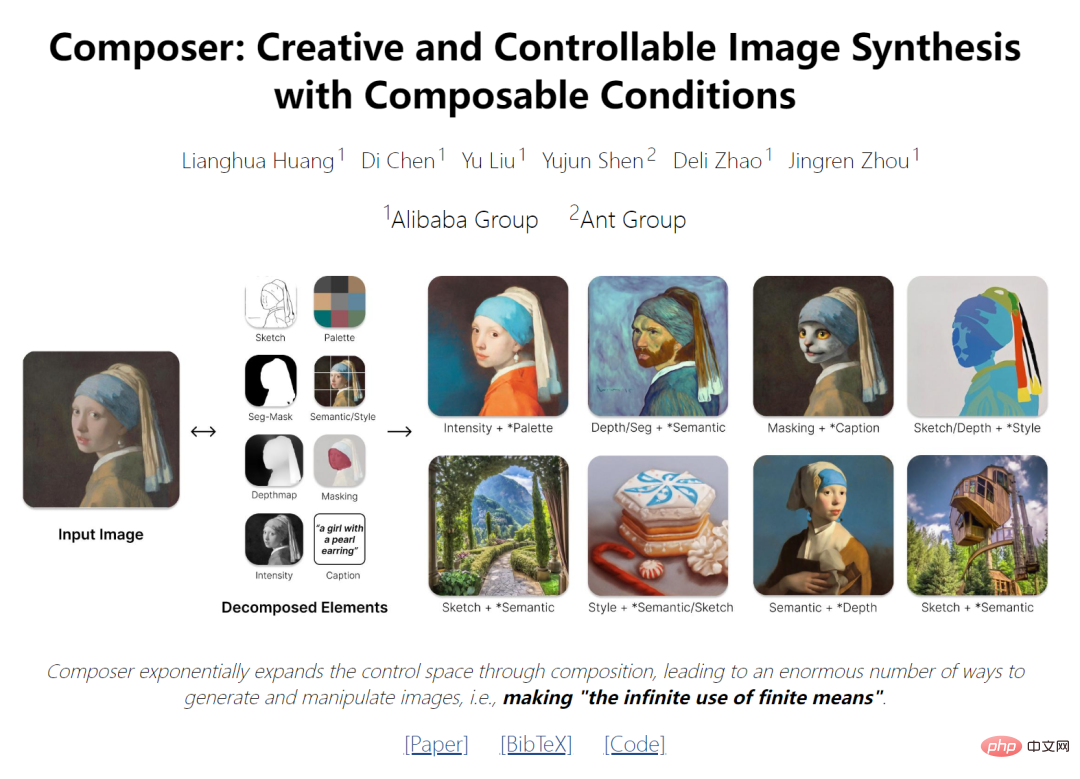

The latest research provides a new generative paradigm that allows flexible control over the output image (such as its spatial layout and color palette) while maintaining synthesis quality and model creativity.

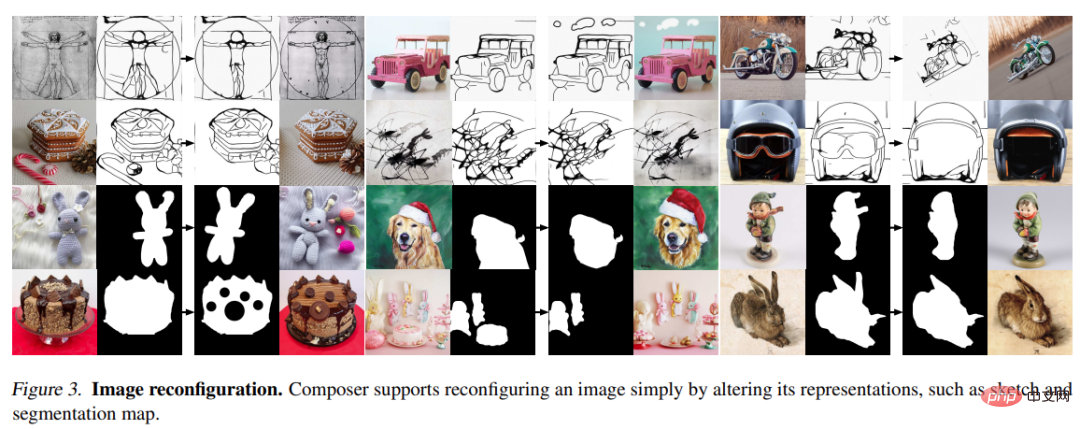

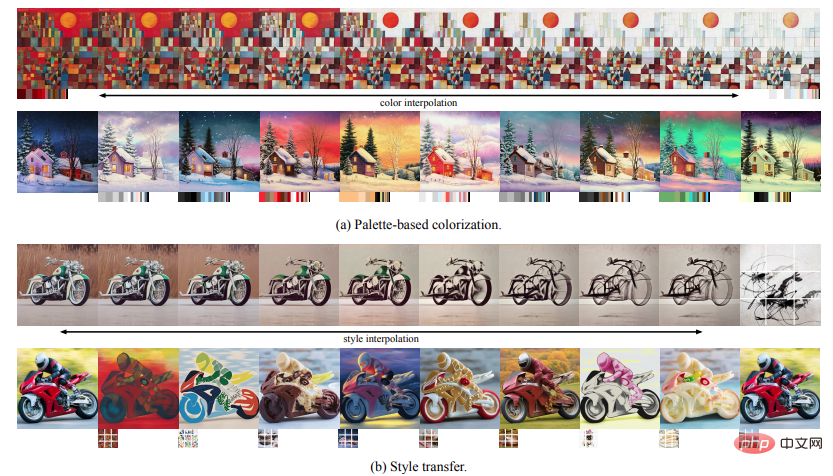

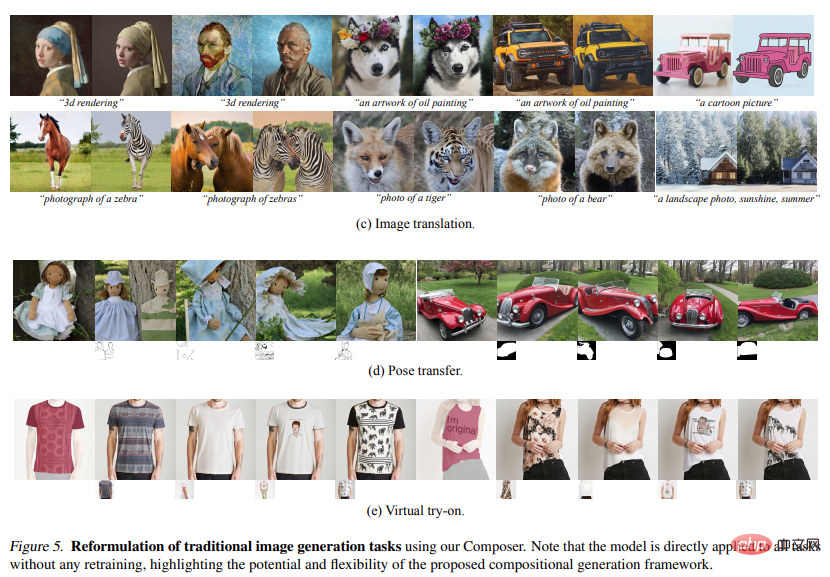

This research takes compositionality as its core idea. It first decomposes the image into representative factors, and then trains a diffusion model conditioned on these factors to recompose the input. During inference, the rich intermediate representations serve as composable elements, opening up a huge design space for customizable content creation (one that grows exponentially with the number of decomposed factors). Notably, the method, named Composer, supports conditions at various levels, such as text descriptions as global information, depth maps and sketches as local guidance, and color histograms as low-level details.

In addition to improving controllability, the study confirms that Composer can serve as a general framework that facilitates a wide range of classical generation tasks without the need for retraining.

Method

The framework introduced in this article includes a decomposition stage (the image is divided into a set of independent components) and a composition stage (the components are recombined using a conditional diffusion model). Here we first briefly introduce diffusion models and the guidance directions enabled by Composer, and then detail the implementation of image decomposition and composition.

Diffusion model

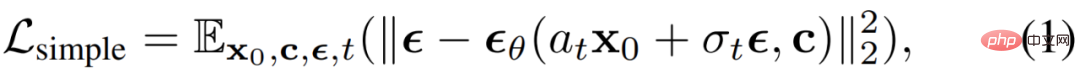

The diffusion model is a generative model that generates data from Gaussian noise through an iterative denoising process. generate data. Usually a simple mean square error is used as the denoising target:

where x_0 is an optional condition For the training data of c,

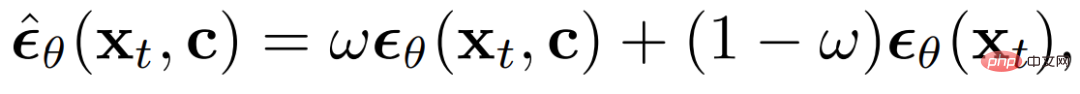

is additive Gaussian noise, a_t and σ_t are scalar functions of t, and  is a diffusion model with learnable parameters θ. Classifier-free bootstrapping has been most widely used in recent work for conditional data sampling of diffusion models, where the predicted noise is adjusted by:

is a diffusion model with learnable parameters θ. Classifier-free bootstrapping has been most widely used in recent work for conditional data sampling of diffusion models, where the predicted noise is adjusted by:

In the formula

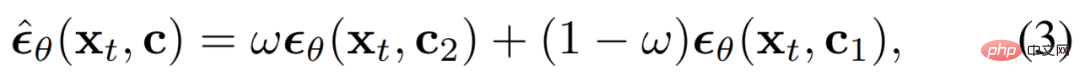
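The noising step, the denoising error, and the classifier-free guidance adjustment described above can be sketched in a few lines. This is only an illustration under stated assumptions: plain Python lists stand in for image tensors, the function names are hypothetical, and the model predictions are passed in as ready-made lists rather than produced by a real network.

```python
import random


def add_noise(x0, a_t, sigma_t, rng):
    """Return (x_t, eps) where x_t = a_t * x0 + sigma_t * eps and eps ~ N(0, I)."""
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    x_t = [a_t * x + sigma_t * e for x, e in zip(x0, eps)]
    return x_t, eps


def squared_error(predicted_eps, true_eps):
    """Squared L2 distance between predicted and true noise (the denoising objective)."""
    return sum((p - t) ** 2 for p, t in zip(predicted_eps, true_eps))


def classifier_free_guidance(eps_cond, eps_uncond, omega):
    """Combine conditional and unconditional noise predictions with weight omega."""
    return [omega * c + (1.0 - omega) * u for c, u in zip(eps_cond, eps_uncond)]
```

With ω = 1 the adjusted prediction equals the conditional one, and values above 1 push the sample further toward the condition.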

Guidance direction: Composer is a diffusion model that accepts a variety of conditions, which enables classifier-free guidance in various directions:

$$\hat{\epsilon}_\theta(x_t, c) = \omega\,\epsilon_\theta(x_t, c_2) + (1 - \omega)\,\epsilon_\theta(x_t, c_1)$$

where c_1 and c_2 are two sets of conditions. Different choices of c_1 and c_2 place different emphasis on the conditions. Conditions within (c_2 \ c_1) are emphasized with a guidance weight of ω, conditions within (c_1 \ c_2) are suppressed with a guidance weight of (1−ω), and conditions within c_1 ∩ c_2 receive a guidance weight of 1.0.

Bidirectional guidance: By using condition c_1 to invert the image x_0 to the latent x_T, and then sampling from x_T using another condition c_2, Composer can manipulate the image in a disentangled way, where the direction of manipulation is defined by the difference between c_2 and c_1.
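As a rough sketch of the guidance-direction rule, the snippet below combines two noise predictions, one made under condition set c_2 and one under c_1, and lists the effective weight each named condition receives. The function names and the use of string sets for conditions are assumptions made for illustration, not part of Composer's code.

```python
def directional_guidance(eps_c2, eps_c1, omega):
    """Adjusted noise: omega * eps(x_t, c2) + (1 - omega) * eps(x_t, c1)."""
    return [omega * e2 + (1.0 - omega) * e1 for e2, e1 in zip(eps_c2, eps_c1)]


def guidance_weights(c1, c2, omega):
    """Effective guidance weight of each condition name, given sets c1 and c2."""
    weights = {}
    for name in c1 | c2:
        if name in c1 and name in c2:
            weights[name] = 1.0          # shared condition: omega + (1 - omega)
        elif name in c2:
            weights[name] = omega        # only in c2: emphasized
        else:
            weights[name] = 1.0 - omega  # only in c1: suppressed
    return weights
```

Setting c_1 to the empty set and c_2 to the full condition set reduces this rule to ordinary classifier-free guidance.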

Decomposition

The study decomposes images into decoupled representations that capture various aspects of an image, and describes the eight representations used in this task. All of them are extracted on the fly during training.

Caption: The study directly uses the title or description information in image-text training data (e.g., LAION-5B (Schuhmann et al., 2022)) as image captions. When annotations are not available, a pre-trained image captioning model can also be used. These captions are characterized using sentence and word embeddings extracted from the pre-trained CLIP ViT-L/14@336px (Radford et al., 2021) model.

Semantics and style: The study uses image embeddings extracted by the pre-trained CLIP ViT-L/14@336px model to characterize the semantics and style of images, similar to unCLIP.

Color: The study represents the color statistics of images using smoothed CIELab histograms. The CIELab color space is quantized into 11 hue values, 5 saturation values, and 5 lightness values, with a smoothing sigma of 10. Empirically, this setting works better.

Sketch: The study applies an edge detection model and then a sketch simplification algorithm to extract sketches of images. Sketches capture local details of an image with less semantics.

Instances: The study applies instance segmentation to images using the pre-trained YOLOv5 model to extract their instance masks. Instance segmentation masks reflect the category and shape information of visual objects.

Depth map: The study uses a pre-trained monocular depth estimation model to extract the depth map of an image, which roughly captures the image layout.

Intensity: The study introduces the raw grayscale image as a representation, forcing the model to learn to handle color as a disentangled degree of freedom. To introduce randomness, grayscale images are created by uniformly sampling from a set of predefined RGB channel weights.

Masking: The study introduces image masks so that Composer can restrict image generation or manipulation to an editable region. A 4-channel representation is used, where the first 3 channels correspond to the masked RGB image and the last channel corresponds to the binary mask.
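The randomized grayscale conversion can be sketched as follows. The weight sets in `CHANNEL_WEIGHTS` are placeholders chosen for illustration (the article does not list the actual predefined weights), and the image is represented as nested Python lists of RGB tuples rather than a tensor.

```python
import random

# Hypothetical predefined RGB channel weights; the real set is not given in the article.
CHANNEL_WEIGHTS = [
    (0.299, 0.587, 0.114),     # BT.601 luma weights
    (0.2126, 0.7152, 0.0722),  # BT.709 luma weights
    (1 / 3, 1 / 3, 1 / 3),     # plain channel average
]


def random_grayscale(image, rng):
    """Convert an H x W image of (r, g, b) tuples to grayscale.

    One weight triple is drawn uniformly at random per image, so the same
    picture can yield slightly different intensity maps across training steps.
    """
    w_r, w_g, w_b = rng.choice(CHANNEL_WEIGHTS)
    return [[w_r * r + w_g * g + w_b * b for (r, g, b) in row] for row in image]
```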
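A minimal sketch of the 4-channel mask representation is shown below. It assumes that a mask value of 1 marks the editable region (whose pixels are hidden) and 0 marks pixels that stay visible; the article does not state the convention, so this polarity and the function name are assumptions.

```python
def masked_representation(image, mask):
    """Build an H x W grid of (r, g, b, m) tuples.

    image: H x W list of (r, g, b) tuples.
    mask:  H x W list of 0/1 values; 1 = editable region (assumed convention).
    RGB values inside the editable region are zeroed out.
    """
    result = []
    for image_row, mask_row in zip(image, mask):
        row = []
        for (r, g, b), m in zip(image_row, mask_row):
            keep = 1.0 - float(m)  # 1.0 where the pixel stays visible
            row.append((r * keep, g * keep, b * keep, float(m)))
        result.append(row)
    return result
```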

It should be noted that although this article conducted experiments using the above eight conditions, users can freely customize the conditions using Composer.

Composition

The study uses a diffusion model to recompose images from a set of representations. Specifically, it builds on the GLIDE architecture and modifies its conditioning module. The study explores two different mechanisms for conditioning the model on the representations:

Global conditioning: For global representations including CLIP sentence embeddings, image embeddings, and color palettes, we project and add them to time-step embeddings. Additionally, we project image embeddings and color palettes into eight additional tokens and concatenate them with CLIP word embeddings, which are then used as context for cross-attention in GLIDE, similar to unCLIP. Since conditions are either additive or can be selectively masked in cross-attention, conditions can be dropped directly during training and inference, or new global conditions introduced.

Local conditioning: For local representations, including sketches, segmentation masks, depth maps, intensity images, and masked images, we use stacked convolutional layers to project them into uniform-dimensional embeddings with the same spatial size as the noisy latent x_t. The sum of these embeddings is then calculated and the result is concatenated to x_t, which is then fed into the UNet. Since the embeddings are additive, it is easy to accommodate missing conditions or incorporate new local conditions.
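The sum-then-concatenate step can be illustrated with the sketch below. It skips the stacked convolutional layers entirely and assumes each local condition has already been projected to an embedding of `embed_channels` channels; feature maps are lists of channels, each a flat list of pixel values. Treating a missing condition as contributing nothing to the sum is an assumption drawn from the additive design.

```python
def build_unet_input(x_t, local_embeddings, embed_channels):
    """Sum the local-condition embeddings and concatenate them to x_t by channel.

    x_t:              list of channels, each a flat list of pixel values.
    local_embeddings: list of embeddings, each with `embed_channels` channels
                      of the same spatial size as x_t (may be empty).
    """
    num_pixels = len(x_t[0])
    summed = [[0.0] * num_pixels for _ in range(embed_channels)]
    for embedding in local_embeddings:
        for c in range(embed_channels):
            for i in range(num_pixels):
                summed[c][i] += embedding[c][i]
    # Channel-wise concatenation: x_t channels first, then the summed embedding.
    return x_t + summed
```

Because the conditions are only ever summed, dropping one changes the values fed to the network but not the input shape.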

Joint training strategy: It is important to design a joint training strategy that enables the model to learn to decode images from various combinations of conditions. The study experimented with several configurations and identified a simple yet effective one that uses an independent dropout probability of 0.5 for each condition, a probability of 0.1 for dropping all conditions, and a probability of 0.1 for keeping all conditions. A special dropout probability of 0.7 is used for intensity images, since they contain the vast majority of information about the image and may weaken other conditions during training.

The base diffusion model produces images at 64 × 64 resolution. To generate high-resolution images, we trained two unconditional diffusion models for upsampling, which upsample images from 64 × 64 to 256 × 256 and from 256 × 256 to 1024 × 1024 resolution, respectively. The architecture of the upsampling models is modified from unCLIP: more channels are used in the low-resolution layers and self-attention blocks are introduced to expand the capacity. An optional prior model is also introduced that generates image embeddings from captions. Empirically, the prior model can improve the diversity of generated images under specific combinations of conditions.
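The dropout schedule above might be sampled per training example roughly as follows. How the three probabilities interact is an assumption here: the sketch first decides between "drop all" (0.1), "keep all" (0.1), and otherwise falls back to independent per-condition dropout. The function and parameter names are hypothetical.

```python
import random


def sample_kept_conditions(names, rng, p_drop=0.5, p_drop_all=0.1,
                           p_keep_all=0.1, special_drop=None):
    """Return the set of condition names kept for one training example."""
    if special_drop is None:
        special_drop = {"intensity": 0.7}  # intensity is dropped more often
    u = rng.random()
    if u < p_drop_all:
        return set()                       # unconditional example
    if u < p_drop_all + p_keep_all:
        return set(names)                  # fully conditioned example
    # Otherwise drop each condition independently with its own probability.
    return {n for n in names if rng.random() >= special_drop.get(n, p_drop)}


conditions = ["caption", "image_embedding", "color", "sketch",
              "instances", "depth", "intensity", "mask"]
kept = sample_kept_conditions(conditions, random.Random(0))
```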

Experiment

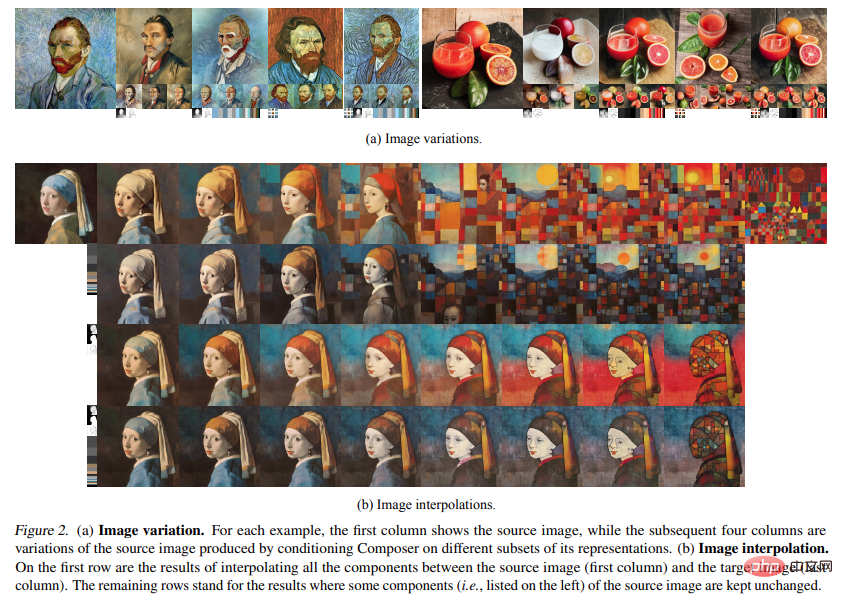

Variation: Using Composer, one can create new images that are similar to a given image but differ in some aspects, by conditioning on a specific subset of its representations. By carefully choosing combinations of different representations, one can flexibly control the range of image variation (Fig. 2a). After incorporating more conditions, the proposed method achieves higher reconstruction accuracy than unCLIP, which conditions only on image embeddings.
