The real hero behind ChatGPT: OpenAI chief scientist Ilya Sutskever's leap of faith

The emergence of ChatGPT has attracted much attention, but we should not forget the unknown genius behind it. Ilya Sutskever is the co-founder and chief scientist of OpenAI. It was under his leadership that OpenAI made significant progress in developing cutting-edge technologies and advancing the field of artificial intelligence.

In this article, we will explore how Sutskever went from a young researcher to one of the leading figures in the field of artificial intelligence in two decades. Whether you are an AI enthusiast, a researcher, or simply someone curious about the inner workings of this field, this article will provide valuable perspective and information.

This article follows this timeline:

2003: Ilya Sutskever’s apprenticeship journey

2011: First introduction to AGI

2012: The revolution in image recognition

2013: Auction of DNNresearch to Google

2014: The revolution in language translation

2015: From Google to OpenAI: A new chapter in artificial intelligence

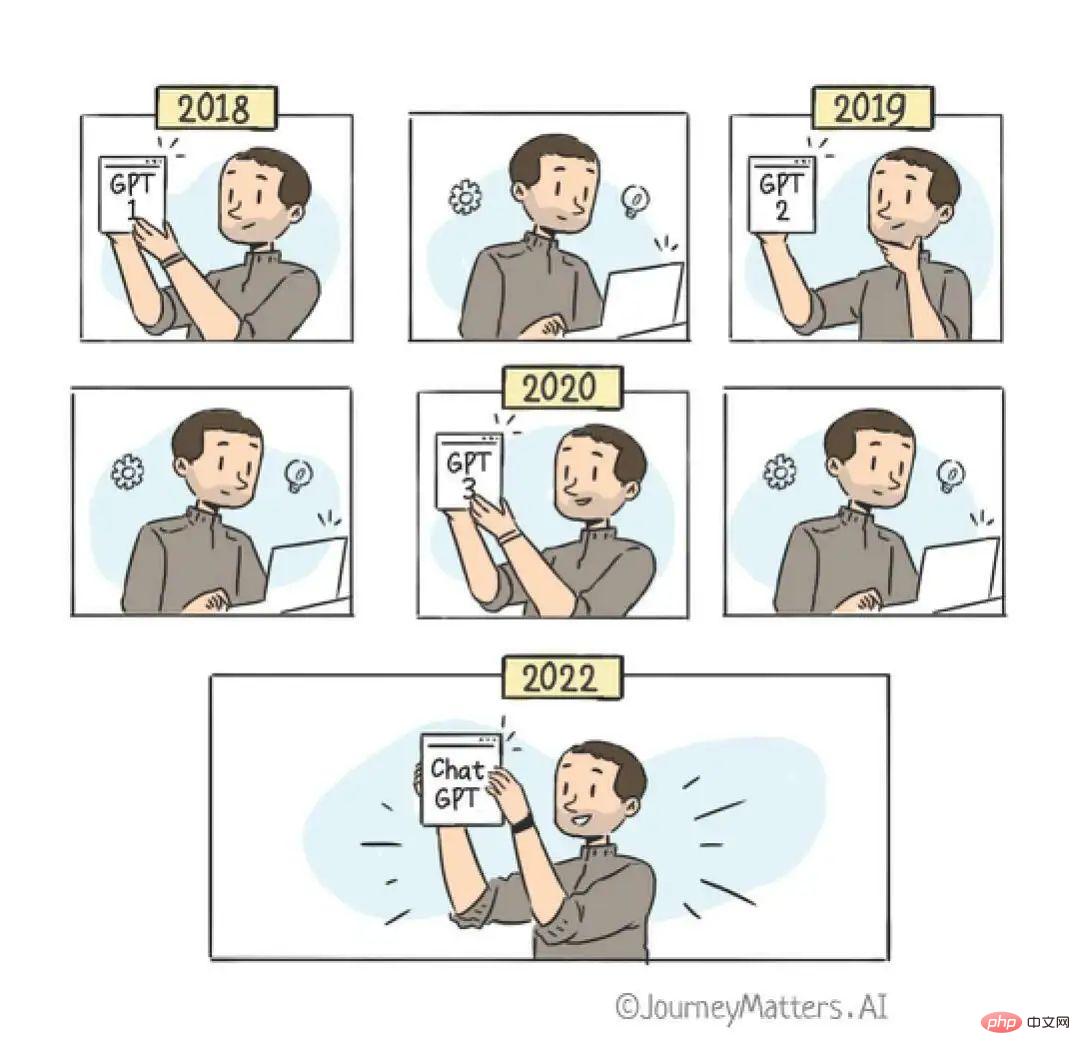

2018: GPT 1, 2 and 3

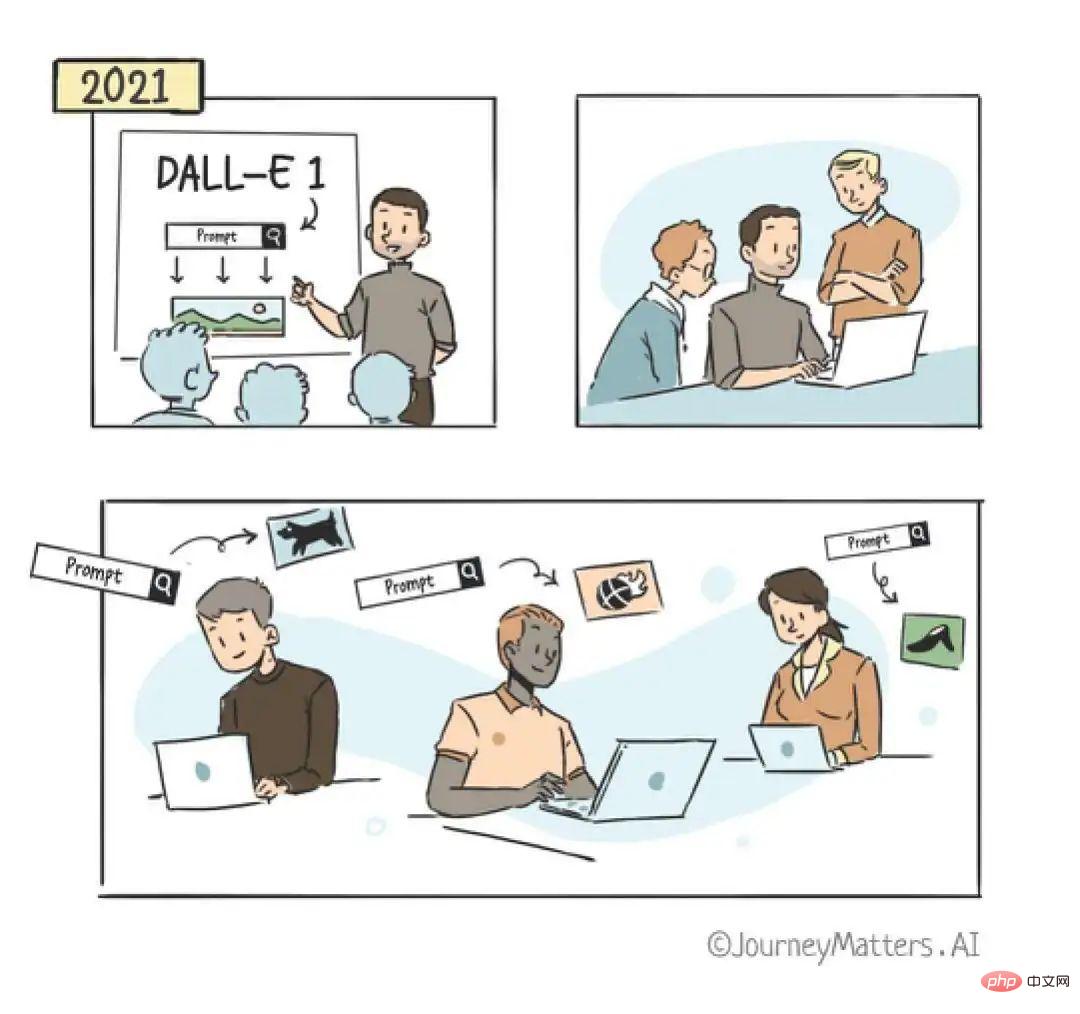

2021: Development of DALL-E 1

2022: Unveiling ChatGPT to the world

Ilya Sutskever

Co-founder and chief scientist of OpenAI, Sutskever graduated from the University of Toronto in 2005 and received a Ph.D. in computer science in 2012. Since 2012 he has worked at Stanford University, DNNresearch, and Google Brain, conducting research in machine learning and deep learning. In 2015, he gave up his high-paying position at Google and co-founded OpenAI with Greg Brockman and others, where he contributed to the development of the GPT-1, GPT-2, GPT-3 and DALL-E series of models. In 2022, he was elected a Fellow of the Royal Society. He is a pioneer who has been instrumental in shaping the current landscape of artificial intelligence and continues to push the boundaries of what is possible with machine learning.

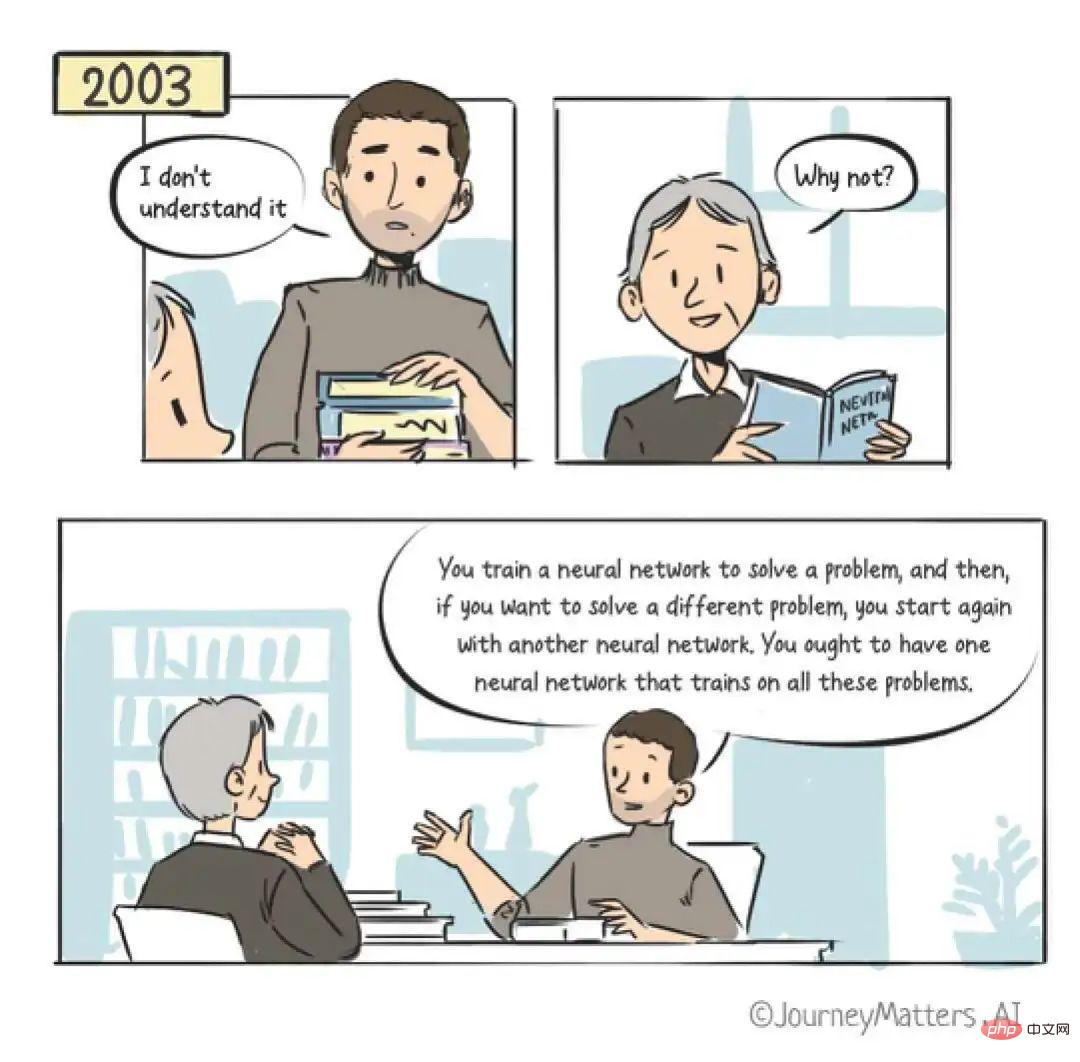

2003: First impression of Ilya Sutskever

Sutskever: I don’t understand. Hinton: Why don’t you understand? Sutskever: People train neural networks to solve problems. When people want to solve different problems, they have to start training again with another neural network. But I think people should have a neural network that can solve all problems.

When he was an undergraduate at the University of Toronto, Sutskever wanted to join Professor Geoffrey Hinton’s deep learning laboratory. So, he knocked on the door of Professor Hinton's office one day and asked if he could join the laboratory. The professor asked him to make an appointment in advance, but Sutskever didn't want to waste any more time, so he immediately asked: "How about now?"

Hinton realized that Sutskever was a keen student, so he gave him two papers to read. A week later, Sutskever returned to the professor's office and told him he didn't understand.

"Why don't you understand?" the professor asked.

"People train neural networks to solve problems, and when people want to solve a different problem, they have to start over with another neural network. But I think people should have a neural network that can solve all problems."

This exchange demonstrated Sutskever's unusual ability to reach conclusions that take even experienced researchers years to find, and Hinton invited him to join his lab.

2011: First introduction to AGI

Sutskever: I don't agree with this idea (AGI).

When Sutskever was still at the University of Toronto, he flew to London to look for a job at DeepMind. There he met Demis Hassabis and Shane Legg (co-founders of DeepMind), who were building AGI (Artificial General Intelligence). AGI is a general-purpose artificial intelligence that can think and reason like a human and carry out the tasks associated with human intelligence, such as understanding natural language, learning from experience, making decisions, and solving problems.

At the time, AGI was not something serious researchers talked about. Sutskever felt that Hassabis and Legg had lost touch with reality, so he turned down the job, returned to the university, and eventually joined Google in 2013.

2012: Image Recognition Revolution

Winning the ImageNet competition

Geoffrey Hinton had a unique vision and believed in deep learning when others did not. He was convinced that success in the ImageNet competition would settle the debate once and for all.

ImageNet competition: A Stanford University lab held the ImageNet competition every year. It provided contestants with a massive database of carefully labeled photos, and researchers from around the world competed to build the system that could recognize the most images.

Two of Hinton’s students, Ilya Sutskever and Alex Krizhevsky, entered the competition. They abandoned the traditional hand-designed approach, adopted a deep neural network, and broke through the 75% accuracy mark. They won the ImageNet competition, and their system was later named AlexNet.

Since then, the field of image recognition has taken on a completely new look.

Later, Sutskever, Krizhevsky, and Hinton published a paper on AlexNet, which became one of the most cited papers in computer science, cited more than 60,000 times by other researchers.

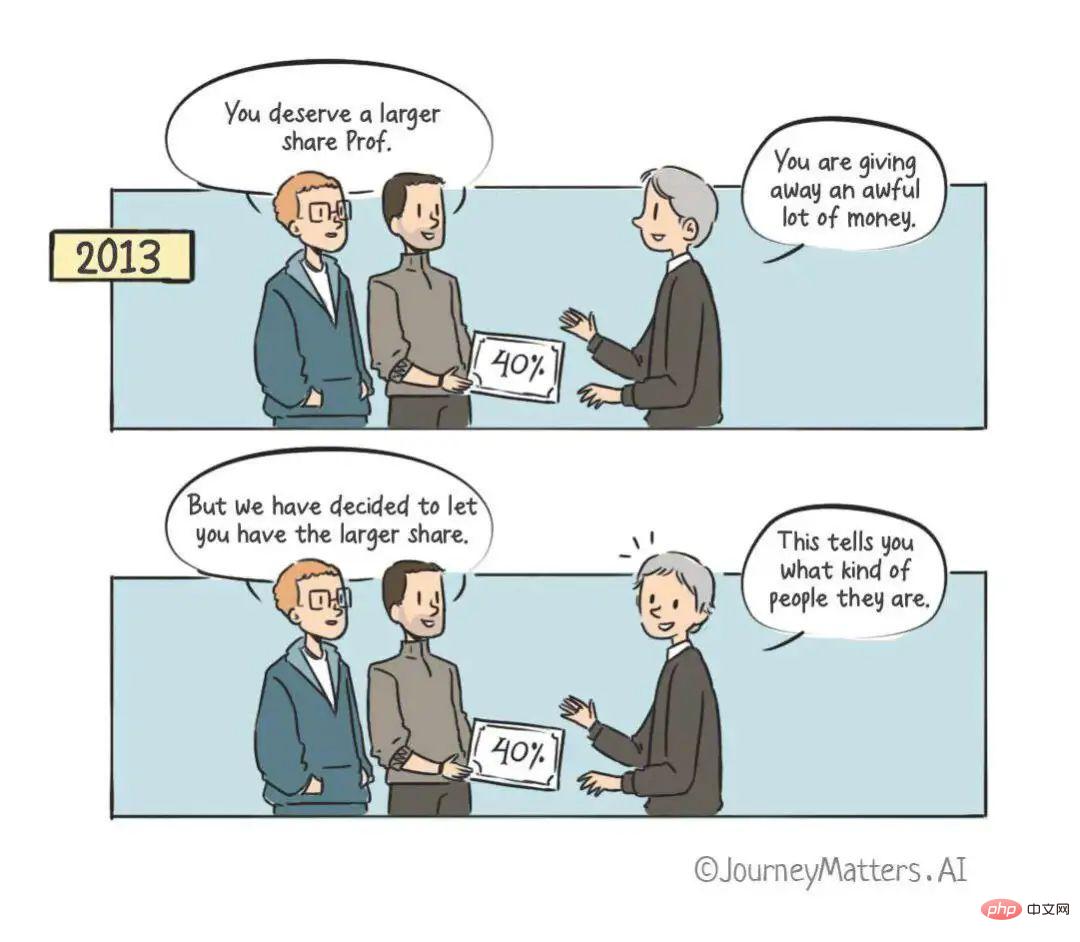

2013: Auction of DNNresearch to Google

Sutskever & Krizhevsky: You deserve a larger share of the proceeds. Hinton: You're giving me too much of the money. Sutskever & Krizhevsky: But we have already decided to give you the lion’s share. Hinton: It speaks to their character.

Hinton, together with Sutskever and Krizhevsky, formed a new company called DNNresearch. It had no products and no plans to build any.

Hinton asked a lawyer how to maximize the value of his new company, even though it had only three employees, no products, and no history. One of the options the lawyer gave him was to set up an auction. Four companies took part in the bidding: Baidu, Google, Microsoft and DeepMind (then a young startup based in London). The first to drop out was DeepMind, followed by Microsoft, leaving only Baidu and Google competing.

Close to midnight one night, with the bidding at $44 million, Hinton paused the auction and went to sleep. The next day, he announced that the auction was over and sold his company to Google for $44 million, having decided that finding the right home for his research was more important than a higher price. Hinton, like his students, put ideas ahead of financial gain.

When it came time to split the proceeds, Sutskever and Krizhevsky insisted that Hinton should get a larger share (40%). Hinton suggested that they sleep on it, but the next day they still insisted on this split. Hinton later commented: "It reflects who they are as people, not me."

After that, Sutskever became a research scientist at Google Brain. His thinking continued to evolve and gradually aligned with that of DeepMind's founders: he began to believe that AGI was within reach. Sutskever has never been afraid to change his mind in the face of new information or experience. After all, believing in AGI requires a leap of faith. As Sergey Levine, a colleague of Sutskever’s at Google, put it: "He is a man who is not afraid to 'believe.'"

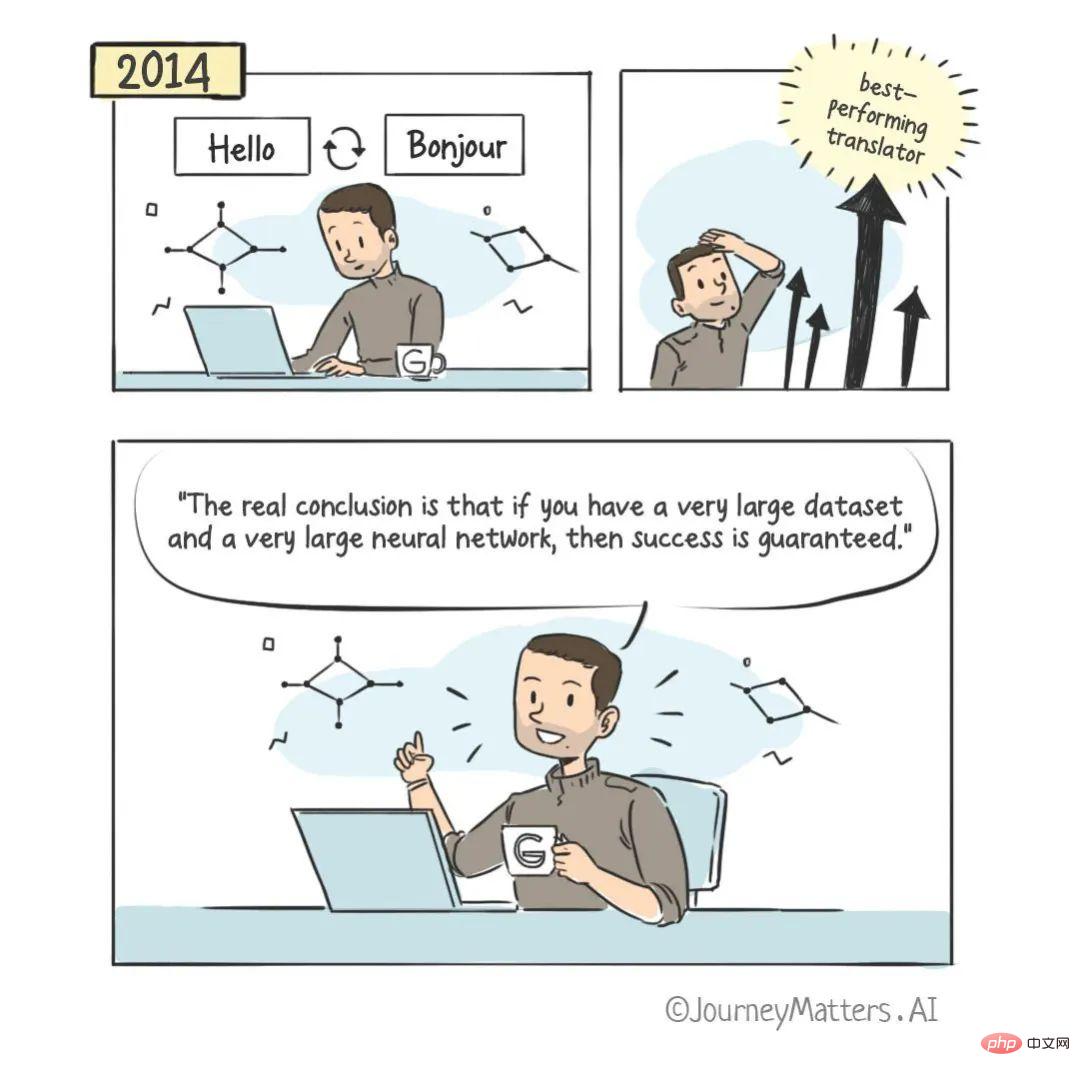

2014: The Revolution in Language Translation

Sutskever: The correct conclusion is that if you have a very large data set and a very large neural network, then success is inevitable. (The best performing translator)

After acquiring DNNResearch, Google hired Sutskever as a research scientist at Google Brain.

While working at Google, Sutskever invented a variant of a neural network that could translate English into French. He proposed "Sequence to Sequence Learning", which captures the sequence structure of the input (such as an English sentence) and maps it to an output that also has a sequence structure (such as a French sentence).

He said that researchers had not believed neural networks could translate, so it was a big surprise when they actually did. His invention beat the best-performing translation systems of the time and provided a major upgrade to Google Translate. Language translation would never be the same again.

2015: From Google to OpenAI: A new chapter in artificial intelligence

Sam Altman and Greg Brockman brought Sutskever and nine other researchers together to see if it was still possible to form a research lab with the best minds in the field. When discussions began about the lab that would become OpenAI, Sutskever realized he had found a group of like-minded people who shared his beliefs and aspirations.

Brockman invited the 10 researchers to join the lab and gave them three weeks to decide. When Google found out, it offered Sutskever a substantial amount of money to stay. After he declined, Google raised its offer to nearly $2 million for the first year, two or three times what OpenAI would pay him.

But Sutskever happily passed up a multimillion-dollar job offer at Google to eventually become a co-founder of the nonprofit OpenAI.

OpenAI’s goal is to use artificial intelligence to benefit all mankind and advance artificial intelligence in a responsible manner.

2018: Development of GPT 1, 2 & 3

Under Sutskever's leadership, OpenAI developed GPT-1, which was followed by GPT-2, GPT-3 and ChatGPT.

The GPT (Generative Pre-trained Transformer) models are a series of neural-network-based language models. Each new version has marked a significant advance in natural language processing.

- GPT-1 (2018): The first model in the series, trained on a large text corpus. One of its key innovations is unsupervised pre-training, in which the model learns to predict the next word in a sentence from the words that precede it. This enables the model to learn the structure of language and generate human-like text.

- GPT-2 (2019): Compared with GPT-1, it was trained on a larger dataset, resulting in a more powerful model. One of the major advances of GPT-2 is its ability to generate coherent and fluent passages of text on a wide range of topics, which made it a milestone in unsupervised language understanding and generation.

- GPT-3 (2020): GPT-3 is a substantial leap forward in both scale and performance. With 175 billion parameters, it is much larger than the previous models and was trained on a massive dataset. GPT-3 performs strongly on a wide range of language tasks, such as question answering, machine translation, and summarization, in some cases approaching human-level results. It can also perform simple coding tasks, write coherent news articles, and even generate poetry.

- GPT-4: Expected in 2023.
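The idea of learning to predict the next word from the preceding words can be illustrated with a toy example. The sketch below is not how GPT works internally: it swaps the transformer for simple bigram counting over a made-up three-sentence corpus, and the function names `train_bigram` and `predict_next` are hypothetical. It only shows the shape of the training signal: no labels are needed, because the text itself supplies the "next word" to predict.

```python
from collections import Counter, defaultdict

def train_bigram(corpus):
    """Count, for every word, which words follow it in the corpus."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        # Each adjacent pair (previous word, next word) is one training example.
        for prev, nxt in zip(words, words[1:]):
            follows[prev][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# Toy corpus, invented for illustration only.
corpus = [
    "the cat sat on the mat",
    "the cat chased the mouse",
    "the dog sat on the rug",
]
model = train_bigram(corpus)
print(predict_next(model, "sat"))  # on
print(predict_next(model, "the"))  # cat
```

A bigram model looks at only one preceding word; the GPT models condition on a much longer context, which is what lets them produce coherent passages rather than word-by-word guesses.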

2021: Development of DALL-E 1

Many of today's major image generators, such as DALL-E 2 and Midjourney, trace their roots to DALL-E 1: they build on the same line of work and are trained on datasets of images paired with text descriptions.

2022: Unveiling ChatGPT to the world

ChatGPT is built by pre-training a deep neural network on a large text dataset and then fine-tuning it for a specific task, such as answering questions or generating text. It is a conversational artificial intelligence system fine-tuned from a model in the GPT-3.5 series.

Understanding the context of a conversation and generating appropriate responses is one of ChatGPT’s primary features. The bot keeps track of the conversation thread and bases its follow-up responses on previous questions and answers. Unlike other chatbots, which are often limited to pre-programmed replies, ChatGPT generates its responses on the fly, allowing it to hold more dynamic and varied conversations.
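One common way for a chat system to base follow-up responses on earlier turns is to keep a running history and feed it back to the model with each new message. The sketch below illustrates that pattern under stated assumptions; it is not OpenAI's implementation, and the `Conversation` class, the `max_turns` limit, and the `User:`/`Assistant:` prompt layout are all hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class Conversation:
    """Hypothetical container that remembers the most recent turns of a chat."""
    max_turns: int = 6                      # assumed limit, standing in for a context window
    turns: list = field(default_factory=list)

    def add(self, speaker, text):
        """Record one turn and drop the oldest turns beyond max_turns."""
        self.turns.append((speaker, text))
        self.turns = self.turns[-self.max_turns:]

    def build_prompt(self, user_message):
        """Join the remembered turns and the new message into one prompt string."""
        lines = [f"{speaker}: {text}" for speaker, text in self.turns]
        lines.append(f"User: {user_message}")
        lines.append("Assistant:")
        return "\n".join(lines)

conv = Conversation(max_turns=4)
conv.add("User", "Who co-founded OpenAI?")
conv.add("Assistant", "Ilya Sutskever was one of the co-founders.")
# The follow-up question only makes sense because the earlier turns are included.
print(conv.build_prompt("Where did he study?"))
```

Because the earlier turns travel with every request, a language model receiving this prompt can resolve "he" in the follow-up question without any separate memory mechanism.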

Elon Musk, one of the co-founders of OpenAI, said:

"ChatGPT is scary good. We are not far from dangerously strong AI."

Endnotes

Ilya Sutskever’s passion for artificial intelligence drove the groundbreaking research that changed the course of the field. His work in deep learning and machine learning has been instrumental in advancing the state of the art and shaping the field's future direction. Despite facing material temptations many times, Sutskever chose to pursue his passion and focus on his research; his dedication is an example for any researcher.

Now we have witnessed the impact Sutskever has had on our world. Obviously, this is just the beginning.
