Use magic to defeat magic! A Go AI that rivaled top human players lost to its peers


In recent years, reinforcement learning through self-play has achieved superhuman performance in a range of games such as Go and chess. In addition, idealized versions of self-play converge to a Nash equilibrium. The Nash equilibrium is a well-known concept in game theory, named after John Nash, a Nobel laureate and one of the field's pioneers. A strategy that is best for a player regardless of what the other players choose is called a dominant strategy. If every player's strategy is optimal given the fixed strategies of all the other players, the resulting combination of strategies is a Nash equilibrium.
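To make the definition concrete, here is a minimal sketch that checks every cell of a small two-player payoff table for a Nash equilibrium. The function name `pure_nash_equilibria` and the prisoner's dilemma payoffs are illustrative assumptions, not taken from the paper, and the check covers pure strategies only.

```python
from itertools import product

def pure_nash_equilibria(payoff_a, payoff_b):
    """Return all (row, col) cells where neither player gains by deviating alone.

    payoff_a[r][c] is the row player's payoff, payoff_b[r][c] the column player's.
    """
    n_rows, n_cols = len(payoff_a), len(payoff_a[0])
    equilibria = []
    for r, c in product(range(n_rows), range(n_cols)):
        # Best the row player could do if the column player stays at c.
        row_best = max(payoff_a[i][c] for i in range(n_rows))
        # Best the column player could do if the row player stays at r.
        col_best = max(payoff_b[r][j] for j in range(n_cols))
        if payoff_a[r][c] == row_best and payoff_b[r][c] == col_best:
            equilibria.append((r, c))
    return equilibria

# Illustrative prisoner's dilemma: index 0 = cooperate, index 1 = defect.
PAYOFF_A = [[-1, -3], [0, -2]]
PAYOFF_B = [[-1, 0], [-3, -2]]

print(pure_nash_equilibria(PAYOFF_A, PAYOFF_B))  # prints [(1, 1)]
```

In this toy game, defecting is also a dominant strategy for both players, so the only equilibrium is mutual defection.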

Previous research has shown that seemingly strong continuous-control policies trained through self-play can be exploited by adversarial policies, suggesting that self-play may not be as robust as previously thought. This raises a question: are adversarial policies a general way to beat self-play, or were the self-play policies in question simply not strong enough?

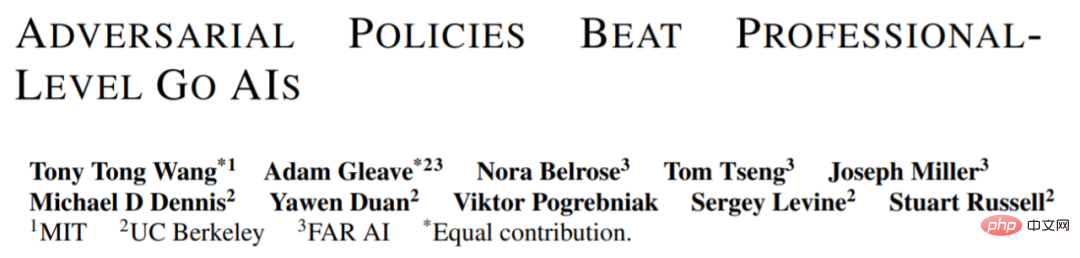

To answer this question, researchers from MIT, UC Berkeley and other institutions studied a domain where self-play excels, namely Go. Specifically, they attacked KataGo, the strongest publicly available Go AI system. Against a fixed network (a frozen KataGo), they trained an end-to-end adversarial policy using only 0.3% of the compute used to train KataGo, and then used this policy to attack KataGo. Against KataGo without search, which plays at a level comparable to the top 100 European Go players, the policy achieved a 99% win rate. When KataGo used enough search to approach superhuman level, the attacker's win rate was 50%. Crucially, the attacker (in this article, the policy learned in this study) does not win by learning to play Go well in general.

A word about KataGo. According to the paper, KataGo was the most powerful publicly available Go AI system at the time of writing. With search, KataGo is very strong, having defeated ELF OpenGo and Leela Zero, which are themselves superhuman. The attacker in this study has now defeated KataGo, which is a notable result.

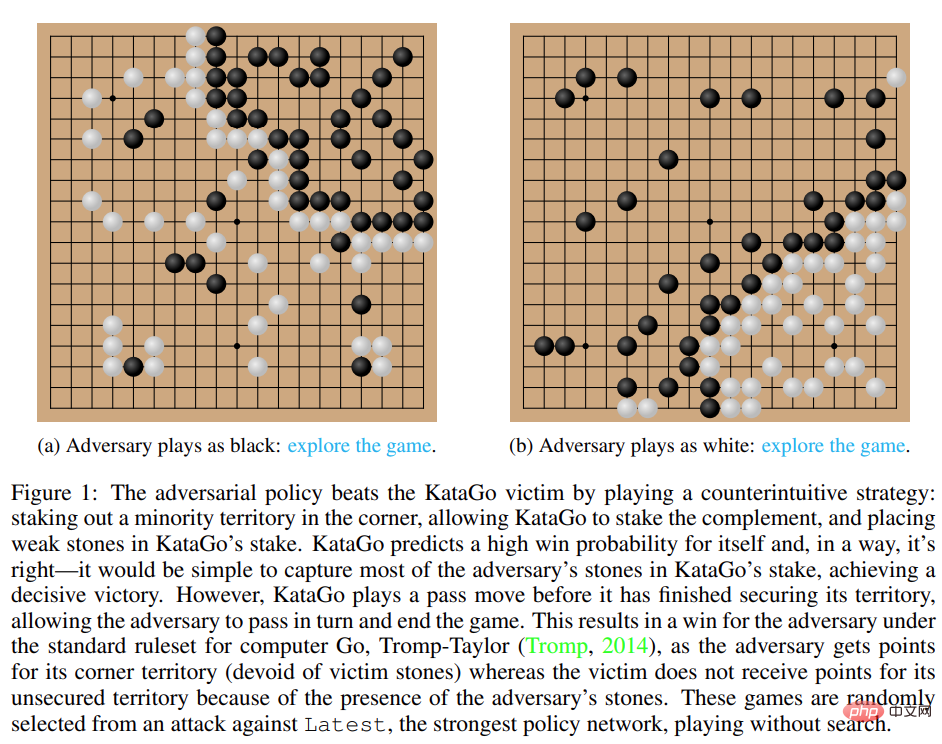

Figure 1: Adversarial strategy defeats KataGo victim.

- Paper address: https://arxiv.org/pdf/2211.00241.pdf

- Research homepage: https://goattack.alignmentfund.org/adversarial-policy-katago?row=0#no_search-board

Interestingly, the adversarial policy proposed in this study cannot defeat human players; even amateurs can beat it by a wide margin.

Attack method

Previous methods such as KataGo and AlphaZero typically train an agent through self-play, where the agent's opponent is the agent itself. In this work, games are instead played between an attacker (adversary) and a fixed victim agent, and the attacker is trained on these games. The goal is to train the attacker to exploit its interactions with the victim rather than merely imitate its opponent. This process is called "victim-play".
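The difference from self-play can be sketched as a data-collection loop. This is a toy illustration under stated assumptions, not the authors' training code: the function `play_victim_game`, the tuple-of-moves state, and the choice to keep training examples only from the attacker's turns are all hypothetical, the last being one way to read "exploit rather than imitate".

```python
from typing import Callable, List, Tuple

Move = str
State = Tuple[Move, ...]  # hypothetical state: the moves played so far

def play_victim_game(attacker: Callable[[State], Move],
                     frozen_victim: Callable[[State], Move],
                     num_moves: int) -> List[Tuple[State, Move]]:
    """Play one toy game and return examples from the attacker's turns only."""
    state: State = ()
    attacker_examples: List[Tuple[State, Move]] = []
    for turn in range(num_moves):
        if turn % 2 == 0:  # the attacker moves first in this toy setup
            move = attacker(state)
            attacker_examples.append((state, move))  # data for the attacker
        else:
            move = frozen_victim(state)  # victim is queried, never updated
        state = state + (move,)
    return attacker_examples

# Placeholder policies that just label their moves with the turn index.
examples = play_victim_game(lambda s: f"a{len(s)}",
                            lambda s: f"v{len(s)}",
                            num_moves=4)
print(examples)  # prints [((), 'a0'), (('a0', 'v1'), 'a2')]
```

The victim's moves shape the states the attacker sees, but no example is recorded for them, so nothing in this loop would push the attacker toward copying the victim.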

In conventional self-play, the agent models its opponent's actions by sampling from its own policy network, which works well when the opponent really is the agent itself. In victim-play, however, modeling the victim with the attacker's policy network is the wrong approach. To solve this problem, the study proposes two variants of adversarial MCTS (A-MCTS):

- A-MCTS-S: The attacker's search proceeds as follows: when it is the victim's turn to move, moves are sampled from the victim's policy network; when it is the attacker's turn to move, moves are sampled from the attacker's policy network.

- A-MCTS-R: Because A-MCTS-S underestimates the victim's strength, the study also proposes A-MCTS-R, which runs MCTS for the victim at every victim node in the A-MCTS-R tree. However, this change increases the computational cost of both training and inference for the attacker.
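The node rule of A-MCTS-S described above can be sketched as follows. This is a simplified illustration, assuming policies are plain functions that return move probabilities; it samples a single line of play and omits the value estimates and tree statistics of a real MCTS. All names (`sample_move`, `amcts_s_rollout`, the toy policies) are hypothetical.

```python
import random
from typing import Callable, Dict, List, Tuple

State = Tuple[str, ...]
Policy = Callable[[State], Dict[str, float]]  # state -> {move: probability}

def sample_move(policy: Policy, state: State, rng: random.Random) -> str:
    """Draw one move from the distribution the policy assigns to this state."""
    dist = policy(state)
    moves = list(dist)
    weights = [dist[m] for m in moves]
    return rng.choices(moves, weights=weights, k=1)[0]

def amcts_s_rollout(attacker_policy: Policy, victim_policy: Policy,
                    depth: int, rng: random.Random,
                    attacker_first: bool = True) -> List[str]:
    """Sample a line of play, choosing the policy by whose turn it is."""
    state: State = ()
    attacker_to_move = attacker_first
    for _ in range(depth):
        # Attacker nodes use the attacker's network, victim nodes the victim's.
        policy = attacker_policy if attacker_to_move else victim_policy
        state = state + (sample_move(policy, state, rng),)
        attacker_to_move = not attacker_to_move
    return list(state)

def toy_attacker(state: State) -> Dict[str, float]:
    return {"pass": 1.0}  # placeholder attacker: always passes

def toy_victim(state: State) -> Dict[str, float]:
    return {"D4": 0.5, "Q16": 0.5}  # placeholder victim: two equally likely moves

line = amcts_s_rollout(toy_attacker, toy_victim, depth=4, rng=random.Random(0))
print(line)
```

Under the same assumptions, A-MCTS-R would replace the direct sample at victim nodes with the result of a separate search run on the victim's behalf, which is where the extra computational cost comes from.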

The adversarial policy was trained on games against a frozen KataGo victim. Against a KataGo victim without search, which is comparable to the top 100 European Go players, the attacker achieves a win rate above 99%. Furthermore, the trained attacker achieved a win rate of over 80% against a victim playing with 64 search visits, a setting the researchers estimate is comparable to the best human Go players.

Notably, these games show that the adversarial policy does not win by playing Go well. Instead, it tricks KataGo into ending the game prematurely at a point favorable to the attacker. In fact, although the attacker can beat a system whose play is comparable to that of the best human Go players, it is easily defeated by human amateurs.

To test the attacker against humans, the study had Tony Tong Wang, the paper's first author, play against the attacker model. Wang had never learned Go before this research project, yet he beat the attacker by a wide margin. This shows that although the adversarial policy can defeat an AI model that can itself defeat top human players, it cannot defeat human players. This may indicate that some Go AI models have bugs.

Evaluation results

Attacking the victim policy network

First, the researchers evaluated their attack against KataGo (Wu, 2019) and found that the A-MCTS-S algorithm achieved a win rate of more than 99% against Latest (KataGo's latest network) playing without search.

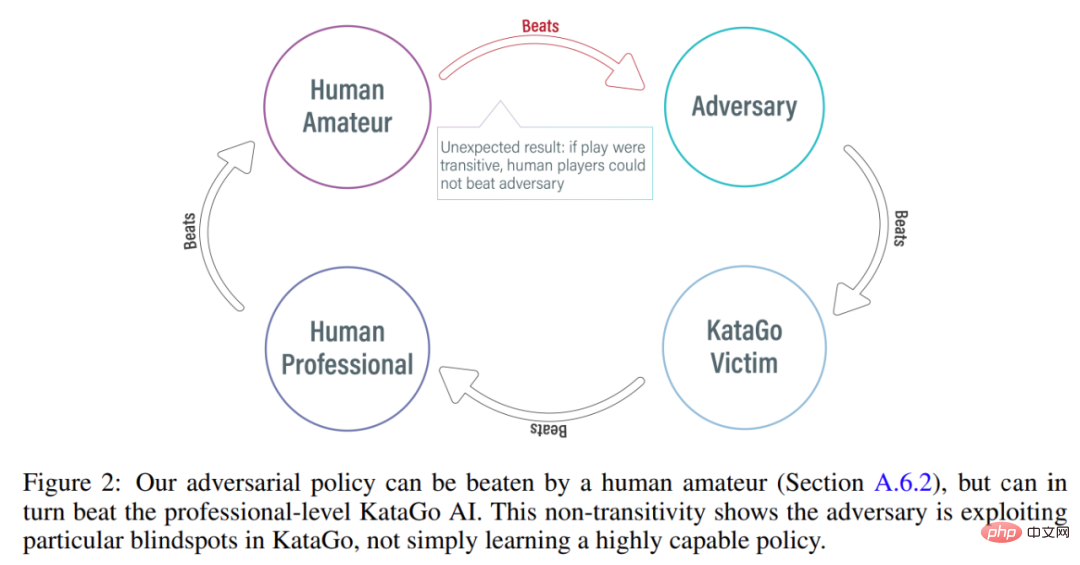

As shown in Figure 3, the researchers evaluated the adversarial policy against the Initial and Latest policy networks. They found that for most of training, the attacker achieved a high win rate (above 90%) against both victims. Over time, however, the attacker overfits to Latest, and its win rate against Initial drops to about 20%.

The researchers also evaluated the best adversarial policy checkpoint against Latest, where it achieved a win rate of over 99%. Moreover, this win rate is reached after training the adversarial policy for only 3.4 × 10^7 time steps, which is 0.3% of the time steps used to train the victim.
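As a quick sanity check on the scale involved, the two figures above imply a rough size for the victim's training run. The result is a back-of-the-envelope estimate derived here, not a number reported in the article, and it is only approximate because the 0.3% figure is rounded.

```python
# Figures quoted in the text above.
attacker_steps = 3.4e7       # attacker training time steps
fraction_of_victim = 0.003   # 0.3% of the victim's time steps

# Derived estimate: victim steps = attacker steps / fraction.
implied_victim_steps = attacker_steps / fraction_of_victim
print(f"{implied_victim_steps:.2e}")  # prints 1.13e+10
```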

Transferring to victims with search

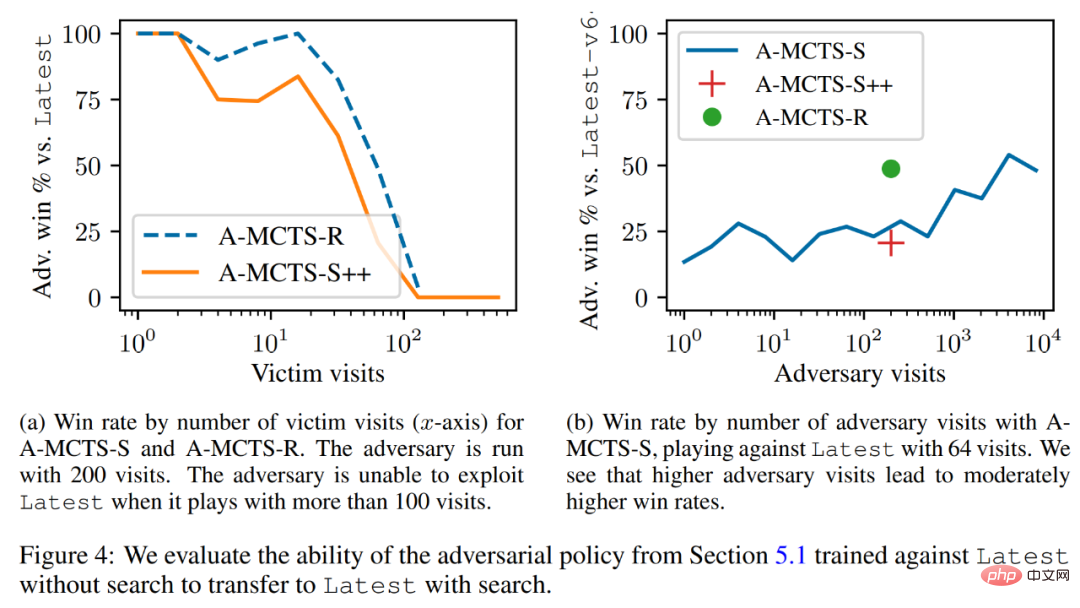

The researchers then transferred the adversarial policy to the low-search regime, evaluating the policy trained in the previous section against Latest with search. As shown in Figure 4a, they found that the win rate of A-MCTS-S against the victim drops to 80% at 32 victim visits. Note, however, that here the victim is modeled as not searching during both training and inference.

The researchers also tested A-MCTS-R and found that it performed better, achieving a win rate of over 99% against Latest at 32 victim visits. At 128 visits, however, the win rate dropped below 10%.

In Figure 4b, the researchers show that when the attacker uses 4096 visits, A-MCTS-S achieves a maximum win rate of 54% against Latest. This is very similar to the performance of A-MCTS-R at 200 visits, which achieved a 49% win rate.

Other evaluations

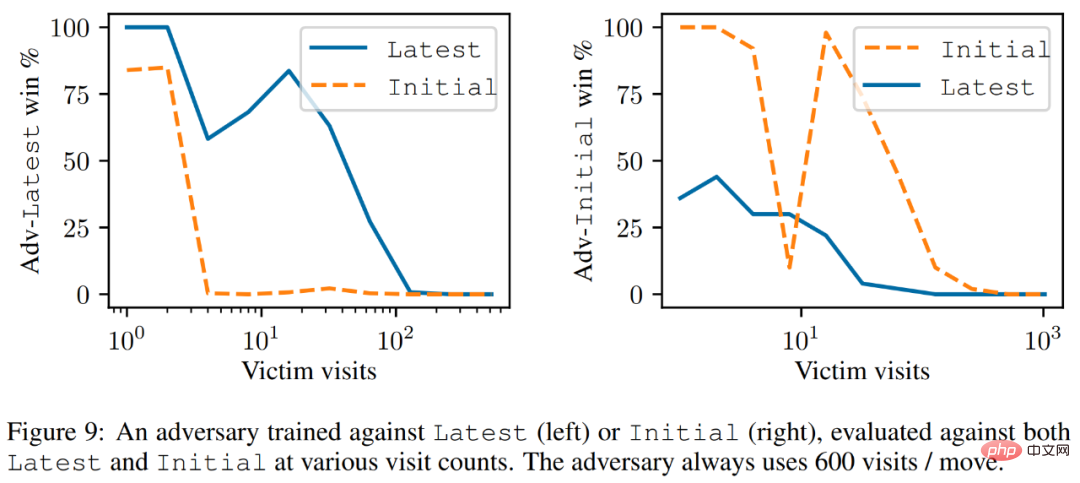

As shown in Figure 9, the researchers found that although Latest is the stronger agent, the attacker trained against Latest performs better against Latest than against Initial.

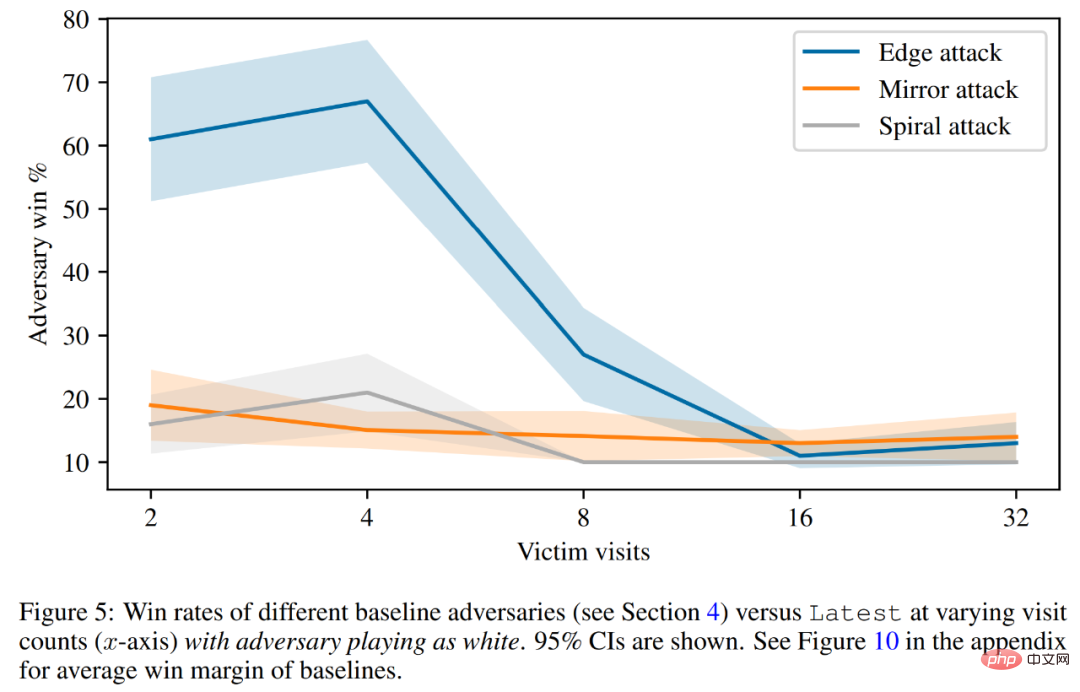

Finally, the researchers discuss how the attack works, including the victim's value predictions and an evaluation of a hard-coded defense. As shown in Figure 5, all baseline attacks perform significantly worse than the trained adversarial policy.

Please refer to the original paper for more technical details.
