ScienceQA: the first multimodal science question answering dataset with detailed explanations, bringing chain-of-thought reasoning to deep learning models


When answering complex questions, humans can understand information in different modalities and form a complete chain of thought (CoT). Can a deep learning model open the "black box" and provide a chain of thought for its reasoning process? Recently, UCLA and the Allen Institute for Artificial Intelligence (AI2) proposed ScienceQA, the first multimodal science question answering dataset with detailed explanations, to test the multimodal reasoning capabilities of models. For the ScienceQA task, the authors proposed the GPT-3 (CoT) model, which adds chain-of-thought prompting to GPT-3 so that the model generates the corresponding reasoning explanation along with its answer. GPT-3 (CoT) achieves 75.17% accuracy on ScienceQA, and human evaluation shows that it generates higher-quality explanations.

Learning and completing complex tasks as effectively as humans is one of the long-term goals of artificial intelligence. When making decisions, humans can follow a complete chain-of-thought (CoT) reasoning process and give a reasonable explanation for the answer they reach.

However, most existing machine learning models rely on training with large numbers of input-output examples to complete specific tasks. These black-box models often generate the final answer directly, without revealing the reasoning behind it.

Science question answering is a good way to diagnose whether an AI model has multi-step reasoning ability and interpretability. To answer a science question, a model must not only understand multimodal content but also draw on external knowledge to reach the correct answer. A reliable model should also provide an explanation that reveals its reasoning process. However, most existing science question answering datasets either lack detailed explanations of the answers or are limited to the text modality.

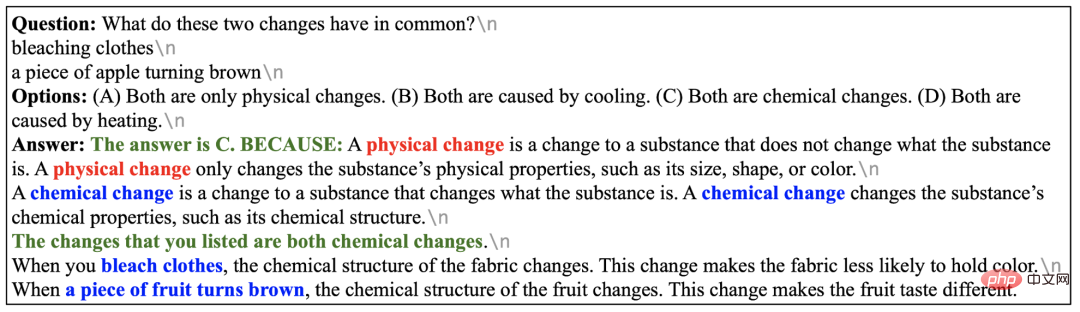

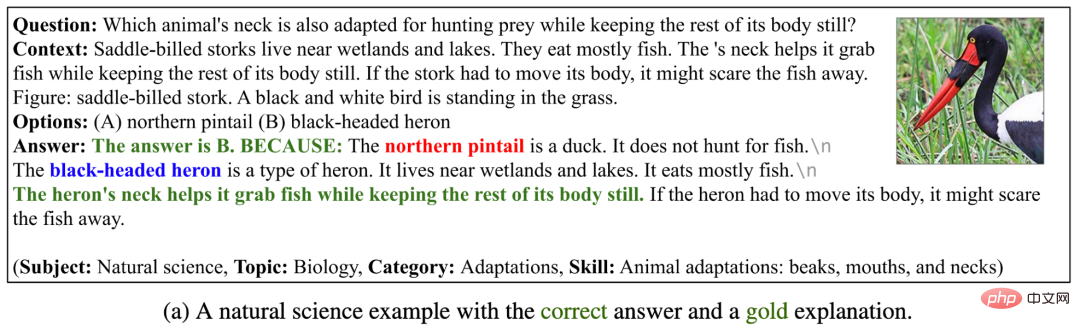

The authors therefore collected ScienceQA, a new science question answering dataset containing 21,208 multiple-choice questions from primary and secondary school science courses. A typical question comes with multimodal context, the correct option, general background knowledge (the lecture), and a specific explanation.

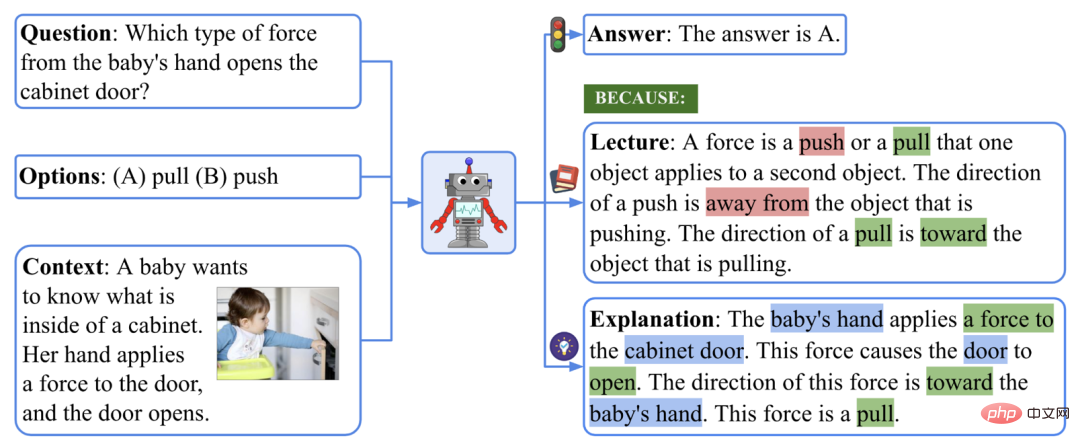

An example of the ScienceQA dataset.

To answer the example shown above, we must first recall the definition of force: "A force is a push or a pull that ... The direction of a push is ... The direction of a pull is ...". We then form a multi-step reasoning process: "The baby's hand applies a force to the cabinet door. → This force causes the door to open. → The direction of this force is toward the baby's hand." Finally, we arrive at the correct answer: "This force is a pull."
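The structure of such an example can be sketched as a small Python record. This is only an illustration: the class name, field names, question wording, option list, and image file name are assumptions made for this sketch, not the dataset's official schema. Only the lecture, the reasoning steps, and the final answer are taken from the example above.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ScienceQAExample:
    """Hypothetical container for one multiple-choice question."""
    question: str
    choices: List[str]
    answer: int                       # index of the correct option in `choices`
    context_text: str = ""            # textual context, may be empty
    image_path: Optional[str] = None  # visual context, may be absent
    lecture: str = ""                 # general background knowledge
    explanation: str = ""             # question-specific reasoning

    def correct_choice(self) -> str:
        return self.choices[self.answer]


example = ScienceQAExample(
    # Question wording and options are illustrative placeholders.
    question="Which type of force from the baby's hand opens the cabinet door?",
    choices=["pull", "push"],
    answer=0,
    image_path="baby_cabinet.png",    # hypothetical file name
    lecture="A force is a push or a pull that ...",
    explanation=(
        "The baby's hand applies a force to the cabinet door. "
        "This force causes the door to open. "
        "The direction of this force is toward the baby's hand. "
        "This force is a pull."
    ),
)

print(example.correct_choice())
```

Keeping the lecture and the explanation as separate fields mirrors the distinction the article draws between general background knowledge and the specific reasoning for one question.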

In the ScienceQA task, a model must predict the answer and output a detailed explanation at the same time. In this work, the authors use a large language model to generate background knowledge and explanations as a chain of thought (CoT), imitating the multi-step reasoning ability of humans.

Experiments show that current multimodal question answering methods do not perform well on ScienceQA. In contrast, with chain-of-thought prompting, GPT-3 achieves 75.17% accuracy on the ScienceQA dataset and generates higher-quality explanations: in human evaluation, 65.2% of its explanations were judged relevant, correct, and complete. Chain of thought also helps the UnifiedQA model improve by 3.99% on ScienceQA.

- Paper link: https://arxiv.org/abs/2209.09513

- Code link: https://github.com/lupantech/ScienceQA

- Project homepage: https://scienceqa.github.io/

- Data visualization: https://scienceqa.github.io/explore.html

- Leaderboard: https://scienceqa.github.io/leaderboard.html

1. ScienceQA data set

Dataset statistics

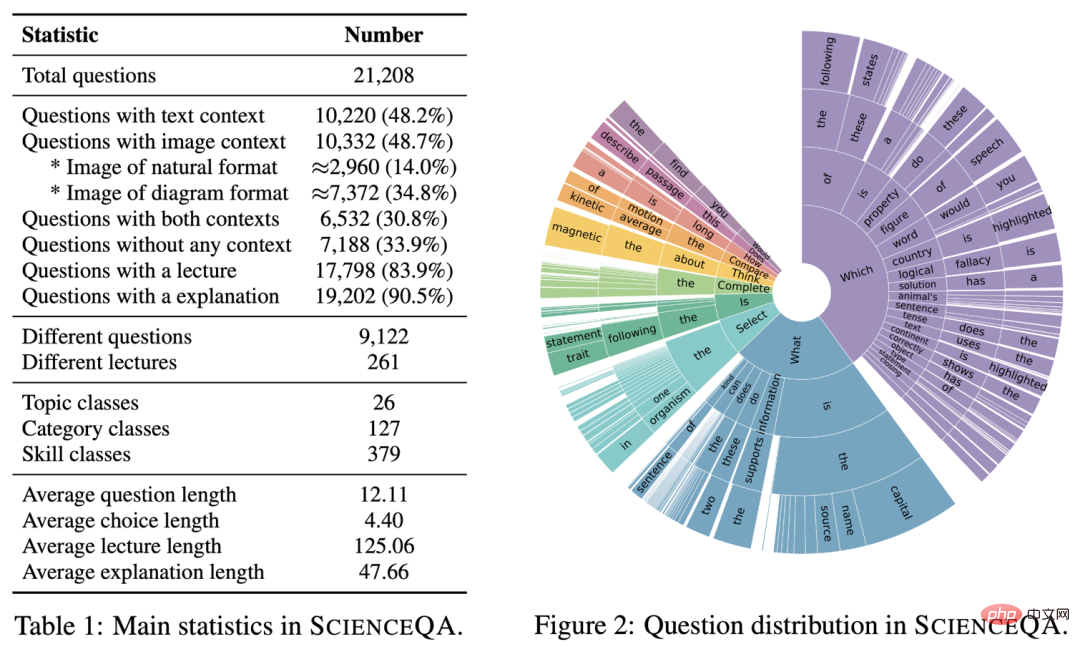

ScienceQA’s main statistics are shown below.

Main information of ScienceQA data set

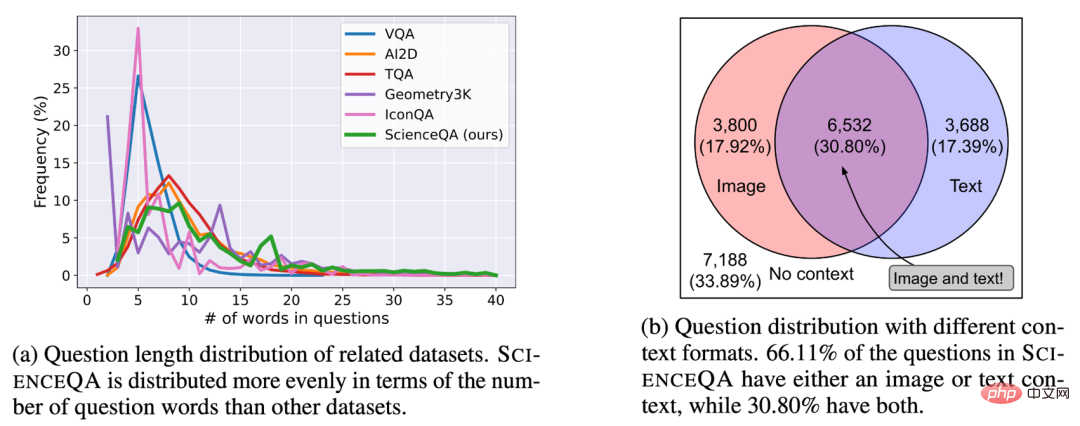

ScienceQA contains 21,208 examples, with 9,122 distinct questions. Of these, 10,332 questions (48.7%) have visual context, 10,220 (48.2%) have textual context, and 6,532 (30.8%) have both. The vast majority of questions are annotated with detailed explanations: 83.9% have background knowledge annotations (lectures) and 90.5% have detailed explanations.
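As a quick sanity check, the percentages above can be recomputed from the raw counts. The sketch below also derives the number of questions with at least one kind of context by inclusion-exclusion. That derived figure is not stated in the article and assumes the third count is exactly the overlap of the first two.

```python
TOTAL = 21208

# Counts quoted in the article.
counts = {
    "visual context": 10332,
    "textual context": 10220,
    "both": 6532,
}

# Share of each group, as a percentage rounded to one decimal place.
shares = {name: round(100 * n / TOTAL, 1) for name, n in counts.items()}

# Derived under the stated assumption: |visual or textual| = |visual| + |textual| - |both|.
with_any_context = counts["visual context"] + counts["textual context"] - counts["both"]
share_any_context = round(100 * with_any_context / TOTAL, 1)

for name, pct in shares.items():
    print(f"{name}: {pct}%")
print(f"at least one kind of context: {with_any_context} ({share_any_context}%)")
```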

Question and context distribution in the ScienceQA dataset.

Dataset topic distribution

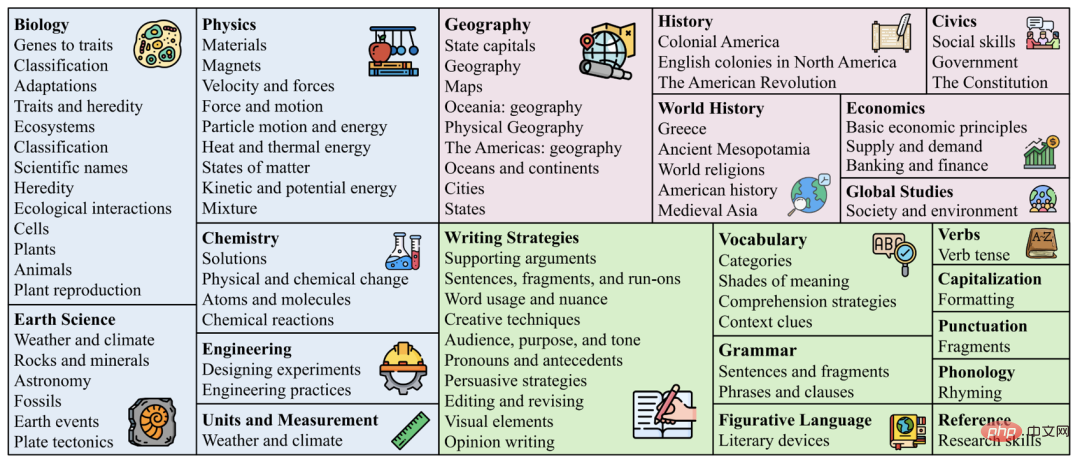

Unlike existing datasets, ScienceQA covers three major subject areas (natural science, social science, and language science) and includes 26 topics, 127 categories, and 379 skills.

Topic distribution of ScienceQA.

Word cloud distribution of the data set

As the word cloud in the figure below shows, the questions in ScienceQA are semantically diverse. Models need to understand different question formulations, scenarios, and background knowledge.

Word cloud distribution of ScienceQA.

Dataset comparison

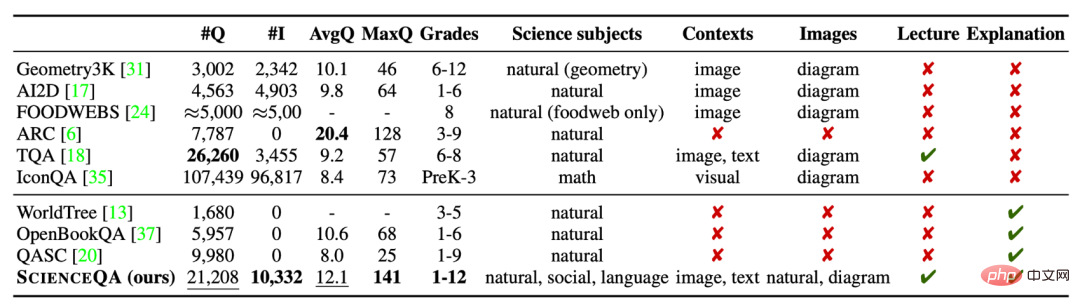

ScienceQA is the first multimodal science question answering dataset with detailed explanations. Compared with existing datasets, it has advantages in data size, diversity of question types, diversity of topics, and other dimensions.

Comparison of ScienceQA with other science question answering datasets.

2. Models and methods

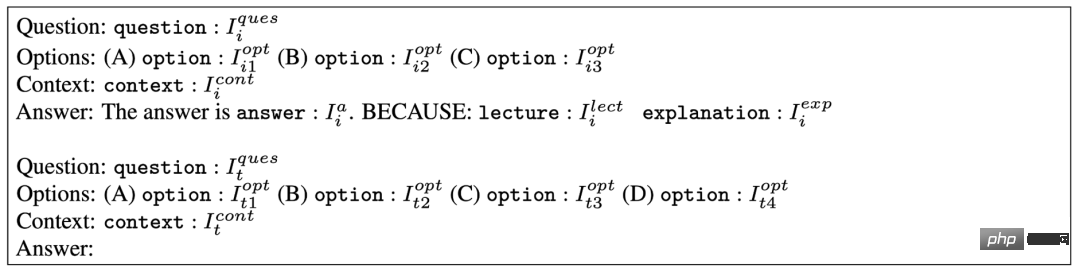

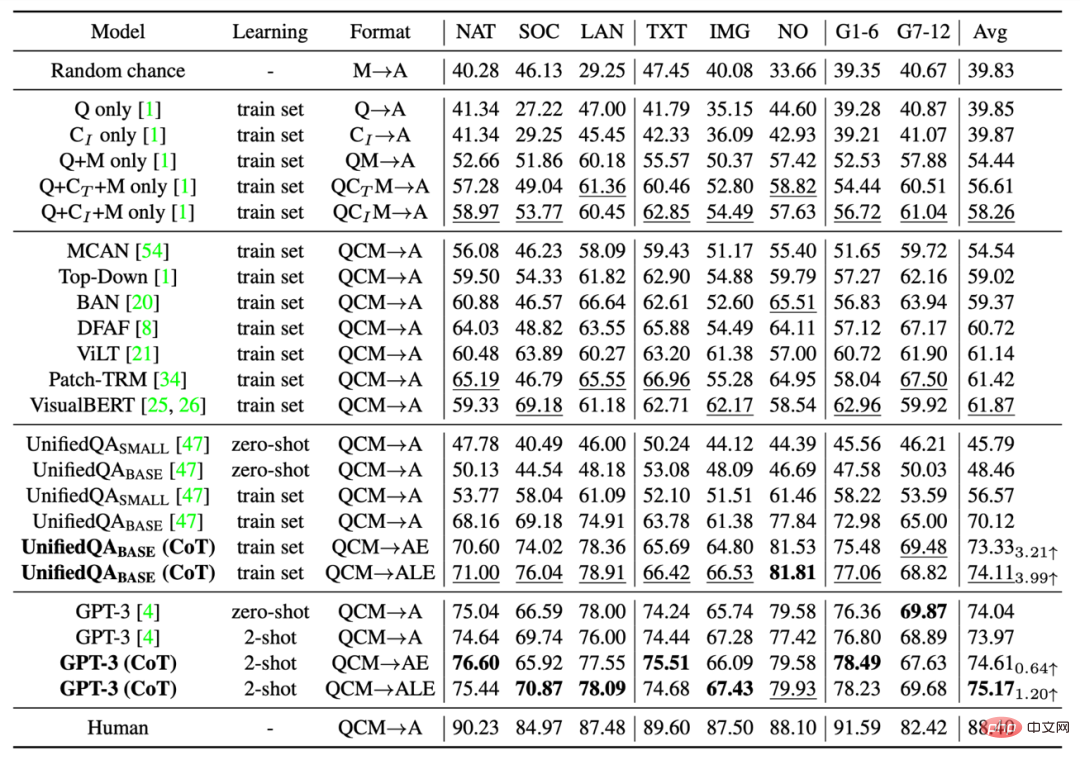

Baselines

The authors evaluate a range of baselines on the ScienceQA dataset, including VQA models such as Top-Down Attention, MCAN, BAN, DFAF, ViLT, Patch-TRM, and VisualBERT; large language models such as UnifiedQA and GPT-3; as well as random chance and human performance. For the language models UnifiedQA and GPT-3, the context images are converted into text captions.

GPT-3 (CoT)

Recent work has shown that, given appropriate prompts, GPT-3 can perform very well on a variety of downstream tasks. Building on this, the authors propose the GPT-3 (CoT) model, which adds a chain of thought (CoT) to the prompt so that the model generates the corresponding background knowledge and explanation along with its answer.
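To make the idea concrete, here is a minimal sketch of how a few-shot chain-of-thought prompt could be assembled. The layout ("Question / Context / Options / Answer ... BECAUSE ..."), the function names, and the dictionary keys are assumptions made for illustration and may differ from the template the authors actually used. The target question is hypothetical.

```python
from typing import Dict, List

LETTERS = "ABCDE"


def format_input(ex: Dict) -> str:
    """Render the question, context, and options of one example."""
    options = " ".join(f"({LETTERS[i]}) {c}" for i, c in enumerate(ex["choices"]))
    return (
        f"Question: {ex['question']}\n"
        f"Context: {ex['context']}\n"
        f"Options: {options}\n"
    )


def format_output(ex: Dict) -> str:
    """Render the answer first, then the lecture and explanation (the chain of thought)."""
    letter = LETTERS[ex["answer"]]
    return f"Answer: The answer is {letter}. BECAUSE: {ex['lecture']} {ex['explanation']}"


def build_cot_prompt(shots: List[Dict], target: Dict) -> str:
    """Concatenate solved in-context examples, then the unsolved target question."""
    blocks = [format_input(s) + format_output(s) for s in shots]
    blocks.append(format_input(target) + "Answer:")
    return "\n\n".join(blocks)


shot = {
    "question": "Which type of force from the baby's hand opens the cabinet door?",
    "context": "A baby opens a cabinet door.",  # stands in for an image caption
    "choices": ["pull", "push"],
    "answer": 0,
    "lecture": "A force is a push or a pull that ...",
    "explanation": "The direction of this force is toward the baby's hand. This force is a pull.",
}
target = {
    "question": "Is a rock a solid or a liquid?",  # hypothetical test question
    "context": "N/A",
    "choices": ["a solid", "a liquid"],
}

prompt = build_cot_prompt([shot], target)
print(prompt)
```

The prompt ends with an open "Answer:" line, so whatever the language model generates next is read as the answer followed by its explanation.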

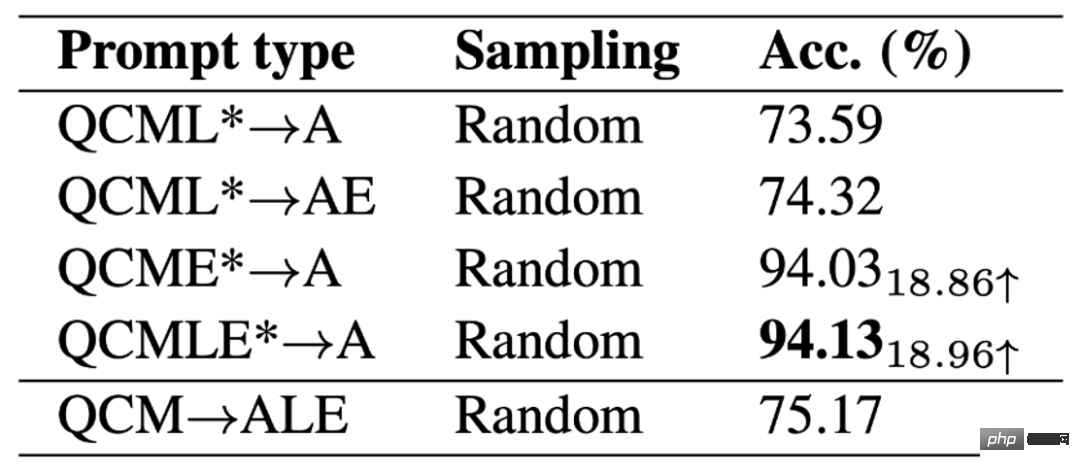

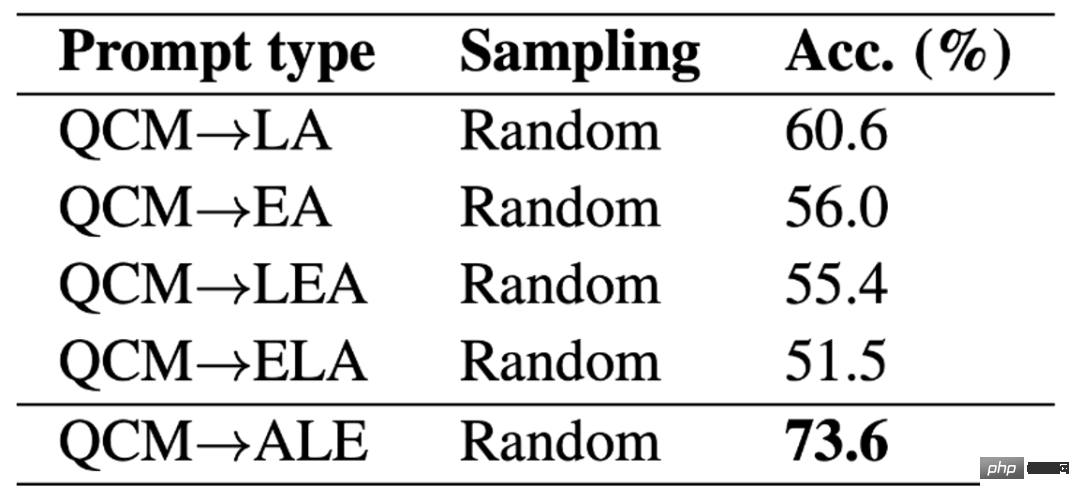

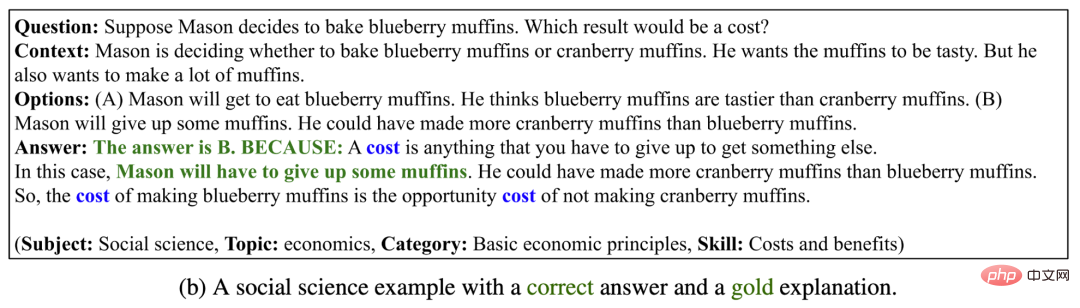

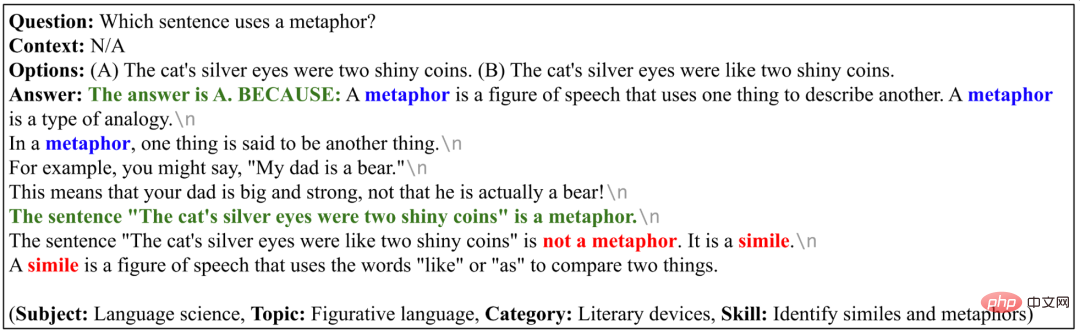

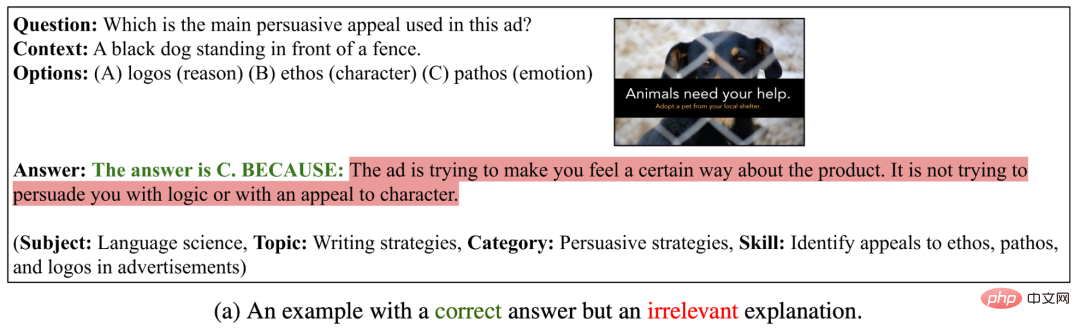

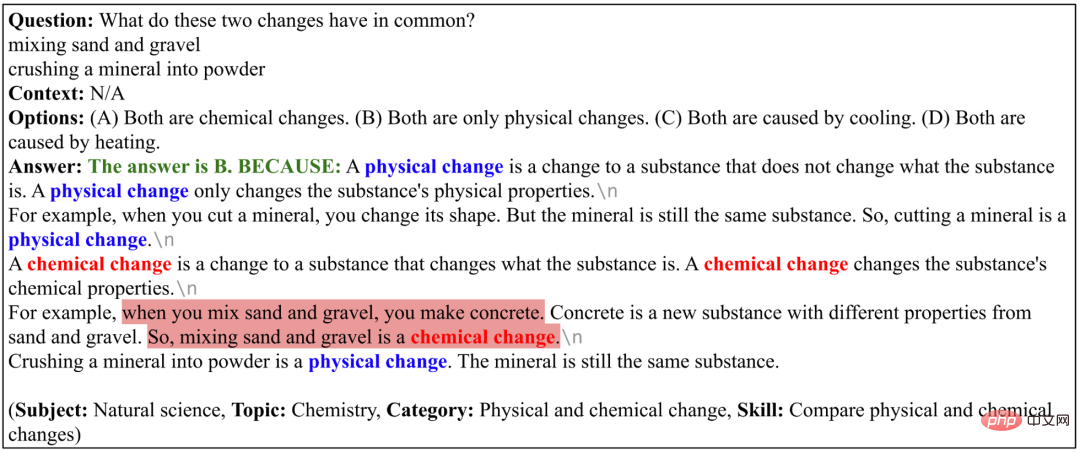

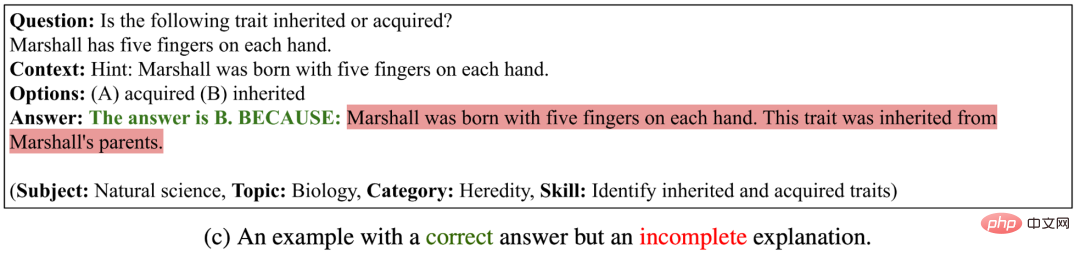

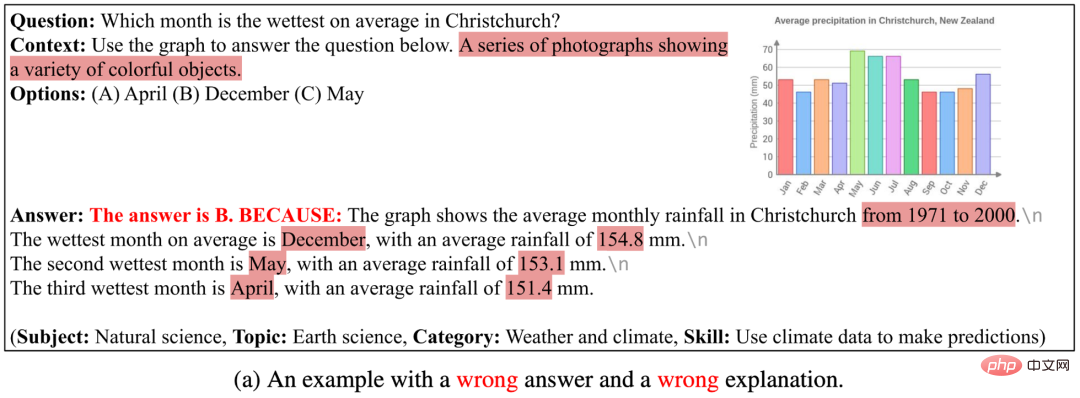

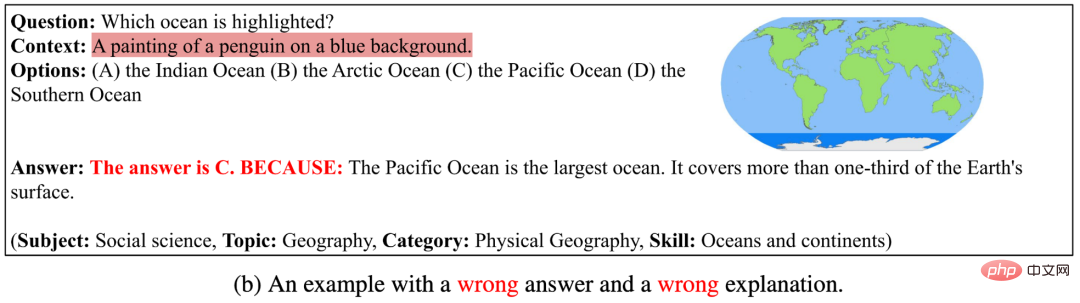

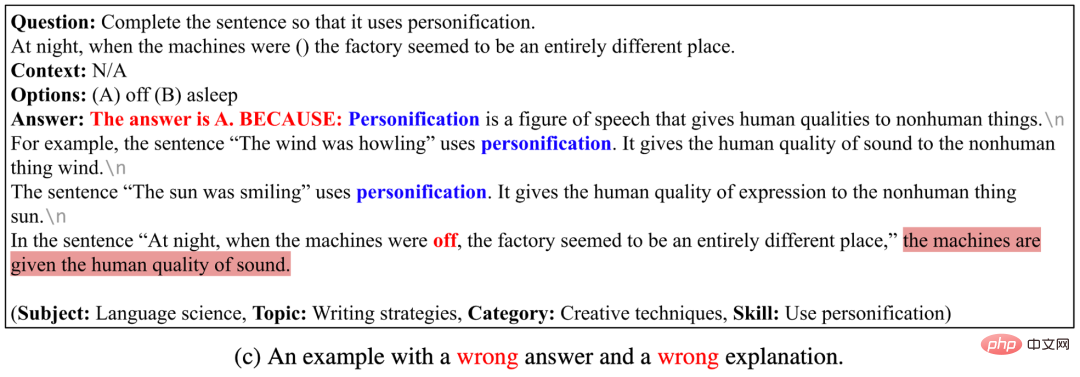

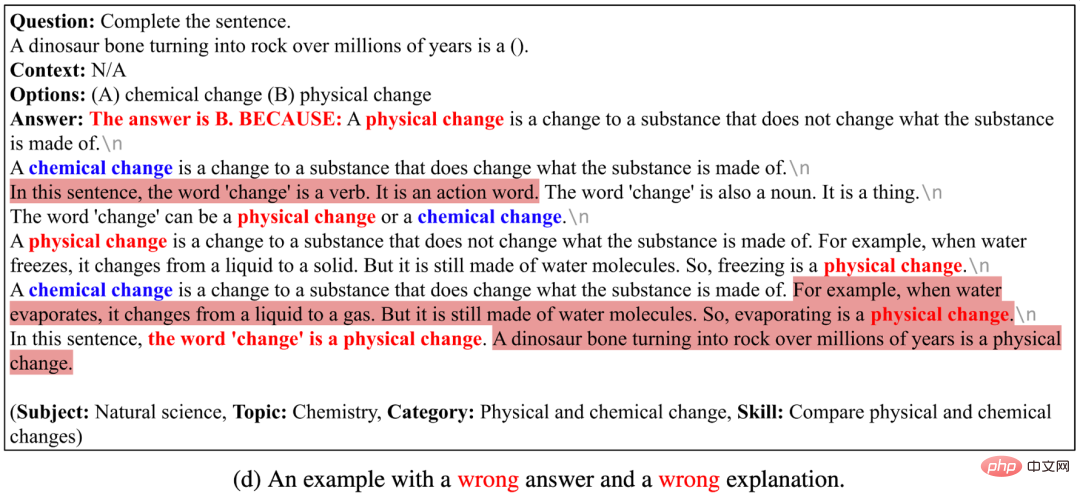

Prompt template used by GPT-3 (CoT).

3. Experiments and analysis

The accuracy of the different baselines and methods on the ScienceQA test set is shown in the table below. VisualBERT, one of the best current VQA models, reaches only 61.87% accuracy. When CoT data is introduced during training, the UnifiedQA_BASE model reaches 74.11%. GPT-3 (CoT), prompted with 2 training examples, reaches 75.17%, higher than the other baselines. Humans perform well on ScienceQA, with an overall accuracy of 88.40% and stable performance across question categories.

Evaluation of generated explanations

The authors use automatic metrics such as BLEU-1, BLEU-2, ROUGE-L, and Sentence Similarity to evaluate the explanations generated by different methods. Because automatic metrics only measure the similarity between the predictions and the annotated content, the authors also used human evaluation to assess the relevance, correctness, and completeness of the generated explanations. As can be seen, 65.2% of the explanations generated by GPT-3 (CoT) are relevant, correct, and complete.

Different prompt templates

The authors compared the impact of different prompt templates on the accuracy of GPT-3 (CoT). Under the QCM-ALE template (question, context, and multiple options as input; answer, lecture, and explanation as output), GPT-3 (CoT) obtains the highest average accuracy and the smallest variance. In addition, GPT-3 (CoT) performs best when prompted with 2 training examples.

Model upper limit

To explore the performance upper bound of GPT-3 (CoT), the authors added the annotated background knowledge and explanation to the model's input (QCMLE*-A). In this setting GPT-3 (CoT) reaches up to 94.13% accuracy. This suggests a possible direction for improvement: the model could reason step by step, first retrieving accurate background knowledge and generating an accurate explanation, and then using these results as input.
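The step-by-step direction suggested above could look roughly like the following two-stage sketch. It is not the authors' implementation: `two_stage_answer`, the prompt suffixes, and `fake_generate` (a canned stand-in for a real language model call) are all hypothetical names introduced here.

```python
from typing import Callable, Tuple


def two_stage_answer(problem: str, generate: Callable[[str], str]) -> Tuple[str, str]:
    """Stage 1 produces the background knowledge and explanation;
    stage 2 feeds that text back as input and asks only for the answer."""
    rationale = generate(problem + "\nLecture and explanation:")
    answer = generate(
        problem + "\nLecture and explanation: " + rationale + "\nAnswer:"
    )
    return rationale, answer


def fake_generate(prompt: str) -> str:
    """Stand-in for a language model; returns canned text for demonstration."""
    if prompt.endswith("Answer:"):
        return "The answer is A."
    return (
        "A force is a push or a pull. "
        "The direction of this force is toward the baby's hand."
    )


problem = (
    "Question: Which type of force from the baby's hand opens the cabinet door?\n"
    "Options: (A) pull (B) push"
)
rationale, answer = two_stage_answer(problem, fake_generate)
print(rationale)
print(answer)
```

Passing the model in as a callable keeps the sketch independent of any particular API; in practice `generate` would wrap a real model call.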
This step-by-step process closely resembles how humans solve complex problems.

Performance upper bound of the GPT-3 (CoT) model.

Different ALE positions

The authors further examine how the position of A, L, and E in the output of GPT-3 (CoT) affects the results. Experiments on ScienceQA show that if GPT-3 (CoT) first generates background knowledge L or explanation E and only then generates answer A, prediction accuracy drops significantly. The main reason is that L and E contain many words. If LE is generated first, GPT-3 may hit the maximum length limit or stop generating early, so the final answer A is never produced.

Different LE positions.

Successful cases

In the following 4 examples, GPT-3 (CoT) not only generates the correct answer but also gives a relevant, correct, and complete explanation. This shows that GPT-3 (CoT) exhibits strong multi-step reasoning and explanation capabilities on ScienceQA.

Examples where GPT-3 (CoT) generates correct answers and explanations.

Failure case I

In the following examples, GPT-3 (CoT) generates the correct answer, but the generated explanation is irrelevant, incorrect, or incomplete. This shows that GPT-3 (CoT) still has considerable difficulty generating long, logically consistent sequences.

Examples where GPT-3 (CoT) generates the correct answer but an incorrect explanation.

Failure case II

In the following four examples, GPT-3 (CoT) generates neither the correct answer nor a correct explanation. The reasons are: (1) current image captioning models cannot accurately describe the semantic content of schematic diagrams, tables, and similar images, so when an image is represented only by caption text, GPT-3 (CoT) cannot yet reliably answer questions whose context is a diagram or chart; (2) when GPT-3 (CoT) generates long sequences, it is prone to inconsistency or incoherence; (3) GPT-3 (CoT) cannot yet answer questions that require specific domain knowledge.

Examples where GPT-3 (CoT) generates incorrect answers and explanations.

4. Conclusion

The authors proposed ScienceQA, the first multimodal science question answering dataset with detailed explanations. ScienceQA contains 21,208 multiple-choice questions from primary and secondary school science subjects, covering three major subject areas and a wide variety of topics. Most questions are annotated with detailed background knowledge and explanations. ScienceQA evaluates a model's capabilities in multimodal understanding, multi-step reasoning, and interpretability. The authors evaluate different baseline models on ScienceQA and propose the GPT-3 (CoT) model, which generates the corresponding background knowledge and explanation along with its answer. Extensive experimental analysis and case studies provide useful insights for improving such models.

The above is the detailed content of The first multi-modal scientific question and answer data set with detailed explanations, deep learning model reasoning has a thinking chain. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1664

1664

14

14

1423

1423

52

52

1318

1318

25

25

1268

1268

29

29

1248

1248

24

24

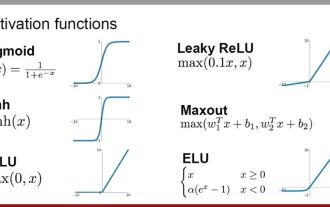

Analysis of commonly used AI activation functions: deep learning practice of Sigmoid, Tanh, ReLU and Softmax

Dec 28, 2023 pm 11:35 PM

Analysis of commonly used AI activation functions: deep learning practice of Sigmoid, Tanh, ReLU and Softmax

Dec 28, 2023 pm 11:35 PM

Activation functions play a crucial role in deep learning. They can introduce nonlinear characteristics into neural networks, allowing the network to better learn and simulate complex input-output relationships. The correct selection and use of activation functions has an important impact on the performance and training results of neural networks. This article will introduce four commonly used activation functions: Sigmoid, Tanh, ReLU and Softmax, starting from the introduction, usage scenarios, advantages, disadvantages and optimization solutions. Dimensions are discussed to provide you with a comprehensive understanding of activation functions. 1. Sigmoid function Introduction to SIgmoid function formula: The Sigmoid function is a commonly used nonlinear function that can map any real number to between 0 and 1. It is usually used to unify the

Methods and steps for using BERT for sentiment analysis in Python

Jan 22, 2024 pm 04:24 PM

Methods and steps for using BERT for sentiment analysis in Python

Jan 22, 2024 pm 04:24 PM

BERT is a pre-trained deep learning language model proposed by Google in 2018. The full name is BidirectionalEncoderRepresentationsfromTransformers, which is based on the Transformer architecture and has the characteristics of bidirectional encoding. Compared with traditional one-way coding models, BERT can consider contextual information at the same time when processing text, so it performs well in natural language processing tasks. Its bidirectionality enables BERT to better understand the semantic relationships in sentences, thereby improving the expressive ability of the model. Through pre-training and fine-tuning methods, BERT can be used for various natural language processing tasks, such as sentiment analysis, naming

Latent space embedding: explanation and demonstration

Jan 22, 2024 pm 05:30 PM

Latent space embedding: explanation and demonstration

Jan 22, 2024 pm 05:30 PM

Latent Space Embedding (LatentSpaceEmbedding) is the process of mapping high-dimensional data to low-dimensional space. In the field of machine learning and deep learning, latent space embedding is usually a neural network model that maps high-dimensional input data into a set of low-dimensional vector representations. This set of vectors is often called "latent vectors" or "latent encodings". The purpose of latent space embedding is to capture important features in the data and represent them into a more concise and understandable form. Through latent space embedding, we can perform operations such as visualizing, classifying, and clustering data in low-dimensional space to better understand and utilize the data. Latent space embedding has wide applications in many fields, such as image generation, feature extraction, dimensionality reduction, etc. Latent space embedding is the main

Beyond ORB-SLAM3! SL-SLAM: Low light, severe jitter and weak texture scenes are all handled

May 30, 2024 am 09:35 AM

Beyond ORB-SLAM3! SL-SLAM: Low light, severe jitter and weak texture scenes are all handled

May 30, 2024 am 09:35 AM

Written previously, today we discuss how deep learning technology can improve the performance of vision-based SLAM (simultaneous localization and mapping) in complex environments. By combining deep feature extraction and depth matching methods, here we introduce a versatile hybrid visual SLAM system designed to improve adaptation in challenging scenarios such as low-light conditions, dynamic lighting, weakly textured areas, and severe jitter. sex. Our system supports multiple modes, including extended monocular, stereo, monocular-inertial, and stereo-inertial configurations. In addition, it also analyzes how to combine visual SLAM with deep learning methods to inspire other research. Through extensive experiments on public datasets and self-sampled data, we demonstrate the superiority of SL-SLAM in terms of positioning accuracy and tracking robustness.

Super strong! Top 10 deep learning algorithms!

Mar 15, 2024 pm 03:46 PM

Super strong! Top 10 deep learning algorithms!

Mar 15, 2024 pm 03:46 PM

Almost 20 years have passed since the concept of deep learning was proposed in 2006. Deep learning, as a revolution in the field of artificial intelligence, has spawned many influential algorithms. So, what do you think are the top 10 algorithms for deep learning? The following are the top algorithms for deep learning in my opinion. They all occupy an important position in terms of innovation, application value and influence. 1. Deep neural network (DNN) background: Deep neural network (DNN), also called multi-layer perceptron, is the most common deep learning algorithm. When it was first invented, it was questioned due to the computing power bottleneck. Until recent years, computing power, The breakthrough came with the explosion of data. DNN is a neural network model that contains multiple hidden layers. In this model, each layer passes input to the next layer and

Understand in one article: the connections and differences between AI, machine learning and deep learning

Mar 02, 2024 am 11:19 AM

Understand in one article: the connections and differences between AI, machine learning and deep learning

Mar 02, 2024 am 11:19 AM

In today's wave of rapid technological changes, Artificial Intelligence (AI), Machine Learning (ML) and Deep Learning (DL) are like bright stars, leading the new wave of information technology. These three words frequently appear in various cutting-edge discussions and practical applications, but for many explorers who are new to this field, their specific meanings and their internal connections may still be shrouded in mystery. So let's take a look at this picture first. It can be seen that there is a close correlation and progressive relationship between deep learning, machine learning and artificial intelligence. Deep learning is a specific field of machine learning, and machine learning

From basics to practice, review the development history of Elasticsearch vector retrieval

Oct 23, 2023 pm 05:17 PM

From basics to practice, review the development history of Elasticsearch vector retrieval

Oct 23, 2023 pm 05:17 PM

1. Introduction Vector retrieval has become a core component of modern search and recommendation systems. It enables efficient query matching and recommendations by converting complex objects (such as text, images, or sounds) into numerical vectors and performing similarity searches in multidimensional spaces. From basics to practice, review the development history of Elasticsearch vector retrieval_elasticsearch As a popular open source search engine, Elasticsearch's development in vector retrieval has always attracted much attention. This article will review the development history of Elasticsearch vector retrieval, focusing on the characteristics and progress of each stage. Taking history as a guide, it is convenient for everyone to establish a full range of Elasticsearch vector retrieval.

AlphaFold 3 is launched, comprehensively predicting the interactions and structures of proteins and all living molecules, with far greater accuracy than ever before

Jul 16, 2024 am 12:08 AM

AlphaFold 3 is launched, comprehensively predicting the interactions and structures of proteins and all living molecules, with far greater accuracy than ever before

Jul 16, 2024 am 12:08 AM

Editor | Radish Skin Since the release of the powerful AlphaFold2 in 2021, scientists have been using protein structure prediction models to map various protein structures within cells, discover drugs, and draw a "cosmic map" of every known protein interaction. . Just now, Google DeepMind released the AlphaFold3 model, which can perform joint structure predictions for complexes including proteins, nucleic acids, small molecules, ions and modified residues. The accuracy of AlphaFold3 has been significantly improved compared to many dedicated tools in the past (protein-ligand interaction, protein-nucleic acid interaction, antibody-antigen prediction). This shows that within a single unified deep learning framework, it is possible to achieve