New research from Google and DeepMind: How does inductive bias affect model scaling?

Scaling of Transformer models has attracted considerable research interest in recent years. However, little is known about the scaling properties of the different inductive biases imposed by model architectures. It is often assumed that an improvement observed at one scale (compute, size, etc.) transfers to other scales and compute regions.

Understanding the interaction between architecture and scaling laws is crucial, and designing models that perform well at different scales is of great research significance. Several questions remain open: Do model architectures scale differently? If so, how does inductive bias affect scaling performance? How does it affect upstream (pre-training) and downstream (transfer) tasks?

In a recent paper, researchers at Google sought to understand the impact of inductive bias (architecture) on language model scaling. To do this, they pretrained and fine-tuned ten different model architectures across multiple compute regions and scales (from 15 million to 40 billion parameters). In total, they pretrained and fine-tuned more than 100 models of different architectures and sizes, and they present insights and challenges in scaling these ten architectures.

They also note that scaling these models is not as simple as it seems: the intricate details of scaling are intertwined with the architectural choices studied in the paper. For example, a distinguishing feature of Universal Transformers (and ALBERT) is parameter sharing. Compared with the standard Transformer, this architectural choice significantly warps scaling behavior, not only in terms of performance but also in terms of compute metrics such as FLOPs, speed, and number of parameters. In contrast, models like Switch Transformers differ in another way, with an unusual relationship between FLOPs and parameter count.
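To make the point about FLOPs and parameter counts concrete, here is a rough back-of-the-envelope sketch. It is not taken from the paper: the per-layer weight formula, the "about 2 FLOPs per weight per token" rule of thumb, and the function names are all simplifying assumptions, and the cost of attention over the sequence length, embeddings, biases, and router weights are ignored.

```python
def layer_weights(d_model: int, d_ff: int):
    """Approximate weights in one Transformer block (assumed formula)."""
    attention = 4 * d_model * d_model   # Q, K, V and output projections
    feed_forward = 2 * d_model * d_ff   # two dense layers
    return attention, feed_forward


def dense_stack(n_layers: int, d_model: int, d_ff: int):
    """Standard Transformer: every layer owns its weights."""
    attention, feed_forward = layer_weights(d_model, d_ff)
    params = n_layers * (attention + feed_forward)
    flops_per_token = 2 * params        # rule of thumb: ~2 FLOPs per weight
    return params, flops_per_token


def shared_stack(n_layers: int, d_model: int, d_ff: int):
    """Parameter sharing: one block's weights are reused at every layer."""
    attention, feed_forward = layer_weights(d_model, d_ff)
    params = attention + feed_forward   # stored once
    flops_per_token = 2 * n_layers * params  # but executed n_layers times
    return params, flops_per_token


def sparse_moe_stack(n_layers: int, d_model: int, d_ff: int, n_experts: int):
    """Sparse mixture of experts: each token uses one expert per layer."""
    attention, feed_forward = layer_weights(d_model, d_ff)
    params = n_layers * (attention + n_experts * feed_forward)
    flops_per_token = 2 * n_layers * (attention + feed_forward)
    return params, flops_per_token


# Hypothetical base-sized configuration, chosen only for illustration.
for name, result in [
    ("dense", dense_stack(12, 768, 3072)),
    ("shared", shared_stack(12, 768, 3072)),
    ("sparse MoE", sparse_moe_stack(12, 768, 3072, n_experts=8)),
]:
    print(f"{name:>10}: params={result[0]:,} flops/token={result[1]:,}")
```

Under these assumptions the three variants have the same FLOPs per token but very different parameter counts, which is why a comparison made on one axis (parameters) can look quite different on another (FLOPs).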

Specifically, the main contributions of the paper are as follows:

- The first derivation of scaling laws for different inductive biases and model architectures. The researchers found that the scaling coefficient varies significantly across models and note that this is an important consideration in model development. Of the ten architectures they considered, the vanilla Transformer showed the best scaling behavior, even though it was not the best in absolute terms in every compute region.

- The researchers observed that a model that works well in one compute region is not necessarily the best model in another. They also found that some models perform well in low-compute regions but are difficult to scale. This means it is difficult to get a complete picture of a model's scalability from point-by-point comparisons in a single compute region.

- The researchers found that, when scaling different model architectures, upstream pre-training perplexity may correlate poorly with downstream transfer. The underlying architecture and inductive bias are therefore also critical for downstream transfer.

- The researchers highlighted the difficulty of scaling certain architectures and showed that some models do not scale (or scale with a negative trend). They also found that linear-time attention models (such as Performer) tend to be difficult to scale.

Methods and Experiments

In Section 3 of the paper, the researchers outline the overall experimental setup and introduce the models evaluated in the experiments.

Table 1 below shows the main results of the paper, including the number of trainable parameters, FLOPs (for a single forward pass), and speed (steps per second). It also includes validation perplexity (upstream pre-training) and results on 17 downstream tasks.

Do all models scale the same way?

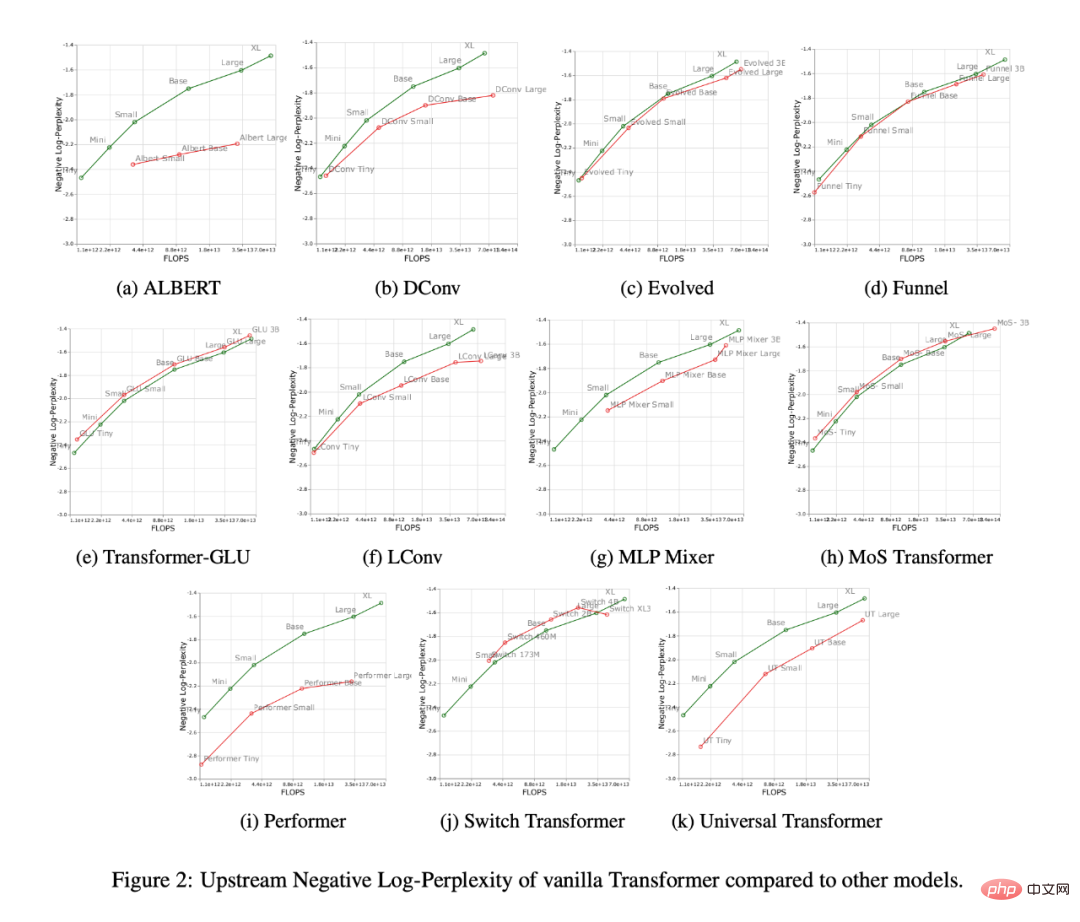

Figure 2 below shows the scaling behavior of all models as the number of FLOPs increases. The scaling behavior of each model is quite distinct, i.e. most of them scale differently from the standard Transformer. Perhaps the biggest finding here is that most models (e.g., LConv, Evolved Transformer) appear to perform on par with or better than the standard Transformer, but fail to scale with higher compute budgets.

Another interesting trend is that "linear" Transformers, such as Performer, fail to scale well. As shown in Figure 2i, going from base to large scale, pre-training perplexity drops by only 2.7%, compared with 8.4% for the vanilla Transformer.
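A figure like "2.7% versus 8.4%" can be read as a relative change between two model sizes. The helper below is a minimal sketch of that arithmetic. The perplexity values are made-up placeholders, not numbers from the paper, and the article does not say whether the paper computes the percentage on raw perplexity or on log-perplexity.

```python
def relative_drop(base_ppl: float, large_ppl: float) -> float:
    """Percentage by which perplexity falls from the base to the large model."""
    if base_ppl <= 0:
        raise ValueError("perplexity must be positive")
    return 100.0 * (base_ppl - large_ppl) / base_ppl


# Placeholder perplexities (hypothetical, for illustration only).
weak_scaler = relative_drop(base_ppl=10.0, large_ppl=9.73)
strong_scaler = relative_drop(base_ppl=10.0, large_ppl=9.16)
print(f"weak scaler:   {weak_scaler:.1f}% drop")
print(f"strong scaler: {strong_scaler:.1f}% drop")
```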

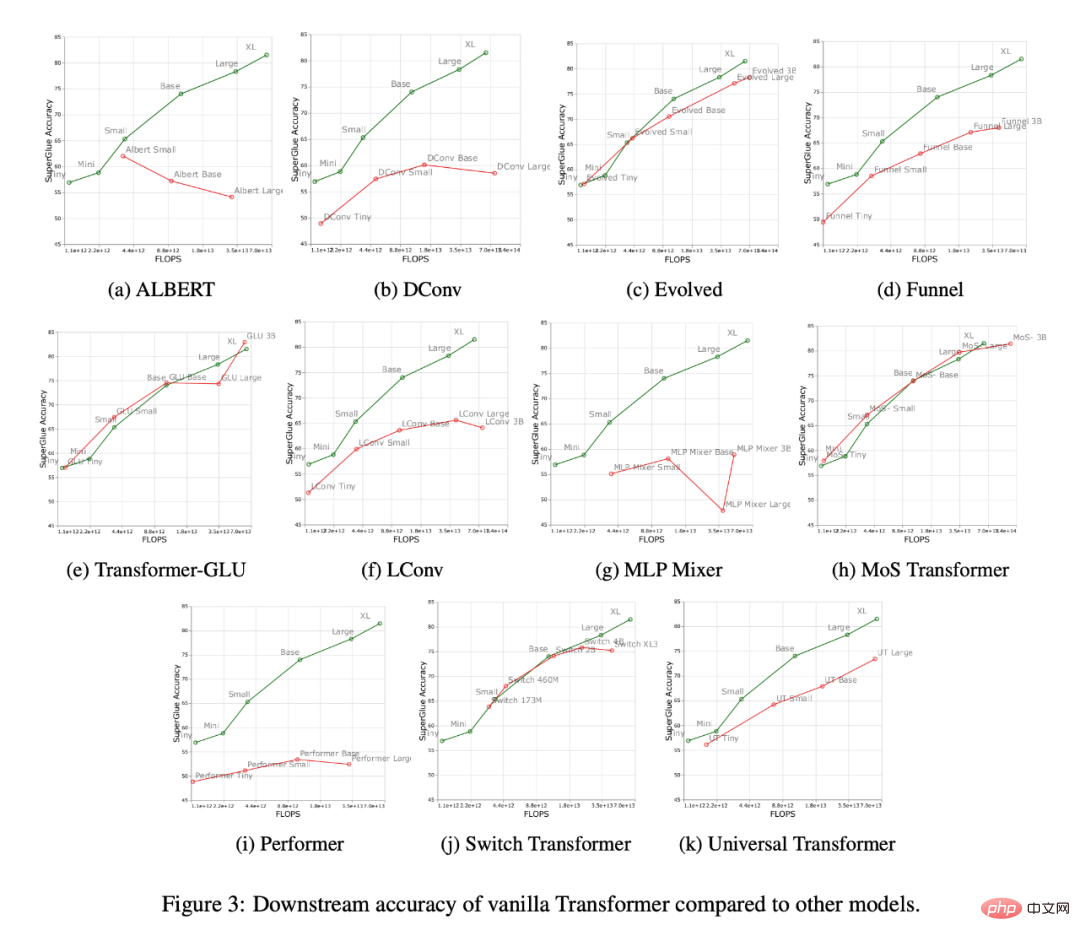

Figure 3 below shows the scaling curves of all models on downstream transfer tasks. Compared with the Transformer, most models have a different scaling curve, and the curve changes significantly on downstream tasks. It is worth noting that, for most models, the upstream and downstream scaling curves differ.

The researchers found that some models, such as Funnel Transformer and LConv, seemed to perform quite well upstream but suffered considerably downstream. For Performer, the gap between upstream and downstream performance appears to be even wider. Notably, the SuperGLUE downstream tasks often require pseudo cross-attention in the encoder, which convolution-based models cannot handle (Tay et al., 2021a).

The researchers therefore conclude that even models with good upstream performance may still have difficulty learning downstream tasks.

Is the best model different at each scale?

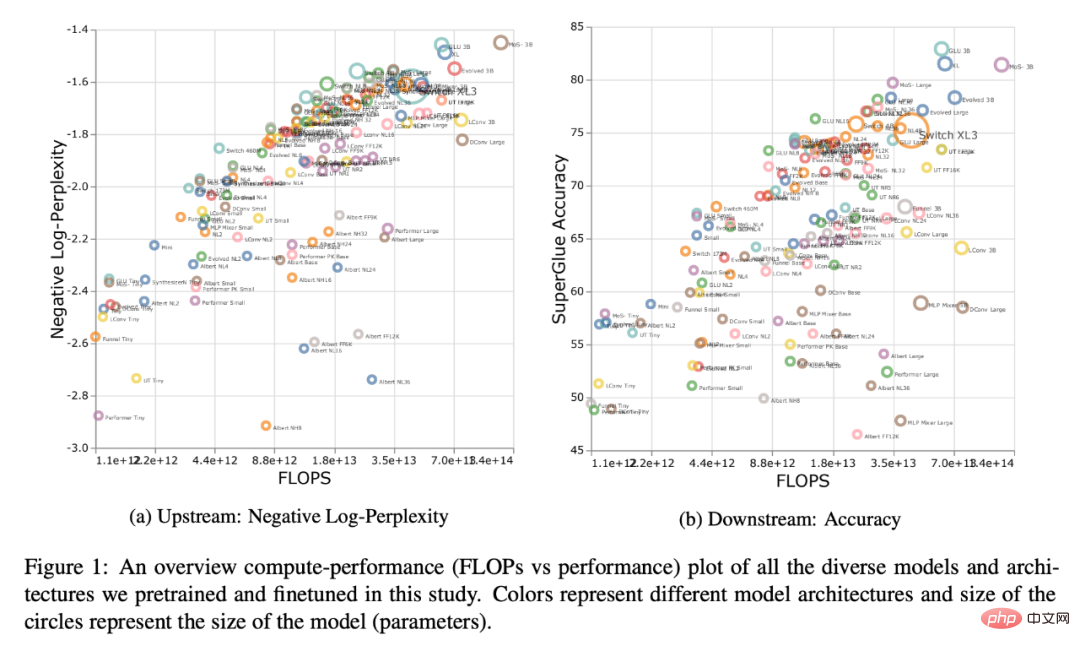

Figure 1 below shows the Pareto frontier when compute is plotted against upstream or downstream performance. The colors in the plot represent different models, and the best model can differ for each scale and compute region. This can also be seen in Figure 3 above. For example, the Evolved Transformer seems to perform just as well as the standard Transformer in the tiny to small region (downstream), but this changes quickly as the model is scaled up. The researchers observed the same with MoS-Transformer, which performed significantly better than the vanilla Transformer in some compute regions but not in others.
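As a rough illustration of how such a frontier can be computed, the sketch below keeps only the runs for which no cheaper (or equally cheap) run reaches a lower perplexity. The model names and numbers are hypothetical, and this is a generic Pareto-frontier routine, not the procedure used in the paper. For a higher-is-better metric such as downstream accuracy, one could pass the negated score instead.

```python
from typing import List, Tuple

Run = Tuple[str, float, float]  # (label, FLOPs, perplexity)


def pareto_frontier(runs: List[Run]) -> List[Run]:
    """Return runs that are not beaten by any run with equal or lower FLOPs."""
    frontier: List[Run] = []
    best_ppl = float("inf")
    # Visit runs from cheapest to most expensive; on ties, best perplexity first.
    for label, flops, ppl in sorted(runs, key=lambda r: (r[1], r[2])):
        if ppl < best_ppl:  # strictly better than everything cheaper
            frontier.append((label, flops, ppl))
            best_ppl = ppl
    return frontier


# Hypothetical runs: architecture B wins at low compute, A wins at high compute.
runs = [
    ("A-small", 1e9, 20.0),
    ("B-small", 1e9, 18.0),
    ("C-base", 5e9, 19.0),
    ("A-large", 1e10, 12.0),
    ("B-large", 1e10, 15.0),
]
print([label for label, _, _ in pareto_frontier(runs)])
# prints ['B-small', 'A-large']
```

In this toy data the frontier switches from architecture B to architecture A as compute grows, which is the kind of pattern the paragraph above describes.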

Scaling law of each model

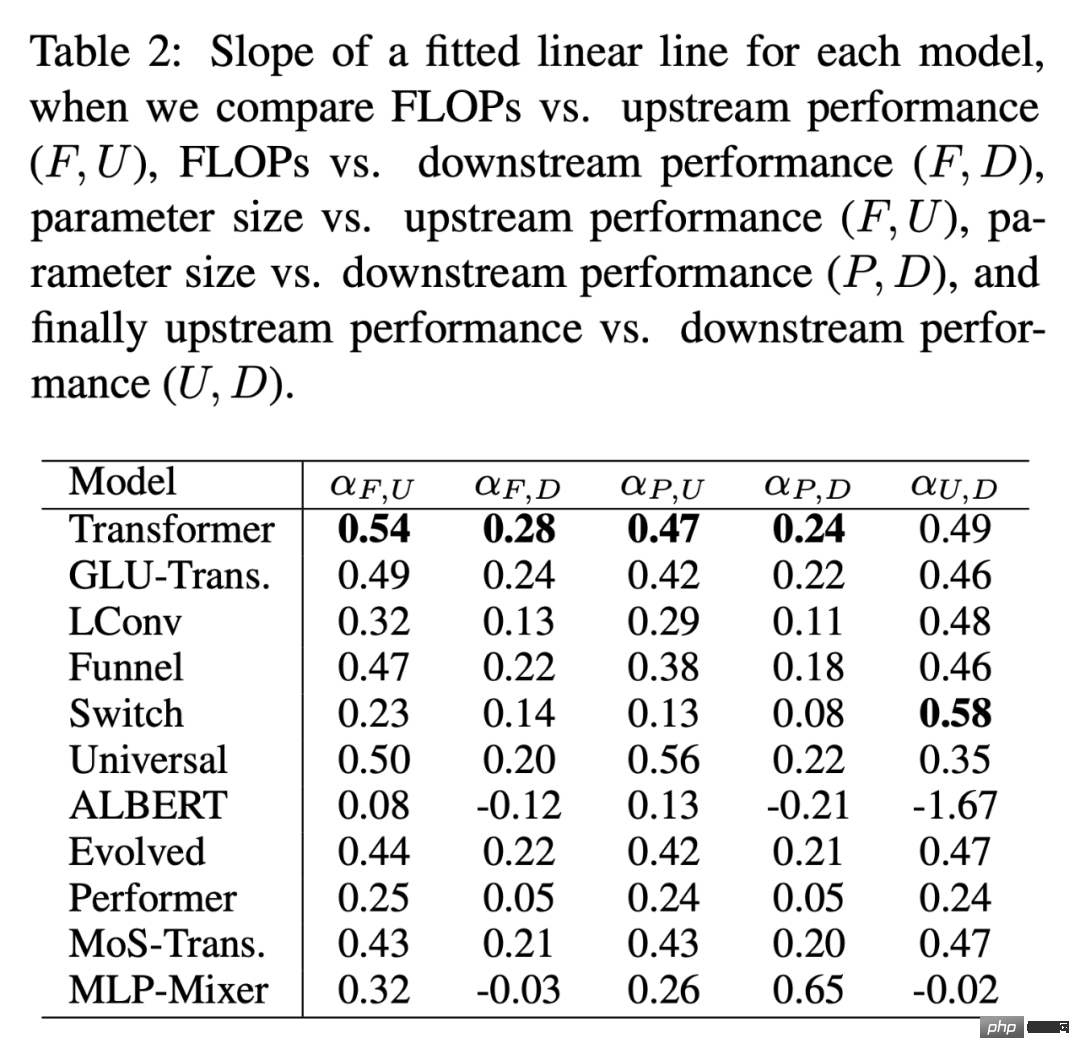

Table 2 below shows the slope α of the straight line fitted for each model in various settings. The researchers obtained α by plotting pairs of the following quantities against each other: F (FLOPs), U (upstream perplexity), D (downstream accuracy), and P (number of parameters). Generally speaking, α describes the scalability of the model, e.g. α_F,U is the slope obtained by plotting FLOPs against upstream performance. The only exception is α_U,D, which relates upstream to downstream performance; a high α_U,D value means the model's upstream gains transfer better to downstream tasks. Overall, the α values measure how well a model's performance responds to scaling.
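The slope α of such a fit can be sketched with ordinary least squares. The example below assumes the line is fitted in log-log space, which is a common convention for scaling laws but is not stated in this article; the data points are synthetic and `fit_alpha` is a hypothetical helper name.

```python
import math
from typing import Sequence, Tuple


def fit_alpha(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Least-squares slope and intercept of log(y) against log(x)."""
    log_x = [math.log(x) for x in xs]
    log_y = [math.log(y) for y in ys]
    n = len(log_x)
    mean_x = sum(log_x) / n
    mean_y = sum(log_y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(log_x, log_y))
    var = sum((a - mean_x) ** 2 for a in log_x)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Synthetic data following perplexity = 50 * FLOPs^(-0.05) exactly.
flops = [1e9, 1e10, 1e11, 1e12]
perplexity = [50.0 * f ** -0.05 for f in flops]

alpha, _ = fit_alpha(flops, perplexity)
print(f"fitted slope: {alpha:.3f}")
```

With perplexity on the y-axis, a more negative slope means perplexity falls faster as compute grows. A sign convention where larger α is better (for example by fitting negative log-perplexity) would flip the sign.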

Do scaling protocols affect model architectures in the same way?

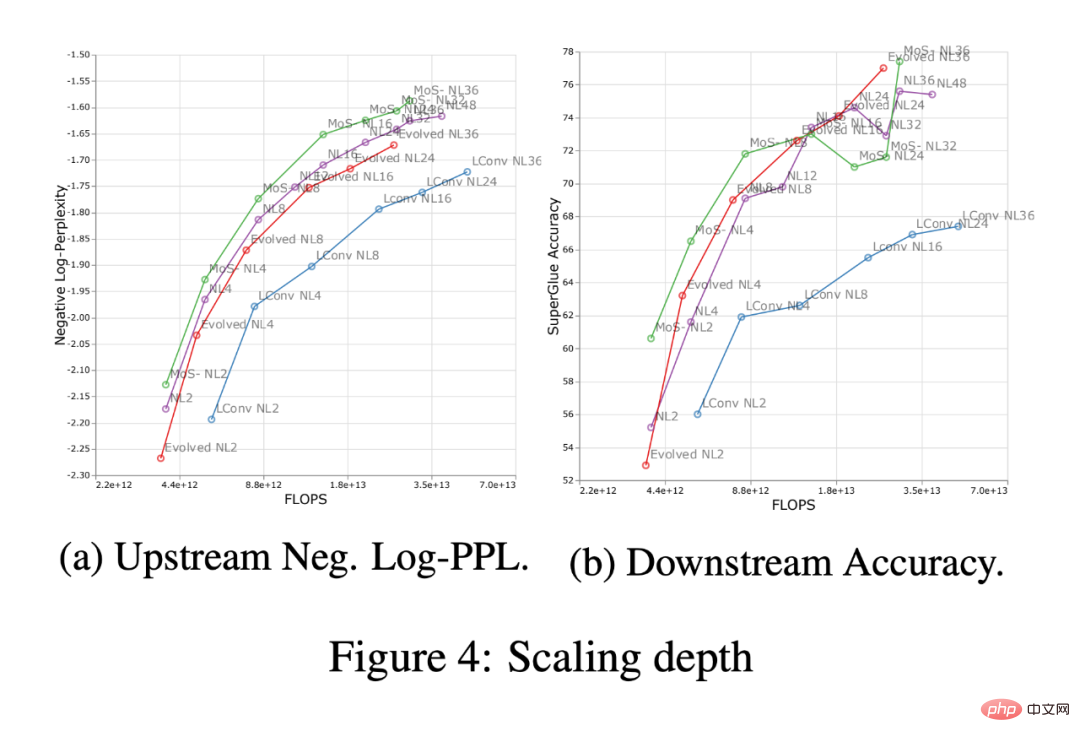

Figure 4 below shows the impact of scaling depth in four model architectures (MoS-Transformer, Transformer, Evolved Transformer, LConv).

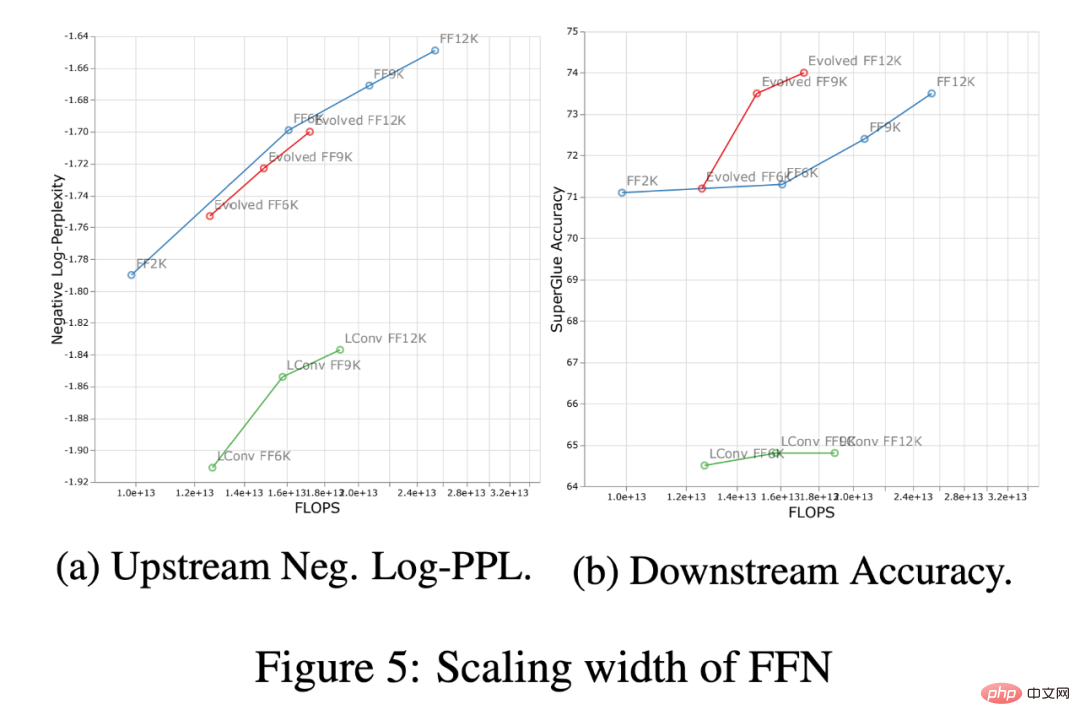

Figure 5 below shows the impact of scaling width across the same four architectures. First, on the upstream (negative log-perplexity) curves, although there are clear differences in absolute performance between architectures, the scaling trends remain very similar. Downstream, with the exception of LConv, depth scaling (Figure 4 above) appears to act the same way on most architectures. The Evolved Transformer also seems to benefit slightly more from width scaling. It is worth noting that depth scaling has a much greater impact on downstream scaling than width scaling.

For more research details, please refer to the original paper.
