Linguists are back! Start learning from 'pronunciation': this time the AI model has to teach itself

Getting computers to understand human language has long been one of the hardest problems in artificial intelligence.

Early natural language processing models typically relied on hand-designed features, which required specialist linguists to write patterns by hand. The results were disappointing, and AI research even went through a "winter" at one point.

Every time I fire a linguist, the performance of the speech recognizer goes up.

(Frederick Jelinek)

With statistical models and, later, large-scale pre-trained models, hand-crafted feature extraction is no longer necessary, but task-specific data annotation is still required. And the most critical problem remains: the trained models still do not understand human language.

So should we go back to the original form of language and study afresh how humans acquire the ability to use it?

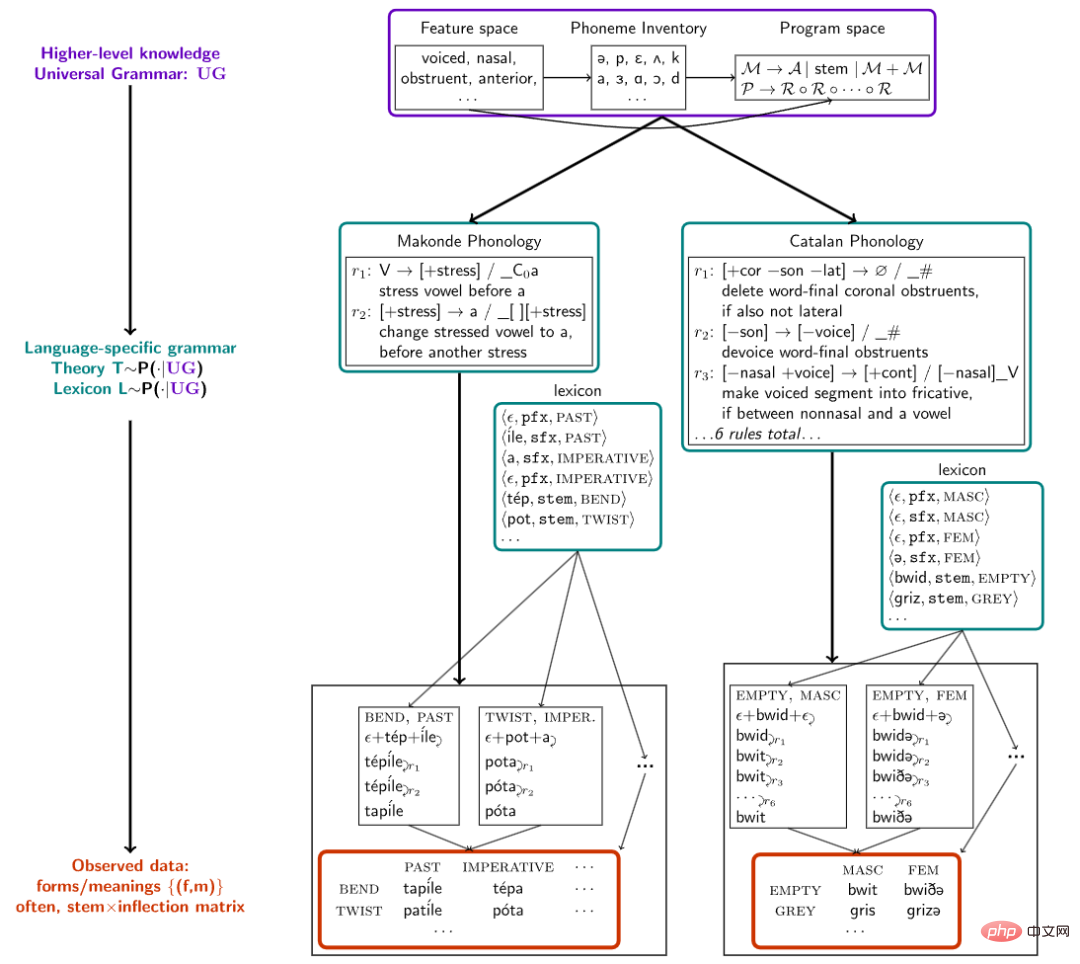

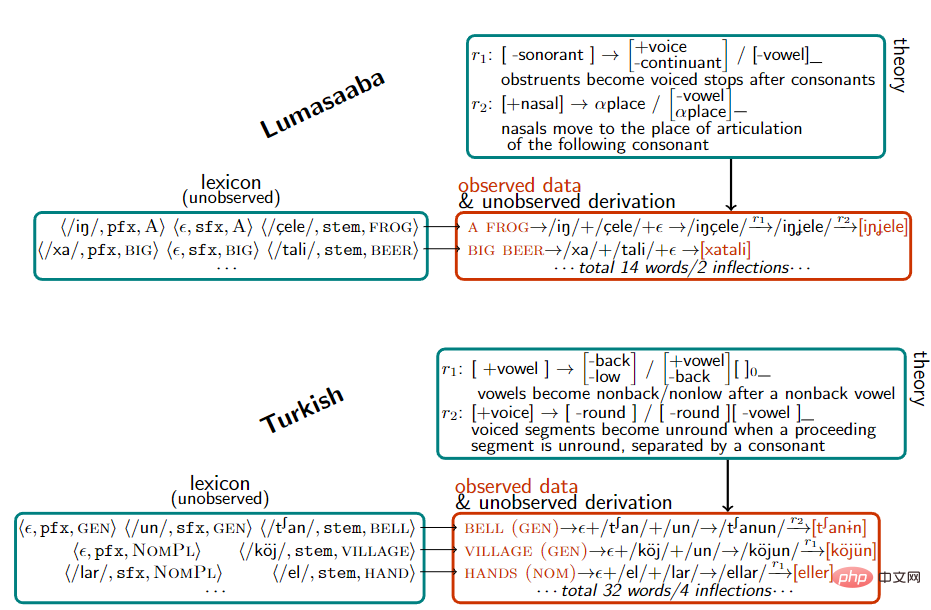

Researchers from Cornell University, MIT and McGill University recently published a paper in Nature Communications proposing a framework for synthesizing models of language algorithmically. Starting from one of the most basic layers of human language, morpho-phonology, they teach an AI system to learn language by constructing the morphology of a language directly from its sounds.

Paper link: https://www.nature.com/articles/s41467-022-32012-w

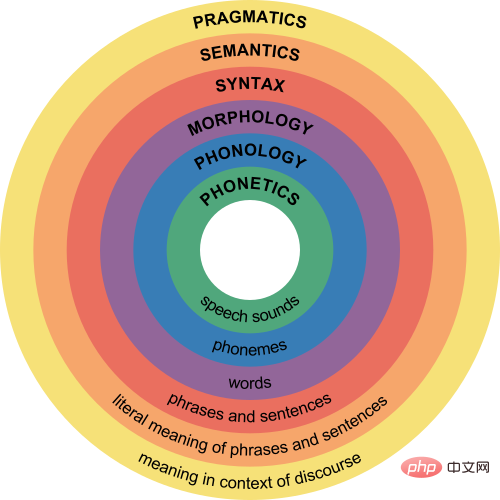

Morphophonology is a branch of linguistics that studies the sound changes that occur when morphemes (the smallest units of meaning) combine into words, and tries to provide a set of rules that predict the regular sound changes of phonemes in a language.

For example, the English plural morpheme is written -s or -es but has three pronunciations: [s], [z] and [əz]. Cats is pronounced /kæts/, dogs is pronounced /dagz/, and horses is pronounced /hɔrsəz/.

When humans learn how the plural is pronounced, they first recognize from morphology that the underlying plural suffix is /z/. Then, from phonology, they learn that the suffix changes depending on the final sound of the stem: it becomes /s/ after a voiceless consonant (as in cats) and /əz/ after a sibilant (as in horses).
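A minimal Python sketch of this two-step analysis, assuming a simplified transcription with one character per phoneme and hand-picked phoneme classes (`SIBILANTS`, `VOICELESS` and `pluralize` are illustrative names, not from the paper): morphology supplies the underlying suffix /z/, and two phonological rules adjust it.

```python
# Simplified phoneme classes (an assumption; real analyses use feature bundles).
SIBILANTS = ("s", "z", "ʃ", "ʒ")
VOICELESS = ("p", "t", "k", "f", "θ", "s", "ʃ")


def pluralize(stem: str) -> str:
    """Derive the spoken plural from a stem plus the underlying suffix /z/."""
    # Morphology: the plural suffix is underlyingly /z/.
    suffix = "z"
    # Phonology, rule 1: after a sibilant, a schwa separates stem and suffix.
    if stem.endswith(SIBILANTS):
        return stem + "ə" + suffix
    # Phonology, rule 2: after a voiceless consonant, the suffix devoices to /s/.
    if stem.endswith(VOICELESS):
        return stem + "s"
    return stem + suffix


for stem in ("kæt", "dag", "hɔrs"):
    print(stem, "->", pluralize(stem))
```

The sibilant check comes first on purpose: /s/ is itself voiceless, so in this sketch hɔrs would otherwise fall into the devoicing branch and come out as hɔrss.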

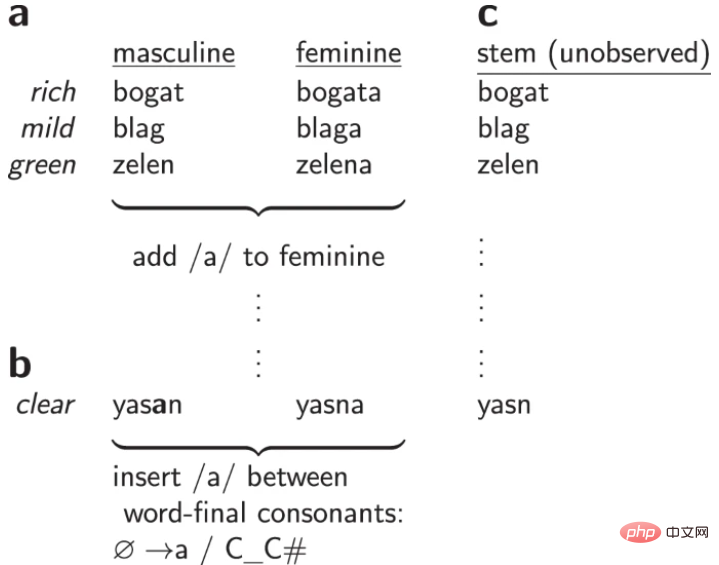

Other languages have similar morphophonological rules. From phonology textbooks, the researchers collected 70 datasets covering 58 languages, each containing only dozens to hundreds of words and only a few grammatical phenomena. The experiments showed that this method of discovering grammatical structure in natural language can also model the process by which infants learn language.

Using hierarchical Bayesian inference over these datasets, the researchers found that the model can acquire new morphophonological rules from just one or a few examples, and can extract cross-linguistic patterns and express them in a compact, human-understandable form.

Let the AI model be a "linguist"

A hallmark of human intelligence is the ability to build theories that explain the world. For example, once a natural language has taken shape, linguists can summarize it as a set of rules that help children learn that language more quickly; current AI models, by contrast, cannot summarize rules into a theoretical framework that others can understand.

Before building a model, one core question has to be answered: how should a word be described? Learning a word involves understanding its concept, intent, usage, pronunciation and meaning.

When building the lexicon, the researchers represented each word as a pair of a pronunciation and a meaning. For example, open is represented as ⟨/opɛn/, [stem: OPEN]⟩, the past tense as ⟨/d/, [tense: PAST]⟩, and their combination opened as ⟨/opɛnd/, [stem: OPEN, tense: PAST]⟩.
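The pair representation can be sketched in Python; `Word` and `concatenate` are hypothetical names, and combining morphemes by plain string concatenation plus merging of meaning features is a simplifying assumption made for illustration (phonological rules would then apply to the concatenated form).

```python
from typing import Dict, NamedTuple


class Word(NamedTuple):
    """A (phonological form, meaning) pair."""
    form: str                 # pronunciation, one character per phoneme
    meaning: Dict[str, str]   # feature bundle such as {"stem": "OPEN"}


def concatenate(*parts: Word) -> Word:
    """Combine morphemes: join their forms and merge their meaning features."""
    form = "".join(part.form for part in parts)
    meaning: Dict[str, str] = {}
    for part in parts:
        meaning.update(part.meaning)
    return Word(form, meaning)


OPEN = Word("opɛn", {"stem": "OPEN"})
PAST = Word("d", {"tense": "PAST"})
opened = concatenate(OPEN, PAST)
print(opened)
```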

With such a dataset in hand, the researchers built a model that, given a set of these pairs, uses maximum a posteriori (MAP) inference to find the grammar rules that best explain how the words change form.
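A toy illustration of MAP selection, under several assumptions that are ours rather than the paper's: the stems are held fixed instead of being inferred jointly with the rules, the candidate grammars are hand-written, the prior is taken to be proportional to 2^(-size) so that smaller grammars are preferred, and the likelihood is noise-free (a grammar either reproduces every observed form or is ruled out).

```python
import math
from typing import Callable, Dict, List, Tuple

# (description length in bits, function mapping a stem to its plural); sizes are made up.
Grammar = Tuple[int, Callable[[str], str]]

VOICELESS = ("p", "t", "k", "f", "θ", "s", "ʃ")
SIBILANTS = ("s", "z", "ʃ", "ʒ")

# Observed (stem, plural) pairs; the stems play the role of a fixed lexicon here.
DATA: List[Tuple[str, str]] = [("kæt", "kæts"), ("dag", "dagz"), ("hɔrs", "hɔrsəz")]


def full_rules(stem: str) -> str:
    if stem.endswith(SIBILANTS):
        return stem + "əz"
    if stem.endswith(VOICELESS):
        return stem + "s"
    return stem + "z"


# Hypothetical candidate grammars, from too simple to pure memorization.
CANDIDATES: Dict[str, Grammar] = {
    "always_z": (1, lambda stem: stem + "z"),
    "devoicing_only": (2, lambda stem: stem + ("s" if stem.endswith(VOICELESS) else "z")),
    "full": (3, full_rules),
    "memorize": (2 * len(DATA), lambda stem: dict(DATA).get(stem, stem + "z")),
}


def log_posterior(grammar: Grammar, data: List[Tuple[str, str]]) -> float:
    size, generate = grammar
    log_prior = -size * math.log(2)  # assumed prior: P(T) proportional to 2 ** -size
    # Noise-free likelihood: 1 if every observed plural is reproduced, else 0.
    consistent = all(generate(stem) == plural for stem, plural in data)
    return log_prior + (0.0 if consistent else -math.inf)


def map_grammar(candidates: Dict[str, Grammar], data: List[Tuple[str, str]]) -> str:
    """Return the name of the candidate with the highest log posterior."""
    return max(candidates, key=lambda name: log_posterior(candidates[name], data))


print(map_grammar(CANDIDATES, DATA))  # full
```

Memorizing the data also fits it perfectly, but the simplicity prior makes the three-rule grammar the MAP choice.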

Phonemes (atomic sounds) are represented as vectors of binary features; for example, /m/ and /n/ are both nasals, i.e. [+nasal]. Phonological rules are then defined over this feature space.
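A small sketch of feature vectors and the natural classes they define. The five features and their values for eight English consonants are a simplified, illustrative choice (an assumption, not the paper's feature system), and `natural_class` is a hypothetical helper that returns every phoneme matching a partial feature specification.

```python
from typing import Dict, Set, Tuple

FEATURE_NAMES = ("voice", "nasal", "sonorant", "continuant", "labial")

# Each phoneme is a binary feature vector in FEATURE_NAMES order.
# Toy values for a handful of English consonants (a simplification).
VECTORS: Dict[str, Tuple[int, ...]] = {
    "p": (0, 0, 0, 0, 1),
    "b": (1, 0, 0, 0, 1),
    "t": (0, 0, 0, 0, 0),
    "d": (1, 0, 0, 0, 0),
    "s": (0, 0, 0, 1, 0),
    "z": (1, 0, 0, 1, 0),
    "m": (1, 1, 1, 0, 1),
    "n": (1, 1, 1, 0, 0),
}
INDEX = {name: i for i, name in enumerate(FEATURE_NAMES)}


def natural_class(spec: Dict[str, int]) -> Set[str]:
    """Return every phoneme whose vector agrees with all features in spec."""
    return {
        ph for ph, vec in VECTORS.items()
        if all(vec[INDEX[feature]] == value for feature, value in spec.items())
    }


print(sorted(natural_class({"nasal": 1})))                 # ['m', 'n']
print(sorted(natural_class({"voice": 1, "sonorant": 0})))  # ['b', 'd', 'z']
```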

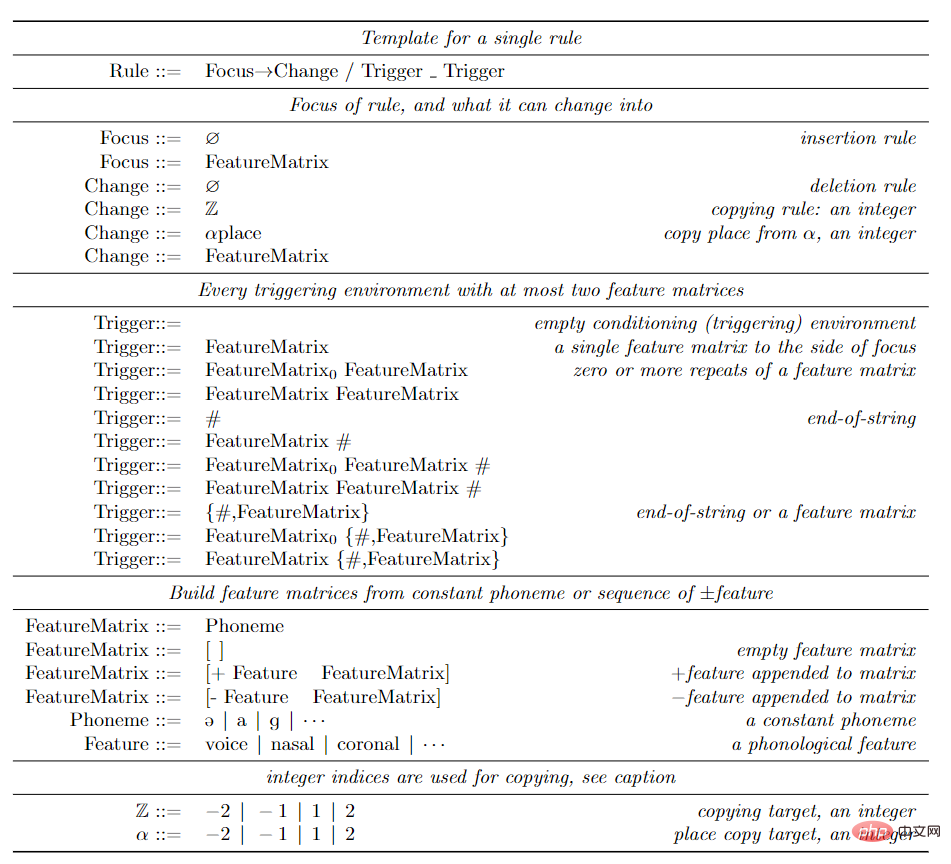

The researchers use a classic rule formalism, context-dependent rewrite rules, sometimes called SPE-style rules because they are used extensively in The Sound Pattern of English.

Each rule is written as

(focus) → (structural_change) / (left_trigger) _ (right_trigger)

which means that whenever the left and right trigger environments occur immediately to the left and right of the focus, the focus phoneme is transformed according to the structural change.

Each trigger environment specifies a conjunction of features (and therefore denotes a set of phonemes). For example, in English a word-final obstruent such as /d/ becomes voiceless (/t/) whenever the preceding phoneme is a voiceless obstruent, [-voice -sonorant]. The rule is written [-sonorant] → [-voice] / [-voice -sonorant] _ #. Applying this rule to walked changes its pronunciation from /wɔkd/ to /wɔkt/.
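The rule format can be sketched as a small Python interpreter, assuming a toy feature table that only distinguishes the phonemes used here (`FEATURES`, `apply_rule` and the other names are hypothetical). A rule matches the focus features, checks the left and right triggers (with "#" standing for the word boundary), and swaps in the phoneme whose features equal the focus's features overridden by the structural change; all positions are rewritten simultaneously.

```python
from typing import Dict, List, Union

Features = Dict[str, bool]
# A trigger is None (no condition), "#" (word boundary) or a feature bundle.
Trigger = Union[None, str, Features]

# Toy feature table (an assumption): just enough features to tell these phonemes apart.
FEATURES: Dict[str, Features] = {
    "w": {"consonantal": False, "syllabic": False, "sonorant": True, "voice": True, "coronal": False},
    "ɔ": {"consonantal": False, "syllabic": True, "sonorant": True, "voice": True, "coronal": False},
    "k": {"consonantal": True, "syllabic": False, "sonorant": False, "voice": False, "coronal": False},
    "g": {"consonantal": True, "syllabic": False, "sonorant": False, "voice": True, "coronal": False},
    "t": {"consonantal": True, "syllabic": False, "sonorant": False, "voice": False, "coronal": True},
    "d": {"consonantal": True, "syllabic": False, "sonorant": False, "voice": True, "coronal": True},
}


def matches(ph: str, spec: Features) -> bool:
    return all(FEATURES[ph][f] == v for f, v in spec.items())


def context_ok(word: List[str], j: int, trigger: Trigger) -> bool:
    if trigger is None:
        return True
    if trigger == "#":  # position j must lie outside the word
        return j < 0 or j >= len(word)
    return 0 <= j < len(word) and matches(word[j], trigger)


def change(ph: str, delta: Features) -> str:
    """Find the phoneme whose features equal ph's features with delta applied."""
    target = {**FEATURES[ph], **delta}
    for cand, feats in FEATURES.items():
        if feats == target:
            return cand
    raise ValueError(f"no phoneme has features {target}")


def apply_rule(word: List[str], focus: Features, change_to: Features,
               left: Trigger = None, right: Trigger = None) -> List[str]:
    """Apply focus -> change_to / left _ right at every matching position at once."""
    out = list(word)
    for i, ph in enumerate(word):
        if matches(ph, focus) and context_ok(word, i - 1, left) and context_ok(word, i + 1, right):
            out[i] = change(ph, change_to)
    return out


# [-sonorant] -> [-voice] / [-voice -sonorant] _ #
RULE = dict(focus={"sonorant": False}, change_to={"voice": False},
            left={"voice": False, "sonorant": False}, right="#")
print("".join(apply_rule(list("wɔkd"), **RULE)))  # wɔkt
print("".join(apply_rule(list("wɔgd"), **RULE)))  # wɔgd (the /g/ is voiced, so no change)
```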

When such rules are constrained not to apply cyclically to their own outputs, the rules and the lexicon correspond to 2-way rational functions, which in turn correspond to finite-state transductions. It has been argued that the space of finite-state transductions is expressive enough to cover the known empirical phenomena of morphophonology, and that it marks the limit of the descriptive power that phonological theory actually uses in practice.
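To make the correspondence concrete, here is a hand-compiled sketch (an illustration, not the paper's construction) of the devoicing rule restricted to /d/, written as a deterministic finite-state transducer with three states and a final-output function. Because a left-to-right machine cannot yet know whether a /d/ is word-final, it buffers the /d/ in a pending state and decides at the next symbol or at the end of input. The phoneme set `VOICELESS_OBSTRUENTS` and the function name are assumptions.

```python
VOICELESS_OBSTRUENTS = set("ptkfθsʃ")

# Output emitted when the input ends in each state (the final-output function).
FINAL_OUTPUT = {"other": "", "after_voiceless": "", "pending_d": "t"}


def devoice_fst(word: str) -> str:
    """Deterministic left-to-right transducer for d -> t / [voiceless obstruent] _ #."""
    state = "other"
    out = []
    for ph in word:
        if state == "pending_d":
            # The buffered /d/ was not word-final, so it surfaces unchanged;
            # /d/ is voiced, so processing continues as in state "other".
            out.append("d")
            state = "other"
        if state == "after_voiceless" and ph == "d":
            # Delay the output: only the end of the word decides between /d/ and /t/.
            state = "pending_d"
            continue
        out.append(ph)
        state = "after_voiceless" if ph in VOICELESS_OBSTRUENTS else "other"
    return "".join(out) + FINAL_OUTPUT[state]


for w in ("wɔkd", "wɔgd", "wɔkdɔ"):
    print(w, "->", devoice_fst(w))
```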

To learn such grammars, the researchers used Bayesian Program Learning (BPL). Each grammar T is modeled as a program in a programming language that captures the domain-specific constraints of the problem space; these constraints, the structure shared by all languages, play the role of universal grammar. The approach can be seen as a modern instance of a long-standing tradition in linguistics that uses human-understandable generative representations to formalize universal grammar.

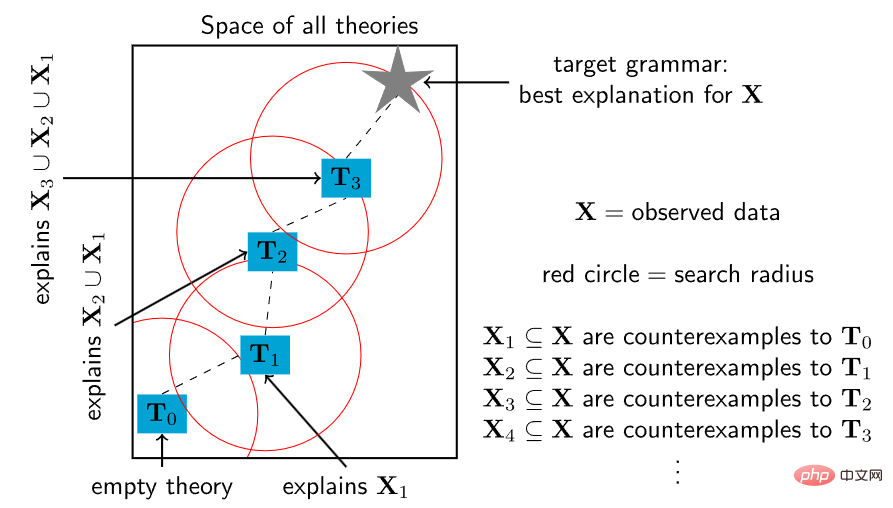

Once the problem BPL needs to solve is defined, two obstacles remain. The space of all programs is infinite and comes with no guidance on how to search it, and it lacks the local smoothness that local optimization algorithms such as gradient descent or Markov chain Monte Carlo exploit. The researchers therefore adopted a constraint-based program synthesis strategy: they recast the optimization problem as a combinatorial constraint satisfaction problem and solved it with a Boolean satisfiability (SAT) solver.

These solvers perform an exhaustive but relatively efficient search and are guaranteed to find an optimal solution given enough time. The Sketch program synthesizer can find the smallest grammar consistent with a given dataset, but only subject to an upper bound on grammar size.

In practice, however, the exhaustive search used by SAT solvers cannot scale to the large number of rules needed to explain large corpora.

To scale the solver up to large, complex theories, the researchers drew inspiration from a basic feature shared by children acquiring language and scientists building theories.

Children do not learn language overnight, but gradually enrich their grasp of grammar and vocabulary through intermediate stages of language development. Likewise, a complex scientific theory may begin with a simple conceptual core and then gradually develop to encompass an increasing number of linguistic phenomena.

Based on this idea, the researchers designed a program synthesis algorithm that starts from a small program and repeatedly uses the SAT solver to find small modifications that let it explain more and more data. Specifically, it finds a counterexample to the current theory and then uses the solver to exhaustively explore the space of all small modifications to the theory that can accommodate that counterexample.
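A toy version of this counterexample-driven loop, under loud assumptions: the pool of candidate rules, the hypothetical names (`RULES`, `neighbors`, `synthesize`) and the data are invented for illustration; a "small modification" here means inserting or deleting one rule; and the exhaustive search over those edits is done by brute-force enumeration rather than a SAT solver.

```python
from typing import Callable, Dict, List, Optional, Tuple

SIBILANTS = "szʃʒ"
VOICELESS = "ptkfθsʃ"


def epenthesis(w: str) -> str:
    """z -> əz / [sibilant] _ #"""
    if len(w) >= 2 and w[-1] == "z" and w[-2] in SIBILANTS:
        return w[:-1] + "əz"
    return w


def devoicing(w: str) -> str:
    """z -> s / [voiceless] _ #"""
    if len(w) >= 2 and w[-1] == "z" and w[-2] in VOICELESS:
        return w[:-1] + "s"
    return w


def final_deletion(w: str) -> str:
    """A distractor rule: delete a word-final z."""
    return w[:-1] if w.endswith("z") else w


RULES: Dict[str, Callable[[str], str]] = {
    "epenthesis": epenthesis,
    "devoicing": devoicing,
    "final_deletion": final_deletion,
}
Theory = Tuple[str, ...]  # an ordered list of rule names
Datum = Tuple[str, str]   # (stem, observed plural)


def generate(theory: Theory, stem: str) -> str:
    w = stem + "z"        # underlying form: stem + plural /z/
    for name in theory:   # rules apply in order
        w = RULES[name](w)
    return w


def explains(theory: Theory, data: List[Datum]) -> bool:
    return all(generate(theory, stem) == plural for stem, plural in data)


def neighbors(theory: Theory) -> List[Theory]:
    """All theories one edit away: delete one rule, or insert one unused rule anywhere."""
    result = [theory[:i] + theory[i + 1:] for i in range(len(theory))]
    for name in RULES:
        if name not in theory:
            result += [theory[:i] + (name,) + theory[i:] for i in range(len(theory) + 1)]
    return result


def synthesize(data: List[Datum]) -> Optional[Theory]:
    theory: Theory = ()  # start from the smallest possible theory
    seen: List[Datum] = []
    for datum in data:
        seen.append(datum)
        if explains(theory, [datum]):
            continue
        # Counterexample: try every small edit, keep the smallest that fits all data so far.
        fixes = [t for t in neighbors(theory) if explains(t, seen)]
        if not fixes:
            return None  # this toy simply gives up when no single edit suffices
        theory = min(fixes, key=len)
    return theory


DATA: List[Datum] = [("kæt", "kæts"), ("dag", "dagz"), ("hɔrs", "hɔrsəz")]
print(synthesize(DATA))  # ('epenthesis', 'devoicing')
```

The loop first settles on devoicing alone after seeing kæt, and only extends the theory when hɔrs arrives; epenthesis has to be inserted before devoicing, since /s/ is itself voiceless.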

This heuristic, however, gives up the completeness guarantee of the SAT solver: although it repeatedly calls a complete and exact SAT solver, it is not guaranteed to find an optimal solution. In exchange, each call is much easier than optimizing over the entire dataset at once. Constraining each new theory to stay close to its predecessor in theory space shrinks the constraint satisfaction problem polynomially, and because SAT solvers take exponential time in the worst case, this shrinks the search time exponentially.
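As a rough, hypothetical illustration of why a polynomial shrink yields an exponential saving (the variable counts are made up, not taken from the paper): if a SAT instance with $n$ Boolean variables costs on the order of $2^n$ steps in the worst case, then restricting the search to an instance with $m < n$ variables divides that worst-case bound by $2^{n-m}$. For example, shrinking $n = 400$ variables to $m = \sqrt{n} = 20$:

$$
\frac{2^{n}}{2^{m}} = 2^{n-m}, \qquad n = 400,\; m = \sqrt{n} = 20 \;\Rightarrow\; \frac{2^{400}}{2^{20}} = 2^{380}
$$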

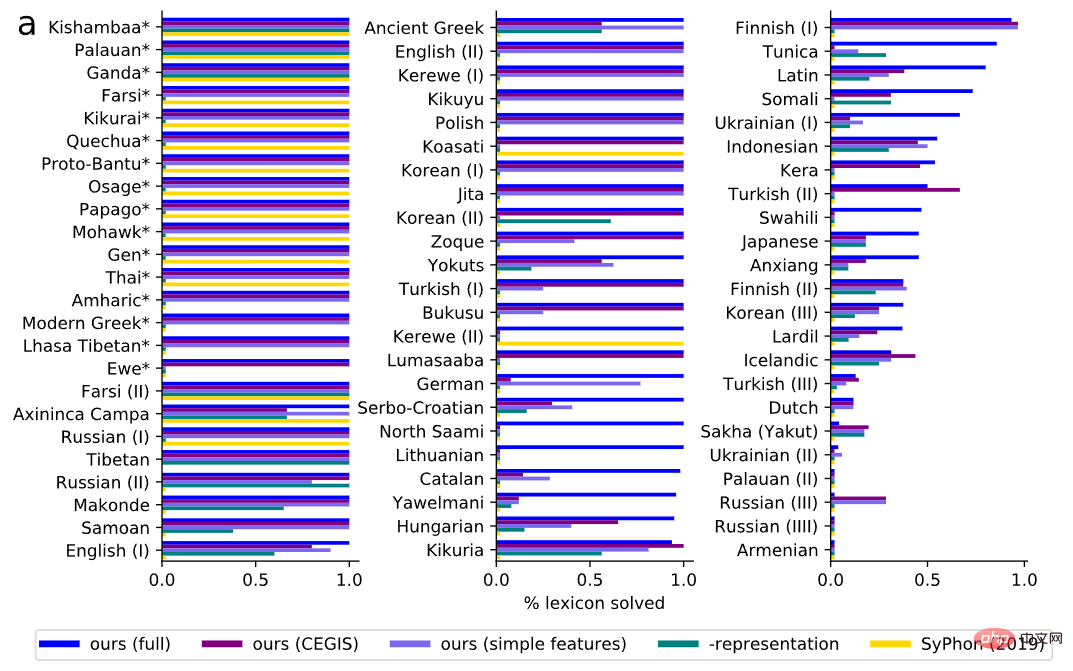

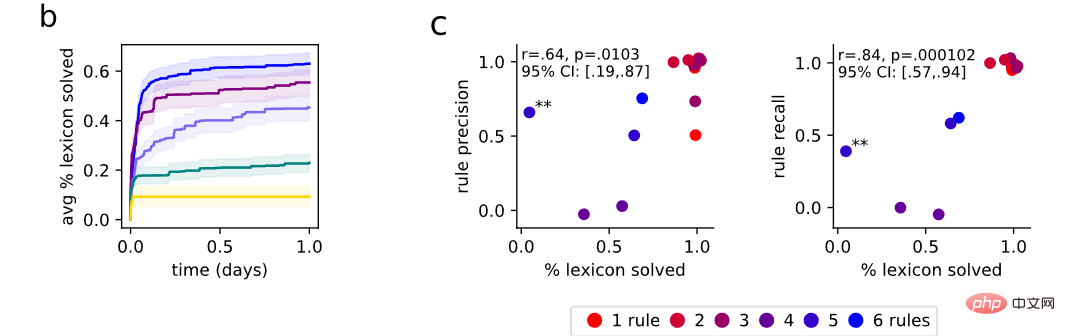

For the evaluation, the researchers collected 70 problems from linguistics textbooks, each of which requires synthesizing a theory of some part of a natural language. The problems vary in difficulty and cover a wide range of natural-language phenomena.

The languages are diverse and include tone languages. For example, in Kerewe (a Bantu language of Tanzania), "to count" is /kubala/, but "to count it" is /kukíbála/, where the acute accent marks high tone.

There are also languages with vowel harmony. For example, Turkish has /el/ and /tʃan/, meaning "hand" and "bell", with the plurals /el-ler/ and /tʃan-lar/. Many other phenomena appear as well, such as assimilation and extensional forms.
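A minimal sketch of the Turkish plural alternation, assuming the article's transcriptions and considering only the two-way front/back harmony that selects -ler or -lar; the vowel sets are simplified and `turkish_plural` is a hypothetical name.

```python
# Vowel classes for two-way (front/back) harmony; simplified, an assumption.
FRONT_VOWELS = set("eiöü")
BACK_VOWELS = set("aıou")


def turkish_plural(stem: str) -> str:
    """Attach -ler or -lar according to the backness of the stem's last vowel."""
    for ch in reversed(stem):
        if ch in FRONT_VOWELS:
            return stem + "-ler"
        if ch in BACK_VOWELS:
            return stem + "-lar"
    raise ValueError(f"no vowel found in {stem!r}")


for stem in ("el", "tʃan"):
    print(stem, "->", turkish_plural(stem))
```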

In the evaluation, the researchers first measured the model's ability to discover the correct lexicon. Compared with the ground-truth lexicons, the model found a grammar that correctly matched the problem's entire lexicon in 60% of the benchmarks, and correctly explained a large portion of the lexicon in 79% of the problems.

Typically, the correct lexicon for each problem is more clearly determined than the correct rules: any rules that generate the full data from the correct lexicon must be observationally equivalent, on that data, to the underlying rules. Agreement with the ground-truth lexicon can therefore serve as a measure of whether the synthesized rules behave correctly on the data, and this measure should correlate with the quality of the rules.

To test this hypothesis, the researchers randomly selected 15 problems and had a professional linguist score the discovered rules, measuring both recall (the proportion of actual phonological rules that were correctly recovered) and precision (the proportion of recovered rules that actually occur). Both precision and recall turned out to be positively correlated with the accuracy of the lexicon.

When the system gets the whole lexicon correct, it rarely introduces irrelevant rules (high precision) and almost always recovers all of the correct rules (high recall).
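The two metrics can be written out as a short sketch; the rule labels in the example are hypothetical placeholders, not results from the paper.

```python
from typing import Set, Tuple


def precision_recall(recovered: Set[str], gold: Set[str]) -> Tuple[float, float]:
    """Precision: share of recovered rules that are real.
    Recall: share of real rules that were recovered."""
    correct = len(recovered & gold)
    precision = correct / len(recovered) if recovered else 0.0
    recall = correct / len(gold) if gold else 0.0
    return precision, recall


# Hypothetical rule labels, for illustration only.
gold = {"final_devoicing", "epenthesis", "vowel_harmony", "nasal_assimilation"}
recovered = {"final_devoicing", "epenthesis", "spurious_deletion"}
p, r = precision_recall(recovered, gold)
print(f"precision={p:.2f} recall={r:.2f}")  # precision=0.67 recall=0.50
```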
