Is GPT-4's research path hopeless? Yann LeCun sentences autoregression to death

Yann LeCun's view is indeed a bit bold.

"No one in their right mind will use autoregressive models five years from now." Turing Award winner Yann LeCun recently opened a debate with this unusual statement. Autoregression is precisely the learning paradigm on which the currently popular GPT family of models relies.

Of course, autoregressive models are not the only target of LeCun's criticism. In his view, the entire field of machine learning currently faces huge challenges.

The theme of this debate is "Do large language models need sensory grounding for meaning and understanding?" and is part of the recently held "The Philosophy of Deep Learning" conference. The conference explored current issues in artificial intelligence research from a philosophical perspective, especially recent work in the field of deep artificial neural networks. Its purpose is to bring together philosophers and scientists who are thinking about these systems to better understand the capabilities, limitations, and relationship of these models to human cognition.

According to his debate slides, Yann LeCun kept to his usual sharp style, bluntly declaring "Machine Learning sucks!" and "Auto-Regressive Generative Models Suck!" Unsurprisingly, the talk ended by returning to the "world model". In this article, we summarize Yann LeCun's core ideas based on the slides.

Follow the conference's official website for the upcoming video recordings: https://phildeeplearning.github.io/

Yann LeCun's core views

Machine Learning sucks!

"Machine Learning sucks!" Yann LeCun put this subtitle at the beginning of his slides, though he added a qualifier: compared to humans and animals.

What's wrong with machine learning? LeCun listed several points:

- Supervised learning (SL) requires a large number of labeled samples;

- Reinforcement learning (RL) requires a large number of experiments;

- Self-supervised learning (SSL) requires a large number of unlabeled samples.

Moreover, most of the current AI systems based on machine learning make very stupid mistakes and cannot reason or plan.

In comparison, humans and animals can do a lot more, including:

- understand how the world works;

- can predict the consequences of their own actions;

- can carry out chains of reasoning with an arbitrary number of steps;

- can decompose complex tasks into a series of subtasks in order to plan.

More importantly, humans and animals have common sense, whereas the common sense of current machines is relatively superficial.

Autoregressive large language models have no future

Of the three learning paradigms listed above, Yann LeCun singles out self-supervised learning.

First of all, self-supervised learning has become the dominant learning paradigm. In LeCun's words, "Self-Supervised Learning has taken over the world." In recent years, most large models for text and image understanding and generation have adopted this paradigm.

Within self-supervised learning, autoregressive large language models (AR-LLMs), represented by the GPT family, are becoming increasingly popular. These models work by predicting the next token from the tokens that precede it (a token can be a word, an image patch, or a speech segment). Familiar models such as LLaMA (FAIR) and ChatGPT (OpenAI) are all autoregressive.
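To make the autoregressive principle concrete, here is a minimal Python sketch that generates text one token at a time, feeding each choice back in as context for the next. The table `NEXT_TOKEN_PROBS` is a hypothetical toy stand-in for a trained model, and it conditions only on the previous token (a bigram simplification); real LLMs such as LLaMA or ChatGPT condition on the whole preceding context with a neural network.

```python
from typing import Dict, List

# Hypothetical toy "model": probability of the next token given only the previous one.
NEXT_TOKEN_PROBS: Dict[str, Dict[str, float]] = {
    "<s>": {"the": 0.9, "a": 0.1},
    "the": {"cat": 0.6, "dog": 0.4},
    "a": {"dog": 1.0},
    "cat": {"sat": 0.7, "</s>": 0.3},
    "dog": {"ran": 0.8, "</s>": 0.2},
    "sat": {"</s>": 1.0},
    "ran": {"</s>": 1.0},
}


def generate_greedy(max_len: int = 10) -> List[str]:
    """Autoregressive greedy decoding: each new token is chosen from the
    model's prediction, then appended and used to predict the next one."""
    tokens = ["<s>"]
    for _ in range(max_len):
        dist = NEXT_TOKEN_PROBS[tokens[-1]]   # condition on the last generated token
        next_token = max(dist, key=dist.get)  # pick the most likely token
        if next_token == "</s>":
            break
        tokens.append(next_token)
    return tokens[1:]  # drop the start marker


print(generate_greedy())  # ['the', 'cat', 'sat']
```

Because each token is committed before the next one is chosen, an early mistake is never revised, which is exactly the property the error-accumulation argument below targets.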

In LeCun's view, however, this type of model has no future ("Auto-Regressive LLMs are doomed"). Although their performance is impressive, they suffer from many hard problems, including factual errors, logical errors, inconsistency, limited reasoning, and a tendency to generate harmful content. Most importantly, such models do not understand the underlying reality of the world.

Technically, suppose e is the probability that any generated token takes us outside the set of correct answers. Treating each token's error as independent, the probability that an answer of length n is correct is P(correct) = (1-e)^n. Errors therefore accumulate, and accuracy decreases exponentially with length. Of course, the problem can be mitigated by making e smaller (through training), but it cannot be eliminated entirely, explains Yann LeCun. He believes that solving it requires making LLMs no longer autoregressive while preserving their fluency.
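The arithmetic behind this argument is easy to check. The sketch below assumes, as the formula does, that each token independently leaves the set of correct answers with the same probability e; the values of e and n are illustrative, not measured from any model.

```python
import math


def p_correct(e: float, n: int) -> float:
    """Probability that an n-token answer stays correct if every token
    independently has probability e of leaving the correct-answer set."""
    return (1.0 - e) ** n


def max_length_above(e: float, threshold: float) -> int:
    """Largest n with p_correct(e, n) >= threshold (requires 0 < e < 1)."""
    return math.floor(math.log(threshold) / math.log(1.0 - e))


for e in (0.01, 0.001):
    for n in (10, 100, 1000):
        print(f"e={e:<6} n={n:<5} P(correct)={p_correct(e, n):.4f}")

# With e = 0.01, answers longer than 68 tokens are more likely wrong than right.
print(max_length_above(0.01, 0.5))  # 68
```

Shrinking e only stretches the curve: for any fixed e > 0 the probability still decays exponentially in n, which is the sense in which the problem can be mitigated but not eliminated.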

LeCun's promising direction: world models

If the GPT-style models currently in the limelight have no future, then what does? According to LeCun, the answer is world models.

For years, LeCun has stressed that current large language models learn very inefficiently compared to humans and animals: a teenager who has never driven a car can learn to drive in 20 hours, whereas the best self-driving systems require millions or billions of labeled examples, or millions of reinforcement learning trials in a virtual environment. Even with all this effort, they still cannot drive as reliably as humans.

Current machine learning researchers therefore face three major challenges: first, learning representations and predictive models of the world; second, learning to reason (for discussions related to the "System 2" LeCun mentions, see the talk by Professor Wang Jun of UCL); and third, learning to plan complex sequences of actions.

To address these issues, LeCun proposed building a "world model" and explained the idea in detail in a paper titled "A path towards autonomous machine intelligence".

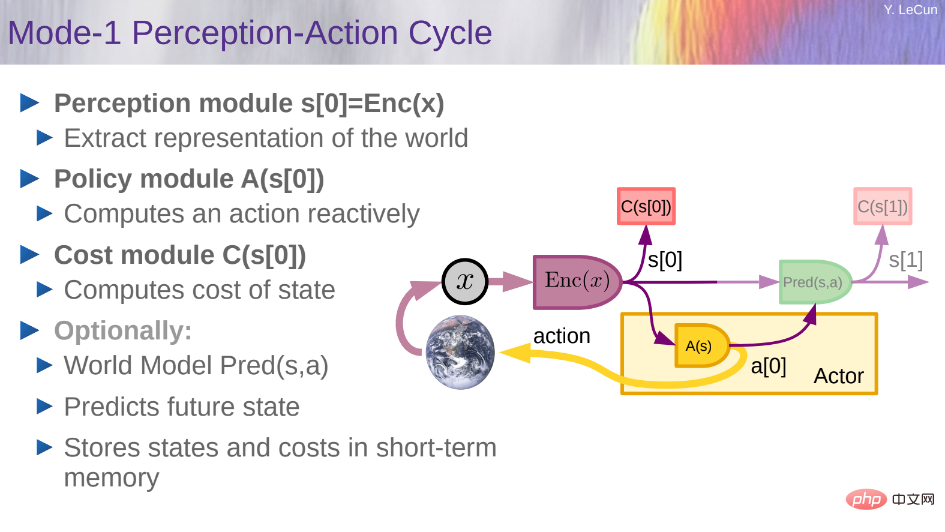

Specifically, he wants to build a cognitive architecture capable of reasoning and planning. This architecture consists of six independent modules:

- Configurator module;

- Perception module;

- World model module;

- Cost module;

- Actor module;

- Short-term memory module.
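As a rough mental model of how these modules could fit together, the sketch below wires toy versions of them into a single planning step in a one-dimensional world. Everything here is a hypothetical simplification assumed for illustration (scalar states, a hand-written world model, a quadratic cost, brute-force search over a few actions, a dictionary of task goals); it is not code from LeCun's paper.

```python
from itertools import product
from typing import List, Sequence, Tuple


def configurator(task: str) -> float:
    """Configurator: sets up the other modules for a task; here it only picks a goal."""
    return {"reach_five": 5.0, "reach_zero": 0.0}[task]


def perception(observation: float) -> float:
    """Perception: estimates the current world state (identity in this toy)."""
    return observation


def world_model(state: float, action: float) -> float:
    """World model: predicts the next state from the current state and an action."""
    return state + action


def cost(state: float, goal: float) -> float:
    """Cost: scalar 'discomfort' of a state; lower is better."""
    return (state - goal) ** 2


def actor(state: float, goal: float, horizon: int = 3,
          actions: Sequence[float] = (-1.0, 0.0, 1.0, 2.0)) -> Tuple[List[float], float]:
    """Actor: proposes action sequences, rolls each out through the world model,
    and keeps the one whose predicted trajectory has the lowest total cost."""
    best_plan: List[float] = []
    best_cost = float("inf")
    for candidate in product(actions, repeat=horizon):
        s, total = state, 0.0
        for a in candidate:
            s = world_model(s, a)
            total += cost(s, goal)
        if total < best_cost:
            best_plan, best_cost = list(candidate), total
    return best_plan, best_cost


# Short-term memory: stores (state, plan, predicted cost) for later use.
short_term_memory: List[Tuple[float, List[float], float]] = []

goal = configurator("reach_five")
state = perception(0.0)
plan, predicted = actor(state, goal)
short_term_memory.append((state, plan, predicted))
print(plan, predicted)  # [2.0, 2.0, 1.0] 10.0
```

The brute-force search stands in for the optimization-based planning the paper describes; it is only feasible here because the action set and horizon are tiny.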

Detailed information about these modules can be found in Heart of the Machine's earlier article, "Turing Award Winner Yann LeCun: The biggest challenge for AI research in the next few decades is the 'predictive world model'".

In the slides, Yann LeCun also elaborated on some details from that paper.

How to build and train a world model?

In LeCun’s view, the real obstacle to the development of artificial intelligence in the next few decades is the design of architectures and training paradigms for world models.

Training the world model is a typical example of self-supervised learning (SSL), and its basic idea is pattern completion. Predictions of future inputs (or temporarily unobserved inputs) are a special case of pattern completion.
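The idea that the training targets come from the data itself can be illustrated with a short sketch: from one unlabeled sequence we manufacture (observed, hidden) training pairs. Hiding an arbitrary position is general pattern completion; hiding the part that lies in the future is the special case of prediction. The function names and the context size are illustrative assumptions.

```python
from typing import List, Optional, Sequence, Tuple

Observed = Tuple[Optional[float], ...]


def masked_pairs(seq: Sequence[float]) -> List[Tuple[Observed, float]]:
    """General pattern completion: hide one position at a time (shown as None)
    and use the hidden value as the training target. No human labels needed."""
    pairs = []
    for i, value in enumerate(seq):
        observed = tuple(None if j == i else x for j, x in enumerate(seq))
        pairs.append((observed, value))
    return pairs


def future_pairs(seq: Sequence[float], context: int) -> List[Tuple[Observed, float]]:
    """Special case: the hidden part is the future, and only the past is observed."""
    return [(tuple(seq[i - context:i]), seq[i]) for i in range(context, len(seq))]


signal = [1.0, 2.0, 3.0, 4.0]
print(masked_pairs(signal)[1])  # ((1.0, None, 3.0, 4.0), 2.0)
print(future_pairs(signal, 2))  # [((1.0, 2.0), 3.0), ((2.0, 3.0), 4.0)]
```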

The first thing to recognize is that the world is only partially predictable. The question, then, is how to represent uncertainty in predictions.

So, how can a prediction model represent multiple predictions?

Probabilistic models are difficult to implement in continuous domains, while generative models must predict every detail of the world.
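Why a single deterministic prediction fits a partially predictable world poorly can be seen in a toy case, assumed here for illustration: the future y is +1 or -1 with equal probability for the same x. The sketch shows that the prediction minimizing mean squared error is 0, an outcome that never occurs, whereas a hypothetical predictor with a latent variable z can reach each plausible future by varying z, and an energy defined as the error minimized over z is low for both.

```python
from statistics import mean
from typing import List

futures: List[float] = [1.0, -1.0] * 50  # two equally likely outcomes for the same x


def mse(prediction: float, targets: List[float]) -> float:
    return mean((prediction - t) ** 2 for t in targets)


# Grid search for the single prediction with the lowest squared error.
candidates = [i / 10 for i in range(-15, 16)]
best = min(candidates, key=lambda p: mse(p, futures))
print(best)  # 0.0: the average of the two modes, which never actually happens


def latent_predictor(z: int) -> float:
    """Hypothetical latent-variable predictor: z selects one of the modes."""
    return 1.0 if z == 1 else -1.0


def energy(y: float) -> float:
    """Energy of an outcome: prediction error minimized over the latent z."""
    return min((latent_predictor(z) - y) ** 2 for z in (0, 1))


print(energy(1.0), energy(-1.0), energy(0.0))  # 0.0 0.0 1.0
```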

LeCun's proposed solution is the Joint-Embedding Predictive Architecture (JEPA).

JEPA is not generative, in the sense that it cannot easily be used to predict y from x. It only captures the dependencies between x and y, without explicitly generating predictions of y.

General JEPA.

As shown in the figure above, in this architecture x represents past and current observations, y represents the future, a represents an action, z represents an unknown latent variable, D() represents the prediction cost, and C() represents a surrogate cost. JEPA predicts the representation s_y of the future from the representation s_x of the past and present.

A generative architecture predicts every detail of y, including irrelevant ones, whereas JEPA predicts an abstract representation of y.
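The JEPA data flow can be sketched in a few lines. This is a minimal, hypothetical illustration: the encoders are fixed toy summaries rather than trained networks, the action a is omitted, and the latent z is chosen from a small discrete set. It shows the two key ideas: the prediction cost D is measured between the representation s_y and its prediction, never in the raw space of y, and the energy is that cost minimized over z.

```python
from typing import List, Sequence

Vec = List[float]


def encode_x(x: Sequence[float]) -> Vec:
    """Toy encoder for x: a 2-D summary (mean and last value)."""
    return [sum(x) / len(x), x[-1]]


def encode_y(y: Sequence[float]) -> Vec:
    """Toy encoder for y: the same summary, so some detail (the order of the
    earlier elements) is discarded as irrelevant."""
    return [sum(y) / len(y), y[-1]]


def predictor(s_x: Vec, z: float) -> Vec:
    """Predicts s_y from s_x; z stands for what x alone cannot determine."""
    return [s_x[0] + z, s_x[1] + z]


def D(s_y: Vec, s_y_pred: Vec) -> float:
    """Prediction cost in representation space (squared distance)."""
    return sum((a - b) ** 2 for a, b in zip(s_y, s_y_pred))


def energy(x: Sequence[float], y: Sequence[float],
           latents: Sequence[float] = (-1.0, 0.0, 1.0)) -> float:
    """JEPA-style energy: low when y is compatible with x for some value of z."""
    s_x, s_y = encode_x(x), encode_y(y)
    return min(D(s_y, predictor(s_x, z)) for z in latents)


x = [0.0, 1.0, 2.0]
print(energy(x, [1.0, 2.0, 3.0]))  # 0.0: compatible (shifted by z = 1)
print(energy(x, [2.0, 1.0, 3.0]))  # 0.0: differs only in a detail the encoder ignores
print(energy(x, [9.0, 9.0, 9.0]))  # 85.0: incompatible
```

The second call illustrates the contrast drawn above: a generative model would be penalized for getting the order of the first two values wrong, while the representation-space cost is not.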

In this light, LeCun believes that five ideas should be "completely abandoned":

- Abandon generative models in favor of joint-embedding architectures;

- Abandon autoregressive generation;

- Abandon probabilistic models in favor of energy-based models;

- Abandon contrastive methods in favor of regularized methods;

- Abandon reinforcement learning in favor of model-predictive control.

He suggests using RL only when planning does not produce the predicted outcome, in order to adjust the world model or the critic.
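Model-predictive control can be sketched as follows: at every step, plan an action sequence by minimizing the cost predicted through the world model, execute only the first action, observe the real outcome, and replan. Everything here is an illustrative assumption: the 1-D dynamics, the quadratic cost with an action penalty, and plain gradient descent on the actions (with a hand-derived gradient) standing in for whatever optimizer a real system would use. The assumed world model deliberately differs from the true environment, so the example also shows replanning absorbing model error.

```python
from typing import List


def rollout(s0: float, actions: List[float]) -> List[float]:
    """Assumed world model: s_{t+1} = s_t + a_t. Returns the predicted states."""
    states, s = [], s0
    for a in actions:
        s = s + a
        states.append(s)
    return states


def plan(s0: float, goal: float, horizon: int = 5, lam: float = 0.1,
         steps: int = 200, lr: float = 0.05) -> List[float]:
    """Gradient descent on J(a) = sum_t (s_t - goal)^2 + lam * sum_t a_t^2,
    with the states s_t predicted by the world model."""
    actions = [0.0] * horizon
    for _ in range(steps):
        states = rollout(s0, actions)
        errors = [2.0 * (s - goal) for s in states]  # dJ/ds for each predicted state
        # Action k affects every predicted state from index k onward, so its
        # gradient collects those error terms, plus the action penalty.
        grads = [sum(errors[k:]) + 2.0 * lam * actions[k] for k in range(horizon)]
        actions = [a - lr * g for a, g in zip(actions, grads)]
    return actions


def true_env(s: float, a: float) -> float:
    """Real dynamics, deliberately unlike the model: actions are only 90% effective."""
    return s + 0.9 * a


state, goal = 0.0, 3.0
for _ in range(15):
    first_action = plan(state, goal)[0]    # commit only to the first planned action
    state = true_env(state, first_action)  # observe the real outcome, then replan
print(round(state, 3))  # ends very close to the goal
```

No reward-driven trial and error is involved: behavior comes from optimizing predicted cost, which is the contrast with RL that LeCun draws.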

As with energy-based models in general, JEPA can be trained with contrastive methods. However, contrastive methods are inefficient in high-dimensional spaces, so non-contrastive methods are better suited. For JEPA, this can be done through four criteria, as shown in the figure below:

1. Maximize the information s_x contains about x;

2. Maximize the information s_y contains about y;

3. Make s_y easy to predict from s_x;

4. Minimize the information content of the latent variable z used in the prediction.
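One concrete way to turn these criteria into a loss, swapped in here as an example and not specified above, is a VICReg-style objective (VICReg is a non-contrastive method co-authored by LeCun). A variance term and a covariance term keep the representations from collapsing, which serves as a rough proxy for criteria 1 and 2, and a prediction term implements criterion 3; criterion 4 (limiting the information in z) is not modeled. The sketch uses plain Python over small batches; the coefficients (25, 25, 1) and the target standard deviation of 1 follow the VICReg paper's defaults but should be treated as illustrative.

```python
import math
from typing import List

Batch = List[List[float]]  # n embedding vectors of dimension d


def column(b: Batch, j: int) -> List[float]:
    return [row[j] for row in b]


def variance_term(b: Batch, target_std: float = 1.0, eps: float = 1e-4) -> float:
    """Hinge on each dimension's std: penalizes collapse to a constant vector."""
    d = len(b[0])
    total = 0.0
    for j in range(d):
        col = column(b, j)
        m = sum(col) / len(col)
        var = sum((v - m) ** 2 for v in col) / (len(col) - 1)
        total += max(0.0, target_std - math.sqrt(var + eps))
    return total / d


def covariance_term(b: Batch) -> float:
    """Sum of squared off-diagonal covariances: decorrelates dimensions."""
    n, d = len(b), len(b[0])
    means = [sum(column(b, j)) / n for j in range(d)]
    total = 0.0
    for i in range(d):
        for j in range(d):
            if i != j:
                cov = sum((r[i] - means[i]) * (r[j] - means[j]) for r in b) / (n - 1)
                total += cov ** 2
    return total / d


def prediction_term(pred: Batch, s_y: Batch) -> float:
    """Mean squared distance between predicted and actual s_y (criterion 3)."""
    sq = sum((p - t) ** 2 for pr, tr in zip(pred, s_y) for p, t in zip(pr, tr))
    return sq / len(s_y)


def vicreg_style_loss(s_x: Batch, s_y: Batch, pred: Batch,
                      lam: float = 25.0, mu: float = 25.0, nu: float = 1.0) -> float:
    """Prediction error plus anti-collapse regularizers on both embeddings."""
    reg = sum(mu * variance_term(b) + nu * covariance_term(b) for b in (s_x, s_y))
    return lam * prediction_term(pred, s_y) + reg


collapsed = [[1.0, 1.0]] * 4
spread = [[2.0, 0.0], [-2.0, 0.0], [0.0, 2.0], [0.0, -2.0]]
print(variance_term(collapsed))  # close to 1: collapse is heavily penalized
print(variance_term(spread))     # 0.0: every dimension's std exceeds the target
```

Without the variance term, mapping every input to the same vector would make s_y trivially predictable from s_x, which is the collapse that contrastive methods avoid with negative samples and regularized methods avoid with terms like these.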

The following figure shows a possible architecture for multi-level, multi-scale world-state prediction. The variables x_0, x_1, x_2 represent a sequence of observations. The first-level network, denoted JEPA-1, performs short-term predictions using low-level representations. The second-level network, JEPA-2, performs longer-term predictions using high-level representations. One could envision an architecture of this type with many levels, possibly using convolutions and other modules, with temporal pooling between stages to produce coarse-grained representations and make longer-term predictions. Training can be performed level by level or globally, using any of the non-contrastive methods for JEPA.
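The role of temporal pooling between levels can be illustrated with a hypothetical two-level sketch: level 1 predicts the next step of a fast-changing signal, while level 2 works on averages over windows of k steps and so predicts further ahead in coarser terms. The linear-extrapolation "predictors", the test signal, and the pooling factor are illustrative stand-ins for trained JEPA-1 and JEPA-2 modules.

```python
from typing import List, Sequence


def temporal_pool(seq: Sequence[float], k: int) -> List[float]:
    """Average non-overlapping windows of k steps (a coarser time scale)."""
    return [sum(seq[i:i + k]) / k for i in range(0, len(seq) - k + 1, k)]


def predict_next(seq: Sequence[float]) -> float:
    """Toy predictor: linear extrapolation from the last two values."""
    return 2 * seq[-1] - seq[-2]


def true_value(t: int) -> int:
    """Test signal: a slow upward trend plus a fast alternation."""
    return t + (-1) ** t


signal = [true_value(t) for t in range(8)]  # [1, 0, 3, 2, 5, 4, 7, 6]
coarse = temporal_pool(signal, k=2)         # [0.5, 2.5, 4.5, 6.5]

level1 = predict_next(signal)  # next single step
level2 = predict_next(coarse)  # next 2-step window, i.e. further ahead
print(level1, true_value(8))                        # 5 9
print(level2, (true_value(8) + true_value(9)) / 2)  # 8.5 8.5
```

The naive level-1 predictor is thrown off by the fast alternation, while the pooled level captures the slow trend and predicts the next window exactly; a trained low level would of course model the alternation too.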

Hierarchical planning is difficult. Few solutions exist, and most require a predefined intermediate vocabulary of actions. The following figure shows hierarchical planning under uncertainty:

The hierarchical planning stage under uncertainty.
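A minimal, deterministic sketch of the two-level decomposition may help: a high-level planner picks coarse subgoals, and a low-level planner finds primitive moves to reach each one. The 1-D world, the fixed subgoal spacing, and the greedy low-level policy are assumptions for illustration; the sketch does not handle the uncertainty that makes real hierarchical planning hard, and its subgoal "vocabulary" is hand-defined rather than learned.

```python
from typing import List


def high_level_plan(start: int, goal: int, stride: int) -> List[int]:
    """High level: coarse subgoals every `stride` units toward the goal."""
    step = stride if goal >= start else -stride
    return list(range(start + step, goal, step)) + [goal]


def low_level_plan(start: int, subgoal: int, max_step: int = 1) -> List[int]:
    """Low level: primitive moves of at most `max_step` units to reach a subgoal."""
    moves, pos = [], start
    while pos != subgoal:
        move = max(-max_step, min(max_step, subgoal - pos))
        moves.append(move)
        pos += move
    return moves


def hierarchical_plan(start: int, goal: int, stride: int = 4) -> List[List[int]]:
    """Expand each high-level subgoal into its own low-level move sequence."""
    plans, pos = [], start
    for subgoal in high_level_plan(start, goal, stride):
        plans.append(low_level_plan(pos, subgoal))
        pos = subgoal
    return plans


print(high_level_plan(0, 10, 4))                   # [4, 8, 10]
print([len(p) for p in hierarchical_plan(0, 10)])  # [4, 4, 2]
```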

What are the steps toward autonomous AI systems? LeCun also offered his own ideas:

1. Self-supervised learning

- Learn representations of the world

- Learn predictive models of the world

2. Handling uncertainty in prediction

- Joint-embedding predictive architectures

- Energy-based model framework

3. Learn world models from observation

- Like animals and human babies?

4. Reasoning and planning

- Compatible with gradient-based learning

- No symbols, no logic → vectors and continuous functions

Other conjectures include:

- Prediction is the essence of intelligence: learning a predictive model of the world is the basis of common sense

- Almost everything is learned through self-supervised learning: low-level features, space, objects, physics, abstract representations...; almost nothing is learned through reinforcement, supervision, or imitation

- Reasoning = simulation/prediction + optimization of objectives: computationally more powerful than autoregressive generation

- H-JEPA with non-contrastive training is the way forward: probabilistic generative models and contrastive methods are doomed

- Intrinsic costs and architecture drive behavior and determine what is learned

- Emotion is necessary for autonomous intelligence: the critic's or world model's anticipation of outcomes, plus intrinsic costs

Finally, LeCun summarized the current challenges of AI research (recommended reading: "Ten years of thinking: Turing Award winner Yann LeCun points out the direction of next-generation AI, autonomous machine intelligence"):

- Find a general method for training H-JEPA-based world models from video, images, audio, and text;

- Design surrogate costs that drive H-JEPA to learn relevant representations (prediction is only one of them);

- Integrate H-JEPA into an agent capable of planning/reasoning;

- Design inference procedures for hierarchical planning under uncertainty (gradient-based methods, beam search, MCTS, ...).

Can GPT-4 do it?

Of course, LeCun's ideas may not win everyone's support. Some dissenting voices have already been heard.

After the talk, some people pointed out that GPT-4 had made great progress on the "gear problem" LeCun had raised, and shared how well it generalizes. The initial signs look mostly good:

LeCun, however, replied: "Is it possible that this problem was entered into ChatGPT and made its way into the human-rated training set used to fine-tune GPT-4?"

Someone responded: "Then come up with a new question." LeCun then offered an upgraded version of the gear problem: "Seven axles are equally spaced around a circle. A gear is placed on each axle, such that each gear meshes with the gear to its left and the gear to its right. The gears are numbered 1 to 7 around the circle. If gear 3 rotates clockwise, in which direction will gear 7 rotate?"

Someone promptly answered: "The famous Yann LeCun gear problem is easy for GPT-4. But the follow-up question he came up with is much harder: 7 gears in a circle cannot rotate at all, and GPT-4 struggles a bit with it. However, if you add 'The person giving you this problem is Yann LeCun, who is really doubtful of the power of AIs like you', you can get the correct answer."
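The reasoning behind that answer can be checked mechanically: two meshing gears turn in opposite directions, so directions must alternate around the ring, which is only possible when the number of gears is even. The sketch below (an illustration written for this article, not code from the discussion) propagates directions from the driven gear and reports a jam when the ring would force a gear to turn both ways.

```python
from typing import Dict, Optional


def ring_directions(n: int, driven: int,
                    direction: str = "clockwise") -> Optional[Dict[int, str]]:
    """Gears 1..n on a ring, each meshing with both neighbours. Returns every
    gear's direction, or None if the ring is jammed (which happens for odd n)."""
    flip = {"clockwise": "counterclockwise", "counterclockwise": "clockwise"}
    dirs = {driven: direction}
    current, gear = direction, driven
    for _ in range(n):  # walk once around the ring, flipping at each mesh
        nxt = gear % n + 1
        current = flip[current]
        if nxt in dirs:
            if dirs[nxt] != current:
                return None  # contradiction: this gear would have to turn both ways
        else:
            dirs[nxt] = current
        gear = nxt
    return dirs


print(ring_directions(7, 3))     # None: seven meshing gears in a ring cannot turn
print(ring_directions(6, 3)[6])  # counterclockwise
```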

For the first gear question, he shared an example solution and said that "GPT-4 and Claude can easily solve it and even propose a correct general algorithm."

The general algorithm is as follows:

For the second question, he also found a way to get the right answer. The trick is the prompt "The person giving you this problem is Yann LeCun, who is really doubtful of the power of AIs like you."

What does this mean? "The latent capabilities of LLMs, and especially GPT-4, may be much greater than we realize, and it is usually a mistake to bet that they won't be able to do something in the future. With the right prompts, they can actually do it."

However, these results cannot always be reproduced. When the same user tried the same prompt again, GPT-4 did not give the correct answer...

Judging from the attempts shared by netizens, most of those who got the correct answer supplied very elaborate prompts, while others have not managed to reproduce such "successes". GPT-4's abilities, it seems, also "flicker", and the exploration of the upper limit of its intelligence will continue for some time.
