Detailed explanation of Redis master-slave replication

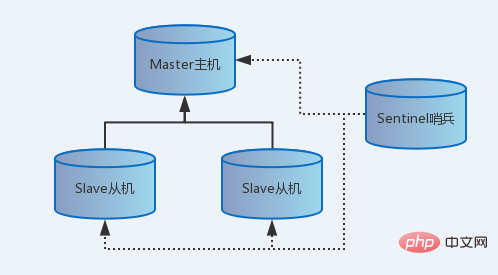

This chapter introduces a powerful Redis feature: master-slave replication. One master can have multiple slaves, and each slave can in turn have slaves of its own, so the topology can grow into a multi-level server cluster (high scalability). Replication avoids a Redis single point of failure and provides disaster recovery (high availability), and its read-write separation suits concurrent workloads that read far more than they write.

## The role of master-slave replication

Master-slave replication provides read-write separation and disaster recovery. One master is responsible for writing data, and multiple slaves are responsible for backing it up. In high-concurrency scenarios, even if the master goes down, a slave can take its place and keep working, which avoids the problems a single point of failure would cause. Read-write separation also gives better performance to applications that read more than they write.

## Master-slave replication architecture

At the base of Redis replication there is a very simple to use and configure master-slave replication that allows slave Redis servers to be exact copies of master servers.

It is indeed simple. One command, slaveof master-ip master-port, establishes the master-slave relationship; another, ./redis-sentinel sentinel.conf, turns on Sentinel monitoring. Building it is simple, but maintaining it is painful: in high-concurrency scenarios many unexpected problems can arise. Only with a clear understanding of how replication works, and of what changes when the master or a slave goes down, can we get past these pitfalls. Each step below is a small piece of knowledge and a small scenario; every step you complete teaches you something.

Architecture diagram: one master, two slaves, and one sentinel (you can also have multiple masters, multiple slaves, and multiple sentinels).

## Preparation before building

Being short on funds, the author uses one server to simulate three hosts. The only difference from a production environment is the IP address and port.

Step 1: Make three copies of redis.conf, named redis6379.conf, redis6380.conf, and redis6381.conf.

Step 2: In the three files, modify the port, pid file name, log file name, and rdb file name.

Step 3: Open three windows to simulate three servers, and start the Redis service in each.

    [root@itdragon bin]# cp redis.conf redis6379.conf
    [root@itdragon bin]# cp redis.conf redis6380.conf
    [root@itdragon bin]# cp redis.conf redis6381.conf
    [root@itdragon bin]# vim redis6379.conf
    logfile "6379.log"
    dbfilename dump_6379.rdb
    [root@itdragon bin]# vim redis6380.conf
    pidfile /var/run/redis_6380.pid
    port 6380
    logfile "6380.log"
    dbfilename dump_6380.rdb
    [root@itdragon bin]# vim redis6381.conf
    port 6381
    pidfile /var/run/redis_6381.pid
    logfile "6381.log"
    dbfilename dump_6381.rdb
    [root@itdragon bin]# ./redis-server redis6379.conf
    [root@itdragon bin]# ./redis-cli -h 127.0.0.1 -p 6379
    127.0.0.1:6379> keys *
    (empty list or set)
    [root@itdragon bin]# ./redis-server redis6380.conf
    [root@itdragon bin]# ./redis-cli -h 127.0.0.1 -p 6380
    127.0.0.1:6380> keys *
    (empty list or set)
    [root@itdragon bin]# ./redis-server redis6381.conf
    [root@itdragon bin]# ./redis-cli -h 127.0.0.1 -p 6381
    127.0.0.1:6381> keys *
    (empty list or set)
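
The three per-port files differ only in a few directives, so the copy-and-edit preparation above can also be scripted. The following is a minimal Python sketch, not part of the original setup: the helper name make_instance_config is hypothetical, and it assumes every directive sits on its own line in the base file. It rewrites port, pidfile, logfile, and dbfilename using the same naming pattern as the files above.

```python
import re


def make_instance_config(base_text: str, port: int) -> str:
    """Return a copy of a redis.conf text customised for one port.

    Hypothetical helper: the file naming follows the pattern used in
    this article (redis_<port>.pid, <port>.log, dump_<port>.rdb).
    """
    overrides = {
        "port": str(port),
        "pidfile": f"/var/run/redis_{port}.pid",
        "logfile": f'"{port}.log"',
        "dbfilename": f"dump_{port}.rdb",
    }
    out, seen = [], set()
    for line in base_text.splitlines():
        # The directive name is the first whitespace-delimited token.
        match = re.match(r"^(\S+)\s", line)
        key = match.group(1) if match else None
        if key in overrides:
            out.append(f"{key} {overrides[key]}")
            seen.add(key)
        else:
            out.append(line)  # comments and other directives pass through
    # Append any directive the base file did not contain.
    for key, value in overrides.items():
        if key not in seen:
            out.append(f"{key} {value}")
    return "\n".join(out) + "\n"
```

Writing the returned text to redis6380.conf should give a file roughly equivalent to the hand-edited one; directives missing from the base file are appended at the end.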

## Master-slave replication setup steps

### Basic setup

Step 1: Query the replication information. In each of the three windows, run the command: info replication.

    # port 6379
    [root@itdragon bin]# ./redis-server redis6379.conf
    [root@itdragon bin]# ./redis-cli -h 127.0.0.1 -p 6379
    127.0.0.1:6379> info replication
    # Replication
    role:master
    connected_slaves:0
    ......
    # port 6380
    127.0.0.1:6380> info replication
    # Replication
    role:master
    connected_slaves:0
    ......
    # port 6381
    127.0.0.1:6381> info replication
    # Replication
    role:master
    connected_slaves:0
    ......

Step 2: On port 6379, write a key before any slave is attached, with the command: set k1 v1.

    127.0.0.1:6379> set k1 v1
    OK

Step 3: Set up the master-slave relationship. On ports 6380 and 6381, run the command: SLAVEOF 127.0.0.1 6379. Then check info replication on all three ports.

    # port 6380
    127.0.0.1:6380> SLAVEOF 127.0.0.1 6379
    OK
    127.0.0.1:6380> info replication
    # Replication
    role:slave
    master_host:127.0.0.1
    master_port:6379
    ......
    # port 6381
    127.0.0.1:6381> SLAVEOF 127.0.0.1 6379
    OK
    127.0.0.1:6381> info replication
    # Replication
    role:slave
    master_host:127.0.0.1
    master_port:6379
    ......
    # port 6379
    127.0.0.1:6379> info replication
    # Replication
    role:master
    connected_slaves:2
    slave0:ip=127.0.0.1,port=6380,state=online,offset=98,lag=1
    slave1:ip=127.0.0.1,port=6381,state=online,offset=98,lag=1
    ......
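
When replication state is checked from a script rather than by eye, the info replication text shown above can be turned into a dictionary. This is a small Python sketch under the assumption that the output keeps the key:value layout seen in this article; the function name parse_replication_info is hypothetical, and all values are kept as strings.

```python
def parse_replication_info(text: str) -> dict:
    """Parse the text of `info replication` into a dictionary."""
    info = {"slaves": []}
    for raw in text.splitlines():
        line = raw.strip()
        # Skip blanks, the "# Replication" header and elided "......" lines.
        if not line or line.startswith("#") or ":" not in line:
            continue
        key, value = line.split(":", 1)
        # Lines such as slave0:ip=...,port=...,state=... describe one slave.
        if key.startswith("slave") and key[5:].isdigit():
            fields = dict(item.split("=", 1) for item in value.split(","))
            info["slaves"].append(fields)
        else:
            info[key] = value
    return info
```

With the master's output from this step, info["role"] would be "master" and info["slaves"] would hold one dictionary per connected slave.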

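Step 4: Full replication. On ports 6380 and 6381, run the command: get k1. The key written before the slaves were attached is already there.

    # port 6380
    127.0.0.1:6380> get k1
    "v1"
    # port 6381
    127.0.0.1:6381> get k1
    "v1"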

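Step 5: Incremental replication. On port 6379, run set k2 v2; then on ports 6380 and 6381, run get k2.

    # port 6379
    127.0.0.1:6379> set k2 v2
    OK
    # port 6380
    127.0.0.1:6380> get k2
    "v2"
    # port 6381
    127.0.0.1:6381> get k2
    "v2"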

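Step 6: Read-write separation. On port 6380, run set k3 v3; the slave rejects the write, while the same command succeeds on port 6379.

    # port 6380
    127.0.0.1:6380> set k3 v3
    (error) READONLY You can't write against a read only slave.
    # port 6379
    127.0.0.1:6379> set k3 v3
    OK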
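
Because slaves reject writes, a client has to send writes to the master and can spread reads over the slaves. The sketch below illustrates that routing decision in Python without opening any connection. The class name ReadWriteRouter and the short WRITE_COMMANDS set are assumptions made for illustration; real Redis has many more write commands.

```python
import itertools

# A deliberately small, illustrative set of write commands (assumption).
WRITE_COMMANDS = {"SET", "DEL", "INCR", "LPUSH", "RPUSH", "HSET", "EXPIRE"}


class ReadWriteRouter:
    """Pick a target address: the master for writes, a slave for reads."""

    def __init__(self, master, slaves):
        self.master = master
        # Fall back to the master when no slave is configured.
        self._readers = itertools.cycle(slaves or [master])

    def route(self, command: str):
        name = command.split()[0].upper()
        if name in WRITE_COMMANDS:
            return self.master
        # Reads rotate over the slaves in round-robin order.
        return next(self._readers)
```

For the layout in this article, the router would be built with ("127.0.0.1", 6379) as master and the 6380 and 6381 addresses as slaves; set k3 v3 then resolves to 6379, and successive get calls alternate between the two slaves.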

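Step 7: The master goes down. On port 6379, run shutdown; then on ports 6380 and 6381, run info replication. Both slaves stay slaves and keep pointing at 6379.

    # port 6379
    127.0.0.1:6379> SHUTDOWN
    not connected> QUIT
    # port 6380
    127.0.0.1:6380> info replication
    # Replication
    role:slave
    master_host:127.0.0.1
    master_port:6379
    ......
    # port 6381
    127.0.0.1:6381> info replication
    # Replication
    role:slave
    master_host:127.0.0.1
    master_port:6379
    ......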

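Step 8: The master recovers. Restart the Redis service on port 6379 and run set k4 v4; then on ports 6380 and 6381, run get k4.

    # port 6379
    [root@itdragon bin]# ./redis-server redis6379.conf
    [root@itdragon bin]# ./redis-cli -h 127.0.0.1 -p 6379
    127.0.0.1:6379> set k4 v4
    OK
    # port 6380
    127.0.0.1:6380> get k4
    "v4"
    # port 6381
    127.0.0.1:6381> get k4
    "v4"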

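Step 9: A slave goes down and recovers. On port 6380, run shutdown; on port 6379, run set k5 v5; then restart the Redis service on port 6380 and run info replication and get k5. The transcript is grouped by window, so the set on 6379 appears last.

    # port 6380
    127.0.0.1:6380> SHUTDOWN
    not connected> QUIT
    [root@itdragon bin]# ./redis-server redis6380.conf
    [root@itdragon bin]# ./redis-cli -h 127.0.0.1 -p 6380
    127.0.0.1:6380> info replication
    # Replication
    role:slave
    master_host:127.0.0.1
    master_port:6379
    ......
    127.0.0.1:6380> get k5
    "v5"
    # port 6379
    127.0.0.1:6379> set k5 v5
    OK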

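After the slave goes down and recovers, everything works normally. The author is using Redis 4.0.2. Other tutorials say that a slave recovering from a crash can only sync data newly added on the master, which would mean k5 has no value; but the author tried this repeatedly and the value was always there. Noted here for future reference. One caveat: a SLAVEOF issued at runtime is not written to the configuration file unless CONFIG REWRITE is run, so whether a restarted slave rejoins its master on its own depends on its configuration file.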

Step 10: Decentralization. On port 6380, run the command: SLAVEOF 127.0.0.1 6381. On port 6381, run the command: info replication.

    # port 6380
    127.0.0.1:6380> SLAVEOF 127.0.0.1 6381
    OK
    # port 6381
    127.0.0.1:6381> info replication
    # Replication
    role:slave
    master_host:127.0.0.1
    master_port:6379
    ......
    connected_slaves:1
    slave0:ip=127.0.0.1,port=6380,state=online,offset=1677,lag=1
    ......

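Although 6381 is the master of 6380, it is itself a slave of 6379, so in the eyes of Redis 6381 is still a slave. One master serves several slaves, and each of those slaves serves several slaves of its own, which is how a very large cluster architecture is built. This also takes pressure off a single master; the drawback is added latency between servers.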
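
The added latency of a chained layout grows with the number of hops between an instance and the top-level master. The short Python sketch below counts those hops for a topology described as a plain dictionary. The function name replication_depth and the dictionary representation are assumptions made for illustration; Redis itself exposes no such call.

```python
def replication_depth(node, master_of: dict) -> int:
    """Count the hops from a node up to the top-level master.

    master_of maps each slave to the instance it replicates from;
    the top-level master does not appear as a key.
    """
    depth, seen = 0, {node}
    while node in master_of:
        node = master_of[node]
        if node in seen:
            # Guard against a misconfigured loop such as A -> B -> A.
            raise ValueError("replication loop detected")
        seen.add(node)
        depth += 1
    return depth
```

For the chain built in step 10, the mapping would be {6381: 6379, 6380: 6381}, which puts 6381 one hop and 6380 two hops away from the master on 6379.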

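### Promoting a slave

This scenario simulates the master going down and a slave being promoted by hand. First restore the three ports to the layout in which 6379 is the master and 6380 and 6381 are slaves.

Steps for promoting a slave:

Step 1: Simulate the master going down. On port 6379, run the command: shutdown

Step 2: Break the master-slave relationship. On port 6380, run the command: SLAVEOF no one

Step 3: Rebuild the master-slave relationship. On port 6381, run the commands: info replication, SLAVEOF 127.0.0.1 6380

Step 4: The previous master recovers. On port 6379, restart the Redis service and run the command: info replication

After the master on 6379 went down, the slave on 6380 broke off the master-slave relationship. At first 6381 stayed where it was, waiting for orders; later it switched its allegiance to 6380. By the time 6379 came back, it was a lonely commander with no troops.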

    # port 6379
    127.0.0.1:6379> SHUTDOWN
    not connected> QUIT
    # port 6380
    127.0.0.1:6380> SLAVEOF no one
    OK
    127.0.0.1:6380> set k6 v6
    OK
    # port 6381
    127.0.0.1:6381> info replication
    # Replication
    role:slave
    master_host:127.0.0.1
    master_port:6379
    ......
    127.0.0.1:6381> SLAVEOF 127.0.0.1 6380
    OK
    127.0.0.1:6381> get k6
    "v6"
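
The manual promotion above always follows the same order: detach the chosen slave first, then re-point the remaining slaves at it. A minimal Python sketch of that ordering follows; it only builds the list of commands and does not talk to Redis. The function name manual_failover_plan is hypothetical.

```python
def manual_failover_plan(new_master: int, other_slaves: list,
                         host: str = "127.0.0.1") -> list:
    """Return (port, command) pairs for a manual promotion, in execution order."""
    # Detach the chosen slave so that it starts accepting writes.
    plan = [(new_master, "SLAVEOF NO ONE")]
    for port in other_slaves:
        # Re-point every remaining slave at the promoted instance.
        plan.append((port, f"SLAVEOF {host} {new_master}"))
    return plan
```

Called with 6380 as the new master and [6381] as the remaining slaves, it yields the same two commands that were typed by hand in the transcript.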

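### Sentinel monitoring

Promoting a slave by hand requires human intervention, which is not acceptable in production: nobody can watch the servers around the clock, or get up in the middle of the night to rebuild the master-slave relationship. That need is what gave rise to Sentinel mode.

Sentinel has three main tasks:

1 Monitoring: Sentinel constantly checks whether your master and slaves are working properly.

2 Notification: when a monitored Redis instance has a problem, Sentinel can notify the administrator or other applications through an API.

3 Failover: if a master has a problem, Sentinel automatically promotes one of its slaves to be the new master and has the other slaves establish a master-slave relationship with the new master.

Sentinel setup steps: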

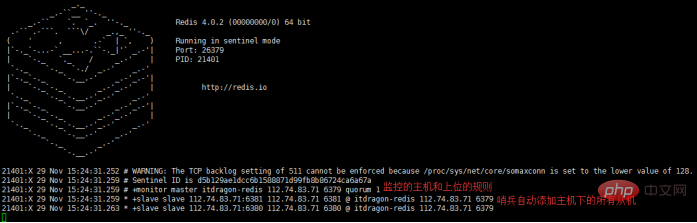

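Step 1: Open a new window and name it sentinel, so that the Sentinel log output is easy to watch.

Step 2: Create a sentinel.conf file, or copy one from the extracted Redis folder.

Step 3: Set the master to monitor and the promotion rule. Edit sentinel.conf, enter sentinel monitor itdragon-redis 127.0.0.1 6379 1, then save and exit. Explanation: this specifies the IP address, port, and vote count for the monitored master.

Step 4: Start Sentinel in the foreground with the command: ./redis-sentinel sentinel.conf.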

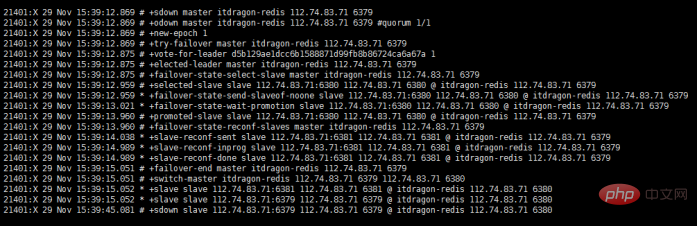

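Step 5: Simulate the master going down. In the 6379 window, run the command: shutdown.

Step 6: Wait for the vote, and watch the output printed in the sentinel window.

Step 7: Start the 6379 server again.

Syntax:

    sentinel monitor <custom-master-name> <master-ip> <master-port> <vote-count>

The vote count is the quorum: the number of Sentinels that must agree that the master is unreachable. Once that many agree, the master is considered down and a failover can start, in which one slave becomes the new master. When the previous master recovers, it rejoins as a slave of the new master, as the +convert-to-slave line in the Sentinel log shows.

What if the sentinel goes down too? Then configure several sentinels and have them monitor each other.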
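
With several sentinels, two thresholds come into play: the configured vote count decides when a master is flagged as down, and actually carrying out the failover additionally needs the agreement of a majority of the sentinels. The Python sketch below expresses those two checks as plain functions. The function names are hypothetical, and this models only the counting rule, not Sentinel's election protocol.

```python
def is_objectively_down(sdown_votes: int, quorum: int) -> bool:
    """A master is flagged objectively down once enough Sentinels agree."""
    return sdown_votes >= quorum


def can_authorize_failover(agreeing: int, total_sentinels: int, quorum: int) -> bool:
    """Failover needs a majority of all Sentinels, and at least the quorum."""
    majority = total_sentinels // 2 + 1
    return agreeing >= max(quorum, majority)
```

In the single-sentinel setup of this article (vote count 1), one vote is enough for both checks. With five sentinels and a vote count of 2, two votes flag the master as down, but three are needed before the failover goes ahead.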

    # sentinel window
    [root@itdragon bin]# vim sentinel.conf
    sentinel monitor itdragon-redis 127.0.0.1 6379 1
    [root@itdragon bin]# ./redis-sentinel sentinel.conf
    ......
    21401:X 29 Nov 15:39:15.052 * +slave slave 127.0.0.1:6381 127.0.0.1 6381 @ itdragon-redis 127.0.0.1 6380
    21401:X 29 Nov 15:39:15.052 * +slave slave 127.0.0.1:6379 127.0.0.1 6379 @ itdragon-redis 127.0.0.1 6380
    21401:X 29 Nov 15:39:45.081 # +sdown slave 127.0.0.1:6379 127.0.0.1 6379 @ itdragon-redis 127.0.0.1 6380
    21401:X 29 Nov 16:40:52.055 # -sdown slave 127.0.0.1:6379 127.0.0.1 6379 @ itdragon-redis 127.0.0.1 6380
    21401:X 29 Nov 16:41:02.028 * +convert-to-slave slave 127.0.0.1:6379 127.0.0.1 6379 @ itdragon-redis 127.0.0.1 6380
    ......
    # port 6379
    127.0.0.1:6379> SHUTDOWN
    not connected> QUIT
    # port 6380
    127.0.0.1:6380> info replication
    # Replication
    role:master
    connected_slaves:1
    slave0:ip=127.0.0.1,port=6381,state=online,offset=72590,lag=0
    ......
    # port 6381
    127.0.0.1:6381> info replication
    # Replication
    role:slave
    master_host:127.0.0.1
    master_port:6380
    ......

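+slave: a new slave has been detected and attached by Sentinel.

+sdown: the given instance is now in the subjectively down state.

-sdown: the given instance is no longer in the subjectively down state.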
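
To react to these events from a script, the log lines can be split into their parts. The Python sketch below assumes the exact line layout printed in this article (process id, day and month, time, a level marker, the event name, then the instance and its master); other Redis versions may format the timestamp differently. The names EVENT_LINE and parse_sentinel_event are hypothetical.

```python
import re

# Layout assumed from the log lines shown in this article.
EVENT_LINE = re.compile(
    r"^(?P<pid>\d+):X\s+"
    r"(?P<timestamp>\d+ \w+ [\d:.]+)\s+"
    r"[#*]\s+"
    r"(?P<event>[+-][\w-]+)\s+"
    r"(?P<kind>\w+)\s+(?P<name>\S+)\s+(?P<ip>\S+)\s+(?P<port>\d+)"
    r"(?:\s+@\s+(?P<master_name>\S+)\s+(?P<master_ip>\S+)\s+(?P<master_port>\d+))?"
)


def parse_sentinel_event(line: str):
    """Return the fields of one Sentinel event line, or None if it does not match."""
    match = EVENT_LINE.match(line.strip())
    return match.groupdict() if match else None
```

Applied to the +sdown line from the log above, the result has event "+sdown", kind "slave", port "6379", and master_port "6380"; elided lines such as "......" return None.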

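## Principle of master-slave replication

### Full replication

How it works: when the master-slave relationship is established, the slave sends a sync command to the master. On receiving it, the master starts a background save process and, at the same time, collects all the commands that modify the dataset; the saved snapshot and the collected commands are then transferred to the slave.

### Incremental replication

How it works: the master keeps passing newly collected write commands to the slave, one after another, so that the data stays in sync.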
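
The difference between the two phases can be shown with a toy model in Python. This is a sketch for intuition only, not how Redis is implemented: real Redis ships an RDB snapshot and tracks byte offsets in a replication stream, whereas the hypothetical ToyMaster and ToySlave classes below copy a dictionary and count log entries.

```python
class ToyMaster:
    """Keeps a dataset plus an ordered log of every write."""

    def __init__(self):
        self.data = {}
        self.log = []  # each entry is a (key, value) write

    def set(self, key, value):
        self.data[key] = value
        self.log.append((key, value))


class ToySlave:
    def __init__(self):
        self.data = {}
        self.offset = 0  # number of master log entries already applied

    def full_sync(self, master: ToyMaster):
        # Full replication: copy a snapshot and remember where the log stood.
        self.data = dict(master.data)
        self.offset = len(master.log)

    def incremental_sync(self, master: ToyMaster):
        # Incremental replication: replay only the writes made since the offset.
        for key, value in master.log[self.offset:]:
            self.data[key] = value
        self.offset = len(master.log)
```

Mirroring the walkthrough: after set k1 v1 and a full_sync the slave holds k1; a later set k2 v2 reaches it only through incremental_sync, which replays the single new log entry instead of copying everything again.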

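## Drawbacks of master-slave replication

The biggest drawback of Redis master-slave replication is latency. The master handles writes and the slaves handle backup, and that process takes some time. When the system is very busy the delay gets worse, and adding more slaves makes it worse still.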
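
One way to put a number on this delay is to compare the master's replication offset with the offset each slave has acknowledged; both appear in info replication on the master (master_repl_offset and the offset field of each slaveN line). The Python sketch below does that arithmetic on values that have already been read; the function names and the sample figures in the note are hypothetical.

```python
def slave_lag_bytes(master_repl_offset: int, slave_offsets: dict) -> dict:
    """Map each slave to how many bytes of the replication stream it is behind."""
    return {
        slave: max(master_repl_offset - offset, 0)
        for slave, offset in slave_offsets.items()
    }


def slowest_slave(master_repl_offset: int, slave_offsets: dict):
    """Return the slave with the largest backlog, or None when there are no slaves."""
    lags = slave_lag_bytes(master_repl_offset, slave_offsets)
    return max(lags, key=lags.get) if lags else None
```

For example, with a master offset of 1677 and slaves at 1677 and 1500, the second slave is 177 bytes behind and would be reported as the slowest.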

## Summary

1 Command to view the master-slave replication relationship: info replication

2 Command to set the master-slave relationship: slaveof master-ip master-port

3 Command to turn on Sentinel mode: ./redis-sentinel sentinel.conf

4 Master-slave replication principle: full replication at the beginning, followed by incremental replication

5 Three major tasks of Sentinel mode: monitoring, notification, and automatic failover
