Redis single data multi-source ultra-high concurrency solution


Redis is currently one of the most popular key-value (KV) cache databases. It is easy to use, secure, and stable, and it is very widely used across the Internet industry.

This article describes how Redis can handle ultra-high concurrent access to a single piece of data from multiple sources.

Preface

Redis mainly solves two problems: high concurrency and low latency.

In a business with tens of millions of daily users and millions of people online at the same time, sending front-end traffic straight to the back-end database may overwhelm the database and bring the business to a halt. Likewise, as query conditions multiply and their combinations grow more complex, query response time can no longer be guaranteed, which degrades the user experience and drives users away. Redis came into being to serve these high-concurrency, low-latency business scenarios.

Let’s take a look at two scenarios.

The first is an online house-hunting service. With so many query conditions, the back end has to run complex SQL queries. Is Redis necessary in this scenario?

The answer is no. Because the concurrency of an online house search is low, customers are not very demanding about response time, and most requests can be served directly by dynamic SQL. To improve the user experience, however, the results of some hot queries can still be pre-cached in Redis.
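Pre-caching hot query results can be sketched as a simple cache-aside lookup. This is only an illustration under stated assumptions: `InMemoryCache` is a hypothetical stand-in that models the `get` and `setex` calls of a Redis client, and `cache_key`, `search`, `run_sql`, and the 60-second TTL are names and values invented for the example, not part of the original article.

```python
import hashlib
import json
import time


class InMemoryCache:
    """Hypothetical stand-in for a Redis client; only get() and setex() are modelled."""

    def __init__(self):
        self._data = {}

    def get(self, key):
        entry = self._data.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if time.monotonic() >= expires_at:
            # Entry has expired: drop it and report a miss.
            del self._data[key]
            return None
        return value

    def setex(self, key, ttl_seconds, value):
        self._data[key] = (value, time.monotonic() + ttl_seconds)


def cache_key(filters):
    """Build a stable key from the query conditions (sorted, so argument order does not matter)."""
    canonical = json.dumps(filters, sort_keys=True)
    return "search:" + hashlib.sha1(canonical.encode("utf-8")).hexdigest()


def search(filters, cache, run_sql, ttl_seconds=60):
    """Return cached results when present; otherwise query the database and cache the result."""
    key = cache_key(filters)
    cached = cache.get(key)
    if cached is not None:
        return json.loads(cached)
    rows = run_sql(filters)  # run_sql is an assumed callable that executes the dynamic SQL
    cache.setex(key, ttl_seconds, json.dumps(rows))
    return rows
```

With a real deployment, the stand-in would be replaced by an actual Redis client; a short TTL keeps the cached results from drifting too far from the database.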

Now let’s look at a second scenario.

The content search system of a video application has almost the same business logic as the house search system, but its concurrency is higher by several orders of magnitude. This scenario is well suited to using Redis as a cache to raise concurrent capacity, reduce response time, and serve hundreds of thousands or even millions of concurrent requests. The deciding factors in whether to use Redis are therefore the concurrency and latency requirements.

Let’s take a look at how Redis solves the concurrent access requirements in extreme Internet scenarios.

Caching solution under ultra-high concurrent access

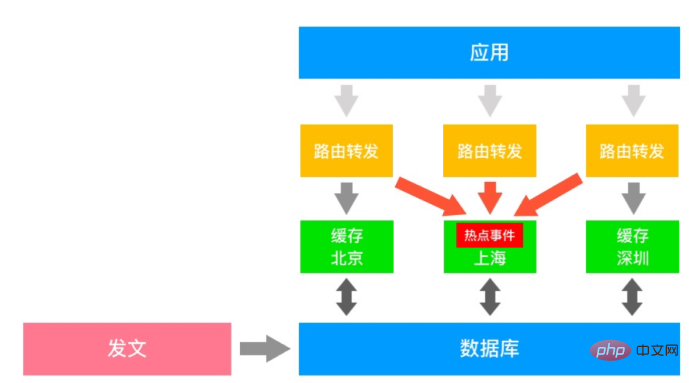

This is a typical media cache architecture diagram. The publishing system updates the media library from time to time and, through the distributed cache service, synchronizes each new article to the Redis cache. The front-end application finds the corresponding data source through the routing layer. The cache servers do not all hold the same data, so when a hot event occurs, the routing layer may send traffic from many different regions to the one cache server that holds the hot data, causing an instantaneous traffic surge. In extreme cases this can take the server down and damage the business. So how can this kind of irregular traffic burst be handled?

Here are a few ideas:

Split hot keys by adding prefixes, so that the hot data is replicated under several keys

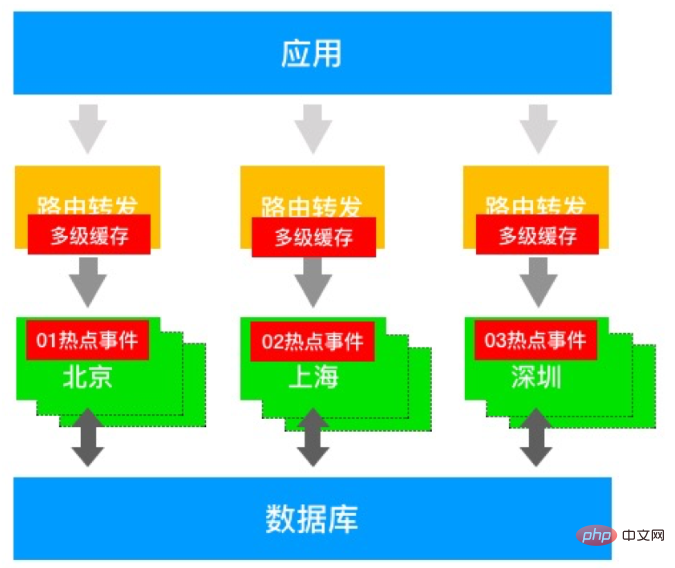

Add a local cache to the routing layer and improve caching capacity through multi-level caching

Provide data replicas at the cache layer to improve concurrent access capacity

The first solution spreads hot data effectively, but hot events occur at random, so the operational burden and the cost are both high. It treats the symptom rather than the cause.
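The hot-key splitting idea can be sketched as follows. This is a minimal illustration, not the article's implementation: `DictCache` is a hypothetical stand-in for a Redis client with `get` and `set`, and the `"<index>:<key>"` naming scheme, the `COPIES` value, and the function names are assumptions made for the example. In a sharded deployment the differently prefixed keys usually hash to different shards, which is what spreads the read load.

```python
import random

COPIES = 8  # assumed number of copies per hot key


class DictCache:
    """Hypothetical stand-in for a Redis client; only get() and set() are modelled."""

    def __init__(self):
        self._data = {}

    def get(self, key):
        return self._data.get(key)

    def set(self, key, value):
        self._data[key] = value


def copy_keys(key, copies=COPIES):
    """Return the prefixed key names under which the hot value is stored."""
    return [f"{i}:{key}" for i in range(copies)]


def write_hot(cache, key, value, copies=COPIES):
    """Write the same value under every prefixed key."""
    for name in copy_keys(key, copies):
        cache.set(name, value)


def read_hot(cache, key, copies=COPIES):
    """Read from one randomly chosen copy so reads spread across the copies."""
    return cache.get(f"{random.randrange(copies)}:{key}")
```

The cost noted above shows up directly in the sketch: every update must be written `copies` times, and someone has to decide which keys are hot and when to stop treating them as hot.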

The second solution improves caching capacity by adding a local cache. However, how large the local cache should be, how often it should be refreshed, and whether the business can tolerate dirty (stale) reads are all questions that cannot be avoided.

The third solution adds read-only replicas to replicate the data, but it also brings high cost and a heavy load on the master.
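The trade-offs of a local cache become concrete in a small sketch. The class below is a hypothetical illustration, not code from the article: `LocalCache`, its `ttl_seconds` and `max_entries` parameters, and the `load` callback (which stands for a read from the shared Redis cache) are all assumed names. The TTL bounds how stale a read can be, and `max_entries` bounds memory use.

```python
import time


class LocalCache:
    """Small in-process cache in front of a slower shared cache (hypothetical sketch)."""

    def __init__(self, ttl_seconds, max_entries, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._max_entries = max_entries
        self._clock = clock
        self._entries = {}  # key -> (value, expires_at)

    def get(self, key, load):
        """Return the locally cached value, or call load(key) and remember the result."""
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and now < entry[1]:
            return entry[0]  # may be up to ttl_seconds out of date
        value = load(key)
        if key not in self._entries and len(self._entries) >= self._max_entries:
            # Cache is full: drop the entry that was inserted first
            # (dicts preserve insertion order).
            self._entries.pop(next(iter(self._entries)))
        self._entries[key] = (value, now + self._ttl)
        return value
```

A longer TTL absorbs more of a traffic burst but widens the window in which readers see old data, which is exactly the tolerance question raised above.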

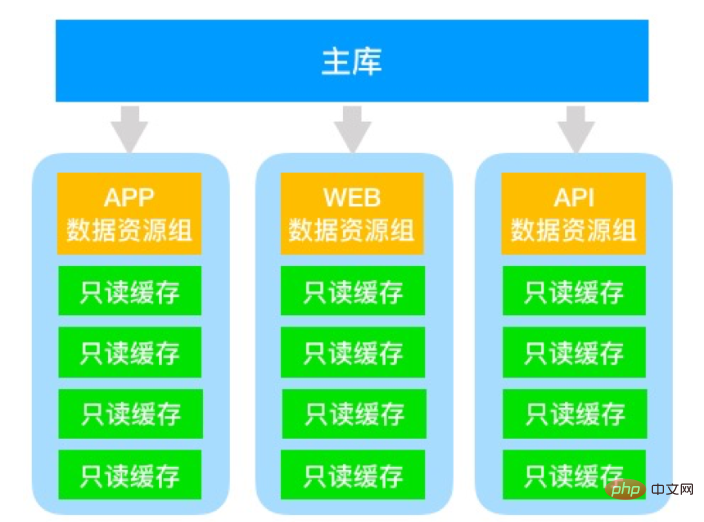

The architecture diagram above shows an optimized solution. Several branches of read-only replicas are attached to the master, and each request source is given its own independent cache service. For example, mobile applications are always routed to the APP data resource group, and WEB access is routed to the WEB data resource group. Each resource group can provide N read-only replicas to raise concurrent capacity for requests from the same source. This architecture improves resource isolation between access sources and improves the stability and availability of the service under multi-source access.
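The routing rule described here, a fixed resource group per source with reads spread over that group's replicas, might look like the sketch below. Everything in it is assumed for illustration: the class names, the round-robin choice of replica, the fallback to a default group, and the replica names in the usage note are not from the original article.

```python
import itertools


class ResourceGroup:
    """A named set of read-only replicas served in round-robin order."""

    def __init__(self, name, replicas):
        if not replicas:
            raise ValueError("a resource group needs at least one replica")
        self.name = name
        self._cycle = itertools.cycle(replicas)

    def next_replica(self):
        return next(self._cycle)


class SourceRouter:
    """Maps each request source (for example "app" or "web") to its own resource group."""

    def __init__(self, groups, default):
        self._groups = groups
        self._default = default

    def route(self, source):
        # Unknown sources fall back to the default group (an assumption of this sketch).
        group = self._groups.get(source, self._groups[self._default])
        return group.next_replica()
```

For example, a router built with an `"app"` group of two replicas and a `"web"` group of one replica sends successive APP requests to alternating APP replicas, while WEB traffic never touches them, which is the isolation property the architecture is after.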

The problems with this solution are also obvious:

The read and write performance of the master is poor

There are many read-only replicas, so the cost is high

The read-only replication chain is long, which makes it hard to manage and maintain and drives up operation and maintenance costs

In the most extreme case we have seen, a customer used an architecture of 1 master and 40 read-only replicas to handle a similar business scenario.

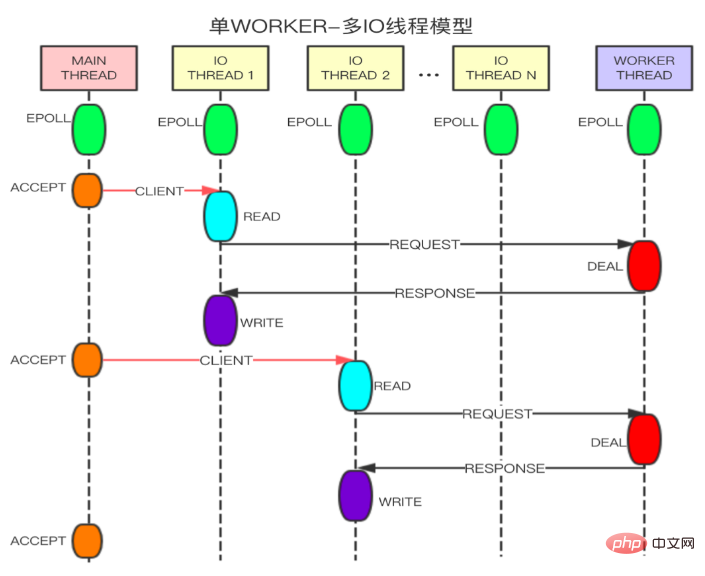

How does Alibaba Cloud Redis solve this ultra-high concurrent access problem?

Alibaba Cloud has launched a performance-enhanced version of Redis. By improving the concurrent processing of network I/O, it greatly improves the read and write performance of a single Redis node, a threefold improvement over the community version. Because it keeps the single-Worker processing model, it is 100% compatible with the Redis protocol. A single piece of data can easily be served at more than one million QPS. In the media scenario described in this article, a performance-enhanced instance with 1 master and 5 read-only replicas can reach 2 million QPS on a single piece of data, which effectively relieves industry pain points such as traffic surges and ultra-high concurrent access caused by sudden hot events. Compared with a self-built community-version deployment of 1 master and 40 read-only replicas, the Alibaba Cloud Redis performance-enhanced version delivers the same performance with 1 master and 5 read-only replicas, and it is more stable, easier to manage, and easier to use.


