Python: multi-threaded crawling of the web pages behind Google search results

(1) Capturing Google search links with urllib2 + BeautifulSoup

Recently I took part in a project that requires processing Google search results. I had previously learned some Python tools for processing web pages and, in practice, used urllib2 and BeautifulSoup to crawl them. However, when crawling Google search results, I found that processing the source code of the results page directly yields many "dirty" links.

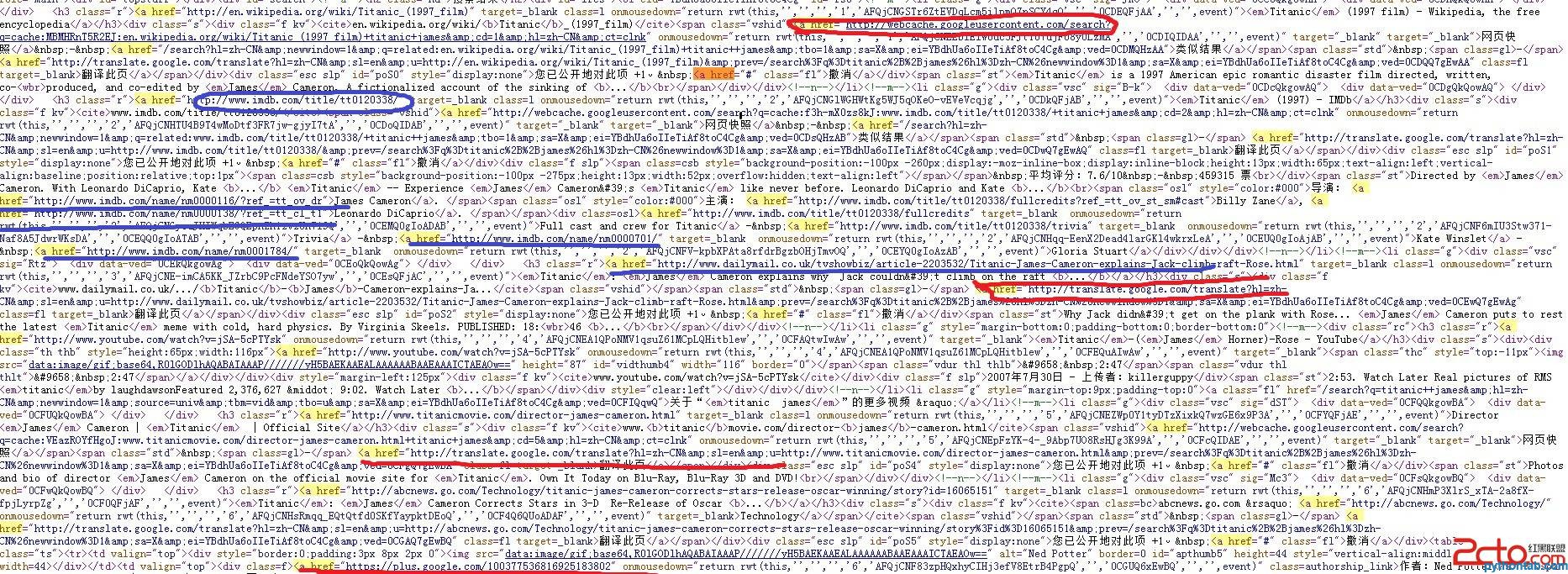

Look at the picture below for the results of searching for "titanic james":

The ones marked in red in the picture are not needed, and the ones marked in blue need to be captured and processed.
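To illustrate the problem, here is a minimal sketch of the naive approach: collect the href of every <a> tag on the results page. It uses the standard library's html.parser instead of BeautifulSoup so that it runs without third-party packages, and it parses a small hand-written HTML snippet. The snippet and its link formats are invented for illustration and are not Google's real markup.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect the href of every <a> tag, the way a naive scraper would."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            # attrs is a list of (name, value) pairs; value may be None
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


# Hypothetical, simplified results page: one real result wrapped in a
# redirect link, plus navigation and cache links the scraper also picks up.
SAMPLE_PAGE = """
<a href="/search?q=titanic+james">Images</a>
<a href="/url?q=http://www.example.com/titanic">Titanic result</a>
<a href="http://webcache.googleusercontent.com/search?q=cache:abc">Cached</a>
<a href="/preferences">Settings</a>
"""

collector = LinkCollector()
collector.feed(SAMPLE_PAGE)
for link in collector.links:
    print(link)
```

Only one of the four collected links leads to an actual search result; the others correspond to the kind of "dirty" links marked in red.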

Such "dirty" links could of course be removed with filtering rules, but that would make the program considerably more complex. Just as I was frowning over the filtering rules, a classmate pointed out that Google probably provides an API for this, and it suddenly clicked.
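For comparison, rule-based filtering might look roughly like the sketch below. The three rules (unwrap links of the form /url?q=<target>, drop relative links, drop links back to Google's own hosts) and the BLOCKED_HOSTS list are hypothetical examples, not a description of Google's actual page format. A real results page would need more rules, and keeping such rules up to date is exactly the complexity an API avoids.

```python
from urllib.parse import parse_qs, urlparse

# Hypothetical rule data: hosts that belong to the search engine itself.
BLOCKED_HOSTS = ("google.com", "googleusercontent.com")


def _is_blocked(host):
    # Match the host itself or any of its subdomains.
    return any(host == h or host.endswith("." + h) for h in BLOCKED_HOSTS)


def clean_links(raw_links):
    """Apply simple, illustrative rules to turn raw hrefs into result URLs."""
    cleaned = []
    for link in raw_links:
        parsed = urlparse(link)
        # Rule 1: unwrap redirect links of the form /url?q=<target>.
        if parsed.path == "/url":
            targets = parse_qs(parsed.query).get("q")
            if not targets:
                continue
            link = targets[0]
            parsed = urlparse(link)
        # Rule 2: drop remaining relative links (navigation, settings, ...).
        if not parsed.netloc:
            continue
        # Rule 3: drop links that point back to the search engine's hosts.
        if _is_blocked(parsed.hostname or ""):
            continue
        cleaned.append(link)
    return cleaned


raw = [
    "/search?q=titanic+james",
    "/url?q=http://www.example.com/titanic",
    "http://webcache.googleusercontent.com/search?q=cache:abc",
    "/preferences",
]
print(clean_links(raw))  # ['http://www.example.com/titanic']
```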

(2) Google Web Search API + Multithreading

Google's documentation gives the following example of searching from Python:

```python
import urllib2
import simplejson

# The request also includes the userip parameter which provides the end
# user's IP address. Doing so will help distinguish this legitimate
# server-side traffic from traffic which doesn't come from an end-user.
url = ('https://ajax.googleapis.com/ajax/services/search/web'
       '?v=1.0&q=Paris%20Hilton&userip=USERS-IP-ADDRESS')

# Enter the URL of your site here as the Referer value
request = urllib2.Request(
    url, None, {'Referer': 'ENTER-THE-URL-OF-YOUR-SITE-HERE'})
response = urllib2.urlopen(request)

# Process the JSON string.
results = simplejson.load(response)
# now have some fun with the results...
```
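The response can be processed without touching the network. The sketch below is written for Python 3 and uses the standard json module, which offers the same load/loads interface as simplejson. The sample response is hand-written: it only reproduces the structure that the author's code reads later (results['responseData']['results'], each item with a 'url' key), and treating a null responseData as "no results" is an assumption about how failed requests look. extract_urls is a hypothetical helper name.

```python
import json

# Hand-written sample shaped like the structure the author's code reads.
# All other fields of the real response are omitted.
SAMPLE_RESPONSE = """
{
  "responseData": {
    "results": [
      {"url": "http://www.example.com/titanic"},
      {"url": "http://www.example.org/james-cameron"}
    ]
  }
}
"""


def extract_urls(json_text):
    """Return the result URLs from a search response, or [] if there are none."""
    data = json.loads(json_text)
    # Assumption: responseData may be null when the request fails,
    # so fall back to empty containers at each level.
    response_data = data.get("responseData") or {}
    items = response_data.get("results") or []
    return [item["url"] for item in items if "url" in item]


print(extract_urls(SAMPLE_RESPONSE))
```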

In practice you may need to fetch many pages of Google results, so multiple threads can be used to share the crawling work. For a detailed reference on the Google Web Search API, see its documentation (the "Standard URL Arguments" section describes the request parameters). Pay special attention to the rsz parameter in the URL: it must be 8 or less. If it is greater than 8, the request returns an error!
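A minimal Python 3 sketch of how the paging could be planned: one URL per results page, each of which can then be handed to its own thread. BASE_URL and the v, q, rsz and start parameters come from the code in this article, and the upper limit of 8 comes from the text above; the lower bound of 1 and the helper name build_page_urls are assumptions for illustration.

```python
from urllib.parse import urlencode

BASE_URL = "https://ajax.googleapis.com/ajax/services/search/web"
MAX_RSZ = 8  # per the text above, rsz greater than 8 causes an error


def build_page_urls(keywords, rsz=8, pages=8):
    """Build one request URL per results page (one per worker thread)."""
    if not 1 <= rsz <= MAX_RSZ:  # lower bound of 1 is an assumption
        raise ValueError("rsz must be between 1 and %d" % MAX_RSZ)
    urls = []
    for page in range(pages):
        # start is the offset of the first result on this page
        params = {"v": "1.0", "q": keywords, "rsz": rsz, "start": page * rsz}
        urls.append(BASE_URL + "?" + urlencode(params))
    return urls


for url in build_page_urls("titanic james", rsz=8, pages=2):
    print(url)
```

urlencode encodes the space in the keywords as +, whereas the author's urllib.quote produces %20; both forms are commonly accepted in query strings.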

(3) Code implementation

The implementation still has problems: it runs, but it is not robust and needs improvement. As a Python beginner, I would be grateful if more experienced readers could point out any mistakes.

```python
#-*-coding:utf-8-*-
import os
import shutil
import threading
import urllib
import urllib2

import simplejson

# html_filter is the author's own helper module (not shown); it is only
# needed if the filtering line further down is re-enabled.
# import html_filter

# Input the keywords
keywords = raw_input('Enter the keywords: ')

# Results per page (rsz, at most 8) and number of pages to fetch
rnum_perpage = 8
pages = 8


# Define the thread function
def thread_scratch(url, rnum_perpage, page):
    url_set = []
    try:
        request = urllib2.Request(url, None, {'Referer': 'http://www.sina.com'})
        response = urllib2.urlopen(request)
        # Process the JSON string.
        results = simplejson.load(response)
        info = results['responseData']['results']
    except Exception as e:
        print 'error occurred'
        print e
    else:
        for minfo in info:
            url_set.append(minfo['url'])
            print minfo['url']

    # Process the links
    i = 0
    for u in url_set:
        try:
            request_url = urllib2.Request(u, None, {'Referer': 'http://www.sina.com'})
            request_url.add_header('User-agent', 'CSC')
            response_data = urllib2.urlopen(request_url).read()
            # Filter the content
            # content_data = html_filter.filter_tags(response_data)
            # Write to file; page is the offset of this page's first result,
            # so i + page gives every downloaded page a unique number
            filenum = i + page
            filename = dir_name + '/related_html_' + str(filenum)
            print ' write start: related_html_' + str(filenum)
            f = open(filename, 'wb')
            f.write(response_data)
            # print content_data
            f.close()
            print ' write done: related_html_' + str(filenum)
        except Exception as e:
            print 'error occurred 2'
            print e
        i = i + 1
    return


# Create the folder, removing an old file or folder with the same name first
# (the original used the author's own common.delete_dir_or_file() here)
dir_name = 'related_html_' + urllib.quote(keywords, '')
if os.path.exists(dir_name):
    print 'exists file'
    if os.path.isdir(dir_name):
        shutil.rmtree(dir_name)
    else:
        os.remove(dir_name)
os.makedirs(dir_name)

# Crawl the web pages: one thread per results page
print 'start to scratch web pages:'
for x in range(pages):
    print "page:%s" % (x + 1)
    page = x * rnum_perpage
    url = ('https://ajax.googleapis.com/ajax/services/search/web'
           '?v=1.0&q=%s&rsz=%s&start=%s') % (urllib.quote(keywords), rnum_perpage, page)
    print url
    t = threading.Thread(target=thread_scratch, args=(url, rnum_perpage, page))
    t.start()

# The main thread waits for the worker threads to finish crawling
main_thread = threading.currentThread()
for t in threading.enumerate():
    if t is main_thread:
        continue
    t.join()
```
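Since the code above is admittedly not very robust, here is one possible restructuring, written for Python 3 as a sketch rather than a drop-in replacement. It swaps the hand-managed threads for concurrent.futures.ThreadPoolExecutor, returns each page's result instead of relying on the global dir_name, and records failures per URL so that one bad page does not stop the others. The download step is passed in as a function; crawl_pages and fake_fetch are hypothetical names, and the fake fetcher lets the example run without network access.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def crawl_pages(page_urls, fetch, max_workers=4):
    """Fetch every URL in a thread pool.

    Returns (results, errors): results maps URL -> fetched data and
    errors maps URL -> the exception raised while fetching it.
    """
    results, errors = {}, {}
    # Leaving the with-block waits for all submitted tasks, which replaces
    # the manual join loop over threading.enumerate().
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(fetch, url): url for url in page_urls}
        for future in as_completed(futures):
            url = futures[future]
            try:
                results[url] = future.result()
            except Exception as exc:  # record the failure and keep going
                errors[url] = exc
    return results, errors


# Hypothetical stand-in for a real download function.
def fake_fetch(url):
    if "bad" in url:
        raise IOError("simulated network error")
    return "<html>%s</html>" % url


ok, failed = crawl_pages(["http://a.example", "http://bad.example"], fake_fetch)
print(sorted(ok))      # ['http://a.example']
print(sorted(failed))  # ['http://bad.example']
```

Writing the fetched pages to disk is left to the caller here, which keeps the worker function free of shared global state.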
