Pushing The Limits Of Modern LLMs With A Dinner Plate?

In general, we know that AI is big, and getting bigger. It’s fast, and getting faster.

Specifically, though, not everyone’s familiar with some of the newest hardware and software approaches in the industry, and how they promote better results. People are working hard on revealing more of the inherent power in LLM technologies. And things are moving at a rapid clip.

The Cerebras WSE

One of these is the Cerebras WSE or Wafer Scale Engine – this is a massive processor that can power previously unimaginable AI capabilities.

First of all, you wouldn’t call it a microprocessor anymore. It’s famously the size of a dinner plate – 8.5 x 8.5 inches. It has hundreds of thousands of cores, and a context capability that’s staggering.

But let me start with some basic terminology from a presentation by Morgan Rockett, previously an MIT student, who covers the basics of evaluating LLM output.

The Glossary

LLMs are neural networks. They rely on tokenization, which breaks text into tokens: small units of data that the model processes as part of the overall context of a prompt.

Then there is context – the extent to which the program can look back at the previous tokens, and tie them into the greater picture.
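To make the two terms concrete, here is a minimal sketch. It assumes a toy whitespace tokenizer (real LLMs use subword tokenizers, so actual token counts will differ), and the function names and the tiny window size are hypothetical, chosen only for illustration.

```python
def tokenize(text: str) -> list[str]:
    """Toy tokenizer: split on whitespace. Real LLMs use subword tokenizers."""
    return text.split()


def fit_to_context(tokens: list[str], context_window: int) -> list[str]:
    """Keep only the most recent tokens that fit in the context window."""
    if context_window <= 0:
        return []
    return tokens[-context_window:]


tokens = tokenize("the quick brown fox jumps over the lazy dog")
# With a window of 4 tokens, the model can only "look back" at the last 4.
visible = fit_to_context(tokens, context_window=4)
print(len(tokens), visible)
```

Anything that falls outside the window is simply invisible to the model, which is why a larger context matters for long documents.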

There’s also inference – the process by which the model computes a response in real time when you give it a question.

Another term that Rockett goes over is rate limits, where if you don’t own the model, you’re going to have to put up with thresholds imposed by that model’s operators.
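As a rough illustration of what such a threshold looks like from the client side, the sketch below tracks requests in a sliding time window and reports how long to wait before sending the next one. The class name and the limits used are hypothetical; real providers publish their own limits and often count tokens as well as requests.

```python
from collections import deque


class SlidingWindowLimiter:
    """Allow at most max_requests calls within any window_seconds span."""

    def __init__(self, max_requests: int, window_seconds: float) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._sent = deque()  # timestamps of recent requests, oldest first

    def wait_time(self, now: float) -> float:
        """Seconds to wait before another request is allowed at time `now`."""
        # Drop timestamps that have aged out of the window.
        while self._sent and now - self._sent[0] >= self.window_seconds:
            self._sent.popleft()
        if len(self._sent) < self.max_requests:
            return 0.0
        # Window is full: wait until the oldest request ages out.
        return self.window_seconds - (now - self._sent[0])

    def record(self, now: float) -> None:
        """Note that a request was sent at time `now`."""
        self._sent.append(now)


limiter = SlidingWindowLimiter(max_requests=2, window_seconds=60.0)
limiter.record(0.0)
limiter.record(10.0)
print(limiter.wait_time(20.0))  # the window is full at t=20
print(limiter.wait_time(60.0))  # the first request has aged out by t=60
```

Timestamps are passed in explicitly so the behavior is easy to check; in practice a caller would pass `time.monotonic()`.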

Upping the Game with the WSE

As he explains the hardware behind these systems, Rockett reveals that he is a fellow with Cerebras, which is pioneering that massive chip.

Looking at common hardware setups, he goes over four systems – Nvidia GPUs, Google TPUs, Groq LPUs (language processing units), and the Cerebras WSE.

“There’s really nothing like it on the market,” he says of the WSE product, noting that you can get big context and fast inference if you have the right hardware and the right technique. “In terms of speed benchmarks, Cerebras is an up and coming chip company. They have 2500 tokens per second, which is extremely fast. It's almost instant response. The entire page of text will get generated, and it's too fast to read.”

He noted that Groq is currently in second place with around 1600 tokens per second.
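To put those two figures in perspective, a quick back-of-the-envelope calculation helps. The throughput numbers are the ones quoted in the talk; the 500-token page size is an assumption made purely for illustration.

```python
def generation_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Time to generate num_tokens at a steady throughput."""
    return num_tokens / tokens_per_second


PAGE_TOKENS = 500  # assumed size of one page of text, not a figure from the talk

cerebras_seconds = generation_seconds(PAGE_TOKENS, 2500)
second_place_seconds = generation_seconds(PAGE_TOKENS, 1600)

print(f"2500 tok/s: {cerebras_seconds:.3f} s per page")
print(f"1600 tok/s: {second_place_seconds:.3f} s per page")
```

Under that assumption, both rates produce a page in well under a second, which matches the description of text appearing faster than anyone can read it.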

Funneling Data

The approach showcased in this presentation was to select chunks from a large file and summarize the contents of that file from the selection.

Noting that really big files are too big for LLMs to manage, Rockett presents three approaches – Log2, square root, and double square root – all of which involve taking a sampling of chunks to get a cohesive result without overloading your model, using a “funnel” design.
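The article does not spell out how the chunks are chosen, so the following is only a sketch of one plausible reading: the sample size grows as log2, square root, or fourth root of the number of chunks, and the chunks are taken at evenly spaced positions. Reading "double square root" as a square root applied twice, the even spacing, and all function names are assumptions.

```python
import math


def sample_size(num_chunks: int, strategy: str) -> int:
    """How many chunks to keep under each (assumed) funnel strategy."""
    if num_chunks <= 0:
        return 0
    if strategy == "log2":
        k = math.log2(num_chunks)
    elif strategy == "sqrt":
        k = math.sqrt(num_chunks)
    elif strategy == "double_sqrt":
        # Assumption: "double square root" means the square root taken twice.
        k = math.sqrt(math.sqrt(num_chunks))
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    # Always keep at least one chunk and never more than exist.
    return max(1, min(num_chunks, math.ceil(k)))


def pick_chunks(chunks: list, strategy: str) -> list:
    """Pick evenly spaced chunks so the sample spans the whole file."""
    k = sample_size(len(chunks), strategy)
    if k == 0:
        return []
    step = len(chunks) / k
    return [chunks[int(i * step)] for i in range(k)]


chunks = list(range(1024))  # stand-in for 1024 chunks of a large file
for name in ("log2", "sqrt", "double_sqrt"):
    print(name, len(pick_chunks(chunks, name)))
```

Whatever the exact selection rule, the point of the funnel is the same: the number of chunks sent to the model grows far more slowly than the file itself.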

In a demo, he showed inference completing in 4 to 5 seconds on a data set of 4 GB, the equivalent, he said, of a 10-foot-tall stack of paper, or alternately, 4 million tokens.

The JFK Files

The data he chose was the full archive of available information on a transformational event: the assassination of JFK in the 1960s.

Rockett showed the model using his approach to summarize, working with virtually unlimited RAM, where tokenization was the major time burden.

With slotted input techniques, he said, you could get around rate limits, and tokenization can conceivably be worked out.

Check out the video for a summary on the archive, going over a lot of the CIA’s clandestine activities in that era, and tying in the Bay of Pigs event and more.

More on the WSE

Going back to practical uses for the Cerebras processor, Rockett mentioned legal, government and the trading world, where quick information is paramount.

I wanted more concrete examples, so I asked ChatGPT. It returned numerous interesting use cases for this hardware, including G42, an AI and cloud company in the United Arab Emirates, as well as the Mayo Clinic, various pharmaceutical companies, and the Lawrence Livermore National Laboratory (here’s a story I did including Lawrence Livermore’s nuclear project).

Then I asked a different question:

“Can you eat dinner off of a Cerebras WSE?”

“Physically?” ChatGPT replied. “Yes, but you'd be committing both a financial and technological atrocity … the Cerebras Wafer-Scale Engine (WSE) is the largest chip ever built—Using it as a plate would be like eating spaghetti off the Rosetta Stone—technically possible, but deeply absurd.”

It gave me these three prime reasons not to attempt something so foolhardy (quoted verbatim):

- It’s extremely fragile—even a crumb could short something out.

- It runs very hot and requires active cooling, so you might cook your food unintentionally (badly).

- The surface isn't food-safe; any sauce would void the warranty and potentially poison you.

“In short: You could eat dinner off of it,” ChatGPT said. “Once. Then you’d have no chip, no dinner, and no job.”

Touché, ChatGPT. Touché.

That’s a little about one of the most fascinating pieces of hardware out there, and where it fits into the equation of context and inference. When we supercharge these systems, we see what used to take a long time happening pretty much in real time. That’s a real eye-opener.

The above is the detailed content of Pushing The Limits Of Modern LLMs With A Dinner Plate?. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1677

1677

14

14

1431

1431

52

52

1334

1334

25

25

1280

1280

29

29

1257

1257

24

24

How to Build MultiModal AI Agents Using Agno Framework?

Apr 23, 2025 am 11:30 AM

How to Build MultiModal AI Agents Using Agno Framework?

Apr 23, 2025 am 11:30 AM

While working on Agentic AI, developers often find themselves navigating the trade-offs between speed, flexibility, and resource efficiency. I have been exploring the Agentic AI framework and came across Agno (earlier it was Phi-

OpenAI Shifts Focus With GPT-4.1, Prioritizes Coding And Cost Efficiency

Apr 16, 2025 am 11:37 AM

OpenAI Shifts Focus With GPT-4.1, Prioritizes Coding And Cost Efficiency

Apr 16, 2025 am 11:37 AM

The release includes three distinct models, GPT-4.1, GPT-4.1 mini and GPT-4.1 nano, signaling a move toward task-specific optimizations within the large language model landscape. These models are not immediately replacing user-facing interfaces like

How to Add a Column in SQL? - Analytics Vidhya

Apr 17, 2025 am 11:43 AM

How to Add a Column in SQL? - Analytics Vidhya

Apr 17, 2025 am 11:43 AM

SQL's ALTER TABLE Statement: Dynamically Adding Columns to Your Database In data management, SQL's adaptability is crucial. Need to adjust your database structure on the fly? The ALTER TABLE statement is your solution. This guide details adding colu

Rocket Launch Simulation and Analysis using RocketPy - Analytics Vidhya

Apr 19, 2025 am 11:12 AM

Rocket Launch Simulation and Analysis using RocketPy - Analytics Vidhya

Apr 19, 2025 am 11:12 AM

Simulate Rocket Launches with RocketPy: A Comprehensive Guide This article guides you through simulating high-power rocket launches using RocketPy, a powerful Python library. We'll cover everything from defining rocket components to analyzing simula

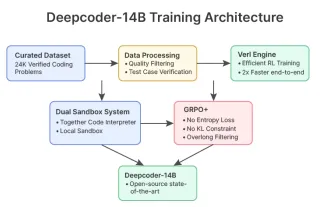

DeepCoder-14B: The Open-source Competition to o3-mini and o1

Apr 26, 2025 am 09:07 AM

DeepCoder-14B: The Open-source Competition to o3-mini and o1

Apr 26, 2025 am 09:07 AM

In a significant development for the AI community, Agentica and Together AI have released an open-source AI coding model named DeepCoder-14B. Offering code generation capabilities on par with closed-source competitors like OpenAI

The Prompt: ChatGPT Generates Fake Passports

Apr 16, 2025 am 11:35 AM

The Prompt: ChatGPT Generates Fake Passports

Apr 16, 2025 am 11:35 AM

Chip giant Nvidia said on Monday it will start manufacturing AI supercomputers— machines that can process copious amounts of data and run complex algorithms— entirely within the U.S. for the first time. The announcement comes after President Trump si

One Prompt Can Bypass Every Major LLM's Safeguards

Apr 25, 2025 am 11:16 AM

One Prompt Can Bypass Every Major LLM's Safeguards

Apr 25, 2025 am 11:16 AM

HiddenLayer's groundbreaking research exposes a critical vulnerability in leading Large Language Models (LLMs). Their findings reveal a universal bypass technique, dubbed "Policy Puppetry," capable of circumventing nearly all major LLMs' s

Guy Peri Helps Flavor McCormick's Future Through Data Transformation

Apr 19, 2025 am 11:35 AM

Guy Peri Helps Flavor McCormick's Future Through Data Transformation

Apr 19, 2025 am 11:35 AM

Guy Peri is McCormick’s Chief Information and Digital Officer. Though only seven months into his role, Peri is rapidly advancing a comprehensive transformation of the company’s digital capabilities. His career-long focus on data and analytics informs