Building a Hadoop pseudo-distributed cluster

Software preparation

I'm using a CentOS 6.6 virtual machine with the host name repo. The virtual machine was set up by following the steps for installing a Linux virtual machine on Windows, and the JDK was installed on it by following the guide to installing the JDK on Linux. The virtual machine is also configured for passwordless SSH login to itself; for the settings, refer to the guide on configuring passwordless login between virtual machines. The Hadoop installation package can be downloaded from: https://www.php.cn/link/8485694bae96aebc7c4fe6119599d0e0 .

Upload the Hadoop installation package to the server and decompress it

[root@repo ~]# tar zxvf hadoop-2.6.5.tar.gz -C /opt/apps/
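
Note that tar -C expects the target directory to exist already. The following is a minimal sketch of a wrapper that creates the directory first; extract_to is a hypothetical helper name introduced here for illustration, not part of tar or Hadoop.

```shell
# Hypothetical helper: extract a .tar.gz archive into a directory,
# creating the directory first because tar -C fails if it is missing.
extract_to() {
  local archive="$1"
  local dest="$2"
  mkdir -p "$dest" && tar zxf "$archive" -C "$dest"
}

# Usage (as root on the virtual machine):
#   extract_to hadoop-2.6.5.tar.gz /opt/apps
```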

Configure environment variables

    [root@repo hadoop-2.6.5]# vi /etc/profile
    # Jump to the last line of the file and append the following
    export HADOOP_HOME=/opt/apps/hadoop-2.6.5
    export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin
    [root@repo hadoop-2.6.5]# . /etc/profile
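
If this step is scripted rather than done in vi, running it twice would append the exports twice. The sketch below appends them only when HADOOP_HOME is not already exported in the file; add_hadoop_env is a hypothetical helper name, and the profile path is a parameter so the function can be tried on a scratch file first.

```shell
# Hypothetical helper: append the Hadoop variables to a profile file,
# but only if the file does not already export HADOOP_HOME.
add_hadoop_env() {
  local profile="$1"
  local hadoop_home="$2"
  if ! grep -q '^export HADOOP_HOME=' "$profile" 2>/dev/null; then
    {
      echo "export HADOOP_HOME=$hadoop_home"
      # Single quotes keep $PATH and $HADOOP_HOME unexpanded in the file.
      echo 'export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin'
    } >> "$profile"
  fi
}

# Usage (as root on the virtual machine):
#   add_hadoop_env /etc/profile /opt/apps/hadoop-2.6.5
#   . /etc/profile
```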

Edit the three configuration files hadoop-env.sh, mapred-env.sh, and yarn-env.sh, and set JAVA_HOME in each

    [root@repo hadoop]# pwd
    /opt/apps/hadoop-2.6.5/etc/hadoop
    [root@repo hadoop]# vi hadoop-env.sh
    export JAVA_HOME=/usr/local/jdk1.8.0_73
    [root@repo hadoop]# vi mapred-env.sh
    export JAVA_HOME=/usr/local/jdk1.8.0_73
    [root@repo hadoop]# vi yarn-env.sh
    export JAVA_HOME=/usr/local/jdk1.8.0_73
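
It is easy to miss one of the three files. As a rough check, the sketch below reports any of them that lacks an uncommented JAVA_HOME export pointing at an absolute path; check_java_home is a hypothetical helper name, not a Hadoop command.

```shell
# Hypothetical helper: report which of the three env files lack an
# uncommented "export JAVA_HOME=/..." line. Returns 0 if all are set.
check_java_home() {
  local conf_dir="$1"
  local f
  local missing=0
  for f in hadoop-env.sh mapred-env.sh yarn-env.sh; do
    if ! grep -Eq '^[[:space:]]*export[[:space:]]+JAVA_HOME=/' "$conf_dir/$f" 2>/dev/null; then
      echo "JAVA_HOME not set in $f"
      missing=1
    fi
  done
  return "$missing"
}

# Usage:
#   check_java_home /opt/apps/hadoop-2.6.5/etc/hadoop
```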

Edit the core-site.xml and hdfs-site.xml configuration files to configure the pseudo-distributed settings

    [root@repo hadoop]# vi core-site.xml
    <configuration>
        <property>
            <name>fs.defaultFS</name>
            <value>hdfs://repo:9000</value>
        </property>
        <property>
            <name>hadoop.tmp.dir</name>
            <value>/var/hadoop/pseudo</value>
        </property>
    </configuration>
    [root@repo hadoop]# vi hdfs-site.xml
    <configuration>
        <property>
            <name>dfs.replication</name>
            <value>1</value>
        </property>
        <property>
            <name>dfs.namenode.secondary.http-address</name>
            <value>repo:50090</value>
        </property>
    </configuration>
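
To confirm that Hadoop actually picks up these values, the hdfs getconf -confKey command can read them back once the environment variables are loaded. The sketch below loops over the four keys set above; show_hdfs_conf is a hypothetical wrapper name, and it assumes hdfs is on the PATH.

```shell
# Hypothetical helper: print the effective value of each key that was
# set in core-site.xml and hdfs-site.xml, using hdfs getconf.
show_hdfs_conf() {
  local key
  for key in fs.defaultFS hadoop.tmp.dir dfs.replication \
             dfs.namenode.secondary.http-address; do
    printf '%s = %s\n' "$key" "$(hdfs getconf -confKey "$key")"
  done
}

# Usage:
#   show_hdfs_conf
```

If a value differs from what was written in the XML files, a likely cause is a typo in a property name or an edit made in a different configuration directory.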

Edit the slaves configuration file to specify the node that runs the DataNode

    [root@repo hadoop]# vi slaves
    repo

Format the file system

    [root@repo hadoop]# hadoop namenode -format
    # Success message
    17/09/16 21:17:11 INFO common.Storage: Storage directory /var/hadoop/pseudo/dfs/name has been successfully formatted.
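
Formatting is meant to be done once. Reformatting an existing NameNode generates a new cluster ID, which a DataNode that still holds data from the old one will not match. The sketch below guards against that by checking for the VERSION file that formatting writes into the storage directory; namenode_is_formatted is a hypothetical helper name.

```shell
# Hypothetical helper: succeed if a NameNode storage directory already
# holds a formatted file system (formatting writes current/VERSION there).
namenode_is_formatted() {
  local name_dir="$1"
  [ -f "$name_dir/current/VERSION" ]
}

# Usage, with the storage directory reported in the success message:
#   if namenode_is_formatted /var/hadoop/pseudo/dfs/name; then
#     echo "NameNode already formatted, skipping"
#   else
#     hadoop namenode -format
#   fi
```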

Start HDFS and YARN

    [root@repo hadoop]# start-dfs.sh
    Starting namenodes on [repo]
    repo: starting namenode, logging to /opt/apps/hadoop-2.6.5/logs/hadoop-root-namenode-repo.out
    repo: starting datanode, logging to /opt/apps/hadoop-2.6.5/logs/hadoop-root-datanode-repo.out
    Starting secondary namenodes [repo]
    repo: starting secondarynamenode, logging to /opt/apps/hadoop-2.6.5/logs/hadoop-root-secondarynamenode-repo.out
    [root@repo hadoop]# start-yarn.sh
    starting yarn daemons
    starting resourcemanager, logging to /opt/apps/hadoop-2.6.5/logs/yarn-root-resourcemanager-repo.out
    repo: starting nodemanager, logging to /opt/apps/hadoop-2.6.5/logs/yarn-root-nodemanager-repo.out
    [root@repo hadoop]# jps
    4368 Jps
    3957 ResourceManager
    3512 NameNode
    3641 DataNode
    4058 NodeManager
    3805 SecondaryNameNode
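
The jps listing should contain all five daemons: NameNode, DataNode, SecondaryNameNode, ResourceManager, and NodeManager. Rather than checking by eye, the sketch below reads jps output on standard input and prints the name of every daemon that is missing; missing_daemons is a hypothetical helper name.

```shell
# Hypothetical helper: read jps output on stdin and print the name of
# each expected Hadoop daemon that does not appear in it.
missing_daemons() {
  local jps_output
  local daemon
  jps_output="$(cat)"
  for daemon in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    # Match the whole line so SecondaryNameNode is not taken for NameNode.
    if ! printf '%s\n' "$jps_output" | grep -Eq "^[0-9]+ ${daemon}\$"; then
      echo "$daemon"
    fi
  done
}

# Usage:
#   jps | missing_daemons
```

Empty output means every daemon is running. If a name is printed, the corresponding .out and .log files under the logs directory shown in the startup messages are the first place to look.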

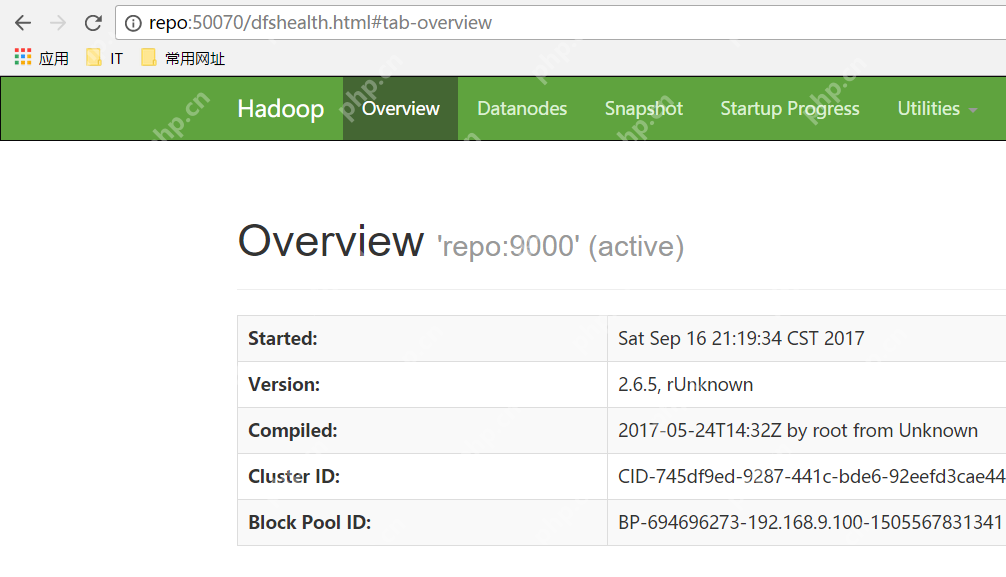

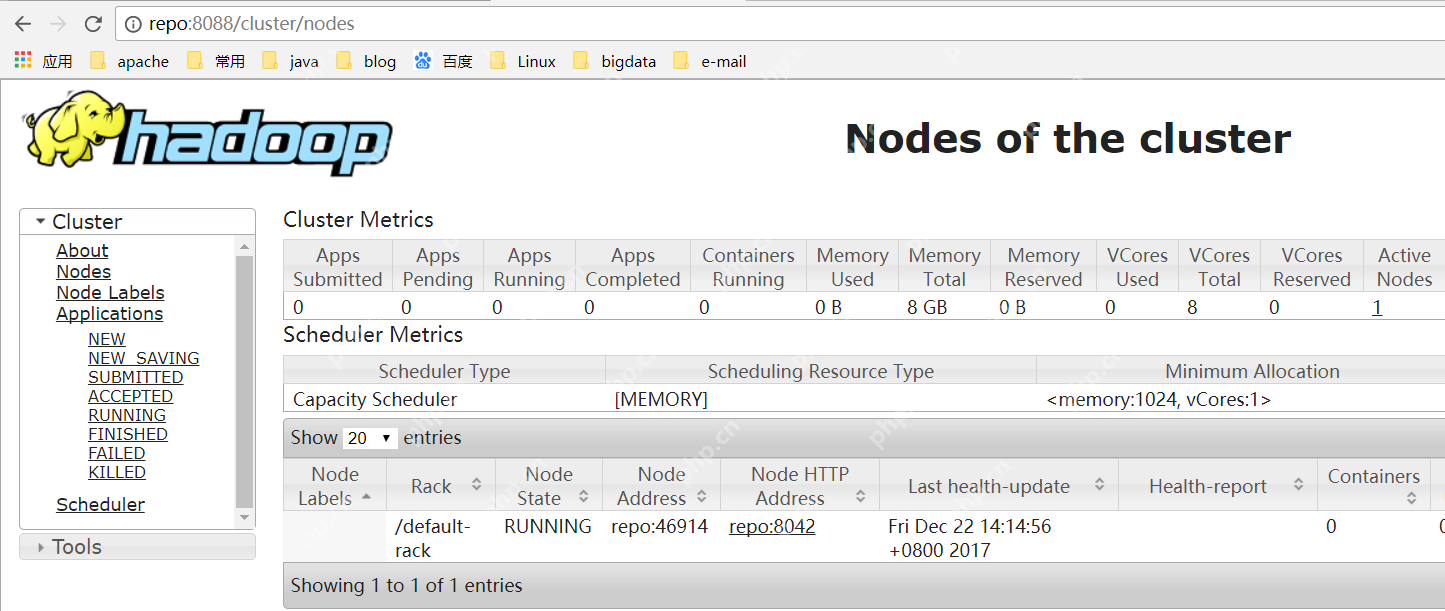

Copy after login Visit the WEB page

The build succeeded!
