How to Build an Intelligent FAQ Chatbot Using Agentic RAG

AI agents are now a part of enterprises big and small. From filling forms at hospitals and checking legal documents to analyzing video footage and handling customer support – we have AI agents for all kinds of tasks. Companies often spend hundreds of thousands of dollars on hiring customer support staff who can understand the needs of a customer and resolve them based on the company’s guidelines. Today, having an intelligent chatbot to answer FAQs can efficiently improve customer service. In this article, we will learn how to build an FAQ chatbot that can resolve customer queries in seconds, using agentic RAG (Retrieval Augmented Generation), LangGraph and ChromaDB.

Table of Contents

- Brief on Agentic RAG

- Architecture of the Intelligent FAQ Chatbot

- Hands-on Implementation on Building the Intelligent FAQ Chatbot

- Step 1: Install Dependencies

- Step 2: Import Required Libraries

- Step 3: Set Up the OpenAI API Key

- Step 4: Download the Dataset

- Step 5: Defining the Department Names for Mapping

- Step 6: Define the Helper Functions

- Step 7: Define the LangGraph Agent Components

- Step 8: Define the Graph Function

- Step 9: Initiate Agent Execution

- Step 10: Testing the Agent

- Conclusion

Brief on Agentic RAG

RAG is a hot topic nowadays. Everyone is talking about RAG and building applications on top of it. RAG helps LLMs to get access to the real-time data, which makes LLMs more accurate than ever before. However, traditional RAG systems tend to fail when it comes to choosing the best retrieval method, changing the retrieval workflow, or providing multi-step reasoning. This is where agentic RAG comes in.

Agentic RAG enhances traditional RAG by incorporating the capabilities of AI agents into it. With this superpower, RAGs can dynamically change the workflow based on the nature of the query, do multi-step reasoning, and multi-step retrieval as well. We can even integrate tools into the agentic RAG system, and it can dynamically decide which tool to use when. Overall, it results in improved accuracy and makes the system more efficient and scalable.

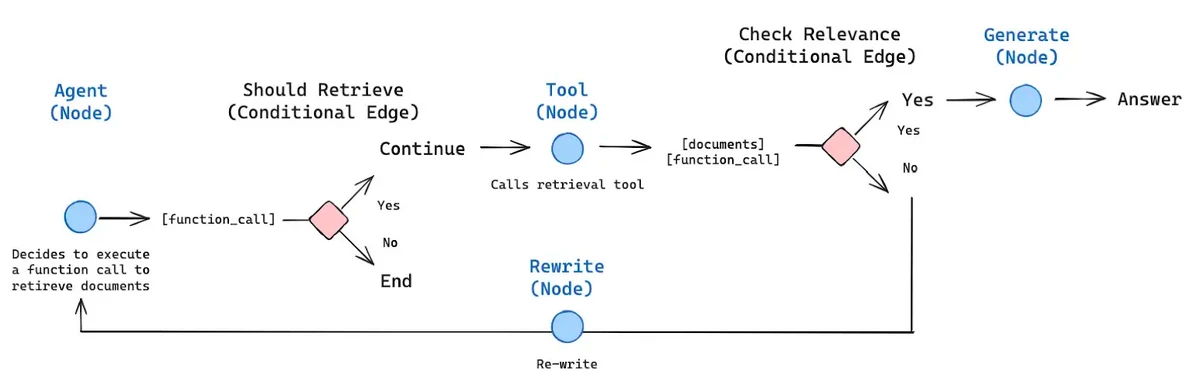

Here’s an example of an agentic RAG workflow.

The image above denotes the architecture of an agentic RAG framework. It shows how AI agents, when combined with RAG, can make decisions under certain conditions. The image clearly shows that if a conditional node is there, the agent will decide which edge to choose based on the context provided.

Also Read: 10 Business Applications of LLM Agents

Architecture of the Intelligent FAQ Chatbot

Now we are going to dive into the architecture of the chatbot we are going to build. We’ll be exploring how it works and what its important components are.

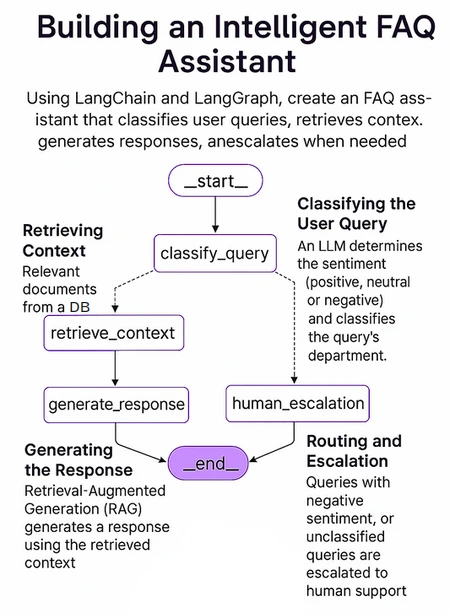

The following figure shows the overall structure of our system. We will be implementing this using LangGraph, which is an open-source AI agents framework from LangChain.

The key components of our system include:

- LangGraph: A powerful open-source AI agent framework that efficiently creates complex, multi-agent, cyclic graph-based agents. These agents can maintain the states throughout the workflow and can efficiently handle the complex queries.

- LLM: An efficient and powerful Large Language Model that can follow the instructions of the user and reply accordingly with the best of its knowledge. Here we will be using OpenAI’s o4-mini, which is a small reasoning model that is specifically designed for speed, affordability, and tool use.

- Vector Database: A vector database is used to store, manage and retrieve vector embeddings which are usually the numeric representation of data. Here we are using ChromaDB which is an open source AI native vector database. It is designed to empower the systems that depend on similarity searches, semantic searches, and other tasks involving vector data.

Also Read: How to Build a Customer Support Voice Agent

Hands-on Implementation on Building the Intelligent FAQ Chatbot

Now, we will be implementing the end-to-end workflow of our chatbot based on the architecture that we have discussed above. We will be doing it step-by-step with detailed explanations, code, as well as sample outputs. So let’s begin.

Step 1: Install Dependencies

We will start by installing all the required libraries into our Jupyter notebook. This includes libraries such as langchain, langgraph, langchain-openai, langchain-community, chromadb, openai, python-dotenv, pydantic, and pysqlite3.

!pip install -q langchain langgraph langchain-openai langchain-community chromadb openai python-dotenv pydantic pysqlite3

Step 2: Import Required Libraries

Now we are ready to import all the remaining libraries that we will need for this project.

import os import json from typing import List, TypedDict, Annotated, Dict from dotenv import load_dotenv # Langchain & LangGraph specific imports from langchain_openai import ChatOpenAI, OpenAIEmbeddings from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder from pydantic import BaseModel, Field from langchain_core.messages import SystemMessage, HumanMessage, AIMessage from langchain_core.documents import Document from langchain_community.vectorstores import Chroma from langgraph.graph import StateGraph, END

Step 3: Set Up the OpenAI API Key

Enter your OpenAI key to set it as an environment variable.

from getpass import getpass

OPENAI_API_KEY = getpass("OpenAI API Key:")

load_dotenv()

os.getenv("OPENAI_API_KEY")Step 4: Download the Dataset

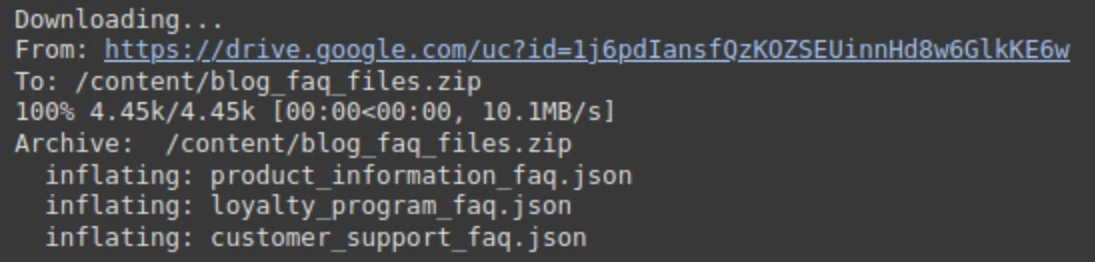

We have made a sample FAQ dataset in json format for different departments. We’ll need to download it from the drive and unzip it.

!gdown 1j6pdIansfQzKOZSEUinnHd8w6GlkKE6w !unzip -o /content/blog_faq_files.zip

Output:

Step 5: Defining the Department Names for Mapping

Now, let’s define the mapping of the departments so that our agentic system can understand which file belongs to which department.

# Define Department Names (ensure these match metadata used during ingestion)

DEPARTMENTS = [

"Customer Support",

"Product Information",

"Loyalty Program / Rewards"

]

UNKNOWN_DEPARTMENT = "Unknown/Other"

FAQ_FILES = {

"Customer Support": "customer_support_faq.json",

"Product Information": "product_information_faq.json",

"Loyalty Program / Rewards": "loyalty_program_faq.json",

}Step 6: Define the Helper Functions

We will define some helper functions which will be responsible for loading FAQs from the json files and also storing them in ChromaDB.

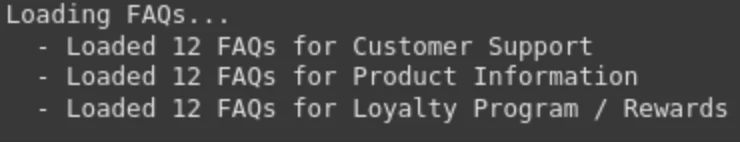

1. load_faqs(…): It is a helper function which loads the FAQ from the json files and store them in a list called all_faqs.

def load_faqs(file_paths: Dict[str, str]) -> Dict[str, List[Dict[str, str]]]:

"""Loads QA pairs from JSON files for each department."""

all_faqs = {}

print("Loading FAQs...")

for dept, file_path in file_paths.items():

try:

with open(file_path, 'r', encoding='utf-8') as f:

all_faqs[dept] = json.load(f)

print(f" - Loaded {len(all_faqs[dept])} FAQs for {dept}")

except FileNotFoundError:

print(f" - WARNING: FAQ file not found for {dept}: {file_path}. Skipping.")

except json.JSONDecodeError:

print(f" - ERROR: Could not decode JSON for {dept} from {file_path}. Skipping.")

return all_faqs2. setup_chroma_vector_store(…): This function sets up the ChromaDB to store the vector embeddings. For this, we will first define the Chroma configuration i.e., the directory which will contain the chroma database files. Then we will convert the FAQs to LangChain’s Documents. It will contain metadata and page content which is the predefined format for an accurate RAG. We can combine question and answers for better contextual retrieval or just embed the answer. We are keeping the question as well department name in the metadata.

# ChromaDB Configuration

CHROMA_PERSIST_DIRECTORY = "./chroma_db_store"

CHROMA_COLLECTION_NAME = "Chatbot_faqs"

def setup_chroma_vector_store(

all_faqs: Dict[str, List[Dict[str, str]]],

persist_directory: str,

collection_name: str,

embedding_model: OpenAIEmbeddings,

) -> Chroma:

"""Creates or loads a Chroma vector store with FAQ data and metadata."""

documents = []

print("\nPreparing documents for vector store...")

for department, faqs in all_faqs.items():

for faq in faqs:

# Combine Q&A for better contextual embedding, or just embed answers

# content = f"Question: {faq['question']}\nAnswer: {faq['answer']}"

content = faq['answer'] # Often embedding just the answer is effective for FAQ retrieval

doc = Document(

page_content=content,

metadata={

"department": department,

"question": faq['question'] # Keep question in metadata for potential display

}

)

documents.append(doc)

print(f"Total documents prepared: {len(documents)}")

if not documents:

raise ValueError("No documents found to add to the vector store. Check FAQ loading.")

print(f"Initializing ChromaDB vector store (Persistence: {persist_directory})...")

vector_store = Chroma(

collection_name=collection_name,

embedding_function=embedding_model,

persist_directory=persist_directory,

)

try:

vector_store = Chroma.from_documents(

documents=documents,

embedding=embedding_model,

persist_directory=persist_directory,

collection_name=collection_name

)

print(f"Created and populated ChromaDB with {len(documents)} documents.")

vector_store.persist() # Ensure persistence after creation

print("Vector store persisted.")

except Exception as create_e:

print(f"FATAL ERROR: Could not create Chroma vector store: {create_e}")

raise create_e

print("ChromaDB setup complete.")

return vector_storeStep 7: Define the LangGraph Agent Components

Let’s now define our AI agent component which is the main component of our work flow.

1. State definition: It is a python class containing the current state of the agent while running. It contains variables such as query, sentiment, department.

class AgentState(TypedDict): query: str sentiment: str department: str context: str # Retrieved context for RAG response: str # Final response to the user error: str | None # To capture potential errors

2. Pydantic model: We have defined a pydantic model here which will ensure a structured LLM output. It contains a sentiment which will have three values, “positive”, “negative” and “neutral” and a department name which will be predicted by the LLM.

class ClassificationResult(BaseModel):

"""Structured output for query classification."""

sentiment: str = Field(description="Sentiment of the query (positive, neutral, negative)")

department: str = Field(description=f"Most relevant department from the list: {DEPARTMENTS [UNKNOWN_DEPARTMENT]}. Use '{UNKNOWN_DEPARTMENT}' if unsure or not applicable.")3. Nodes: The following are the node functions which will handle each task one by one.

- Classify_query_node: It classifies the incoming query into the sentiment as well as the target department name based on the nature of the query.

- retrieve_context_node: It performs the RAG over the vector database and filter the results on the basis of department name.

- generate_response_node: It generates the final response based on the query and retrieved context from the database.

- Human_escalation_node: If the sentiment is negative or the target department is unknown, it will escalate the query to the human user.

- route_query: It determines the next step based on the query and output of the classification node.

# 3. Nodes

def classify_query_node(state: AgentState) -> Dict[str, str]:

"""

Classifies the user query for sentiment and target department using an LLM.

"""

print("--- Classifying Query ---")

query = state["query"]

llm = ChatOpenAI(model="o4-mini", api_key=OPENAI_API_KEY) # Use a reliable, cheaper model

# Prepare prompt for classification

prompt_template = ChatPromptTemplate.from_messages([

SystemMessage(

content=f"""You are an expert query classifier for ShopUNow, a retail company.

Analyze the user's query to determine its sentiment and the most relevant department.

The available departments are: {', '.join(DEPARTMENTS)}.

If the query doesn't clearly fit into one of these, or is ambiguous, classify the department as '{UNKNOWN_DEPARTMENT}'.

If the query expresses frustration, anger, dissatisfaction, or complains about a problem, classify sentiment as 'negative'.

If the query is asking a question, seeking information, or making a neutral statement, classify sentiment as 'neutral'.

If the query expresses satisfaction, praise, or positive feedback, classify sentiment as 'positive'.

Respond ONLY with the structured JSON output format."""

),

HumanMessage(content=f"User Query: {query}")

])

# LLM Chain with structured output

classifier_chain = prompt_template | llm.with_structured_output(ClassificationResult)

try:

result: ClassificationResult = classifier_chain.invoke({}) # Pass empty dict as input seems required now

print(f" Classification Result: Sentiment='{result.sentiment}', Department='{result.department}'")

return {

"sentiment": result.sentiment.lower(), # Normalize

"department": result.department

}

except Exception as e:

print(f" Error during classification: {e}")

return {

"sentiment": "neutral", # Default on error

"department": UNKNOWN_DEPARTMENT,

"error": f"Classification failed: {e}"

}

def retrieve_context_node(state: AgentState) -> Dict[str, str]:

"""

Retrieves relevant context from the vector store based on the query and department.

"""

print("--- Retrieving Context ---")

query = state["query"]

department = state["department"]

if not department or department == UNKNOWN_DEPARTMENT:

print(" Skipping retrieval: Department unknown or not applicable.")

return {"context": "", "error": "Cannot retrieve context without a valid department."}

# Initialize embedding model and vector store access

embedding_model = OpenAIEmbeddings(api_key=OPENAI_API_KEY)

vector_store = Chroma(

collection_name=CHROMA_COLLECTION_NAME,

embedding_function=embedding_model,

persist_directory=CHROMA_PERSIST_DIRECTORY,

)

retriever = vector_store.as_retriever(

search_type="similarity",

search_kwargs={

'k': 3, # Retrieve top 3 relevant docs

'filter': {'department': department} # *** CRITICAL: Filter by department ***

}

)

try:

retrieved_docs = retriever.invoke(query)

if retrieved_docs:

context = "\n\n---\n\n".join([doc.page_content for doc in retrieved_docs])

print(f" Retrieved {len(retrieved_docs)} documents for department '{department}'.")

# print(f" Context Snippet: {context[:200]}...") # Optional: log snippet

return {"context": context, "error": None}

else:

print(" No relevant documents found in vector store for this department.")

return {"context": "", "error": "No relevant context found."}

except Exception as e:

print(f" Error during context retrieval: {e}")

return {"context": "", "error": f"Retrieval failed: {e}"}

def generate_response_node(state: AgentState) -> Dict[str, str]:

"""

Generates a response using RAG based on the query and retrieved context.

"""

print("--- Generating Response (RAG) ---")

query = state["query"]

context = state["context"]

llm = ChatOpenAI(model="o4-mini", api_key=OPENAI_API_KEY) # Can use a more capable model for generation

if not context:

print(" No context provided, generating generic response.")

# Fallback if retrieval failed but routing decided RAG path anyway

response_text = "I couldn't find specific information related to your query in our knowledge base. Could you please rephrase or provide more details?"

return {"response": response_text}

# RAG Prompt

prompt_template = ChatPromptTemplate.from_messages([

SystemMessage(

content=f"""You are a helpful AI Chatbot for ShopUNow. Answer the user's query based *only* on the provided context.

Be concise and directly address the query. If the context doesn't contain the answer, state that clearly.

Do not make up information.

Context:

---

{context}

---"""

),

HumanMessage(content=f"User Query: {query}")

])

RAG_chain = prompt_template | llm

try:

response = RAG_chain.invoke({})

response_text = response.content

print(f" Generated RAG Response: {response_text[:200]}...")

return {"response": response_text}

except Exception as e:

print(f" Error during response generation: {e}")

return {"response": "Sorry, I encountered an error while generating the response.", "error": f"Generation failed: {e}"}

def human_escalation_node(state: AgentState) -> Dict[str, str]:

"""

Provides a message indicating the query will be escalated to a human.

"""

print("--- Escalating to Human Support ---")

reason = ""

if state.get("sentiment") == "negative":

reason = "Due to the nature of your query,"

elif state.get("department") == UNKNOWN_DEPARTMENT:

reason = "As your query requires specific attention,"

response_text = f"{reason} I need to escalate this to our human support team. They will review your request and get back to you shortly. Thank you for your patience."

print(f" Escalation Message: {response_text}")

return {"response": response_text}

# 4. Conditional Routing Logic

def route_query(state: AgentState) -> str:

"""Determines the next step based on classification results."""

print("--- Routing Decision ---")

sentiment = state.get("sentiment", "neutral")

department = state.get("department", UNKNOWN_DEPARTMENT)

if sentiment == "negative" or department == UNKNOWN_DEPARTMENT:

print(f" Routing to: human_escalation (Sentiment: {sentiment}, Department: {department})")

return "human_escalation"

else:

print(f" Routing to: retrieve_context (Sentiment: {sentiment}, Department: {department})")

return "retrieve_context"Step 8: Define the Graph Function

Let’s build the function for the graph and assign the nodes and edges to the graph.

# --- Graph Definition ---

def build_agent_graph(vector_store: Chroma) -> StateGraph:

"""Builds the LangGraph agent."""

graph = StateGraph(AgentState)

# Add nodes

graph.add_node("classify_query", classify_query_node)

graph.add_node("retrieve_context", retrieve_context_node)

graph.add_node("generate_response", generate_response_node)

graph.add_node("human_escalation", human_escalation_node)

# Set entry point

graph.set_entry_point("classify_query")

# Add edges

graph.add_conditional_edges(

"classify_query", # Source node

route_query, # Function to determine the route

{ # Mapping: output of route_query -> destination node

"retrieve_context": "retrieve_context",

"human_escalation": "human_escalation"

}

)

graph.add_edge("retrieve_context", "generate_response")

graph.add_edge("generate_response", END)

graph.add_edge("human_escalation", END)

# Compile the graph

# memory = SqliteSaver.from_conn_string(":memory:") # Example for in-memory persistence

app = graph.compile() # checkpointer=memory optional for stateful conversations

print("\nAgent graph compiled successfully.")

return appStep 9: Initiate Agent Execution

Now, we will be initialising the agent and begin executing the workflow.

1. Let’s start by loading the FAQs.

# 1. Load FAQs

faqs_data = load_faqs(FAQ_FILES)

if not faqs_data:

print("ERROR: No FAQ data loaded. Exiting.")

exit()Output:

2. Set up the embedding models. Here, we’ll be setting up OpenAI embedding models for a faster retrieval.

# 2. Setup Vector Store embedding_model = OpenAIEmbeddings(api_key=OPENAI_API_KEY) vector_store = setup_chroma_vector_store( faqs_data, CHROMA_PERSIST_DIRECTORY, CHROMA_COLLECTION_NAME, embedding_model )

Output:

Also Read: How to Choose the Right Embedding for Your RAG Model?

3. Now, build the agent using the predefined function, visualizing the agent flow using the mermaid diagram.

# 3. Build the Agent Graph agent_app = build_agent_graph(vector_store) from IPython.display import display, Image, Markdown display(Image(agent_app.get_graph().draw_mermaid_png()))

Output:

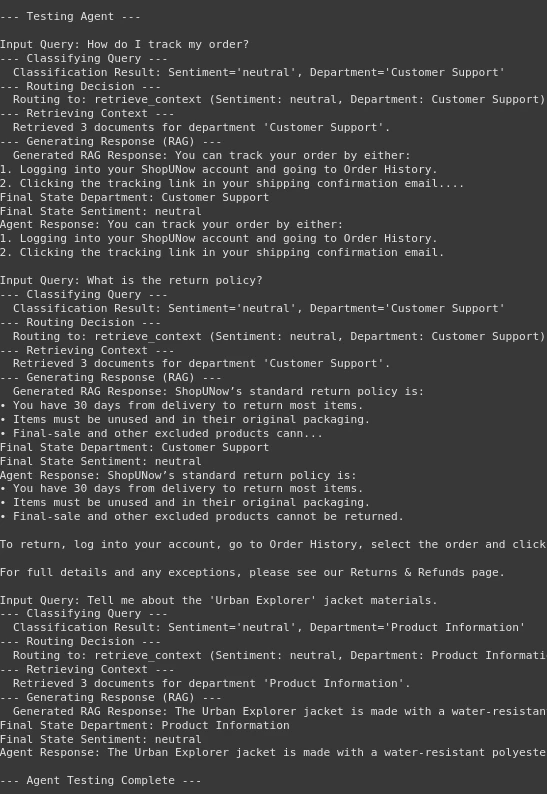

Step 10: Testing the Agent

We have arrived at the last part of our workflow. So far we have built several nodes and functions. Now is the time to test our agent and see the output.

1. First let’s define the test queries.

# Test the Agent test_queries = [ "How do I track my order?", "What is the return policy?", "Tell me about the 'Urban Explorer' jacket materials.", ]

2. Now let’s test the agent.

print("\n--- Testing Agent ---")

for query in test_queries:

print(f"\nInput Query: {query}")

# Define the input for the graph invocation

inputs = {"query": query}

# try:

# Invoke the graph

# The config argument is optional but useful for stateful execution if needed

# config = {"configurable": {"thread_id": "user_123"}} # Example config

final_state = agent_app.invoke(inputs) #, config=config)

print(f"Final State Department: {final_state.get('department')}")

print(f"Final State Sentiment: {final_state.get('sentiment')}")

print(f"Agent Response: {final_state.get('response')}")

if final_state.get('error'):

print(f"Error encountered: {final_state.get('error')}")

# except Exception as e:

# print(f"ERROR running agent graph for query '{query}': {e}")

# import traceback

# traceback.print_exc() # Print detailed traceback for debugging

print("\n--- Agent Testing Complete ---")print(“\n— Testing Agent —“)

Output:

We can see in the output that our agent is performing well. Firstly, it classifies the query and then routes the decision to the retrieval node or the human node. Then, the retrieval part comes it successfully retrieves the context from the vector database. In the last, generating the response as needed. Hence, we have made our intelligent FAQ Chatbot.

You can access the Colab Notebook with all the code here.

Conclusion

If you have reached this far, it means you have learned how to build an intelligent FAQ chatbot using agentic RAG and LangGraph. Here, we saw that building an intelligent agent which can reason and make a decision, is not that hard. The agentic chatbot that we built is cost efficient, fast, and is capable of fully understanding the context of the questions or input queries. The architecture we’ve used here is fully customizable which means one can edit any node of the agent for their particular use case. With agentic RAG, LangGraph, and ChromaDB, making agents has never been this easy. never so easy before. I’m sure what we have covered in this guide has given you the foundational knowledge to build more complex system using these tools.

The above is the detailed content of How to Build an Intelligent FAQ Chatbot Using Agentic RAG. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1665

1665

14

14

1423

1423

52

52

1321

1321

25

25

1269

1269

29

29

1249

1249

24

24

10 Generative AI Coding Extensions in VS Code You Must Explore

Apr 13, 2025 am 01:14 AM

10 Generative AI Coding Extensions in VS Code You Must Explore

Apr 13, 2025 am 01:14 AM

Hey there, Coding ninja! What coding-related tasks do you have planned for the day? Before you dive further into this blog, I want you to think about all your coding-related woes—better list those down. Done? – Let’

GPT-4o vs OpenAI o1: Is the New OpenAI Model Worth the Hype?

Apr 13, 2025 am 10:18 AM

GPT-4o vs OpenAI o1: Is the New OpenAI Model Worth the Hype?

Apr 13, 2025 am 10:18 AM

Introduction OpenAI has released its new model based on the much-anticipated “strawberry” architecture. This innovative model, known as o1, enhances reasoning capabilities, allowing it to think through problems mor

A Comprehensive Guide to Vision Language Models (VLMs)

Apr 12, 2025 am 11:58 AM

A Comprehensive Guide to Vision Language Models (VLMs)

Apr 12, 2025 am 11:58 AM

Introduction Imagine walking through an art gallery, surrounded by vivid paintings and sculptures. Now, what if you could ask each piece a question and get a meaningful answer? You might ask, “What story are you telling?

Pixtral-12B: Mistral AI's First Multimodal Model - Analytics Vidhya

Apr 13, 2025 am 11:20 AM

Pixtral-12B: Mistral AI's First Multimodal Model - Analytics Vidhya

Apr 13, 2025 am 11:20 AM

Introduction Mistral has released its very first multimodal model, namely the Pixtral-12B-2409. This model is built upon Mistral’s 12 Billion parameter, Nemo 12B. What sets this model apart? It can now take both images and tex

How to Add a Column in SQL? - Analytics Vidhya

Apr 17, 2025 am 11:43 AM

How to Add a Column in SQL? - Analytics Vidhya

Apr 17, 2025 am 11:43 AM

SQL's ALTER TABLE Statement: Dynamically Adding Columns to Your Database In data management, SQL's adaptability is crucial. Need to adjust your database structure on the fly? The ALTER TABLE statement is your solution. This guide details adding colu

Beyond The Llama Drama: 4 New Benchmarks For Large Language Models

Apr 14, 2025 am 11:09 AM

Beyond The Llama Drama: 4 New Benchmarks For Large Language Models

Apr 14, 2025 am 11:09 AM

Troubled Benchmarks: A Llama Case Study In early April 2025, Meta unveiled its Llama 4 suite of models, boasting impressive performance metrics that positioned them favorably against competitors like GPT-4o and Claude 3.5 Sonnet. Central to the launc

How to Build MultiModal AI Agents Using Agno Framework?

Apr 23, 2025 am 11:30 AM

How to Build MultiModal AI Agents Using Agno Framework?

Apr 23, 2025 am 11:30 AM

While working on Agentic AI, developers often find themselves navigating the trade-offs between speed, flexibility, and resource efficiency. I have been exploring the Agentic AI framework and came across Agno (earlier it was Phi-

How ADHD Games, Health Tools & AI Chatbots Are Transforming Global Health

Apr 14, 2025 am 11:27 AM

How ADHD Games, Health Tools & AI Chatbots Are Transforming Global Health

Apr 14, 2025 am 11:27 AM

Can a video game ease anxiety, build focus, or support a child with ADHD? As healthcare challenges surge globally — especially among youth — innovators are turning to an unlikely tool: video games. Now one of the world’s largest entertainment indus