Meta AI App: Now Powered by the Capabilities of Llama 4

Meta has been a major player in the AI race since it released the first Llama models, and its latest release, Llama 4, strengthens that position. From smarter conversations to creating videos, sketching ideas, pulling live research, and even remembering your preferences, Llama 4 is the brain making it all happen. In this article, we’ll walk you through the features offered by the latest iteration of the Meta AI web app. Since Llama 4 has replaced Llama 3 as the model powering Meta AI, we’ll begin with a quick overview of Llama 4.

Table of Contents

- What is Llama 4?

- Why the Transition?

- Things to Do with Llama 4 on Meta AI Web App

- Canvas

- Talk

- New Video

- Connected Apps

- Memory

- Reasoning Mode

- Research

- Search

- Applications of Meta AI

- Conclusion

- Frequently Asked Questions

What is Llama 4?

Llama 4 is Meta’s latest family of AI models, building on everything the company learned from Llama 3. It’s smarter, faster, and more flexible, and it uses a mixture-of-experts (MoE) architecture under the hood: instead of running the whole network for every input, it routes each token to a small subset of specialized sub-networks (“experts”). It works with both text and images, handles very large context windows (up to 10 million tokens on the smaller Llama 4 Scout model), and was pre-trained on data spanning 200 languages. Meta’s AI experiences, from WhatsApp to the new web app, are powered by Llama 4.
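To make the mixture-of-experts idea concrete, here is a minimal toy sketch of top-k expert routing in plain Python. It is an illustration of the general technique only, not Llama 4’s actual router: the experts, the fixed router scores, and the function names (`route_top_k`, `moe_layer`, `toy_router`) are all hypothetical.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(router_scores, k=2):
    """Pick the k highest-scoring experts and normalize their weights."""
    ranked = sorted(range(len(router_scores)),
                    key=lambda i: router_scores[i], reverse=True)
    top = ranked[:k]
    weights = softmax([router_scores[i] for i in top])
    return list(zip(top, weights))

def moe_layer(x, experts, router, k=2):
    """Run only the selected experts and blend their outputs."""
    chosen = route_top_k(router(x), k)
    return sum(weight * experts[i](x) for i, weight in chosen)

# Hypothetical experts: in a real model these are neural sub-networks.
experts = [
    lambda x: x + 1,
    lambda x: x * 2,
    lambda x: x ** 2,
    lambda x: -x,
]

def toy_router(x):
    # Assumption: fixed scores for illustration; a real router is learned
    # and produces different scores for each input token.
    return [0.1, 2.0, 1.0, -1.0]

if __name__ == "__main__":
    print(route_top_k(toy_router(3.0), k=2))
    print(moe_layer(3.0, experts, toy_router, k=2))
```

Only two of the four experts run for this input, which is the point of the design: the model can hold many parameters in total while spending compute on just a fraction of them per token.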


Why the Transition?

Meta is transitioning from Llama 3 to Llama 4 to significantly enhance multimodal capabilities, improve context handling, and address political bias. Llama 4 features a much larger context window, advanced multimodal processing, and a new architecture for handling long context lengths. Meta also reports that it refuses fewer prompts on politically charged topics and responds in a more balanced way on controversial issues.

Here’s a more detailed look at the key reasons for the transition:

- Enhanced Multimodality: Llama 4 is designed to handle both text and images, something Llama 3.1 lacked.

- Increased Context Length: The 10 million token context window of Llama 4 Scout is a massive leap from the 128K tokens of Llama 3.1, enabling the model to process much larger documents and maintain coherence across long spans of text.

- Improved Reasoning and Conversation: Llama 4’s post-training pipeline has been significantly revamped to improve reasoning, conversational abilities, and responsible behavior.

- Reduced Political Bias: Meta has stated that Llama 4 is more balanced in its responses on political and social topics, showing a significant reduction in bias compared to Llama 3.

- Open Source Approach: Llama 4 continues Meta’s commitment to open-weight AI, making the model accessible to a wider community.

- Convergence and Collaboration: The release of Llama 4 emphasizes the importance of open science and collaboration in AI development, encouraging cross-disciplinary research and development.

In essence, the transition to Llama 4 in the Meta AI web app marks a significant leap forward in Meta’s AI efforts. It pushes the boundaries of multimodal AI, improves context handling, and addresses critical safety and ethical concerns.
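To get a feel for what a 10 million token context window means in practice, the back-of-the-envelope sketch below converts tokens into approximate words and pages. The conversion factors are assumptions (about 0.75 English words per token and 500 words per page are common rules of thumb, not figures from Meta), and real ratios vary with the tokenizer and the language.

```python
# Assumptions for illustration only; actual ratios depend on tokenizer and text.
WORDS_PER_TOKEN = 0.75
WORDS_PER_PAGE = 500

def tokens_to_words(tokens):
    """Approximate word count for a given number of tokens."""
    return tokens * WORDS_PER_TOKEN

def tokens_to_pages(tokens):
    """Approximate page count for a given number of tokens."""
    return tokens_to_words(tokens) / WORDS_PER_PAGE

def fits_in_context(document_tokens, context_window):
    """Check whether a document fits in the window in a single pass."""
    return document_tokens <= context_window

if __name__ == "__main__":
    window = 10_000_000
    print(f"~{tokens_to_words(window):,.0f} words")
    print(f"~{tokens_to_pages(window):,.0f} pages")
    print(fits_in_context(2_000_000, window))
```

Under these assumptions, the window holds on the order of several million words, which is why whole codebases or document collections can be passed in at once.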


Things to Do with Llama 4 on Meta AI Web App

Now let’s get to the meat of the topic. Meta AI can now do a lot more than just answer questions. It can sketch, talk, generate videos, and even run errands by connecting to external apps. Here are 8 new features on Meta AI that help it stand out from its peers:

1. Canvas

Canvas lets you sketch diagrams, mind maps, and workflows, and Meta AI understands them. It’s an open playground where you and the AI co-create by mixing visuals, notes, and ideas on one big infinite canvas.

2. Talk

Talk mode adds voice to the mix. Speak your queries instead of typing them, and hear Meta AI reply in a voice you choose, including some celebrity voices Meta partnered with. This mode is perfect for when your hands are busy or you just want that feeling of chatting with a super-smart buddy.

3. New Video

With the New Video tool, you can either upload or record a video and ask Meta AI to “reimagine” it, changing the style, mood, or even the content. Or you can start from scratch and generate short AI videos straight from a text prompt. It’s early days, but the creative doors this opens are considerable.

4. Connected Apps

You can now link your favorite apps, such as music, calendar, and shopping apps, directly to Meta AI through Connected Apps. Want to book dinner, play a playlist, or check your schedule? No problem. You talk to Meta AI, and it handles the work for you.

5. Memory

Memory is where Meta AI differs from its contemporaries. It remembers your preferences, interests, and even details you casually mention, such as your favorite food or the fact that you’re studying for a big exam. That means smarter, more personal replies that feel like they’re coming from someone who knows you, not a stranger.

6. Reasoning Mode

Meta AI now has a “Reasoning” mode, built with a special version of Llama 4 tuned for structured problem-solving. You can toggle it on when you want the assistant to break things down step by step, whether you’re solving math problems, planning a trip, or cracking a tricky puzzle. It’s similar to the reasoning modes offered by contemporaries such as ChatGPT and DeepSeek.

7. Research

Research mode turns Meta AI into a real-time research assistant. Instead of relying only on what it already knows, it goes out, searches the web (powered by Bing), reads up, and brings back detailed, sourced info. Whether it’s news, niche topics, or academic content, it acts like your personal librarian on speed dial.

8. Search

Search is built for when you just want one straight answer, right now. No essays, just the essentials. Ask anything, whether it’s the capital of a country, a definition, or a current event, and Meta AI grabs the latest info from the web and returns it clean and fast.


Applications of Meta AI

Thanks to all of its new features and Llama 4-powered upgrades, here are some applications of Meta AI:

- Customer Support: Meta AI is used in virtual agents across platforms like Facebook and WhatsApp, allowing efficient handling of queries.

- Content Personalization: It creates feeds and recommendations on Facebook and Instagram by analyzing user preferences.

- Language Translation: It automatically translates comments, messages, and posts as they appear, so language doesn’t become a barrier.

- Augmented Reality: It powers real-time facial tracking and effects in Instagram and Facebook filters.

- Content Moderation: It helps identify and remove harmful or misleading content, improving user safety.

- Automatic Captioning: It is used to generate captions for videos, enhancing accessibility and engagement.

- Research and Development: It contributes to projects in healthcare, robotics, and large-scale data analysis, which helps in scientific advancements.

Conclusion

Llama 4 is Meta’s way of making AI a more personalized experience. As more features roll out and mature, Meta AI is shaping up to be an all-round digital life assistant, with Llama 4 spearheading the process. With breakthroughs continuing in AI, the technology has far surpassed its original use case. Incremental improvements in chatbots keep blurring the line between human and machine interaction. We are stepping ever closer to Artificial General Intelligence (AGI), where interacting with machines would be almost seamless.

Frequently Asked Questions

Q1. What exactly is Llama 4, and how is it different from Llama 3?
A. Llama 4 is Meta’s latest AI model, which supports images, has longer context, and better reasoning. It’s faster and smarter than Llama 3.

Q2. What can I actually do with Llama 4 on the Meta AI web app?
A. You can sketch ideas, research in real-time, talk to it, generate videos, and even link your apps for tasks.

Q3. How does this ‘memory’ thing work, and should I be concerned about my privacy?
A. It remembers your preferences for better replies. You can view and delete what it remembers at any time.

Q4. Is Llama 4 open-source like the older models? Can I run it myself?
A. Yes, it’s open-weight and available for developers to run locally or integrate into apps.

Q5. How good is Llama 4 at giving current, real-world info?
A. It uses Bing to pull fresh web results in Research and Search modes, so it stays up-to-date.

The above is the detailed content of Meta AI App: Now Powered by the Capabilities of Llama 4. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1673

1673

14

14

1429

1429

52

52

1333

1333

25

25

1278

1278

29

29

1257

1257

24

24

How to Build MultiModal AI Agents Using Agno Framework?

Apr 23, 2025 am 11:30 AM

How to Build MultiModal AI Agents Using Agno Framework?

Apr 23, 2025 am 11:30 AM

While working on Agentic AI, developers often find themselves navigating the trade-offs between speed, flexibility, and resource efficiency. I have been exploring the Agentic AI framework and came across Agno (earlier it was Phi-

OpenAI Shifts Focus With GPT-4.1, Prioritizes Coding And Cost Efficiency

Apr 16, 2025 am 11:37 AM

OpenAI Shifts Focus With GPT-4.1, Prioritizes Coding And Cost Efficiency

Apr 16, 2025 am 11:37 AM

The release includes three distinct models, GPT-4.1, GPT-4.1 mini and GPT-4.1 nano, signaling a move toward task-specific optimizations within the large language model landscape. These models are not immediately replacing user-facing interfaces like

How to Add a Column in SQL? - Analytics Vidhya

Apr 17, 2025 am 11:43 AM

How to Add a Column in SQL? - Analytics Vidhya

Apr 17, 2025 am 11:43 AM

SQL's ALTER TABLE Statement: Dynamically Adding Columns to Your Database In data management, SQL's adaptability is crucial. Need to adjust your database structure on the fly? The ALTER TABLE statement is your solution. This guide details adding colu

New Short Course on Embedding Models by Andrew Ng

Apr 15, 2025 am 11:32 AM

New Short Course on Embedding Models by Andrew Ng

Apr 15, 2025 am 11:32 AM

Unlock the Power of Embedding Models: A Deep Dive into Andrew Ng's New Course Imagine a future where machines understand and respond to your questions with perfect accuracy. This isn't science fiction; thanks to advancements in AI, it's becoming a r

Rocket Launch Simulation and Analysis using RocketPy - Analytics Vidhya

Apr 19, 2025 am 11:12 AM

Rocket Launch Simulation and Analysis using RocketPy - Analytics Vidhya

Apr 19, 2025 am 11:12 AM

Simulate Rocket Launches with RocketPy: A Comprehensive Guide This article guides you through simulating high-power rocket launches using RocketPy, a powerful Python library. We'll cover everything from defining rocket components to analyzing simula

Google Unveils The Most Comprehensive Agent Strategy At Cloud Next 2025

Apr 15, 2025 am 11:14 AM

Google Unveils The Most Comprehensive Agent Strategy At Cloud Next 2025

Apr 15, 2025 am 11:14 AM

Gemini as the Foundation of Google’s AI Strategy Gemini is the cornerstone of Google’s AI agent strategy, leveraging its advanced multimodal capabilities to process and generate responses across text, images, audio, video and code. Developed by DeepM

Open Source Humanoid Robots That You Can 3D Print Yourself: Hugging Face Buys Pollen Robotics

Apr 15, 2025 am 11:25 AM

Open Source Humanoid Robots That You Can 3D Print Yourself: Hugging Face Buys Pollen Robotics

Apr 15, 2025 am 11:25 AM

“Super happy to announce that we are acquiring Pollen Robotics to bring open-source robots to the world,” Hugging Face said on X. “Since Remi Cadene joined us from Tesla, we’ve become the most widely used software platform for open robotics thanks to

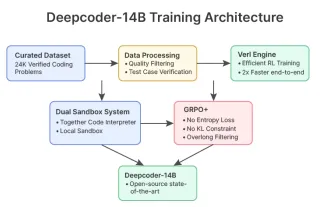

DeepCoder-14B: The Open-source Competition to o3-mini and o1

Apr 26, 2025 am 09:07 AM

DeepCoder-14B: The Open-source Competition to o3-mini and o1

Apr 26, 2025 am 09:07 AM

In a significant development for the AI community, Agentica and Together AI have released an open-source AI coding model named DeepCoder-14B. Offering code generation capabilities on par with closed-source competitors like OpenAI