How are arrays used in data analysis with Python?

Arrays in Python, particularly through NumPy and Pandas, are essential for data analysis, offering speed and efficiency. 1) NumPy arrays enable efficient handling of large datasets and complex operations like moving averages. 2) Pandas extends NumPy's capabilities with DataFrames for structured data analysis. 3) Arrays support vectorized operations, enhancing code readability and performance. 4) Reshaping and broadcasting further optimize data manipulation tasks.

Arrays in Python, particularly through the NumPy library, are a powerhouse for data analysis. They allow us to efficiently handle large datasets, perform complex mathematical operations, and streamline our data processing workflows. Let's dive into how arrays are used in data analysis with Python, sharing some personal insights and practical examples along the way.

When I first started working with data in Python, I quickly realized that the built-in lists were not always the most efficient for handling large datasets. That's where NumPy arrays came into play. They're not just faster; they open up a world of possibilities for data manipulation and analysis.

NumPy arrays are homogeneous, multi-dimensional containers that can represent vectors, matrices, and higher-dimensional data. Because every element shares one data type and is stored in a contiguous block of memory, they're optimized for numerical operations, which is crucial in data analysis. For instance, if you're dealing with time series data, you can perform operations like moving averages or Fourier transforms on entire datasets with just a few lines of code.
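As a rough illustration of the moving-average idea, here is a minimal sketch that uses `np.convolve` with a uniform kernel. The readings and the window size of 3 are made up for the example, and convolution is only one of several ways to compute a moving average.

```python
import numpy as np

# Hypothetical daily readings; the values are invented for illustration.
series = np.array([10.0, 12.0, 11.0, 13.0, 15.0, 14.0, 16.0])

window = 3  # assumed window size
kernel = np.ones(window) / window  # equal weight for each point in the window

# mode="valid" keeps only the positions where the window fully overlaps
# the data, so the result has len(series) - window + 1 entries.
moving_avg = np.convolve(series, kernel, mode="valid")

print(moving_avg)
```

Each output value is the mean of three consecutive readings, so the result is two elements shorter than the input.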

Here's a simple example to illustrate how you might use a NumPy array for basic data analysis:

import numpy as np

# Create a sample dataset
data = np.array([1, 2, 3, 4, 5, 6, 7, 8, 9, 10])

# Calculate the mean
mean = np.mean(data)
print(f"Mean: {mean}")

# Calculate the standard deviation
std_dev = np.std(data)
print(f"Standard Deviation: {std_dev}")

This code snippet demonstrates how effortlessly you can perform statistical operations on a dataset using NumPy arrays. The beauty of this approach is that it scales well to larger datasets, something I've found invaluable in my own projects.

One of the things I love about using arrays in data analysis is the ability to perform vectorized operations. Instead of looping through each element, you can apply operations to the entire array at once. This not only speeds up your code but also makes it more readable and less prone to errors. For example, if you want to normalize your data, you can do it like this:

# Normalize the data
normalized_data = (data - np.mean(data)) / np.std(data)
print("Normalized Data:", normalized_data)

This approach is not only efficient but also elegant. However, it's worth noting that while NumPy arrays are incredibly powerful, they do have their limitations. For instance, they're not as flexible as Python lists when it comes to storing different data types. If you're working with mixed data, you might need to consider other data structures or libraries like Pandas.
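To make the data-type limitation concrete, the following sketch shows what NumPy does when a list mixes types. The sample values are invented, and the checks rely on `dtype.kind` rather than an exact dtype name because the precise integer and string widths can vary by platform and NumPy version.

```python
import numpy as np

# A homogeneous integer list keeps an integer dtype.
numbers = np.array([1, 2, 3])
print(numbers.dtype.kind)   # 'i' means a signed integer dtype

# Mixing integers and floats upcasts every element to float.
mixed_numeric = np.array([1, 2.5, 3])
print(mixed_numeric.dtype)  # float64

# Mixing numbers and strings coerces every element to a string,
# so numeric operations on this array are no longer meaningful.
mixed = np.array([1, 2.5, "a"])
print(mixed.dtype.kind)     # 'U' means a Unicode string dtype
print(mixed)
```

Because the whole array shares one dtype, the numbers in `mixed` are silently stored as text, which is usually not what you want for analysis.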

Speaking of Pandas, it's built on top of NumPy and extends its capabilities by providing data structures like DataFrames, which are essentially two-dimensional labeled data structures with columns of potentially different types. This makes Pandas particularly useful for handling structured data, like CSV files or SQL tables, which are common in data analysis.

Here's how you might use a Pandas DataFrame to analyze data:

import pandas as pd

# Create a sample DataFrame
df = pd.DataFrame({
    'A': [1, 2, 3, 4, 5],
    'B': [5, 4, 3, 2, 1],
    'C': ['a', 'b', 'c', 'd', 'e']
})

# Calculate the mean of column 'A'
mean_A = df['A'].mean()
print(f"Mean of column A: {mean_A}")

# Group by column 'C' and calculate the sum of 'B'
grouped = df.groupby('C')['B'].sum()
print("Sum of 'B' grouped by 'C':", grouped)

Pandas, with its reliance on NumPy arrays under the hood, allows for powerful data manipulation and analysis. It's particularly useful when you need to perform operations across different columns or when dealing with time series data.
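The two use cases just mentioned, operations across columns and time series, can be sketched as follows. The `sales` table, its column names, and the 3-day window are all hypothetical and chosen only for illustration.

```python
import pandas as pd

# Hypothetical daily sales figures; the numbers are invented.
sales = pd.DataFrame(
    {
        "units": [3, 5, 4, 6, 8],
        "price": [2.0, 2.0, 2.5, 2.5, 3.0],
    },
    index=pd.date_range("2024-01-01", periods=5, freq="D"),
)

# Arithmetic across columns is vectorized, just as it is in NumPy.
sales["revenue"] = sales["units"] * sales["price"]

# A 3-day rolling mean; the first two rows are NaN because the
# window does not yet contain three observations.
sales["revenue_3d_avg"] = sales["revenue"].rolling(window=3).mean()

# to_numpy() exposes a column's values as a NumPy array.
print(type(sales["revenue"].to_numpy()))
print(sales)
```

The date index is what lets Pandas treat the rows as a time series, while the column arithmetic works without any explicit loop.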

In my experience, one of the challenges with using arrays in data analysis is ensuring that your data is in the right format. Sometimes, you'll need to reshape or transform your data to fit the operations you want to perform. NumPy provides functions like reshape and transpose that can be incredibly useful in these situations.

For example, if you're working with image data, you might need to flatten a 2D image into a 1D array before passing it to an algorithm that expects a flat vector:

# Create a 2D array representing an image
image = np.random.rand(100, 100)

# Reshape the image to a 1D array
flattened_image = image.reshape(-1)
print("Shape of flattened image:", flattened_image.shape)

This kind of operation is common in machine learning and image processing, where you often need to manipulate the shape of your data to fit the requirements of different algorithms.
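Reshaping and transposing are just as useful for tabular numbers as for images. The sketch below uses twelve made-up monthly readings and assumes they should be grouped into quarters; both the data and the grouping are illustrative only.

```python
import numpy as np

# Twelve monthly readings (made-up values) stored as a flat array.
monthly = np.arange(1, 13)

# View them as 4 quarters x 3 months; -1 lets NumPy infer the row count.
quarters = monthly.reshape(-1, 3)
print(quarters.shape)        # (4, 3)

# Transposing swaps the axes: 3 month positions x 4 quarters.
by_position = quarters.T
print(by_position.shape)     # (3, 4)

# Reductions along an axis then answer different questions.
quarter_totals = quarters.sum(axis=1)    # one total per quarter
position_means = quarters.mean(axis=0)   # mean of each month position
print(quarter_totals)
print(position_means)
```

Once the data has the right shape, the `axis` argument decides whether a reduction runs across rows or down columns.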

Another aspect to consider is performance optimization. While NumPy arrays are generally fast, there are ways to further optimize your code. For instance, using NumPy's built-in functions like np.sum or np.mean is usually faster than writing your own loops. Additionally, understanding how to use broadcasting effectively can lead to significant performance gains.
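To check the claim about built-in functions on your own machine, a small timing sketch like the one below can help. The helper `loop_sum` and the array size are assumptions made for the example, and the actual timings will vary with hardware and NumPy version, so no expected numbers are shown.

```python
import timeit

import numpy as np

values = np.arange(100_000, dtype=np.float64)

def loop_sum(arr):
    """Sum the elements one at a time with a plain Python loop."""
    total = 0.0
    for x in arr:
        total += x
    return total

# Both approaches should agree on the result.
assert np.isclose(loop_sum(values), np.sum(values))

# Time five runs of each approach.
loop_time = timeit.timeit(lambda: loop_sum(values), number=5)
numpy_time = timeit.timeit(lambda: np.sum(values), number=5)

print(f"Python loop: {loop_time:.4f} s")
print(f"np.sum:      {numpy_time:.4f} s")
```

On typical setups the built-in call is much faster, because the loop runs in compiled code rather than in the Python interpreter.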

Here's an example of using broadcasting to perform element-wise operations:

# Create two arrays
a = np.array([1, 2, 3])
b = np.array([4, 5, 6])

# Perform element-wise addition (the shapes already match)
result = a + b
print("Result of element-wise addition:", result)

# Broadcasting: the scalar 10 is stretched across every element of a
shifted = a + 10
print("Result of adding a scalar:", shifted)

Broadcasting allows you to perform operations on arrays of different shapes, which can be a powerful tool in data analysis.
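Broadcasting becomes more interesting when both operands are arrays with different shapes. The following sketch centers each column of a small table and then combines a column vector with a row vector; the values are invented for illustration.

```python
import numpy as np

# A 4x3 table: 4 observations of 3 features (made-up values).
table = np.array([
    [1.0, 10.0, 100.0],
    [2.0, 20.0, 200.0],
    [3.0, 30.0, 300.0],
    [4.0, 40.0, 400.0],
])

# Column means have shape (3,); subtracting them from the (4, 3) table
# broadcasts the means across every row.
col_means = table.mean(axis=0)
centered = table - col_means
print(centered.mean(axis=0))   # each column now averages to zero

# A (3, 1) column plus a (4,) row broadcasts to a (3, 4) grid.
col = np.array([[0], [10], [20]])
row = np.array([1, 2, 3, 4])
grid = col + row
print(grid.shape)              # (3, 4)
```

NumPy compares shapes from the trailing dimension backwards, and two dimensions are compatible when they are equal or one of them is 1.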

In conclusion, arrays in Python, particularly through NumPy and Pandas, are essential tools for data analysis. They offer speed, efficiency, and a wide range of operations that can transform how you work with data. From simple statistical calculations to complex data manipulations, arrays are at the heart of many data analysis tasks. As you delve deeper into data analysis, you'll find that mastering arrays and their associated libraries will significantly enhance your ability to extract insights from your data.


Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

Read CSV files and perform data analysis using pandas

Jan 09, 2024 am 09:26 AM

Read CSV files and perform data analysis using pandas

Jan 09, 2024 am 09:26 AM

Pandas is a powerful data analysis tool that can easily read and process various types of data files. Among them, CSV files are one of the most common and commonly used data file formats. This article will introduce how to use Pandas to read CSV files and perform data analysis, and provide specific code examples. 1. Import the necessary libraries First, we need to import the Pandas library and other related libraries that may be needed, as shown below: importpandasaspd 2. Read the CSV file using Pan

Introduction to data analysis methods

Jan 08, 2024 am 10:22 AM

Introduction to data analysis methods

Jan 08, 2024 am 10:22 AM

Common data analysis methods: 1. Comparative analysis method; 2. Structural analysis method; 3. Cross analysis method; 4. Trend analysis method; 5. Cause and effect analysis method; 6. Association analysis method; 7. Cluster analysis method; 8 , Principal component analysis method; 9. Scatter analysis method; 10. Matrix analysis method. Detailed introduction: 1. Comparative analysis method: Comparative analysis of two or more data to find the differences and patterns; 2. Structural analysis method: A method of comparative analysis between each part of the whole and the whole. ; 3. Cross analysis method, etc.

11 basic distributions that data scientists use 95% of the time

Dec 15, 2023 am 08:21 AM

11 basic distributions that data scientists use 95% of the time

Dec 15, 2023 am 08:21 AM

Following the last inventory of "11 Basic Charts Data Scientists Use 95% of the Time", today we will bring you 11 basic distributions that data scientists use 95% of the time. Mastering these distributions helps us understand the nature of the data more deeply and make more accurate inferences and predictions during data analysis and decision-making. 1. Normal Distribution Normal Distribution, also known as Gaussian Distribution, is a continuous probability distribution. It has a symmetrical bell-shaped curve with the mean (μ) as the center and the standard deviation (σ) as the width. The normal distribution has important application value in many fields such as statistics, probability theory, and engineering.

Machine learning and data analysis using Go language

Nov 30, 2023 am 08:44 AM

Machine learning and data analysis using Go language

Nov 30, 2023 am 08:44 AM

In today's intelligent society, machine learning and data analysis are indispensable tools that can help people better understand and utilize large amounts of data. In these fields, Go language has also become a programming language that has attracted much attention. Its speed and efficiency make it the choice of many programmers. This article introduces how to use Go language for machine learning and data analysis. 1. The ecosystem of machine learning Go language is not as rich as Python and R. However, as more and more people start to use it, some machine learning libraries and frameworks

How to use ECharts and php interfaces to implement data analysis and prediction of statistical charts

Dec 17, 2023 am 10:26 AM

How to use ECharts and php interfaces to implement data analysis and prediction of statistical charts

Dec 17, 2023 am 10:26 AM

How to use ECharts and PHP interfaces to implement data analysis and prediction of statistical charts. Data analysis and prediction play an important role in various fields. They can help us understand the trends and patterns of data and provide references for future decisions. ECharts is an open source data visualization library that provides rich and flexible chart components that can dynamically load and process data by using the PHP interface. This article will introduce the implementation method of statistical chart data analysis and prediction based on ECharts and php interface, and provide

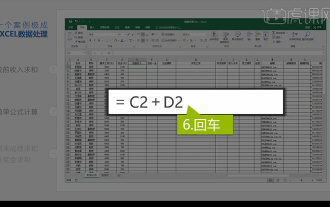

Integrated Excel data analysis

Mar 21, 2024 am 08:21 AM

Integrated Excel data analysis

Mar 21, 2024 am 08:21 AM

1. In this lesson, we will explain integrated Excel data analysis. We will complete it through a case. Open the course material and click on cell E2 to enter the formula. 2. We then select cell E53 to calculate all the following data. 3. Then we click on cell F2, and then we enter the formula to calculate it. Similarly, dragging down can calculate the value we want. 4. We select cell G2, click the Data tab, click Data Validation, select and confirm. 5. Let’s use the same method to automatically fill in the cells below that need to be calculated. 6. Next, we calculate the actual wages and select cell H2 to enter the formula. 7. Then we click on the value drop-down menu to click on other numbers.

What are the recommended data analysis websites?

Mar 13, 2024 pm 05:44 PM

What are the recommended data analysis websites?

Mar 13, 2024 pm 05:44 PM

Recommended: 1. Business Data Analysis Forum; 2. National People’s Congress Economic Forum - Econometrics and Statistics Area; 3. China Statistics Forum; 4. Data Mining Learning and Exchange Forum; 5. Data Analysis Forum; 6. Website Data Analysis; 7. Data analysis; 8. Data Mining Research Institute; 9. S-PLUS, R Statistics Forum.

Learn how to use the numpy library for data analysis and scientific computing

Jan 19, 2024 am 08:05 AM

Learn how to use the numpy library for data analysis and scientific computing

Jan 19, 2024 am 08:05 AM

With the advent of the information age, data analysis and scientific computing have become an important part of more and more fields. In this process, the use of computers for data processing and analysis has become an indispensable tool. In Python, the numpy library is a very important tool, which allows us to process and analyze data more efficiently and get results faster. This article will introduce the common functions and usage of numpy, and give some specific code examples to help you learn in depth. Installation of numpy library