A Comprehensive Guide to Building Multimodal RAG Systems

Retrieval Augmented Generation systems, better known as RAG systems, have become the de facto standard for building intelligent AI assistants that answer questions on custom enterprise data without the hassle of expensive fine-tuning of large language models (LLMs). One of the key advantages of RAG systems is that you can easily integrate your own data to augment your LLM’s intelligence and get more contextual answers to your questions. However, the key limitation of most RAG systems is that they work well only on text data, while a lot of real-world data is multimodal in nature, meaning a mixture of text, images, tables, and more. In this comprehensive hands-on guide, we will build a Multimodal RAG System that can handle mixed data formats using intelligent data transformations and multimodal LLMs.

Overview

- Retrieval Augmented Generation (RAG) systems enable intelligent AI assistants to answer questions on custom enterprise data without needing expensive LLM fine-tuning.

- Traditional RAG systems are constrained to text data, making them ineffective for multimodal data, which includes text, images, tables, and more.

- Multimodal RAG systems integrate multimodal data processing (text, images, tables) and use multimodal LLMs, like GPT-4o, to provide more contextual and accurate answers.

- Multimodal Large Language Models (LLMs) like GPT-4o, Gemini, and LLaVA-NeXT can process and generate responses from multiple data formats, handling mixed inputs like text and images.

- The guide walks through building a Multimodal RAG system with LangChain, integrating intelligent document loaders, vector databases, and multi-vector retrievers.

- The guide shows how to process complex multimodal queries by utilizing multimodal LLMs and intelligent retrieval systems, creating advanced AI systems capable of answering diverse data-driven questions.

Table of contents

- Traditional RAG System Architecture

- Traditional RAG System limitations

- What is Multimodal Data?

- What is a Multimodal Large Language Model?

- Multimodal RAG System Workflow

- End-to-End Workflow

- Multi-Vector Retrieval Workflow

- Detailed Multimodal RAG System Architecture

- Hands-on Implementation of our Multimodal RAG System

- Install Dependencies

- Enter Open AI API Key

- Setup Environment Variables

- Frequently Asked Questions

Traditional RAG System Architecture

A retrieval augmented generation (RAG) system architecture typically consists of two major steps:

- Data Processing and Indexing

- Retrieval and Response Generation

In Step 1, Data Processing and Indexing, we get our custom enterprise data into a more consumable format: we load the content of the documents (typically just the text), split large text elements into smaller chunks, convert these chunks into embeddings using an embedder model, and then store the chunks and their embeddings in a vector database, as depicted in the following figure.

In Step 2, Retrieval and Response Generation, the workflow starts with the user asking a question. Text chunks that are similar to the input question are retrieved from the vector database, and the question along with these context chunks is sent to an LLM to generate a human-like response, as depicted in the following figure.
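The two steps above can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions, not the implementation built later in this guide: a bag-of-words term count stands in for a real embedder model, an in-memory list stands in for the vector database, and the LLM call is left out, so the sketch stops at the prompt that would be sent to the LLM. The helper names (`embed`, `build_index`, `retrieve`, `build_prompt`) are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: bag-of-words term counts (stand-in for a real embedder model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# Step 1: data processing and indexing -> store (chunk, embedding) pairs
def build_index(chunks: list) -> list:
    return [(chunk, embed(chunk)) for chunk in chunks]


# Step 2: retrieval -> rank chunks by similarity to the question
def retrieve(index: list, question: str, k: int = 2) -> list:
    q = embed(question)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]


# Step 2 (cont.): the prompt that would be sent to an LLM (the LLM call itself is omitted)
def build_prompt(question: str, context: list) -> str:
    joined = "\n\n".join(context)
    return f"Answer the question using only this context:\n\n{joined}\n\nQuestion: {question}"


chunks = [
    "Wildfires are unplanned fires, including lightning-caused fires.",
    "The Forest Service carries out wildfire management on federal lands.",
    "Redis is an in-memory key-value store.",
]
index = build_index(chunks)
question = "Who manages wildfires on federal lands?"
top = retrieve(index, question, k=1)
print(build_prompt(question, top))
```

Swapping `embed` for a real embedding model and the list for a vector database gives the traditional text-only architecture described above.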

This two-step workflow is commonly used in the industry to build a traditional RAG system; however, it does have its own set of limitations, some of which we discuss below in detail.

Traditional RAG System limitations

Traditional RAG systems have several limitations, some of which are mentioned as follows:

- They are not privy to real-time data

- The system is only as good as the data you have in your vector database

- Most RAG systems only work on text data for both retrieval and generation

- Traditional LLMs can only process text content to generate answers

- Unable to work with multimodal data

In this article, we will focus particularly on two of these limitations: the inability of traditional RAG systems to work with multimodal content, and traditional LLMs that can only reason over and analyze text data to generate responses. Before diving into multimodal RAG systems, let’s first understand what multimodal data is.

What is Multimodal Data?

Multimodal data is essentially data belonging to multiple modalities. The formal definition of modality comes from the context of Human-Computer Interaction (HCI) systems, where a modality is defined as the classification of a single independent channel of input and output between a computer and a human (more details on Wikipedia). Common computer-human modalities include the following:

- Text: Input and output through written language (e.g., chat interfaces).

- Speech: Voice-based interaction (e.g., voice assistants).

- Vision: Image and video processing for visual recognition (e.g., face detection).

- Gestures: Hand and body movement tracking (e.g., gesture controls).

- Touch: Haptic feedback and touchscreens.

- Audio: Sound-based signals (e.g., music recognition, alerts).

- Biometrics: Interaction through physiological data (e.g., eye-tracking, fingerprints).

In short, multimodal data is essentially data that has a mixture of modalities or formats, as seen in the sample document below, with some of the distinct formats highlighted in various colors.

The key focus here is to build a RAG system that can handle documents with a mixture of data modalities, such as text, images, tables, and maybe even audio and video, depending on your data sources. This guide will focus on handling text, images, and tables. One of the key components needed to understand such data is multimodal large language models (LLMs).

What is a Multimodal Large Language Model?

Multimodal Large Language Models (LLMs) are essentially transformer-based LLMs that have been pre-trained and fine-tuned on multimodal data to analyze and understand various data formats, including text, images, tables, audio, and video. A true multimodal model should ideally be able not just to understand mixed data formats but also to generate them, as shown in the following workflow illustration of NExT-GPT, published in the paper NExT-GPT: Any-to-Any Multimodal Large Language Model.

From the paper on NExT-GPT, any true multimodal model would typically have the following stages:

- Multimodal Encoding Stage. Leveraging existing well-established models to encode inputs of various modalities.

- LLM Understanding and Reasoning Stage. An LLM is used as the core agent of NExT-GPT. Technically, they employ the Vicuna LLM, which takes as input the representations from different modalities and carries out semantic understanding and reasoning over the inputs. It outputs 1) the textual responses directly and 2) signal tokens for each modality that instruct the decoding layers whether to generate multimodal content and, if so, what content to produce.

- Multimodal Generation Stage. Receiving the multimodal signals with specific instructions from the LLM (if any), the Transformer-based output projection layers map the signal token representations into ones that the following multimodal decoders can understand. Technically, they employ current off-the-shelf latent conditioned diffusion models for the different modalities, i.e., Stable Diffusion (SD) for image synthesis, Zeroscope for video synthesis, and AudioLDM for audio synthesis.

However, most current Multimodal LLMs available for practical use are one-sided, which means they can understand mixed data formats but only generate text responses. The most popular commercial multimodal models are as follows:

- GPT-4V & GPT-4o (OpenAI): GPT-4o can understand text, images, audio, and video, although audio and video analysis are still not open to the public.

- Gemini (Google): A multimodal LLM from Google with true multimodal capabilities where it can understand text, audio, video, and images.

- Claude (Anthropic): A highly capable commercial LLM that includes multimodal capabilities in its latest versions, such as handling text and image inputs.

You can also consider open or open-source multimodal LLMs if you want to build a completely open-source solution, or if you have concerns about data privacy or latency and prefer to host everything locally in-house. The most popular open and open-source multimodal models are as follows:

- LLaVA-NeXT: An open-source multimodal model that can work with text, images, and also video, and is an improvement on top of the popular LLaVA model.

- PaliGemma: A vision-language model from Google that integrates both image and text processing, designed for tasks like optical character recognition (OCR), object detection, and visual question answering (VQA).

- Pixtral 12B: An advanced multimodal model from Mistral AI with 12 billion parameters that can process both images and text. Built on Mistral’s Nemo architecture, Pixtral 12B excels in tasks like image captioning and object recognition.

For our Multimodal RAG System, we will leverage GPT-4o, one of the most powerful multimodal models currently available.

Multimodal RAG System Workflow

In this section, we will explore potential ways to build the architecture and workflow of a multimodal RAG system. The following figure illustrates potential approaches in detail and highlights the one we will use in this guide.

End-to-End Workflow

Multimodal RAG systems can be implemented in various ways. The above figure illustrates three possible workflows recommended in the LangChain blog:

- Option 1: Use multimodal embeddings (such as CLIP) to embed images and text together. Retrieve either images or text using similarity search, linking to the raw images stored in a docstore. Pass raw images and text chunks to a multimodal LLM for answer synthesis.

- Option 2: Use a multimodal LLM (such as GPT-4o, GPT-4V, LLaVA) to produce text summaries from images. Embed and retrieve the text summaries using a text embedding model. Then reference the raw text chunks or tables from a docstore for answer synthesis by a regular LLM; in this case, images are excluded from the docstore.

- Option 3: Use a multimodal LLM (such as GPT-4o, GPT-4V, LLaVA) to produce text, table, and image summaries (text chunk summaries are optional). Embed and retrieve the text, table, and image summaries with references to the raw elements, as in Option 1. Raw images, tables, and text chunks are then passed to a multimodal LLM for answer synthesis. This option is sensible if we don’t want to use multimodal embeddings, which don’t work well on images that are mostly charts and visuals. However, we can also use multimodal embedding models here to embed images and summary descriptions together if necessary.

Option 1 is limited because it does not work well when images are charts and visuals, which is often the case in many documents: multimodal embedding models often can’t capture granular information, such as the numbers in these visuals, in a meaningful embedding. Option 2 is severely limited because we do not end up using images at all in this system, even if they contain valuable information, so it is not truly a multimodal RAG system.

Hence, we will proceed with Option 3 as our Multimodal RAG System workflow. In this workflow, we will create summaries out of our images, tables, and, optionally, our text chunks and use a multi-vector retriever, which can help in mapping and retrieving the original image, table, and text elements based on their corresponding summaries.
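To make the summarization step concrete, here is a minimal sketch of how an extracted image could be packaged for a multimodal LLM such as GPT-4o. It assumes the OpenAI Chat Completions message format, in which an image is passed as a base64 data URL inside an `image_url` content part. The prompt wording and the helper name `build_image_summary_message` are hypothetical, and the actual API call and response handling are omitted.

```python
import base64

# Hypothetical prompt wording for illustration only
IMAGE_SUMMARY_PROMPT = (
    "Describe this image in detail for retrieval. "
    "If it is a chart or table, include the key numbers and trends."
)


def build_image_summary_message(image_bytes: bytes, mime_type: str = "image/jpeg") -> dict:
    """Build one Chat Completions-style user message pairing the prompt with an image.

    The image is inlined as a base64 data URL in an "image_url" content part.
    """
    b64 = base64.b64encode(image_bytes).decode("utf-8")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": IMAGE_SUMMARY_PROMPT},
            {"type": "image_url", "image_url": {"url": f"data:{mime_type};base64,{b64}"}},
        ],
    }


# Placeholder bytes; in practice these would be the bytes of an image extracted from the PDF
msg = build_image_summary_message(b"\xff\xd8\xff placeholder bytes")
print(msg["content"][1]["image_url"]["url"][:30])
```

The text summary returned for each such message is what gets embedded and indexed, while the raw image itself is kept for answer synthesis.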

Multi-Vector Retrieval Workflow

Given the workflow discussed in the previous section, our retrieval workflow will use a multi-vector retriever, as depicted in the following illustration and recommended in the LangChain blog. The key purpose of the multi-vector retriever is to act as a wrapper that maps every text chunk, table, and image summary to the actual text chunk, table, and image element, which can then be obtained during retrieval.

The workflow illustrated above will first use a document parsing tool like Unstructured to extract the text, table, and image elements separately. Then we will pass each extracted element into an LLM and generate a detailed text summary, as depicted above. Next, we will store the summaries and their embeddings in a vector database, using any popular embedding model, such as OpenAI’s embedding models. We will also store the corresponding raw document element (text, table, image) for each summary in a document store, which can be any database platform like Redis.

The multi-vector retriever links each summary and its embedding to the original document’s raw element (text, table, image) using a common document identifier (doc_id). When a user question comes in, the multi-vector retriever first retrieves the summaries that are semantically similar (by embedding similarity) to the question; then, using the common doc_ids, it returns the original text, table, and image elements, which are passed on to the RAG system’s LLM as the context to answer the user question.
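The doc_id mapping can be illustrated with a small, self-contained sketch. This is not LangChain’s `MultiVectorRetriever`; it is a hypothetical toy class that mimics the same idea, with a list standing in for the vector database, a dict standing in for the document store (Redis in this guide), and a bag-of-words term count standing in for a real embedding model. Only the summaries are searched, but the raw elements are what get returned.

```python
import math
import re
import uuid
from collections import Counter


def embed(text):
    """Toy embedding: bag-of-words term counts (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToyMultiVectorRetriever:
    """Hypothetical sketch: search summaries, return raw elements via a shared doc_id."""

    def __init__(self):
        self.vectorstore = []  # (summary embedding, doc_id): stands in for the vector DB
        self.docstore = {}     # doc_id -> raw element: stands in for Redis

    def add(self, summaries, raw_elements):
        for summary, raw in zip(summaries, raw_elements):
            doc_id = str(uuid.uuid4())  # common identifier linking both stores
            self.vectorstore.append((embed(summary), doc_id))
            self.docstore[doc_id] = raw

    def retrieve(self, question, k=1):
        q = embed(question)
        ranked = sorted(self.vectorstore, key=lambda item: cosine(q, item[0]), reverse=True)
        # Look up the raw elements behind the top-k summaries
        return [self.docstore[doc_id] for _, doc_id in ranked[:k]]


retriever = ToyMultiVectorRetriever()
retriever.add(
    summaries=[
        "Table of the number of wildfires and acres burned per year, 2018 to 2022.",
        "Paragraph defining wildfires and which agencies respond to them.",
    ],
    raw_elements=[
        "<table>...raw HTML table...</table>",  # placeholder raw table
        "Wildfires are unplanned fires, including lightning-caused fires...",  # placeholder text chunk
    ],
)
print(retriever.retrieve("How many acres burned in 2020?"))
```

The question is matched against the table’s summary, yet the raw table is what comes back, which is what lets the final LLM see the original element rather than a paraphrase of it.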

Detailed Multimodal RAG System Architecture

Now, let’s dive deep into the detailed system architecture of our multimodal RAG system. We will understand each component in this workflow and what happens step-by-step. The following illustration depicts this architecture in detail.

We will now discuss the key steps of the above-illustrated multimodal RAG System and how it will work. The workflow is as follows:

- Load all documents and use a document loader like unstructured.io to extract text chunks, images, and tables.

- If necessary, convert HTML tables to markdown, since LLMs often handle markdown tables very effectively.

- Pass each text chunk, image, and table into a multimodal LLM like GPT-4o and get a detailed summary.

- Store summaries in a vector DB and the raw document pieces in a document DB like Redis

- Connect the two databases with a common document_id using a multi-vector retriever to identify which summary maps to which raw document piece.

- Connect this multi-vector retrieval system with a multimodal LLM like GPT-4o.

- Query the system and, based on the summaries most similar to the query, retrieve the raw document pieces, including tables and images, as the context.

- Using the above context, generate a response using the multimodal LLM for the question.
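The table-conversion step in the list above can be sketched with only Python’s standard library. The guide installs `htmltabletomd` for this purpose; the code below is not that library’s API but a hypothetical stand-in (`html_table_to_markdown`) built on `html.parser.HTMLParser`. It is deliberately simplified: it treats the first row as the header, pads short rows with empty cells, and ignores `colspan`/`rowspan`.

```python
from html.parser import HTMLParser


class _TableParser(HTMLParser):
    """Collects the text of every <td>/<th> cell, row by row (colspan/rowspan are ignored)."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None   # cells of the row currently being parsed
        self._cell = None  # text fragments of the cell currently being parsed

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            # Collapse internal whitespace so each cell fits on one markdown line
            self._row.append(" ".join("".join(self._cell).split()))
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)


def html_table_to_markdown(html_text: str) -> str:
    """Hypothetical helper: the first row becomes the header and short rows are padded."""
    parser = _TableParser()
    parser.feed(html_text)
    if not parser.rows:
        return ""
    width = max(len(row) for row in parser.rows)
    rows = [row + [""] * (width - len(row)) for row in parser.rows]
    lines = ["| " + " | ".join(rows[0]) + " |", "|" + "---|" * width]
    lines += ["| " + " | ".join(row) + " |" for row in rows[1:]]
    return "\n".join(lines)


sample_html = (
    "<table><tr><th></th><th>2018</th><th>2019</th></tr>"
    "<tr><td>Federal</td><td>12.5</td><td>10.9</td></tr></table>"
)
print(html_table_to_markdown(sample_html))
```

For real tables with merged cells, a dedicated converter such as the one the guide installs is the safer choice; this sketch only shows why the markdown form keeps row and column structure that flattened text loses.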

It’s not too complicated once you see all the components in place and structure the flow using the above steps! Let’s implement this system now in the next section.

Hands-on Implementation of our Multimodal RAG System

We will now implement the Multimodal RAG System we have discussed so far using LangChain. We will load the raw text, table, and image elements from our documents into Redis, store the element summaries and their embeddings in a Chroma vector database, and connect the two using a multi-vector retriever. Connections to LLMs and prompting will be handled with LangChain. For our multimodal LLM, we will use OpenAI’s GPT-4o, a powerful multimodal LLM. However, you are free to use any other multimodal LLM, including the open-source options mentioned earlier. We recommend a powerful multimodal LLM that can effectively understand images, tables, and text to generate quality responses.

Install Dependencies

We start by installing the necessary dependencies, i.e., the libraries we will use to build our system. These include langchain and unstructured, as well as supporting packages like openai and chroma, and utilities for data processing and for extracting tables and images.

!pip install langchain
!pip install langchain-openai
!pip install langchain-chroma
!pip install langchain-community
!pip install langchain-experimental
!pip install "unstructured[all-docs]"
!pip install htmltabletomd
# install OCR dependencies for unstructured (-y avoids the interactive confirmation prompt)
!sudo apt-get install -y tesseract-ocr
!sudo apt-get install -y poppler-utils

Downloading Data

We download a report on wildfire statistics in the US from the Congressional Research Service Reports page, which provides open access to detailed reports. This document has a mixture of text, tables, and images, as shown in the Multimodal Data section above. We will build a simple "chat with my PDF" application here using our multimodal RAG System, but you can easily extend it to multiple documents.

!wget https://sgp.fas.org/crs/misc/IF10244.pdf

OUTPUT

--2024-08-18 10:08:54-- https://sgp.fas.org/crs/misc/IF10244.pdf
Connecting to sgp.fas.org (sgp.fas.org)|18.172.170.73|:443... connected.
HTTP request sent, awaiting response... 200 OK
Length: 435826 (426K) [application/pdf]
Saving to: ‘IF10244.pdf’
IF10244.pdf 100%[===================>] 425.61K 732KB/s in 0.6s
2024-08-18 10:08:55 (732 KB/s) - ‘IF10244.pdf’ saved [435826/435826]

Extracting Document Elements with Unstructured

We will now use the unstructured library, which provides open-source components for ingesting and pre-processing images and text documents, such as PDFs, HTML, Word docs, and more. We will use it to extract and chunk text elements and extract tables and images separately using the following code snippet.

from langchain_community.document_loaders import UnstructuredPDFLoader

doc = './IF10244.pdf'

# takes 1 min on Colab
loader = UnstructuredPDFLoader(file_path=doc,
                               strategy='hi_res',
                               extract_images_in_pdf=True,
                               infer_table_structure=True,
                               # section-based chunking
                               chunking_strategy="by_title",
                               max_characters=4000,  # max size of chunks
                               new_after_n_chars=4000,  # preferred size of chunks
                               # smaller chunks (assumed value): sections under 2000 chars are combined
                               combine_text_under_n_chars=2000,
                               mode='elements')  # return one Document per extracted element
data = loader.load()
len(data)

OUTPUT

7

This tells us that unstructured has successfully extracted seven elements from the document and also saved the images separately in the `figures` directory. It used section-based chunking to split text elements along the section headings in the document, with a chunk size of roughly 4,000 characters. Document-intelligence deep learning models were also used to detect and extract tables and images separately. We can look at the types of elements extracted using the following code.

[doc.metadata['category'] for doc in data]

OUTPUT

['CompositeElement',
 'CompositeElement',
 'Table',
 'CompositeElement',
 'CompositeElement',
 'Table',
 'CompositeElement']

This tells us we have some text chunks and tables in our extracted content. We can now explore and check out the contents of some of these elements.

# This is a text chunk element
data[0]

OUTPUT

Document(metadata={'source': './IF10244.pdf', 'filetype': 'application/pdf',
 'languages': ['eng'], 'last_modified': '2024-04-10T01:27:48', 'page_number':
 1, 'orig_elements': 'eJzF...eUyOAw==', 'file_directory': '.', 'filename':
 'IF10244.pdf', 'category': 'CompositeElement', 'element_id':
 '569945de4df264cac7ff7f2a5dbdc8ed'}, page_content='a. aa = Informing the
 legislative debate since 1914 Congressional Research Service\n\nUpdated June
 1, 2023\n\nWildfire Statistics\n\nWildfires are unplanned fires, including
 lightning-caused fires, unauthorized human-caused fires, and escaped fires
 from prescribed burn projects. States are responsible for responding to
 wildfires that begin on nonfederal (state, local, and private) lands, except
 for lands protected by federal agencies under cooperative agreements. The
 federal government is responsible for responding to wildfires that begin on
 federal lands. The Forest Service (FS)—within the U.S. Department of
 Agriculture—carries out wildfire management ...... Over 40% of those acres
 were in Alaska (3.1 million acres).\n\nAs of June 1, 2023, around 18,300
 wildfires have impacted over 511,000 acres this year.')

The following snippet depicts one of the table elements extracted.

# This is a table element
data[2]

OUTPUT

Document(metadata={'source': './IF10244.pdf', 'last_modified': '2024-04-10T01:27:48', 'text_as_html': '

|  | 2018 | 2019 | 2020 | 2021 | 2022 |
|---|---|---|---|---|---|
| Number of Fires (thousands) | | | | | |
| Federal | 12.5 | 10.9 | 14.4 | 14.0 | 11.7 |
| FS | ......50.5 | 59.0 | 59.0 | 69.0 | |
| Acres Burned (millions) | | | | | |
| Federal | 4.6 | 3.1 | 7.1 | 5.2 | 40 |
| FS | ......1.6 | 3.1 | Lg | 3.6 | |
| Total | 8.8 | 4.7 | 10.1 | 7.1 | 7.6 |

'page_number': 1, 'orig_elements': 'eJylVW1.....AOBFljW', 'file_directory': '.', 'filename': 'IF10244.pdf', 'category': 'Table', 'element_id': '40059c193324ddf314ed76ac3fe2c52c'}, page_content='2018 2019 2020 Number of Fires (thousands) Federal 12.5 10......Nonfederal 4.1 1.6 3.1 1.9 Total 8.8 4.7 10.1 7.1

We can see the text content extracted from the table using the following code snippet.

print(data[2].page_content)

OUTPUT

2018 2019 2020 Number of Fires (thousands) Federal 12.5 10.9 14.4 FS 5.6 5.3
6.7 DOI 7.0 5.3 7.6 2021 14.0 6.2 7.6 11.7 5.9 5.8 Other 0.1 0.2 0.1 Nonfederal 45.6 39.6 44.6 45.0 57.2 Total 58.1 Acres Burned (millions)
Federal 4.6 FS 2.3 DOI 2.3 50.5 3.1 0.6 2.3 59.0 7.1 4.8 2.3 59.0 5.2 4.1
1.0 69.0 4.0 1.9 2.1 Other Total 8.8 4.7 10.1 7.1

While this text can be fed into an LLM, the structure of the table is lost, so we will instead work with the HTML version of the table, which we can transform later.

data[2].metadata['text_as_html']

OUTPUT

|  | 2018 | 2019 | 2020 | 2021 | 2022 |
|---|---|---|---|---|---|
| Number of Fires (thousands) | | | | | |
| Federal | 12.5 | 10.9 | 14.4 | 14.0 | 11.7 |
| ...... | 45.6 | 39.6 | 44.6 | 45.0 | $7.2 |
| Total | 58.1 | 50.5 | 59.0 | 59.0 | 69.0 |
| Acres Burned (millions) | | | | | |
| Federal | 4.6 | 3.1 | 7.1 | 5.2 | 40 |
| FS | 2.3 | ......1.0 | 2.1 | | |
| Other | | | | | |
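With text chunks and HTML tables identified, a natural next step is to separate the extracted elements by type. Here is a minimal sketch under stated assumptions: `Element` is a hypothetical dataclass standing in for LangChain’s `Document` (only `page_content` and `metadata` are modelled), the element contents are placeholders, and `split_by_category` is a hypothetical helper that keeps each table’s `text_as_html` when present, since the flattened `page_content` loses the table structure.

```python
from dataclasses import dataclass, field


@dataclass
class Element:
    """Stand-in for LangChain's Document: only page_content and metadata are modelled."""
    page_content: str
    metadata: dict = field(default_factory=dict)


def split_by_category(elements):
    """Separate text chunks from tables, keeping each table's HTML when it is available."""
    texts, tables = [], []
    for el in elements:
        category = el.metadata.get("category")
        if category == "Table":
            # Prefer the structured HTML; fall back to the flattened text
            tables.append(el.metadata.get("text_as_html", el.page_content))
        elif category == "CompositeElement":
            texts.append(el.page_content)
    return texts, tables


# Placeholder elements with the same category sequence as the output shown earlier
categories = ["CompositeElement", "CompositeElement", "Table", "CompositeElement",
              "CompositeElement", "Table", "CompositeElement"]
sample_data = [Element(page_content=f"element {i}", metadata={"category": c})
               for i, c in enumerate(categories)]
texts, tables = split_by_category(sample_data)
print(len(texts), len(tables))
```

In the real pipeline, the list returned by `loader.load()` would take the place of `sample_data`, and the HTML tables could then be converted to markdown before summarization.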

The above is the detailed content of A Comprehensive Guide to Building Multimodal RAG Systems. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1673

1673

14

14

1428

1428

52

52

1333

1333

25

25

1278

1278

29

29

1257

1257

24

24

How to Build MultiModal AI Agents Using Agno Framework?

Apr 23, 2025 am 11:30 AM

How to Build MultiModal AI Agents Using Agno Framework?

Apr 23, 2025 am 11:30 AM

While working on Agentic AI, developers often find themselves navigating the trade-offs between speed, flexibility, and resource efficiency. I have been exploring the Agentic AI framework and came across Agno (earlier it was Phi-

How to Add a Column in SQL? - Analytics Vidhya

Apr 17, 2025 am 11:43 AM

How to Add a Column in SQL? - Analytics Vidhya

Apr 17, 2025 am 11:43 AM

SQL's ALTER TABLE Statement: Dynamically Adding Columns to Your Database In data management, SQL's adaptability is crucial. Need to adjust your database structure on the fly? The ALTER TABLE statement is your solution. This guide details adding colu

OpenAI Shifts Focus With GPT-4.1, Prioritizes Coding And Cost Efficiency

Apr 16, 2025 am 11:37 AM

OpenAI Shifts Focus With GPT-4.1, Prioritizes Coding And Cost Efficiency

Apr 16, 2025 am 11:37 AM

The release includes three distinct models, GPT-4.1, GPT-4.1 mini and GPT-4.1 nano, signaling a move toward task-specific optimizations within the large language model landscape. These models are not immediately replacing user-facing interfaces like

New Short Course on Embedding Models by Andrew Ng

Apr 15, 2025 am 11:32 AM

New Short Course on Embedding Models by Andrew Ng

Apr 15, 2025 am 11:32 AM

Unlock the Power of Embedding Models: A Deep Dive into Andrew Ng's New Course Imagine a future where machines understand and respond to your questions with perfect accuracy. This isn't science fiction; thanks to advancements in AI, it's becoming a r

Rocket Launch Simulation and Analysis using RocketPy - Analytics Vidhya

Apr 19, 2025 am 11:12 AM

Rocket Launch Simulation and Analysis using RocketPy - Analytics Vidhya

Apr 19, 2025 am 11:12 AM

Simulate Rocket Launches with RocketPy: A Comprehensive Guide This article guides you through simulating high-power rocket launches using RocketPy, a powerful Python library. We'll cover everything from defining rocket components to analyzing simula

Google Unveils The Most Comprehensive Agent Strategy At Cloud Next 2025

Apr 15, 2025 am 11:14 AM

Google Unveils The Most Comprehensive Agent Strategy At Cloud Next 2025

Apr 15, 2025 am 11:14 AM

Gemini as the Foundation of Google’s AI Strategy Gemini is the cornerstone of Google’s AI agent strategy, leveraging its advanced multimodal capabilities to process and generate responses across text, images, audio, video and code. Developed by DeepM

Open Source Humanoid Robots That You Can 3D Print Yourself: Hugging Face Buys Pollen Robotics

Apr 15, 2025 am 11:25 AM

Open Source Humanoid Robots That You Can 3D Print Yourself: Hugging Face Buys Pollen Robotics

Apr 15, 2025 am 11:25 AM

“Super happy to announce that we are acquiring Pollen Robotics to bring open-source robots to the world,” Hugging Face said on X. “Since Remi Cadene joined us from Tesla, we’ve become the most widely used software platform for open robotics thanks to

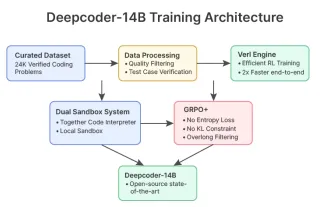

DeepCoder-14B: The Open-source Competition to o3-mini and o1

Apr 26, 2025 am 09:07 AM

DeepCoder-14B: The Open-source Competition to o3-mini and o1

Apr 26, 2025 am 09:07 AM

In a significant development for the AI community, Agentica and Together AI have released an open-source AI coding model named DeepCoder-14B. Offering code generation capabilities on par with closed-source competitors like OpenAI