What is F-Beta Score?

The F-Beta Score: A Comprehensive Guide to Model Evaluation in Machine Learning

In machine learning and statistical modeling, accurately assessing model performance is crucial. While accuracy is a common metric, it often falls short on imbalanced datasets, where a model can score well simply by predicting the majority class, and it says nothing about the trade-off between precision and recall. The F-Beta Score is a more flexible evaluation metric that lets you prioritize either precision or recall depending on the task. This article explains the F-Beta Score, its calculation, its applications, and its implementation in Python.

Learning Objectives:

- Grasp the concept and significance of the F-Beta Score.

- Understand the F-Beta Score formula and its components.

- Learn when to apply the F-Beta Score in model assessment.

- Explore practical examples using various β values.

- Master F-Beta Score computation using Python.

Table of Contents:

- What is the F-Beta Score?

- When to Use the F-Beta Score

- Calculating the F-Beta Score

- Practical Applications of the F-Beta Score

- Python Implementation

- Conclusion

- Frequently Asked Questions

What is the F-Beta Score?

The F-Beta Score assesses a model's output by combining precision and recall into a single number. Unlike the F1 Score, which is the harmonic mean of precision and recall with both weighted equally, the F-Beta Score lets you adjust the weight of recall relative to precision through the β parameter.

- Precision: The proportion of correctly predicted positive instances among all predicted positive instances.

- Recall (Sensitivity): The proportion of correctly predicted positive instances among all actual positive instances.

- β (Beta): A parameter that controls the relative importance of precision and recall:

  - β > 1: Recall is more important.

  - β < 1: Precision is more important.

  - β = 1: Precision and recall are equally weighted (equivalent to the F1 Score).
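
To see how β shifts the score, here is a small sketch in plain Python. It assumes the standard F-Beta formula (covered in the calculation section of this article) and uses made-up precision and recall values (0.60 and 0.90). The helper name `f_beta` is illustrative, not a library function.

```python
def f_beta(precision: float, recall: float, beta: float) -> float:
    """Weighted harmonic mean of precision and recall (standard F-beta formula)."""
    if beta <= 0:
        raise ValueError("beta must be positive")
    denom = beta**2 * precision + recall
    # By convention, return 0.0 when the score is undefined (precision = recall = 0).
    return 0.0 if denom == 0 else (1 + beta**2) * precision * recall / denom


# Hypothetical model: recall (0.90) is higher than precision (0.60).
precision, recall = 0.60, 0.90

scores = {beta: f_beta(precision, recall, beta) for beta in (0.5, 1.0, 2.0)}
for beta, score in scores.items():
    print(f"beta={beta}: F-beta={score:.3f}")
```

Because this hypothetical model's recall exceeds its precision, the score rises as β grows: roughly 0.643 for β = 0.5, 0.720 for β = 1, and 0.818 for β = 2. A model with higher precision than recall would show the opposite trend.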

When to Use the F-Beta Score

The F-Beta Score is particularly useful in scenarios demanding a careful balance or prioritization of precision and recall. Here are some key situations:

- Imbalanced Datasets: In datasets with a skewed class distribution (e.g., fraud detection, medical diagnosis), accuracy can be misleading. The F-Beta Score allows you to adjust β to emphasize recall (fewer missed positives) or precision (fewer false positives), aligning with the cost associated with each type of error.

- Domain-Specific Prioritization: Different applications have varying tolerances for different types of errors. For example:

  - Medical Diagnosis: Prioritize recall (high β) to minimize missed diagnoses.

  - Spam Filtering: Prioritize precision (low β) to minimize false positives (flagging legitimate emails as spam).

- Optimizing the Precision-Recall Trade-off: The F-Beta Score provides a single metric to guide the optimization process, allowing for targeted improvements in either precision or recall.

- Cost-Sensitive Tasks: When the costs of false positives and false negatives differ significantly, the F-Beta Score helps choose the optimal balance.
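
As one way to make the trade-off concrete, the sketch below sweeps a decision threshold over predicted probabilities and keeps the threshold with the highest F-Beta Score. The labels and scores are invented, and the helper names (`f_beta_at`, `best_threshold`) are hypothetical. The score is computed from TP, FP and FN counts using a form that is algebraically equivalent to the usual precision/recall formula. With real data you would run such a sweep on a validation set.

```python
def f_beta_at(y_true, y_score, threshold, beta):
    """F-beta when every score >= threshold is predicted positive."""
    tp = sum(1 for t, s in zip(y_true, y_score) if s >= threshold and t == 1)
    fp = sum(1 for t, s in zip(y_true, y_score) if s >= threshold and t == 0)
    fn = sum(1 for t, s in zip(y_true, y_score) if s < threshold and t == 1)
    b2 = beta**2
    # Count form of F-beta: (1 + b^2) * TP / ((1 + b^2) * TP + b^2 * FN + FP)
    denom = (1 + b2) * tp + b2 * fn + fp
    return (1 + b2) * tp / denom if denom else 0.0


def best_threshold(y_true, y_score, beta):
    """Try each distinct score as a threshold; return (threshold, F-beta) of the best one."""
    candidates = sorted(set(y_score))
    return max(
        ((t, f_beta_at(y_true, y_score, t, beta)) for t in candidates),
        key=lambda pair: pair[1],
    )


# Invented labels and predicted probabilities, for illustration only.
y_true = [1, 1, 0, 1, 0, 1, 0, 1, 0, 0]
y_score = [0.95, 0.90, 0.80, 0.70, 0.60, 0.50, 0.40, 0.30, 0.20, 0.10]

for beta in (0.5, 1.0, 2.0):
    threshold, score = best_threshold(y_true, y_score, beta)
    print(f"beta={beta}: best threshold={threshold:.2f}, F-beta={score:.3f}")
```

With this toy data, β = 0.5 selects a high threshold (0.90), where every positive prediction is correct, while β = 2 selects a low one (0.30), where no actual positive is missed.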

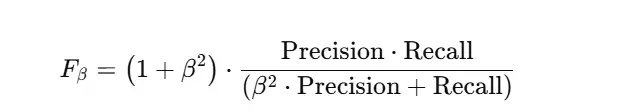

Calculating the F-Beta Score

The F-Beta Score is calculated using the precision and recall derived from a confusion matrix:

| | Predicted Positive | Predicted Negative |
|---|---|---|
| Actual Positive | True Positive (TP) | False Negative (FN) |
| Actual Negative | False Positive (FP) | True Negative (TN) |

- Calculate Precision: Precision = TP / (TP + FP)

- Calculate Recall: Recall = TP / (TP + FN)

- Calculate F-Beta Score: Fβ = (1 + β²) * (Precision * Recall) / (β² * Precision + Recall)
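
The three steps above can be sketched in plain Python. The counts (TP = 40, FP = 10, FN = 20) are invented for illustration, and the function name `fbeta_from_counts` is hypothetical. The sketch also checks the result against an algebraically equivalent form written directly in terms of counts, Fβ = (1 + β²) * TP / ((1 + β²) * TP + β² * FN + FP).

```python
def fbeta_from_counts(tp: int, fp: int, fn: int, beta: float = 1.0) -> float:
    """Compute F-beta from confusion-matrix counts via precision and recall."""
    # Step 1: precision = TP / (TP + FP); treated as 0.0 if nothing was predicted positive.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    # Step 2: recall = TP / (TP + FN); treated as 0.0 if there are no actual positives.
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # Step 3: combine them with the beta weighting.
    denom = beta**2 * precision + recall
    return (1 + beta**2) * precision * recall / denom if denom else 0.0


# Illustrative counts (not from any real model).
tp, fp, fn = 40, 10, 20
beta = 2.0

f2 = fbeta_from_counts(tp, fp, fn, beta)

# Equivalent form using counts only.
f2_counts = (1 + beta**2) * tp / ((1 + beta**2) * tp + beta**2 * fn + fp)

print(f"F2 via precision/recall: {f2:.4f}")
print(f"F2 via counts:           {f2_counts:.4f}")
```

Both lines print 0.6897 for these counts. Note that TN never enters the calculation, which is why a large number of true negatives in an imbalanced dataset does not inflate the score the way it inflates accuracy.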

Practical Applications of the F-Beta Score

The F-Beta Score finds widespread application across numerous domains:

- Healthcare: Disease detection, drug discovery

- Finance: Fraud detection, risk assessment

- Cybersecurity: Intrusion detection, threat analysis

- Natural Language Processing: Sentiment analysis, spam filtering, text classification

- Recommender Systems: Product recommendations, content suggestions

- Search Engines: Information retrieval, query processing

- Autonomous Systems: Object detection, decision-making

Python Implementation

The scikit-learn library provides a straightforward way to calculate the F-Beta Score:

from sklearn.metrics import fbeta_score, precision_score, recall_score, confusion_matrix

import numpy as np

# Example data

y_true = np.array([1, 0, 1, 1, 0, 1, 0, 0, 1, 0])

y_pred = np.array([1, 0, 1, 0, 0, 1, 0, 1, 1, 0])

# Calculate scores

precision = precision_score(y_true, y_pred)

recall = recall_score(y_true, y_pred)

f1 = fbeta_score(y_true, y_pred, beta=1)

f2 = fbeta_score(y_true, y_pred, beta=2)

f05 = fbeta_score(y_true, y_pred, beta=0.5)

print(f"Precision: {precision:.2f}")

print(f"Recall: {recall:.2f}")

print(f"F1 Score: {f1:.2f}")

print(f"F2 Score: {f2:.2f}")

print(f"F0.5 Score: {f05:.2f}")

# Confusion matrix

conf_matrix = confusion_matrix(y_true, y_pred)

print("\nConfusion Matrix:")

print(conf_matrix)

Conclusion

The F-Beta Score is a powerful tool for evaluating machine learning models, particularly when dealing with imbalanced datasets or situations where the cost of different types of errors varies. Its flexibility in weighting precision and recall makes it adaptable to a wide range of applications. By choosing β to match the relative cost of false negatives and false positives, you can make your evaluation reflect what matters in your application.

Frequently Asked Questions

- Q1: What is the F-Beta Score used for? A1: To evaluate model performance by balancing precision and recall based on application needs.

- Q2: How does β affect the F-Beta Score? A2: Higher β values prioritize recall; lower β values prioritize precision.

- Q3: Is the F-Beta Score suitable for imbalanced datasets? A3: Yes, it's highly effective for imbalanced datasets.

- Q4: How is the F-Beta Score different from the F1 Score? A4: The F1 Score is a special case of the F-Beta Score with β = 1.

- Q5: Can I calculate the F-Beta Score without a library? A5: Yes, but libraries like scikit-learn simplify the process.
