Andrej Karpathy on Puzzle-Solving Benchmarks

Evaluating AI Progress: Beyond Puzzle-Solving Benchmarks

Benchmarks have long been the standard way to measure progress in AI, offering a practical means of evaluating and comparing system capabilities. But is this really the best way to evaluate AI systems? Andrej Karpathy recently questioned the adequacy of this approach in a post on X. AI systems are becoming more proficient at solving predefined problems, but their broader usefulness and adaptability remain uncertain. This raises an important question: by focusing so heavily on puzzle-solving benchmarks, are we holding back AI's real potential?

Personally, I'm not that excited about these little puzzle benchmarks; it feels like the Atari era all over again. The benchmark I care more about is closer to the sum of annual recurring revenue (ARR) across AI products, though I'm not sure there is a simpler or public metric that captures most of it. I know the joke is that it's Nvidia.

— Andrej Karpathy (@karpathy) December 23, 2024

Table of contents

- Problems with puzzle benchmarking

- Key challenges of current benchmarking

- Moving towards more meaningful benchmarks

- Real-world mission simulation

- Long-term planning and reasoning

- Ethics and social awareness

- Cross-domain generalization capability

- The future of AI benchmarking

- Conclusion

Problems with puzzle benchmarking

Benchmarks such as MMLU and GLUE have undoubtedly driven significant advances in NLP and deep learning. However, they often reduce complex, real-world challenges to well-defined puzzles with clear goals and evaluation criteria. While this simplification is practical for research, it can obscure the deeper capabilities an AI system needs to have a meaningful impact on society.

Karpathy's post highlights a fundamental problem: "Benchmarks are becoming more and more like puzzle games." The responses to his post suggest broad agreement within the AI community. Many commenters stressed that the ability to generalize and adapt to new, loosely defined tasks matters far more than strong performance on narrowly defined benchmarks.


Key challenges of current benchmarking

Overfitting to benchmarks

AI systems are often optimized to perform well on a specific dataset or task, which leads to overfitting. Even when the benchmark dataset is not explicitly used during training, data leakage can occur, so the model inadvertently learns benchmark-specific patterns. A high benchmark score therefore does not necessarily translate into real-world utility, and it can hide weaker performance across the wider range of real-world applications.
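One common way to screen for the data leakage described above is an n-gram overlap check: if a large share of a benchmark item's word n-grams also appear in the training corpus, the item is flagged as possibly contaminated. The sketch below is a minimal illustration of that heuristic, not a method from Karpathy's post; the function names, the default n-gram size of 8, and the 0.5 threshold are all assumptions chosen for illustration.

```python
from typing import Iterable, List, Set, Tuple

NGram = Tuple[str, ...]


def word_ngrams(text: str, n: int) -> Set[NGram]:
    """Return the set of lowercase, whitespace-tokenized word n-grams in `text`."""
    tokens = text.lower().split()
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def build_index(corpus: Iterable[str], n: int) -> Set[NGram]:
    """Collect every n-gram that appears anywhere in the (hypothetical) training corpus."""
    index: Set[NGram] = set()
    for doc in corpus:
        index |= word_ngrams(doc, n)
    return index


def overlap_ratio(item: str, index: Set[NGram], n: int) -> float:
    """Fraction of the item's n-grams that also occur in the training index."""
    grams = word_ngrams(item, n)
    if not grams:  # item shorter than n tokens: nothing to compare
        return 0.0
    return len(grams & index) / len(grams)


def flag_contaminated(items: List[str], corpus: List[str],
                      n: int = 8, threshold: float = 0.5) -> List[bool]:
    """Flag each benchmark item whose overlap ratio meets the (assumed) threshold."""
    index = build_index(corpus, n)
    return [overlap_ratio(item, index, n) >= threshold for item in items]


if __name__ == "__main__":
    corpus = ["the quick brown fox jumps over the lazy dog near the river bank"]
    items = [
        "quick brown fox jumps over the lazy dog",   # copied from the corpus
        "what is the capital city of france today",  # unseen
    ]
    # A small n keeps the toy example readable; real checks use longer n-grams.
    print(flag_contaminated(items, corpus, n=3))  # → [True, False]
```

An exact-match check like this only catches verbatim or near-verbatim leakage; paraphrased or translated copies of benchmark items would slip through.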

Lack of generalization ability

Solving benchmark tasks does not guarantee that an AI system can handle similar but slightly different problems. For example, a system trained to caption images may struggle to describe images that fall outside its training data.
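A simple way to expose this kind of brittleness is to score a model on each benchmark item and on a slightly altered version of it, then report the gap. The image example would need a real vision model to run, so this minimal sketch swaps in a toy text task; the item format, the exact-match scoring, and the `lookup_model` stand-in are hypothetical choices made for illustration.

```python
from typing import Callable, List, Tuple

# Hypothetical item format: (original prompt, perturbed prompt, expected answer).
# The perturbed prompt asks the same question in a slightly different way.
Item = Tuple[str, str, str]
Model = Callable[[str], str]


def accuracy(model: Model, prompts: List[str], answers: List[str]) -> float:
    """Exact-match accuracy of `model` over parallel prompt/answer lists."""
    if not prompts:
        return 0.0
    correct = sum(model(p).strip() == a for p, a in zip(prompts, answers))
    return correct / len(prompts)


def generalization_gap(model: Model, items: List[Item]) -> Tuple[float, float, float]:
    """Return (original accuracy, perturbed accuracy, original minus perturbed)."""
    originals = [orig for orig, _, _ in items]
    perturbed = [pert for _, pert, _ in items]
    answers = [ans for _, _, ans in items]
    acc_orig = accuracy(model, originals, answers)
    acc_pert = accuracy(model, perturbed, answers)
    return acc_orig, acc_pert, acc_orig - acc_pert


if __name__ == "__main__":
    # A toy "model" that has memorized the exact benchmark strings.
    memorized = {"What is 2 + 3?": "5", "What is 4 + 4?": "8"}

    def lookup_model(prompt: str) -> str:
        return memorized.get(prompt, "unknown")

    items = [
        ("What is 2 + 3?", "What is 3 + 2?", "5"),
        ("What is 4 + 4?", "Add 4 and 4.", "8"),
    ]
    print(generalization_gap(lookup_model, items))  # → (1.0, 0.0, 1.0)
```

The memorizing model scores perfectly on the original items and fails every rephrased one, which is exactly the pattern a single leaderboard number would hide.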

Narrow task definition

Benchmarks usually focus on tasks such as classification, translation, or summarization. These tasks do not test a wider range of abilities, such as reasoning, creativity, or ethical decision-making.

Moving towards more meaningful benchmarks

The limitations of puzzle-solving benchmarks call for a change in how we evaluate AI. Here are some suggested ways to redefine AI benchmarks:

Real-world mission simulation

Instead of static datasets, benchmarks could use dynamic, real-world environments in which AI systems must adapt to changing conditions. Google DeepMind, for example, is already working in this direction with initiatives such as Genie 2, a large-scale world model. More details are available on the DeepMind blog and in Analytics Vidhya's articles.

- Simulated agents: Test AI in open-ended environments such as Minecraft or robotics simulators to evaluate its problem-solving ability and adaptability.

- Complex scenarios: Deploy AI in real-world domains (such as healthcare or climate modeling) to evaluate its usefulness in practical applications.

Long-term planning and reasoning

Benchmarks should test the AI’s ability to perform tasks that require long-term planning and reasoning. For example:

- Multi-step problem solving that requires maintaining understanding over time.

- Tasks that involve learning new skills autonomously.

Ethics and social awareness

As AI systems increasingly interact with humans, benchmarks must measure ethical reasoning and social understanding. This includes incorporating safety measures and regulatory safeguards to ensure AI systems are used responsibly; such safeguards can reduce risks while increasing trust in AI applications. Recent red-team evaluations provide a comprehensive framework for testing the safety and trustworthiness of AI in sensitive applications. Benchmarks must also check that AI systems make fair, unbiased decisions in scenarios involving sensitive data and can explain those decisions transparently to non-experts.

Cross-domain generalization capability

Benchmarks should test an AI system's ability to generalize across multiple unrelated tasks. For example, a single AI system should perform well in language understanding, image recognition, and robotics without specialized fine-tuning for each domain.
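How per-domain results are combined matters for a cross-domain benchmark. An arithmetic mean lets a system offset a near-failure in one domain with very high scores elsewhere. The sketch below assumes a geometric mean instead, so a weak domain pulls the overall score down sharply; this aggregation rule and the domain names are illustrative assumptions, not a standard proposed in the source.

```python
import math
from typing import Dict


def cross_domain_score(scores: Dict[str, float]) -> float:
    """Geometric mean of per-domain scores, each expected in [0, 1].

    A very low score in any single domain drags the result down sharply,
    and a zero in any domain makes the overall score zero.
    """
    if not scores:
        raise ValueError("need at least one domain score")
    for domain, s in scores.items():
        if not 0.0 <= s <= 1.0:
            raise ValueError(f"score for {domain!r} must be in [0, 1], got {s}")
    return math.prod(scores.values()) ** (1 / len(scores))


if __name__ == "__main__":
    # Hypothetical systems: one specialist, one generalist.
    specialist = {"language": 0.95, "vision": 0.95, "robotics": 0.05}
    generalist = {"language": 0.6, "vision": 0.6, "robotics": 0.6}
    for name, s in [("specialist", specialist), ("generalist", generalist)]:
        print(name, round(cross_domain_score(s), 2))
```

With these numbers, the arithmetic mean would rank the specialist higher (0.65 vs. 0.6), while the geometric mean reverses the ranking (about 0.36 vs. 0.6), rewarding consistent performance across domains.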

The future of AI benchmarking

As the AI field continues to develop, its benchmarks must evolve too. Going beyond puzzle-solving benchmarks will require collaboration among researchers, practitioners, and policymakers to design benchmarks that reflect real-world needs and values. These benchmarks should emphasize:

- Adaptability: The ability to handle a wide range of unseen tasks.

- Impact: Measuring contributions to meaningful social challenges.

- Ethics: Ensuring that AI aligns with human values and fairness.

Conclusion

Karpathy's observations prompt us to rethink the purpose and design of AI benchmarks. While puzzle-solving benchmarks have driven incredible progress, they may now be holding us back from building broader, more impactful AI systems. To unlock AI's real potential, the community must shift toward benchmarks that test adaptability, generalization, and real-world utility.

The path forward is not easy, but the reward, AI systems that are not only powerful but truly transformative, is worth the effort.


The above is the detailed content of Andrej Karpathy on Puzzle-Solving Benchmarks. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1677

1677

14

14

1431

1431

52

52

1334

1334

25

25

1279

1279

29

29

1257

1257

24

24

How to Build MultiModal AI Agents Using Agno Framework?

Apr 23, 2025 am 11:30 AM

How to Build MultiModal AI Agents Using Agno Framework?

Apr 23, 2025 am 11:30 AM

While working on Agentic AI, developers often find themselves navigating the trade-offs between speed, flexibility, and resource efficiency. I have been exploring the Agentic AI framework and came across Agno (earlier it was Phi-

OpenAI Shifts Focus With GPT-4.1, Prioritizes Coding And Cost Efficiency

Apr 16, 2025 am 11:37 AM

OpenAI Shifts Focus With GPT-4.1, Prioritizes Coding And Cost Efficiency

Apr 16, 2025 am 11:37 AM

The release includes three distinct models, GPT-4.1, GPT-4.1 mini and GPT-4.1 nano, signaling a move toward task-specific optimizations within the large language model landscape. These models are not immediately replacing user-facing interfaces like

How to Add a Column in SQL? - Analytics Vidhya

Apr 17, 2025 am 11:43 AM

How to Add a Column in SQL? - Analytics Vidhya

Apr 17, 2025 am 11:43 AM

SQL's ALTER TABLE Statement: Dynamically Adding Columns to Your Database In data management, SQL's adaptability is crucial. Need to adjust your database structure on the fly? The ALTER TABLE statement is your solution. This guide details adding colu

Rocket Launch Simulation and Analysis using RocketPy - Analytics Vidhya

Apr 19, 2025 am 11:12 AM

Rocket Launch Simulation and Analysis using RocketPy - Analytics Vidhya

Apr 19, 2025 am 11:12 AM

Simulate Rocket Launches with RocketPy: A Comprehensive Guide This article guides you through simulating high-power rocket launches using RocketPy, a powerful Python library. We'll cover everything from defining rocket components to analyzing simula

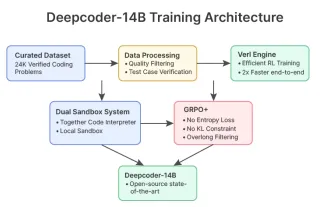

DeepCoder-14B: The Open-source Competition to o3-mini and o1

Apr 26, 2025 am 09:07 AM

DeepCoder-14B: The Open-source Competition to o3-mini and o1

Apr 26, 2025 am 09:07 AM

In a significant development for the AI community, Agentica and Together AI have released an open-source AI coding model named DeepCoder-14B. Offering code generation capabilities on par with closed-source competitors like OpenAI

The Prompt: ChatGPT Generates Fake Passports

Apr 16, 2025 am 11:35 AM

The Prompt: ChatGPT Generates Fake Passports

Apr 16, 2025 am 11:35 AM

Chip giant Nvidia said on Monday it will start manufacturing AI supercomputers— machines that can process copious amounts of data and run complex algorithms— entirely within the U.S. for the first time. The announcement comes after President Trump si

Guy Peri Helps Flavor McCormick's Future Through Data Transformation

Apr 19, 2025 am 11:35 AM

Guy Peri Helps Flavor McCormick's Future Through Data Transformation

Apr 19, 2025 am 11:35 AM

Guy Peri is McCormick’s Chief Information and Digital Officer. Though only seven months into his role, Peri is rapidly advancing a comprehensive transformation of the company’s digital capabilities. His career-long focus on data and analytics informs

Runway AI's Gen-4: How Can AI Montage Go Beyond Absurdity

Apr 16, 2025 am 11:45 AM

Runway AI's Gen-4: How Can AI Montage Go Beyond Absurdity

Apr 16, 2025 am 11:45 AM

The film industry, alongside all creative sectors, from digital marketing to social media, stands at a technological crossroad. As artificial intelligence begins to reshape every aspect of visual storytelling and change the landscape of entertainment