Hugging Face's Text Generation Inference Toolkit for LLMs - A Game Changer in AI

Harness the Power of Hugging Face Text Generation Inference (TGI): Your Local LLM Server

Large Language Models (LLMs) are revolutionizing AI, particularly in text generation. This has led to a surge in tools designed to simplify LLM deployment. Hugging Face's Text Generation Inference (TGI) stands out, offering a powerful, production-ready framework for running LLMs locally as a service. This guide explores TGI's capabilities and demonstrates how to leverage it for sophisticated AI text generation.

Understanding Hugging Face TGI

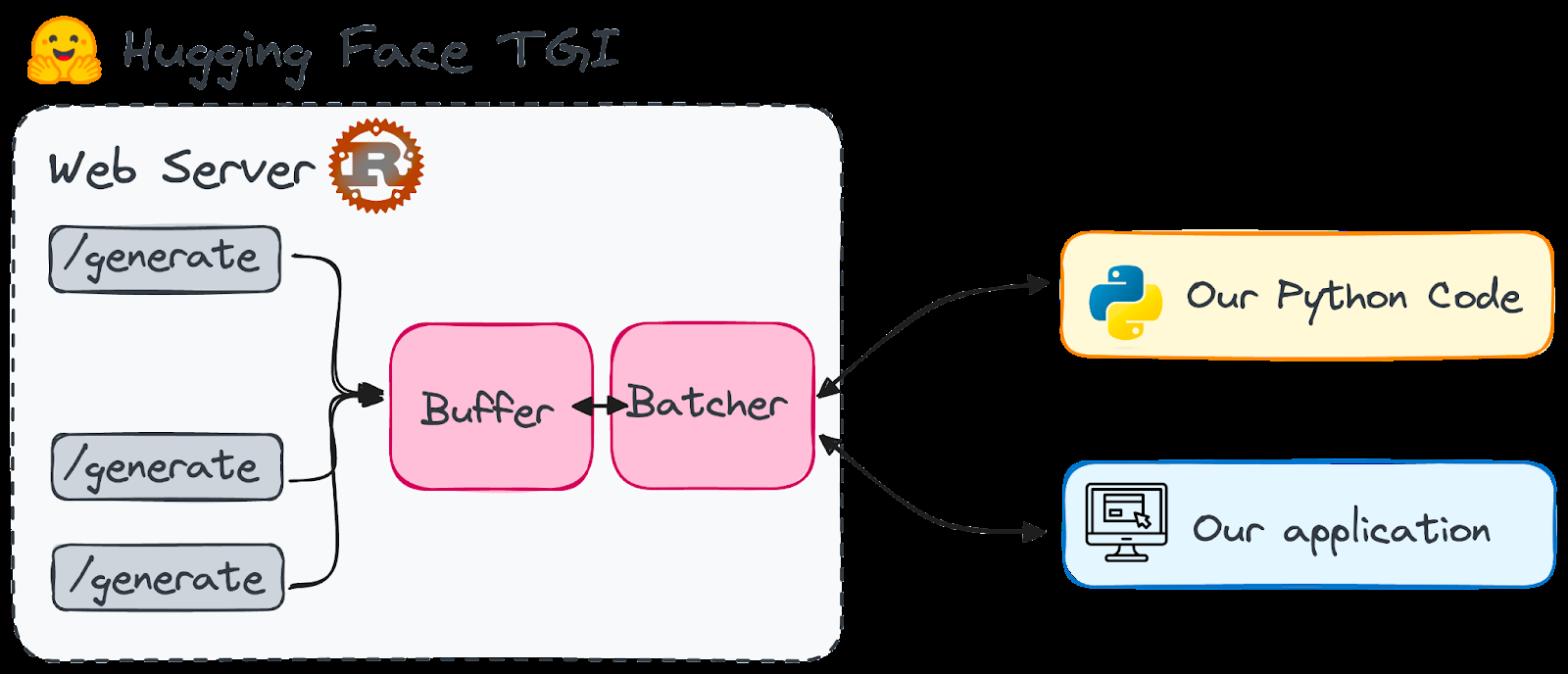

TGI, a Rust and Python framework, enables the deployment and serving of LLMs on your local machine. Licensed under HFOIL v1.0, it can be used commercially as a supplementary tool, that is, as a component of a product rather than as the product itself. Its key advantages include:

- High-Performance Text Generation: TGI optimizes performance using Tensor Parallelism and dynamic batching for models like StarCoder, BLOOM, GPT-NeoX, Llama, and T5.

- Efficient Resource Usage: Continuous batching and optimized code minimize resource consumption while handling multiple requests concurrently.

- Flexibility: It supports safety and security features such as watermarking, logit warping for bias control, and stop sequences.
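The features in the last bullet are set per request. As a rough sketch, the snippet below builds a JSON request body that turns on watermarking, adds a stop sequence, and sets the usual logit-warping knobs. The field names (`stop`, `watermark`, `temperature`, `top_k`, `top_p`, `repetition_penalty`) are assumed to match the REST API of your TGI version; the helper name `build_generate_payload` and all default values are illustrative only.

```python
import json


def build_generate_payload(prompt, max_new_tokens=64, temperature=0.7,
                           top_k=50, top_p=0.95, repetition_penalty=1.1,
                           stop=None, watermark=False):
    """Build a request body for a TGI text generation call.

    Hypothetical helper: the parameter names are assumed to match the
    server's API, and the defaults are arbitrary example values.
    """
    return {
        "inputs": prompt,
        "parameters": {
            "max_new_tokens": max_new_tokens,
            # Logit warping: these values reshape the next-token distribution.
            "do_sample": True,
            "temperature": temperature,
            "top_k": top_k,
            "top_p": top_p,
            "repetition_penalty": repetition_penalty,
            # Generation halts as soon as one of these strings is produced.
            "stop": list(stop or []),
            # Ask the server to watermark the generated text.
            "watermark": watermark,
        },
    }


payload = build_generate_payload(
    "Write a haiku about Rust.", stop=["\n\n"], watermark=True
)
print(json.dumps(payload, indent=2))
```

Check the API documentation served by your TGI instance before relying on any of these fields, since the accepted parameters can differ between releases.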

TGI also ships optimized architectures for faster execution of LLMs such as LLaMA, Falcon-7B, and Mistral (see the documentation for the complete list).
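To see why the continuous batching listed among the advantages above saves work, here is a toy simulation. It is not TGI's scheduler: it assumes one decoding step produces one token for every active request, and the request lengths and batch size are made-up numbers. Static batching waits for the longest request in each batch, while continuous batching refills a slot as soon as a request finishes.

```python
from collections import deque


def static_batching_steps(lengths, batch_size):
    """Steps needed when each batch runs until its longest request is done."""
    total = 0
    for start in range(0, len(lengths), batch_size):
        total += max(lengths[start:start + batch_size])
    return total


def continuous_batching_steps(lengths, batch_size):
    """Steps needed when a finished request frees its slot immediately.

    Each entry in `lengths` must be a positive number of tokens to generate.
    """
    waiting = deque(lengths)
    active = []  # remaining tokens for each request currently in the batch
    steps = 0
    while waiting or active:
        # Fill free slots from the queue before every decoding step.
        while waiting and len(active) < batch_size:
            active.append(waiting.popleft())
        steps += 1
        # Every active request emits one token; drop the ones that are done.
        active = [left - 1 for left in active if left - 1 > 0]
    return steps


lengths = [2, 8, 3, 4]  # hypothetical tokens to generate per request
print(static_batching_steps(lengths, batch_size=2))      # 12
print(continuous_batching_steps(lengths, batch_size=2))  # 9
```

In this example the short first request no longer forces its slot to sit idle while the 8-token request finishes, which is where the saved steps come from.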

Why Choose Hugging Face TGI?

Hugging Face is a central hub for open-source LLMs. Previously, many models were too resource-intensive for local use, requiring cloud services. However, advancements like QLoRA and GPTQ quantization have made some LLMs manageable on local machines.

TGI addresses the problem of LLM startup time. Because the server keeps the model loaded, requests are answered without reloading the weights each time, which removes the lengthy wait before the first response. The result is an always-available endpoint in front of a range of top-tier language models.

TGI's simplicity is noteworthy. It's designed for seamless deployment of streamlined model architectures and powers several live projects, including:

- Hugging Chat

- OpenAssistant

- nat.dev

Important Note: TGI currently does not support the GPUs in ARM-based Macs (M1 and later).

Setting Up Hugging Face TGI

Two methods are presented: from scratch and using Docker (recommended for simplicity).

Method 1: From Scratch (More Complex)

- Install Rust:

curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh

- Create a Python virtual environment:

conda create -n text-generation-inference python=3.9 && conda activate text-generation-inference

- Install Protoc (version 21.12 recommended; requires sudo). Instructions omitted for brevity, refer to the original text.

- Clone the GitHub repository:

git clone https://github.com/huggingface/text-generation-inference.git

- Install TGI:

cd text-generation-inference/ && BUILD_EXTENSIONS=False make install

Method 2: Using Docker (Recommended)

- Ensure Docker is installed and running.

- (Check compatibility first) Run the Docker command (example using Falcon-7B):

volume=$PWD/data && sudo docker run --gpus all --shm-size 1g -p 8080:80 -v $volume:/data ghcr.io/huggingface/text-generation-inference:0.9 --model-id tiiuae/falcon-7b-instruct --num-shard 1 --quantize bitsandbytes

Replace "all" with "0" if using a single GPU.
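The container needs time to download and load the model before it can answer requests. A minimal sketch for waiting until it is ready is shown below. It assumes the server exposes a `GET /health` route that returns HTTP 200 once the model is loaded, and that port 8080 is mapped as in the command above. The function names, retry count, and delay are illustrative choices.

```python
import time
import urllib.error
import urllib.request


def http_probe(url, timeout=2.0):
    """Return True if a GET request to `url` answers with HTTP 200."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, or an HTTP error status: not ready yet.
        return False


def wait_until_ready(url="http://127.0.0.1:8080/health", probe=http_probe,
                     attempts=60, delay=5.0, sleep=time.sleep):
    """Poll `url` until the probe succeeds.

    Returns the 1-based attempt number that succeeded, or None if the
    server never became ready within `attempts` tries.
    """
    for attempt in range(1, attempts + 1):
        if probe(url):
            return attempt
        sleep(delay)
    return None


# Offline demonstration with a stand-in probe that succeeds on the third try.
# Against a real server, call wait_until_ready() with its default arguments.
answers = iter([False, False, True])
print(wait_until_ready(probe=lambda url: next(answers), delay=0))  # 3
```

Passing the probe and sleep functions as arguments keeps the polling loop easy to test without a running container.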

Using TGI in Applications

After launching TGI, interact with it by sending POST requests to the /generate endpoint (or /generate_stream for token-by-token streaming). Examples using Python and curl are provided in the original text. The text-generation Python library (pip install text-generation) simplifies interaction.
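As an illustration of the request flow, here is a small standard-library client sketch. It assumes the server listens on `http://127.0.0.1:8080` (the port mapping from the Docker command), that the streaming route is `/generate_stream`, that `/generate` returns a JSON object with a `generated_text` field, and that each streamed event arrives as a `data:{...}` line carrying a `token.text` field. The helper names are hypothetical; verify the response shapes against your TGI version.

```python
import json
import urllib.request

BASE_URL = "http://127.0.0.1:8080"  # assumption: -p 8080:80 from the Docker command


def make_request(prompt, max_new_tokens=50, stream=False, base_url=BASE_URL):
    """Build a POST request for /generate or /generate_stream."""
    path = "/generate_stream" if stream else "/generate"
    body = json.dumps(
        {"inputs": prompt, "parameters": {"max_new_tokens": max_new_tokens}}
    ).encode("utf-8")
    return urllib.request.Request(
        base_url + path,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, max_new_tokens=50):
    """Send one blocking request and return the generated text."""
    with urllib.request.urlopen(make_request(prompt, max_new_tokens)) as resp:
        return json.loads(resp.read())["generated_text"]


def parse_sse_line(raw_line):
    """Return the token text from one 'data:{...}' line, else None."""
    line = raw_line.decode("utf-8").strip()
    if not line.startswith("data:"):
        return None  # blank separator lines and other fields are skipped
    event = json.loads(line[len("data:"):])
    return event["token"]["text"]


def generate_stream(prompt, max_new_tokens=50):
    """Yield token texts as the server streams them."""
    request = make_request(prompt, max_new_tokens, stream=True)
    with urllib.request.urlopen(request) as resp:
        for raw_line in resp:
            text = parse_sse_line(raw_line)
            if text is not None:
                yield text


# With a running server:
#   print(generate("What is deep learning?"))
#   for piece in generate_stream("What is deep learning?"):
#       print(piece, end="", flush=True)

# Offline check of the parser on a hand-written sample event.
sample = b'data:{"token": {"id": 1, "text": " Hello", "special": false}}\n'
print(repr(parse_sse_line(sample)))  # ' Hello'
```

The text-generation library mentioned above wraps the same two routes, so this sketch is mainly useful for understanding what goes over the wire.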

Practical Tips and Further Learning

- Understand LLM Fundamentals: Familiarize yourself with tokenization, attention mechanisms, and the Transformer architecture.

- Model Optimization: Learn how to prepare and optimize models, including selecting the right model, customizing tokenizers, and fine-tuning.

- Generation Strategies: Explore different text generation strategies (greedy search, beam search, top-k sampling).
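To make the difference between two of the generation strategies concrete, the toy sketch below picks the next token from a hand-made score table. Greedy search always takes the highest-scoring token, while top-k sampling draws at random from the k best candidates. The vocabulary and scores are invented for illustration, and beam search is left out for brevity.

```python
import math
import random


def softmax(logits):
    """Turn a {token: score} mapping into probabilities that sum to 1."""
    peak = max(logits.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(score - peak) for tok, score in logits.items()}
    total = sum(exps.values())
    return {tok: value / total for tok, value in exps.items()}


def greedy_pick(logits):
    """Greedy search: always choose the highest-scoring token."""
    return max(logits, key=logits.get)


def top_k_sample(logits, k, rng):
    """Top-k sampling: keep the k best tokens, renormalize, then sample."""
    best = sorted(logits.items(), key=lambda item: item[1], reverse=True)[:k]
    probs = softmax(dict(best))
    return rng.choices(list(probs), weights=list(probs.values()), k=1)[0]


# Made-up next-token scores for a four-word vocabulary.
logits = {"cat": 2.0, "dog": 1.5, "car": 0.2, "sky": -1.0}
rng = random.Random(0)  # seeded so repeated runs give the same draws

print(greedy_pick(logits))  # cat
print([top_k_sample(logits, k=2, rng=rng) for _ in range(5)])
```

With k=2 the samples can only ever be "cat" or "dog"; raising k or the temperature of the distribution trades determinism for variety.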

Conclusion

Hugging Face TGI offers a user-friendly way to deploy and host LLMs locally, providing benefits like data privacy and cost control. Although it requires powerful hardware, recent advancements such as quantization make it feasible for many users. Further exploration of advanced LLM concepts and resources (mentioned in the original text) is highly recommended for continued learning.
