SOLAR-10.7B Fine-Tuned Model Tutorial

SOLAR-10.7B: A Deep Dive into a Highly Efficient Large Language Model

The SOLAR-10.7B project marks a significant advancement in large language model (LLM) development. This article explores its innovative scaling approach, performance benchmarks, practical usage, and potential applications, while also acknowledging its limitations.

Understanding SOLAR-10.7B

Developed by Upstage AI in South Korea, SOLAR-10.7B is a 10.7-billion-parameter model built on the Llama-2 architecture. Remarkably, it outperforms LLMs with considerably larger parameter counts, including Mixtral 8x7B (about 46.7 billion total parameters). For a comprehensive understanding of Llama-2, refer to our guide on fine-tuning this model.

The SOLAR-10.7B-Instruct variant, a fine-tuned version, excels at following complex instructions. This highlights the power of fine-tuning to tailor LLMs for specific tasks. The core innovation behind SOLAR-10.7B is its Depth Up-Scaling (DUS) method, detailed below.

Depth Up-Scaling: A Novel Scaling Technique

Rather than training a larger model from scratch, DUS increases the depth of an existing pretrained model by reusing its weights. Unlike mixture-of-experts scaling, it requires no changes to the model architecture or to the training and inference frameworks. The method relies on three key components: Mistral 7B weights, the Llama 2 architecture, and continued pre-training.

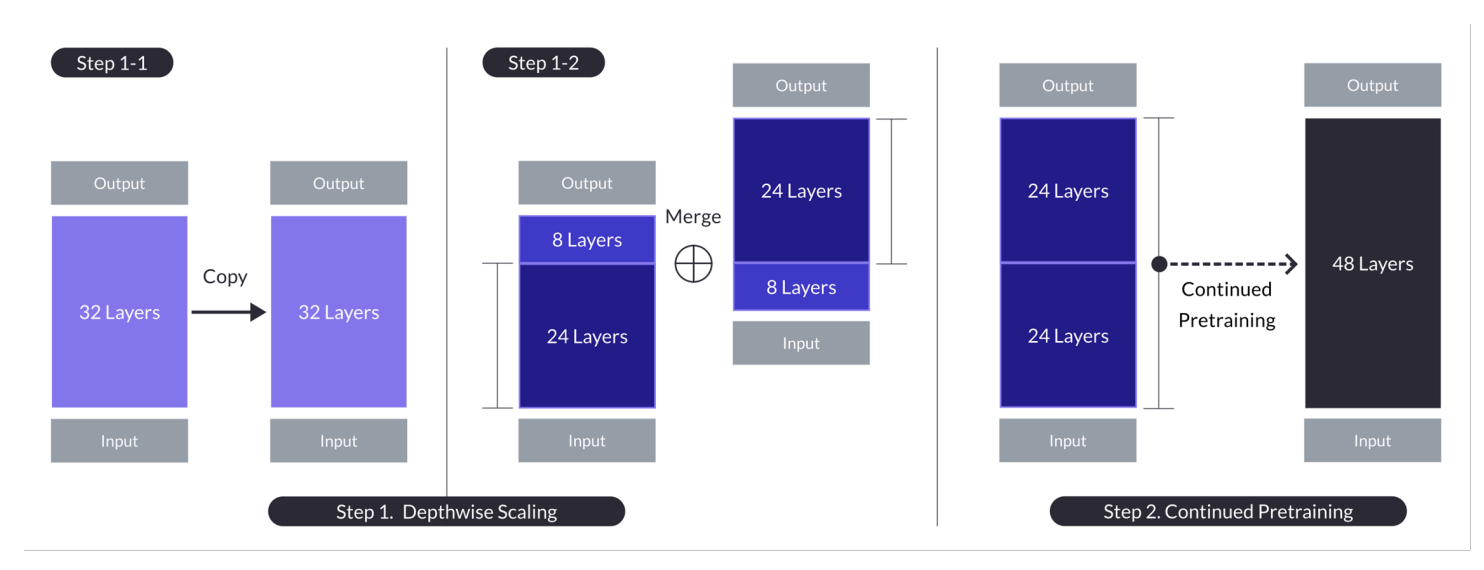

Depth up-scaling illustration for n = 32, s = 48, and m = 8. A two-stage process combines depthwise scaling and continued pre-training. (Source)
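A quick check of how the caption's numbers fit together, following the SOLAR paper's description of DUS: n is the number of layers in the base model, m is the number of layers removed from each of the two copies, and s is the number of layers in the scaled model. Since each copy keeps n − m layers, the concatenated model has:

```latex
s = 2\,(n - m) = 2\,(32 - 8) = 48
```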

The process involves:

- Base Model: A 32-layer Llama 2 model initialized with Mistral 7B weights.

- Depthwise Scaling: The base model is duplicated; the final 8 layers are removed from the original and the first 8 layers from the copy, and the two 24-layer stacks are concatenated into a 48-layer model.

- Continued Pre-training: The scaled model is pre-trained further to recover the performance that drops when the two stacks are first joined.
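The depthwise-scaling step can be sketched in a few lines of Python. This is an illustrative sketch, not Upstage's code: it works on any sequence of layer objects (plain integers stand in for layer indices here), assumes, as the SOLAR paper describes, that the last m layers are dropped from the original and the first m from the duplicate, and uses `deepcopy` so that the two halves do not share weights during continued pre-training.

```python
from copy import deepcopy
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def depth_up_scale(layers: Sequence[T], m: int) -> List[T]:
    """Illustrative sketch of DUS depthwise scaling.

    Keeps the first n - m layers of the original stack and the last n - m
    layers of a duplicate, then concatenates them into 2 * (n - m) layers.
    """
    n = len(layers)
    if not 0 < m < n:
        raise ValueError("m must satisfy 0 < m < n")
    # Original copy with its final m layers removed.
    top = [deepcopy(layer) for layer in layers[: n - m]]
    # Duplicate copy with its first m layers removed.
    bottom = [deepcopy(layer) for layer in layers[m:]]
    return top + bottom


# Integers stand in for the 32 decoder layers of the base model.
scaled = depth_up_scale(list(range(32)), m=8)
print(len(scaled))      # 48
print(scaled[22:26])    # [22, 23, 8, 9]
```

The printed slice shows the seam in the middle of the scaled stack, where layer 23 of the original is followed by layer 8 of the duplicate, so layers 8 through 23 appear twice. Applying this to a real checkpoint would also require updating the model configuration's layer count, which this sketch does not cover.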

This two-stage approach enables SOLAR-10.7B to match or exceed the capabilities of far larger models, making it a cost-effective and powerful option.
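The 10.7B figure follows from the layer count. The sketch below counts the parameters of a Llama-style decoder, assuming Mistral 7B's configuration values (hidden size 4096, MLP size 14336, 32 attention heads with 8 key/value heads, a 32,000-token vocabulary, untied input and output embeddings, and no bias terms). These defaults are assumptions for illustration; check a checkpoint's `config.json` before relying on them.

```python
def llama_style_param_count(
    num_layers: int,
    hidden: int = 4096,
    intermediate: int = 14336,
    num_heads: int = 32,
    num_kv_heads: int = 8,
    vocab: int = 32000,
) -> int:
    """Approximate parameter count of a Llama/Mistral-style decoder (no biases, untied embeddings)."""
    head_dim = hidden // num_heads
    kv_dim = head_dim * num_kv_heads                          # grouped-query attention: smaller K/V projections
    attention = 2 * hidden * hidden + 2 * hidden * kv_dim     # q_proj + o_proj, k_proj + v_proj
    mlp = 3 * hidden * intermediate                           # gate_proj, up_proj, down_proj
    norms = 2 * hidden                                        # two RMSNorm weight vectors per layer
    per_layer = attention + mlp + norms
    embeddings = 2 * vocab * hidden                           # input embedding + separate output head
    final_norm = hidden
    return num_layers * per_layer + embeddings + final_norm


print(llama_style_param_count(32))  # 7241732096  (32-layer base, about 7.24B)
print(llama_style_param_count(48))  # 10731524096 (48 layers after DUS, about 10.7B)
```

Only the per-layer term grows with depth: the 16 extra layers, at about 218M parameters each, account for the roughly 3.5B-parameter difference.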

SOLAR-10.7B-Instruct: Enhanced Instruction Following

SOLAR-10.7B-Instruct is tuned specifically to interpret complex instructions. It was fine-tuned on open-source instruction datasets, together with synthesized math question-answering datasets intended to strengthen mathematical reasoning. Because it keeps the standard Llama-2 architecture, it can be loaded and run with the same tooling as other Llama-2 models.

Applications of the Fine-Tuned Model

The fine-tuned SOLAR-10.7B model offers diverse applications:

- Personalized Education: Create intelligent tutoring systems that adapt to individual learning styles.

- Customer Support: Power advanced chatbots capable of handling complex queries.

- Automated Content Creation: Generate various forms of written content and summarize lengthy documents.

A Practical Guide to Using SOLAR-10.7B-Instruct

This section provides a step-by-step guide to running SOLAR-10.7B-Instruct v1.0 with the Hugging Face transformers library.

1. Installation:

pip -q install transformers==4.35.2
pip -q install accelerate
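To confirm that the pinned transformers version (4.35.2) is the one actually installed, a quick check with the standard library's `importlib.metadata` can help. The helper name `installed_versions` is hypothetical; it simply reports each package's version, or `None` if the package is missing.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Dict, Iterable, Optional


def installed_versions(
    packages: Iterable[str] = ("transformers", "accelerate", "torch"),
) -> Dict[str, Optional[str]]:
    """Return the installed version of each package, or None if it is not installed."""
    found: Dict[str, Optional[str]] = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


print(installed_versions())
```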

2. Import Libraries:

import torch
from transformers import AutoModelForCausalLM, AutoTokenizer

3. GPU Configuration: Ensure a GPU is enabled (e.g., using Google Colab's runtime settings). Verify with !nvidia-smi.
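Before loading the model, it can help to compare the memory the weights need with what the GPU offers. The sketch below is a rough estimate that assumes float16 (2 bytes per parameter) and counts the weights only, ignoring activations and the KV cache; the helper name `weight_memory_gb` is hypothetical, and PyTorch is used only if it is installed.

```python
def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Rough size of the model weights in gigabytes (10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


needed = weight_memory_gb(10.7e9)  # float16 weights of a 10.7B-parameter model
print(f"Weights need about {needed:.1f} GB")

try:
    import torch
except ImportError:
    torch = None

if torch is not None and torch.cuda.is_available():
    props = torch.cuda.get_device_properties(0)
    available = props.total_memory / 1e9
    print(f"GPU 0 ({props.name}) has {available:.1f} GB")
    if available < needed:
        # With device_map="auto", accelerate may offload layers that do not fit to the CPU (much slower).
        print("The weights will not fit on this GPU alone.")
else:
    print("No CUDA GPU detected.")
```

At about 21.4 GB for the float16 weights alone, the model does not fit entirely on a 16 GB card such as the T4.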

4. Model Definition:

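model_ID = "upstage/SOLAR-10.7B-Instruct-v1.0"
tokenizer = AutoTokenizer.from_pretrained(model_ID)
# device_map="auto" lets accelerate place the weights on the available device(s);
# float16 halves memory use compared with float32
model = AutoModelForCausalLM.from_pretrained(model_ID, device_map="auto", torch_dtype=torch.float16)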

5. Model Inference and Result Generation:

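user_request = "What is the square root of 24?"
# Wrap the request in the message format expected by the chat template
conversation = [{'role': 'user', 'content': user_request}]
# Render the template as a string and append the prompt for the assistant's turn
prompt = tokenizer.apply_chat_template(conversation, tokenize=False, add_generation_prompt=True)
# Tokenize and move the tensors to the device holding the model
inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
# max_length caps the total sequence length (prompt plus generated tokens)
outputs = model.generate(**inputs, use_cache=True, max_length=4096)
# Decode the whole sequence, including the prompt and any special tokens
output_text = tokenizer.decode(outputs[0])
print(output_text)

Limitations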

While powerful, SOLAR-10.7B has limitations:

- Hyperparameter Optimization: More extensive hyperparameter exploration is needed for DUS.

- Computational Demands: Requires significant computational resources.

- Bias: Potential biases in training data may affect performance.

- Environmental Impact: High energy consumption during training and inference.

Conclusion

SOLAR-10.7B represents a significant step forward in efficient LLM scaling. Its innovative DUS method, coupled with its strong performance and diverse applications, positions it as a valuable tool. However, its limitations should be considered. For further exploration of LLM fine-tuning, see our tutorials on FLAN-T5 and GPT-3.5.
