OLMo 2 vs. Claude 3.5 Sonnet: Which is Better?

The AI industry is divided between two powerful philosophies: open-source democratization and proprietary innovation. OLMo 2 (Open Language Model 2), developed by the Allen Institute for AI (Ai2), represents transparent AI development, with full public access to its architecture and training data. In contrast, Claude 3.5 Sonnet, Anthropic’s flagship model, prioritizes commercial-grade coding capabilities and multimodal reasoning behind closed doors.

This article dives into their technical architectures, use cases, and practical workflows, complete with code examples and dataset references. Whether you’re building a startup chatbot or scaling enterprise solutions, this guide will help you make an informed choice.

Learning Objectives

In this article, you will:

- Understand how design choices (e.g., RMSNorm, rotary embeddings) influence training stability and performance in OLMo 2 and Claude 3.5 Sonnet.

- Learn about token-based API costs (Claude 3.5) versus self-hosting overhead (OLMo 2).

- Implement both models in practical coding scenarios through concrete examples.

- Compare performance metrics for accuracy, speed, and multilingual tasks.

- Understand the fundamental architectural differences between OLMo 2 and Claude 3.5 Sonnet.

- Evaluate cost-performance trade-offs for different project requirements.

This article was published as a part of the Data Science Blogathon.

Table of contents

- OLMo 2: A Fully Open Autoregressive Model

- What are the key Architectural Innovations of OLMo 2?

- Training and Post-Training Enhancements

- Claude 3.5 Sonnet: A Closed‑Source Model for Ethical and Coding‑Focused Applications

- Core Features and Innovations

- Technical Comparison of OLMo 2 vs. Claude 3.5 Sonnet

- Pricing Comparison of OLMo 2 vs. Claude 3.5 Sonnet

- Accessing the OLMo 2 Model and Claude 3.5 Sonnet API

- How to Run the OLMo 2 Model Locally with Ollama?

- How to Access the Claude 3.5 Sonnet API?

- OLMo 2 vs. Claude 3.5 Sonnet: Comparing Coding Capabilities

- Task 1: Computing the nth Fibonacci Number

- Task 2: Plotting a Scatter plot

- Task 3: Code Translation

- Task 4: Optimizing Inefficient Code

- Task 5: Code Debugging

- Strategic Decision Framework: OLMo 2 vs. Claude 3.5 Sonnet

- When to Choose OLMo 2?

- When to Choose Claude 3.5 Sonnet?

- Conclusion

- Key Takeaways

- Frequently Asked Questions

OLMo 2: A Fully Open Autoregressive Model

OLMo 2 is an entirely open-source autoregressive language model, trained on an enormous dataset of up to 5 trillion tokens. It is released with full disclosure of its weights, training data, and source code, empowering researchers and developers to reproduce results, experiment with the training process, and build upon its architecture.

What are the key Architectural Innovations of OLMo 2?

OLMo 2 incorporates several key architectural modifications designed to enhance both performance and training stability.

- RMSNorm: OLMo 2 uses Root Mean Square Normalization (RMSNorm) to stabilize and accelerate training. RMSNorm rescales activations by their root mean square and, unlike LayerNorm, skips mean-centering and the bias term, which keeps gradient flow consistent even in very deep architectures.

- Rotary Positional Embeddings: To encode token order, the model uses rotary positional embeddings (RoPE). This method rotates the query and key vectors by an angle proportional to each token’s position, so attention scores depend on the relative positions of tokens, a technique detailed in the RoFormer paper.

- Z-loss Regularization: In addition to the standard cross-entropy loss, OLMo 2 applies Z-loss regularization, an auxiliary penalty on the log of the softmax normalizer. It keeps the output logits from growing too large, which improves numerical stability during training.
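To make the three ideas above concrete, here is a minimal sketch in plain Python. It is not OLMo 2’s actual implementation: the function names, the `eps` and `base` defaults, and the `z_coeff` value are illustrative assumptions chosen for readability, and real models apply these operations to batched tensors rather than lists.

```python
import math


def rms_norm(x, gain, eps=1e-6):
    """Divide x by its root mean square, then apply a per-feature gain (no bias, no mean-centering)."""
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    return [g * v / rms for v, g in zip(x, gain)]


def rotary_embed(x, position, base=10000.0):
    """Rotate each pair (x[i], x[i+1]) by an angle proportional to the token position."""
    d = len(x)  # must be even
    out = []
    for i in range(0, d, 2):
        theta = position * base ** (-i / d)  # lower pairs rotate faster
        c, s = math.cos(theta), math.sin(theta)
        out.append(x[i] * c - x[i + 1] * s)
        out.append(x[i] * s + x[i + 1] * c)
    return out


def cross_entropy_with_z_loss(logits, target, z_coeff=1e-4):
    """Return (total, cross_entropy, z_loss) where z_loss = z_coeff * log(Z)^2."""
    m = max(logits)  # subtract the max for numerical stability
    log_z = m + math.log(sum(math.exp(v - m) for v in logits))
    ce = log_z - logits[target]
    z_loss = z_coeff * log_z ** 2
    return ce + z_loss, ce, z_loss


def dot(a, b):
    return sum(u * v for u, v in zip(a, b))


# The rotated dot product depends only on the position offset (here 2 in both cases).
q, k = [1.0, 2.0, 3.0, 4.0], [0.5, -1.0, 2.0, 0.0]
score_a = dot(rotary_embed(q, 5), rotary_embed(k, 3))
score_b = dot(rotary_embed(q, 7), rotary_embed(k, 5))
```

Here `score_a` and `score_b` agree up to floating-point error, which is the relative-position property that makes rotary embeddings attractive for attention.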

Try the OLMo 2 model live here.

Training and Post-Training Enhancements

- Two-Stage Curriculum Training: The model is first pretrained on OLMo-Mix-1124, a large and diverse corpus designed to cover a wide range of linguistic patterns. A second, shorter mid-training phase then continues on Dolmino-Mix-1124, a smaller mix of high-quality and domain-specific data aimed at downstream tasks.

- Instruction Tuning via RLVR: After pretraining, OLMo 2 is instruction-tuned, with a final stage of Reinforcement Learning with Verifiable Rewards (RLVR). This process refines the model’s reasoning by rewarding outputs only when they can be checked automatically, for example against a known correct answer. The approach is similar in spirit to RLHF (Reinforcement Learning from Human Feedback) but replaces the learned reward model with a verification check for increased reliability.

These architectural and training strategies combine to create a model that is high-performing, robust, and adaptable, making it an asset for academic research and practical applications alike.
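The “verifiable reward” idea behind RLVR can be sketched in a few lines. This is only an illustration of the concept, not Ai2’s training code: the helper names and the rule of taking the last number in the completion as the final answer are assumptions made for this example.

```python
import re


def extract_final_answer(completion: str):
    """Return the last number that appears in the completion, or None if there is none."""
    matches = re.findall(r"-?\d+(?:\.\d+)?", completion)
    return matches[-1] if matches else None


def verifiable_reward(completion: str, reference: str) -> float:
    """Give reward 1.0 only when the extracted answer equals the known reference, else 0.0."""
    answer = extract_final_answer(completion)
    if answer is None:
        return 0.0
    return 1.0 if float(answer) == float(reference) else 0.0


print(verifiable_reward("2 + 2 = 4, so the answer is 4.", "4"))  # 1.0
print(verifiable_reward("I think the answer is 5.", "4"))        # 0.0
```

Because the reward comes from a deterministic check rather than a learned reward model, it cannot be gamed by outputs that merely sound convincing.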

Claude 3.5 Sonnet: A Closed‑Source Model for Ethical and Coding‑Focused Applications

In contrast to the open philosophy of OLMo 2, Claude 3.5 Sonnet is a closed‑source model optimized for specialized tasks, particularly coding and ethically sound outputs. Its design reflects a careful balance between performance and responsible deployment.

Core Features and Innovations

- Multimodal Processing: Claude 3.5 Sonnet handles both text and image inputs. This multimodal capability lets the model generate, debug, and refine code as well as interpret visual data.

- Computer Interface Interaction: One of the standout features of Claude 3.5 Sonnet is its experimental API integration that lets the model interact directly with computer interfaces. This functionality, which includes simulating actions like clicking buttons or typing text, bridges the gap between language understanding and direct control of digital environments.

- Ethical Safeguards: Recognizing the potential risks of deploying advanced AI models, Anthropic has subjected Claude 3.5 Sonnet to rigorous fairness testing and safety protocols. These measures aim to keep outputs aligned with ethical standards and to minimize the risk of harmful or biased responses, in line with emerging best practices in the AI community.

By focusing on coding applications and ethical reliability, Claude 3.5 Sonnet addresses requirements in industries that demand both technical precision and moral accountability.

Try the Claude 3.5 Sonnet model live here.

Technical Comparison of OLMo 2 vs. Claude 3.5 Sonnet

| Feature | OLMo 2 | Claude 3.5 Sonnet |
|---|---|---|
| Model Access | Full weights available on Hugging Face | API-only access |
| Fine-Tuning | Customizable via PyTorch | Limited to prompt engineering |
| Inference Speed | 12 tokens/sec (A100 GPU) | 30 tokens/sec (API) |
| Cost | Free (self-hosted) | $15/million tokens |

Pricing Comparison of OLMo 2 vs. Claude 3.5 Sonnet

| Price type | OLMo 2 (cost per million tokens) | Claude 3.5 Sonnet (cost per million tokens) |
|---|---|---|
| Input tokens | Free* (compute costs vary) | $3.00 |
| Output tokens | Free* (compute costs vary) | $15.00 |

OLMo 2 is approximately four times more cost-effective for output-heavy tasks, making it well suited to budget-conscious projects. Because OLMo 2 is an open‑source model, there is no fixed per‑token licensing fee; its cost depends on your self‑hosting compute resources. Claude 3.5 Sonnet’s pricing, in contrast, is set by Anthropic’s API rates.
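To compare the two cost models for your own workload, a back-of-the-envelope calculation like the sketch below can help. The default API prices ($3 and $15 per million input and output tokens) are Anthropic’s list prices for Claude 3.5 Sonnet as I understand them at the time of writing; the GPU hourly rate and throughput are hypothetical inputs that you should replace with your own measurements.

```python
def api_cost_usd(input_tokens, output_tokens,
                 input_price_per_m=3.00, output_price_per_m=15.00):
    """Pay-per-token cost: tokens are billed per million at separate input/output rates."""
    return (input_tokens / 1e6) * input_price_per_m + (output_tokens / 1e6) * output_price_per_m


def self_hosted_cost_usd(output_tokens, tokens_per_sec, gpu_usd_per_hour):
    """Self-hosting cost: GPU hours needed to generate the tokens times the hourly rate."""
    hours = output_tokens / tokens_per_sec / 3600
    return hours * gpu_usd_per_hour


# One million input tokens plus one million output tokens through the API.
print(api_cost_usd(1_000_000, 1_000_000))  # 18.0

# Hypothetical self-hosting scenario: both arguments below are placeholders.
estimate = self_hosted_cost_usd(1_000_000, tokens_per_sec=500, gpu_usd_per_hour=2.0)
```

The self-hosted figure is very sensitive to `tokens_per_sec`. Aggregate throughput with batched requests can be far higher than single-stream speed, so measure it on your own hardware before drawing conclusions.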

Accessing the OLMo 2 Model and Claude 3.5 Sonnet API

How to Run the OLMo 2 Model Locally with Ollama?

Visit the official Ollama website or repository to download the installer.

Once you have Ollama, install the necessary Python package:

    pip install ollama

Download the OLMo 2 model. This command fetches the 7-billion-parameter version:

    ollama run olmo2:7b

Create a Python file and execute the following sample code to interact with the model and retrieve its responses.

    import ollama


    def generate_with_olmo(prompt, n_predict=1000):
        """
        Generate text using Ollama's OLMo 2 model (streaming version),
        capping the number of generated tokens at n_predict.
        """
        full_text = []
        try:
            for chunk in ollama.generate(
                model='olmo2:7b',
                prompt=prompt,
                # Ollama's option for the output-token cap is "num_predict"
                options={"num_predict": n_predict},
                stream=True
            ):
                # Each streamed chunk carries the next piece of text
                full_text.append(chunk["response"])
            return "".join(full_text)
        except Exception as e:
            return f"Error with Ollama API: {str(e)}"


    if __name__ == "__main__":
        output = generate_with_olmo("Explain the concept of quantum computing in simple terms.")
        print("Olmo 2 Response:", output)

How to Access the Claude 3.5 Sonnet API?

Head over to the Anthropic console page and select Get API keys.

Click on Create Key, name your key, and click on Add.

Note: Save the API key somewhere safe, because you won’t be able to see it again.

Install the Anthropic library:

    pip install anthropic

Create a Python file and execute the following sample code to interact with the model and retrieve its responses.

    from anthropic import Anthropic

    # Create an instance of the Anthropic API client
    client = Anthropic(api_key='your-api-key')


    def generate_with_claude(prompt, max_tokens=1000):
        """
        Generate text using the Claude 3.5 Sonnet API
        """
        try:
            message = client.messages.create(
                model="claude-3-5-sonnet-20241022",
                max_tokens=max_tokens,
                messages=[
                    {
                        "role": "user",
                        "content": prompt
                    }
                ]
            )
            # message.content is a list of content blocks; join the text blocks
            return "".join(block.text for block in message.content if block.type == "text")
        except Exception as e:
            return f"Error with Claude API: {str(e)}"


    if __name__ == "__main__":
        output = generate_with_claude("Explain the concept of quantum computing in simple terms.")
        print("Claude 3.5 Sonnet Response:", output)
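Hardcoding the key in source code makes it easy to leak through version control. A safer pattern is to read it from an environment variable, as in the minimal sketch below. The helper name `load_api_key` is my own; `ANTHROPIC_API_KEY` is the variable the Anthropic SDK looks for by default when no key is passed explicitly.

```python
import os


def load_api_key(env_var: str = "ANTHROPIC_API_KEY") -> str:
    """Read the API key from the environment and fail loudly if it is missing."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"Set the {env_var} environment variable before calling the API.")
    return key
```

With this in place, the client can be created as `Anthropic(api_key=load_api_key())` after running `export ANTHROPIC_API_KEY=...` in your shell.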

OLMo 2 vs. Claude 3.5 Sonnet: Comparing Coding Capabilities

We use the OLMo 2 and Claude 3.5 Sonnet models for the tasks below.

Task 1: Computing the nth Fibonacci Number

Prompt: “Give me code to Compute the nth Fibonacci Number.”

a) OLMo 2 Response

    def fibonacci_optimized(n):
        if n <= 0:
            return "Input must be a positive integer."
        fib_0, fib_1 = 0, 1
        for i in range(2, n+1):
            fib_i = fib_0 + fib_1
            fib_0, fib_1 = fib_1, fib_i
        return fib_i

    # Example usage:
    print(fibonacci_optimized(10)) # Output: 55

b) Claude 3.5 Sonnet Response

    def fibonacci_recursive(n):
        """
        Compute nth Fibonacci number using recursion.
        Warning: Not efficient for large numbers due to exponential time complexity.
        Time Complexity: O(2^n)
        Space Complexity: O(n) due to recursion stack
        """
        if n <= 0:
            return 0
        if n == 1:
            return 1
        return fibonacci_recursive(n - 1) + fibonacci_recursive(n - 2)

    def fibonacci_iterative(n):
        """
        Compute nth Fibonacci number using iteration.
        Much more efficient than recursive approach.
        Time Complexity: O(n)
        Space Complexity: O(1)
        """
        if n <= 0:
            return 0
        if n == 1:
            return 1
        prev, curr = 0, 1
        for _ in range(2, n + 1):
            prev, curr = curr, prev + curr
        return curr

    def fibonacci_matrix(n):
        """
        Compute nth Fibonacci number using matrix exponentiation.
        Most efficient for very large numbers.
        Time Complexity: O(log n)
        Space Complexity: O(1)
        """
        if n <= 0:
            return 0
        if n == 1:
            return 1

        def matrix_multiply(a, b):
            return [
                [a[0][0] * b[0][0] + a[0][1] * b[1][0], a[0][0] * b[0][1] + a[0][1] * b[1][1]],
                [a[1][0] * b[0][0] + a[1][1] * b[1][0], a[1][0] * b[0][1] + a[1][1] * b[1][1]]
            ]

        def matrix_power(matrix, power):
            if power == 0:
                return [[1, 0], [0, 1]]
            if power == 1:
                return matrix
            # Recursive squaring for efficient computation
            half = matrix_power(matrix, power // 2)
            if power % 2 == 0:
                return matrix_multiply(half, half)
            else:
                return matrix_multiply(matrix_multiply(half, half), matrix)

        base_matrix = [[1, 1], [1, 0]]
        result_matrix = matrix_power(base_matrix, n - 1)
        return result_matrix[0][0]

    # Example usage and testing
    def test_fibonacci():
        test_cases = [0, 1, 2, 5, 10]
        for n in test_cases:
            result1 = fibonacci_recursive(n)
            result2 = fibonacci_iterative(n)
            result3 = fibonacci_matrix(n)
            print(f"n = {n}:")
            print(f" Recursive: {result1}")
            print(f" Iterative: {result2}")
            print(f" Matrix: {result3}")
            print(f" All methods match: {result1 == result2 == result3}\n")

    if __name__ == "__main__":
        test_fibonacci()

Insights:

OLMo 2 provides an iterative approach that is efficient but lacks flexibility, offering only one method. Claude 3.5 Sonnet, on the other hand, presents three different implementations: recursive (inefficient but educational), iterative (optimal for general use), and matrix exponentiation (best for large inputs). Claude’s response is significantly more comprehensive, covering multiple use cases and including a test suite to verify correctness.
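One detail worth checking in the OLMo 2 response is the `n = 1` edge case: the loop body never runs, so `fib_i` is never assigned before it is returned. The sketch below reproduces the function as shown and adds a guarded variant; `fibonacci_guarded` is my own name and is not part of either model’s output.

```python
def fibonacci_optimized(n):
    # Reproduced from the OLMo 2 response above.
    if n <= 0:
        return "Input must be a positive integer."
    fib_0, fib_1 = 0, 1
    for i in range(2, n + 1):
        fib_i = fib_0 + fib_1
        fib_0, fib_1 = fib_1, fib_i
    return fib_i  # unassigned when n == 1, because the loop is skipped


def fibonacci_guarded(n):
    """Iterative Fibonacci that also handles n == 1 and rejects invalid input."""
    if n <= 0:
        raise ValueError("Input must be a positive integer.")
    fib_0, fib_1 = 0, 1
    for _ in range(2, n + 1):
        fib_0, fib_1 = fib_1, fib_0 + fib_1
    return fib_1  # always defined, even when the loop does not run


print(fibonacci_guarded(1))   # 1
print(fibonacci_guarded(10))  # 55
```

Calling `fibonacci_optimized(1)` raises `UnboundLocalError`, which is exactly the kind of gap that the test suite in Claude’s response would have caught.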

Task 2: Plotting a Scatter plot

Prompt: “Generate a Python script that uses Matplotlib and Seaborn to produce a vibrant scatter plot showing the relationship between two variables. The plot should include clear axis labels, a descriptive title, and distinct colors to differentiate the data points.”

a) OLMo 2 response:

b) Claude 3.5 Sonnet response:

You can find the code responses here.

Insights:

OLMo 2’s response correctly generates a scatter plot but lacks visual enhancements beyond basic differentiation of groups. Claude 3.5 Sonnet goes further by integrating size variation, a regression trend line, and correlation annotation, resulting in a more informative and visually appealing plot. Claude’s response demonstrates a better grasp of advanced visualization techniques and statistical insights.
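The trend line and correlation annotation mentioned above boil down to two small calculations. The sketch below shows them in plain Python so it runs without any plotting library; it is not taken from either model’s response, and the function names and sample data are illustrative.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equally long sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


def fit_line(xs, ys):
    """Least-squares slope and intercept for the regression trend line."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    return slope, mean_y - slope * mean_x


xs, ys = [1, 2, 3, 4], [3, 5, 7, 9]
slope, intercept = fit_line(xs, ys)
label = f"r = {pearson_r(xs, ys):.2f}"  # text you could place on the plot
```

In a Matplotlib script, `slope` and `intercept` would feed a line drawn over the scatter points, and `label` would go into an annotation on the axes.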

Task 3: Code Translation

Prompt: “Convert this Java method into Python code while maintaining equivalent functionality:

a) OLMo 2 response:

b) Claude 3.5 Sonnet response:

Insights:

Both OLMo 2 and Claude 3.5 Sonnet provide identical solutions, accurately translating the Java method to Python. Since the function is straightforward, there is no room for differentiation, making both responses equally effective.

Task 4: Optimizing Inefficient Code

Prompt: “Optimize the following Python function to reduce time complexity.

a) OLMo 2 response:

b) Claude 3.5 Sonnet response:

Insights:

OLMo 2 improves the function by using a set to track seen elements but retains a list for storing duplicates, leading to potential redundancy. Claude 3.5 Sonnet optimizes further by storing duplicates in a set and converting it back to a list at the end, improving efficiency and avoiding unnecessary operations. Claude’s approach is cleaner, ensuring better time complexity while maintaining correctness.
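The optimization described above can be illustrated with a hypothetical duplicate-finding function. Neither function below is the original prompt code or either model’s actual output; they are my own reconstruction of the pattern the insights describe, contrasting a quadratic nested loop with the set-based approach.

```python
def find_duplicates_quadratic(items):
    """Baseline: compare every pair, O(n^2) comparisons plus list membership checks."""
    duplicates = []
    for i in range(len(items)):
        for j in range(i + 1, len(items)):
            if items[i] == items[j] and items[i] not in duplicates:
                duplicates.append(items[i])
    return duplicates


def find_duplicates_linear(items):
    """Set-based version: one pass, O(n) on average, duplicates kept in a set."""
    seen, duplicates = set(), set()
    for item in items:
        if item in seen:
            duplicates.add(item)  # a set ignores repeated additions
        else:
            seen.add(item)
    return list(duplicates)  # convert back to a list at the end


data = [1, 2, 2, 3, 3, 3, 4]
print(sorted(find_duplicates_linear(data)))  # [2, 3]
```

Keeping `duplicates` as a set is what removes the hidden `not in duplicates` list scan, which would otherwise add another linear factor.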

Task 5: Code Debugging

Prompt: “Below is a Python script that calculates the factorial of a number, but it contains bugs. Identify and correct the errors to ensure it returns the correct factorial for any positive integer:

a) OLMo 2 response:

b) Claude 3.5 Sonnet response:

Insights:

OLMo 2 correctly fixes the factorial function’s recursion step but lacks input validation. Claude 3.5 Sonnet not only corrects the recursion but also includes input validation to handle negative numbers and non-integer inputs, making it more robust. Claude’s solution is more thorough and suitable for real-world applications.
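To show what “recursion plus input validation” looks like in practice, here is a minimal sketch. It is not either model’s actual response; the exact checks and error messages are assumptions made for illustration.

```python
def factorial(n):
    """Recursive factorial that rejects non-integers and negative numbers."""
    # bool is a subclass of int in Python, so exclude it explicitly
    if isinstance(n, bool) or not isinstance(n, int):
        raise TypeError("n must be an integer")
    if n < 0:
        raise ValueError("n must be non-negative")
    if n <= 1:
        return 1  # base case: 0! == 1! == 1
    return n * factorial(n - 1)  # recursion step on a strictly smaller input


print(factorial(5))  # 120
```

Raising exceptions instead of returning error strings keeps the return type consistent, so callers never mistake an error message for a result.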

Strategic Decision Framework: OLMo 2 vs. Claude 3.5 Sonnet

When to Choose OLMo 2?

- Budget-Constrained Projects: Free self-hosting vs API fees

- Transparency Requirements: Academic research/auditable systems

- Customization Needs: Full model architecture access and tasks that require domain-specific fine-tuning

- Language Focus: English-dominant applications

- Rapid Prototyping: Local experimentation without API limits

When to Choose Claude 3.5 Sonnet?

- Enterprise-Grade Coding: Complex code generation/refactoring

- Multimodal Requirements: Image and text processing needs on a live server.

- Global Deployments: 50 language support

- Ethical Compliance: Constitutionally aligned outputs

- Scale Operations: Managed API infrastructure

Conclusion

OLMo 2 democratizes advanced NLP through full transparency and cost efficiency, which makes it ideal for academic research and budget-conscious prototyping, while Claude 3.5 Sonnet delivers enterprise-grade precision with multimodal coding prowess and ethical safeguards. The choice isn’t binary: forward-thinking organizations will deploy OLMo 2 for transparent, customizable workflows and reserve Claude 3.5 Sonnet for mission-critical coding tasks requiring constitutional alignment. As AI matures, this symbiotic relationship between open-source foundations and commercial polish will define the next era of intelligent systems. I hope you found this OLMo 2 vs. Claude 3.5 Sonnet guide helpful. Let me know in the comment section below.

Key Takeaways

- OLMo 2 offers full access to weights and code, while Claude 3.5 Sonnet provides an API-focused, closed-source model with robust enterprise features.

- OLMo 2 is effectively “free” apart from hosting costs, ideal for budget-conscious projects; Claude 3.5 Sonnet uses a pay-per-token model, which is potentially more cost-effective for enterprise-scale usage.

- Claude 3.5 Sonnet excels in code generation and debugging, providing multiple methods and thorough solutions; OLMo 2’s coding output is generally succinct and iterative.

- OLMo 2 supports deeper customization (including domain-specific fine-tuning) and can be self-hosted. Claude 3.5 Sonnet focuses on multimodal inputs, direct computer interface interactions, and strong ethical frameworks.

- Both models can be integrated via Python, but Claude 3.5 Sonnet is particularly user-friendly for enterprise settings, while OLMo 2 encourages local experimentation and advanced research.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.

Frequently Asked Questions

Q1. Can OLMo 2 match Claude 3.5 Sonnet’s accuracy with enough fine-tuning?

Ans. In narrow domains (e.g., legal documents), yes. For general-purpose tasks, Claude 3.5 Sonnet, whose parameter count Anthropic has not disclosed, retains an edge.

Q2. How do the models handle non-English languages?

Ans. Claude 3.5 Sonnet supports 50 languages natively. OLMo 2 focuses primarily on English but can be fine-tuned for multilingual tasks.

Q3. Is OLMo 2 available commercially?

Ans. Yes. The weights are available on Hugging Face under the Apache 2.0 license, which permits commercial use.

Q4. Which model is better for startups?

Ans. OLMo 2 for cost-sensitive projects; Claude 3.5 Sonnet for coding-heavy tasks.

Q5. Which model is better for AI safety research?

Ans. OLMo 2’s full transparency makes it superior for safety auditing and mechanistic interpretability work.

The above is the detailed content of OLMo 2 vs. Claude 3.5 Sonnet: Which is Better?. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1673

1673

14

14

1429

1429

52

52

1333

1333

25

25

1278

1278

29

29

1257

1257

24

24

How to Build MultiModal AI Agents Using Agno Framework?

Apr 23, 2025 am 11:30 AM

How to Build MultiModal AI Agents Using Agno Framework?

Apr 23, 2025 am 11:30 AM

While working on Agentic AI, developers often find themselves navigating the trade-offs between speed, flexibility, and resource efficiency. I have been exploring the Agentic AI framework and came across Agno (earlier it was Phi-

How to Add a Column in SQL? - Analytics Vidhya

Apr 17, 2025 am 11:43 AM

How to Add a Column in SQL? - Analytics Vidhya

Apr 17, 2025 am 11:43 AM

SQL's ALTER TABLE Statement: Dynamically Adding Columns to Your Database In data management, SQL's adaptability is crucial. Need to adjust your database structure on the fly? The ALTER TABLE statement is your solution. This guide details adding colu

OpenAI Shifts Focus With GPT-4.1, Prioritizes Coding And Cost Efficiency

Apr 16, 2025 am 11:37 AM

OpenAI Shifts Focus With GPT-4.1, Prioritizes Coding And Cost Efficiency

Apr 16, 2025 am 11:37 AM

The release includes three distinct models, GPT-4.1, GPT-4.1 mini and GPT-4.1 nano, signaling a move toward task-specific optimizations within the large language model landscape. These models are not immediately replacing user-facing interfaces like

New Short Course on Embedding Models by Andrew Ng

Apr 15, 2025 am 11:32 AM

New Short Course on Embedding Models by Andrew Ng

Apr 15, 2025 am 11:32 AM

Unlock the Power of Embedding Models: A Deep Dive into Andrew Ng's New Course Imagine a future where machines understand and respond to your questions with perfect accuracy. This isn't science fiction; thanks to advancements in AI, it's becoming a r

Rocket Launch Simulation and Analysis using RocketPy - Analytics Vidhya

Apr 19, 2025 am 11:12 AM

Rocket Launch Simulation and Analysis using RocketPy - Analytics Vidhya

Apr 19, 2025 am 11:12 AM

Simulate Rocket Launches with RocketPy: A Comprehensive Guide This article guides you through simulating high-power rocket launches using RocketPy, a powerful Python library. We'll cover everything from defining rocket components to analyzing simula

Google Unveils The Most Comprehensive Agent Strategy At Cloud Next 2025

Apr 15, 2025 am 11:14 AM

Google Unveils The Most Comprehensive Agent Strategy At Cloud Next 2025

Apr 15, 2025 am 11:14 AM

Gemini as the Foundation of Google’s AI Strategy Gemini is the cornerstone of Google’s AI agent strategy, leveraging its advanced multimodal capabilities to process and generate responses across text, images, audio, video and code. Developed by DeepM

Open Source Humanoid Robots That You Can 3D Print Yourself: Hugging Face Buys Pollen Robotics

Apr 15, 2025 am 11:25 AM

Open Source Humanoid Robots That You Can 3D Print Yourself: Hugging Face Buys Pollen Robotics

Apr 15, 2025 am 11:25 AM

“Super happy to announce that we are acquiring Pollen Robotics to bring open-source robots to the world,” Hugging Face said on X. “Since Remi Cadene joined us from Tesla, we’ve become the most widely used software platform for open robotics thanks to

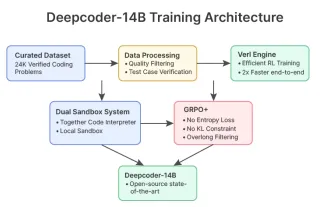

DeepCoder-14B: The Open-source Competition to o3-mini and o1

Apr 26, 2025 am 09:07 AM

DeepCoder-14B: The Open-source Competition to o3-mini and o1

Apr 26, 2025 am 09:07 AM

In a significant development for the AI community, Agentica and Together AI have released an open-source AI coding model named DeepCoder-14B. Offering code generation capabilities on par with closed-source competitors like OpenAI