Prompt Caching: A Guide With Code Implementation

Prompt caching improves the efficiency of large language model (LLM) applications by storing and reusing responses to frequently asked prompts. This reduces cost and latency and improves the overall user experience. This blog post covers the mechanics of prompt caching, its advantages and challenges, and practical implementation strategies.

Understanding Prompt Caching

Prompt caching functions by storing prompts and their corresponding responses within a cache. Upon receiving a matching or similar prompt, the system retrieves the cached response instead of recomputing, thus avoiding redundant processing.

Advantages of Prompt Caching

The benefits are threefold:

- Reduced Costs: LLM providers typically charge per token. Serving a repeated prompt from the cache avoids paying to generate the same response again.

- Lower Latency: A cache hit returns a stored response without running inference, so the response time is close to the cost of a lookup.

- Improved User Experience: Faster responses translate to a better user experience, which is particularly important in real-time applications.

Considerations Before Implementing Prompt Caching

Before implementing prompt caching, several factors need careful consideration:

Cache Lifetime (TTL)

Each cached response requires a Time-to-Live (TTL) to ensure data freshness. The TTL defines the validity period of a cached response. Expired entries are removed or updated, triggering recomputation upon subsequent requests. Balancing data freshness and computational efficiency requires careful TTL tuning.
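To make the TTL idea concrete, here is a minimal sketch of an exact-match cache with expiry. The class name TTLCache and its methods are hypothetical, chosen for illustration, and the injectable clock is an assumption that makes expiry testable without sleeping.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class TTLCache:
    """Exact-match prompt cache whose entries expire after `ttl_seconds`.

    Hypothetical helper for illustration; not part of any library.
    """

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self.ttl_seconds = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested without sleeping
        # prompt -> (response, expiry timestamp)
        self._entries: Dict[str, Tuple[str, float]] = {}

    def set(self, prompt: str, response: str) -> None:
        self._entries[prompt] = (response, self._clock() + self.ttl_seconds)

    def get(self, prompt: str) -> Optional[str]:
        entry = self._entries.get(prompt)
        if entry is None:
            return None  # never cached
        response, expires_at = entry
        if self._clock() >= expires_at:
            del self._entries[prompt]  # expired: drop it so the caller recomputes
            return None
        return response
```

A short TTL keeps answers fresh at the price of more recomputation; a long TTL does the opposite, which is the trade-off described above.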

Prompt Similarity

Determining the similarity between new and cached prompts is critical. Techniques like fuzzy matching or semantic search (using vector embeddings) help assess prompt similarity. Finding the right balance in the similarity threshold is crucial to avoid both mismatches and missed caching opportunities.
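As a rough illustration of threshold-based matching, the sketch below uses fuzzy string matching from Python's standard library (difflib.SequenceMatcher) rather than vector embeddings. The function name find_similar and the default threshold of 0.9 are assumptions made for this example, not recommended values.

```python
from difflib import SequenceMatcher
from typing import Dict, Optional


def find_similar(prompt: str, cache: Dict[str, str],
                 threshold: float = 0.9) -> Optional[str]:
    """Return the response of the most similar cached prompt, or None.

    `cache` maps prompt text to response text. Similarity is a character-level
    ratio in [0, 1]; a match counts only if it reaches `threshold`.
    """
    best_score, best_response = 0.0, None
    for cached_prompt, response in cache.items():
        score = SequenceMatcher(None, prompt.lower(), cached_prompt.lower()).ratio()
        if score > best_score:
            best_score, best_response = score, response
    return best_response if best_score >= threshold else None
```

Raising the threshold reduces wrong answers served from the cache but also misses more reuse opportunities. The linear scan here is only practical for small caches; semantic search over embeddings would normally use a vector index instead.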

Cache Update Strategies

Eviction strategies such as Least Recently Used (LRU) keep the cache within a size limit by removing the least recently accessed entries when the cache is full. This keeps recently used prompts in the cache.
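A minimal sketch of LRU eviction, built on collections.OrderedDict from the standard library. The class name LRUCache and the max_entries parameter are hypothetical names used for illustration.

```python
from collections import OrderedDict
from typing import Optional


class LRUCache:
    """Prompt cache that evicts the least recently used entry when full."""

    def __init__(self, max_entries: int):
        self.max_entries = max_entries
        self._entries: "OrderedDict[str, str]" = OrderedDict()

    def get(self, prompt: str) -> Optional[str]:
        if prompt not in self._entries:
            return None
        self._entries.move_to_end(prompt)  # mark as most recently used
        return self._entries[prompt]

    def set(self, prompt: str, response: str) -> None:
        self._entries[prompt] = response
        self._entries.move_to_end(prompt)
        if len(self._entries) > self.max_entries:
            self._entries.popitem(last=False)  # evict the least recently used entry
```

Both reads and writes count as a "use" in this sketch, so a prompt that keeps getting hits stays in the cache even if it was stored long ago.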

Implementing Prompt Caching: A Two-Step Process

- Identify Repeated Prompts: Monitor your system to pinpoint frequently repeated prompts.

- Store the Prompt and Response: Store the prompt and its response in the cache, along with metadata such as the TTL and hit count.
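The two steps above can be sketched as follows, assuming the prompts are available as a simple log of strings. The names repeated_prompts and CacheEntry, and the min_count parameter, are hypothetical and chosen for illustration.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Iterable, List


def repeated_prompts(prompt_log: Iterable[str], min_count: int = 2) -> List[str]:
    """Step 1: return prompts seen at least `min_count` times, most frequent first."""
    counts = Counter(p.strip() for p in prompt_log)
    return [p for p, n in counts.most_common() if n >= min_count]


@dataclass
class CacheEntry:
    """Step 2: what is stored per prompt, with metadata next to the response."""
    response: str
    created_at: float   # timestamp when the response was generated
    ttl_seconds: float  # how long the entry stays valid
    hits: int = 0       # how often the entry has been served
```

Counting per-entry hits makes it easy to see later which cached prompts actually earn their place in the cache.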

Practical Implementation with Ollama: Caching vs. No Caching

This section demonstrates a practical comparison of cached and non-cached inference using Ollama, a tool for managing LLMs locally. The example uses data from a web-hosted deep learning book to generate summaries using various LLMs (Gemma2, Llama2, Llama3).

Prerequisites:

- Install BeautifulSoup: !pip install beautifulsoup4

- Install and run Ollama (e.g., ollama run llama3.1)

The code (omitted for brevity) demonstrates fetching book content, performing non-cached and cached inference using Ollama's ollama.generate() function, and measuring inference times. The results (also omitted) show a significant reduction in inference time with caching.
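Since the original code is omitted, the following is only a minimal sketch of what such a comparison might look like, not the author's implementation. It assumes the ollama Python package is installed and a local Ollama server is running with the llama3.1 model pulled. The helper names ollama_generate, cached_generate, and timed are hypothetical, and the book-fetching step is left out.

```python
import time
from typing import Callable, Dict, Tuple


def ollama_generate(prompt: str, model: str = "llama3.1") -> str:
    """Non-cached inference against a locally running Ollama model."""
    import ollama  # imported lazily so the rest of the sketch runs without it
    return ollama.generate(model=model, prompt=prompt)["response"]


def cached_generate(prompt: str, generate: Callable[[str], str],
                    cache: Dict[str, str]) -> Tuple[str, bool]:
    """Return (response, was_cache_hit), calling `generate` only on a miss."""
    if prompt in cache:
        return cache[prompt], True
    response = generate(prompt)
    cache[prompt] = response
    return response, False


def timed(fn, *args):
    """Run fn(*args) and return (result, elapsed_seconds)."""
    start = time.perf_counter()
    result = fn(*args)
    return result, time.perf_counter() - start


# Example with a real model (assumes Ollama is running locally):
#   cache = {}
#   _, cold = timed(cached_generate, "Summarize chapter 1", ollama_generate, cache)
#   _, warm = timed(cached_generate, "Summarize chapter 1", ollama_generate, cache)
#   print(f"first call: {cold:.2f}s, cached call: {warm:.6f}s")
```

The second call skips inference entirely, so its time is dominated by a dictionary lookup. Passing the generate function as a parameter is a design choice that lets the caching logic be tested with a stub instead of a live model.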

Best Practices for Prompt Caching

- Identify Repetitive Tasks: Focus on frequently repeated prompts.

- Consistent Instructions: Maintain consistent prompt formatting for better cache hits.

- Balance Cache Size and Performance: Optimize cache size and eviction policy.

- Monitor Cache Effectiveness: Track cache hit rates to assess performance.
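Two of the practices above lend themselves to a short sketch: normalizing prompts so that trivially different formatting maps to the same cache key, and tracking the hit rate. The names cache_key and CacheStats are hypothetical. Lowercasing is an assumption that only suits prompts where case carries no meaning.

```python
import hashlib
import re


def cache_key(prompt: str) -> str:
    """Collapse whitespace and case, then hash, so near-identical prompts share a key."""
    normalized = re.sub(r"\s+", " ", prompt).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


class CacheStats:
    """Counts hits and misses so cache effectiveness can be monitored."""

    def __init__(self) -> None:
        self.hits = 0
        self.misses = 0

    def record(self, hit: bool) -> None:
        if hit:
            self.hits += 1
        else:
            self.misses += 1

    @property
    def hit_rate(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0
```

A persistently low hit rate suggests that prompts vary too much to benefit from caching or that the eviction policy is discarding entries before they are reused.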

Cache Storage and Sharing

- Local vs. Distributed Caches: Choose between local (simpler) and distributed (scalable) caches based on your needs.

- Sharing Cached Prompts: Sharing a cache across systems raises the hit rate, because a response computed by one service can be reused by another, which reduces cost and latency.

- Privacy: Encrypt sensitive data and implement access controls.

Preventing Cache Expiration

- Cache Warm-up: Pre-populate the cache with common prompts.

- Keep-alive Pings: Periodically refresh frequently used cache entries.
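For an application-level cache, warm-up and keep-alive might look like the sketch below. It assumes entries are stored as (response, expiry timestamp) pairs in a plain dictionary; warm_up, keep_alive, and their parameters are hypothetical names used for illustration.

```python
import time
from typing import Callable, Dict, Iterable, Tuple

Entry = Tuple[str, float]  # (response, expiry timestamp)


def warm_up(cache: Dict[str, Entry], prompts: Iterable[str],
            generate: Callable[[str], str], ttl_seconds: float,
            now: Callable[[], float] = time.monotonic) -> None:
    """Pre-populate the cache for prompts that are not cached yet."""
    for prompt in prompts:
        if prompt not in cache:
            cache[prompt] = (generate(prompt), now() + ttl_seconds)


def keep_alive(cache: Dict[str, Entry], prompts: Iterable[str],
               ttl_seconds: float,
               now: Callable[[], float] = time.monotonic) -> int:
    """Extend the expiry of cached prompts; return how many were refreshed."""
    refreshed = 0
    for prompt in prompts:
        if prompt in cache:
            response, _ = cache[prompt]
            cache[prompt] = (response, now() + ttl_seconds)
            refreshed += 1
    return refreshed
```

Note that keep_alive in this sketch only extends the expiry without regenerating the response, so it trades freshness for availability; regenerating on refresh is the alternative when the underlying data changes.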

Pricing of Cached Prompts

Understand the cost model (writes, reads, storage) and optimize by carefully selecting prompts to cache and using appropriate TTL values.
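The cost trade-off can be estimated with simple arithmetic. The sketch below is a simplified model with hypothetical parameter names; every price is an input you supply, not a real provider's rate, and it assumes each miss pays for one generation plus one cache write.

```python
def caching_savings(requests: int, hit_rate: float,
                    cost_per_generation: float,
                    cost_per_cache_write: float,
                    cost_per_cache_read: float,
                    storage_cost: float = 0.0) -> float:
    """Estimated savings from caching, in the same currency unit as the inputs.

    A negative result means caching costs more than always generating.
    """
    hits = requests * hit_rate
    misses = requests - hits
    without_cache = requests * cost_per_generation
    with_cache = (misses * (cost_per_generation + cost_per_cache_write)
                  + hits * cost_per_cache_read
                  + storage_cost)
    return without_cache - with_cache
```

With a hit rate near zero the write and storage costs are pure overhead, which is why it pays to cache only prompts that are actually repeated.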

Common Issues with Prompt Caching

- Cache Misses: Address inconsistencies in prompt structures and adjust similarity thresholds.

- Cache Invalidation: Implement automatic or manual invalidation policies to handle data changes.
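One way to handle data changes is to tag each entry with the version of the source data it was built from, so that a version change invalidates it automatically. This is a sketch under that assumption; VersionedCache and the data_version parameter are hypothetical names, and how a version is derived (a timestamp, a content hash) is left to the application.

```python
from typing import Dict, Optional, Tuple


class VersionedCache:
    """Cache whose entries are valid only for the data version they were built from."""

    def __init__(self) -> None:
        # prompt -> (response, data_version)
        self._entries: Dict[str, Tuple[str, str]] = {}

    def set(self, prompt: str, response: str, data_version: str) -> None:
        self._entries[prompt] = (response, data_version)

    def get(self, prompt: str, current_version: str) -> Optional[str]:
        entry = self._entries.get(prompt)
        if entry is None:
            return None
        response, version = entry
        if version != current_version:
            del self._entries[prompt]  # automatic invalidation: source data changed
            return None
        return response

    def invalidate(self, prompt: str) -> bool:
        """Manual invalidation; returns True if an entry was removed."""
        return self._entries.pop(prompt, None) is not None
```

Automatic invalidation covers changes the system can detect, while the manual method remains available for cases such as a response that is found to be wrong.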

Conclusion

Prompt caching is a powerful technique for optimizing LLM performance and reducing costs. By following the best practices outlined in this blog post, you can effectively leverage prompt caching to enhance your AI-powered applications.

The above is the detailed content of Prompt Caching: A Guide With Code Implementation. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1676

1676

14

14

1429

1429

52

52

1333

1333

25

25

1278

1278

29

29

1257

1257

24

24

How to Build MultiModal AI Agents Using Agno Framework?

Apr 23, 2025 am 11:30 AM

How to Build MultiModal AI Agents Using Agno Framework?

Apr 23, 2025 am 11:30 AM

While working on Agentic AI, developers often find themselves navigating the trade-offs between speed, flexibility, and resource efficiency. I have been exploring the Agentic AI framework and came across Agno (earlier it was Phi-

OpenAI Shifts Focus With GPT-4.1, Prioritizes Coding And Cost Efficiency

Apr 16, 2025 am 11:37 AM

OpenAI Shifts Focus With GPT-4.1, Prioritizes Coding And Cost Efficiency

Apr 16, 2025 am 11:37 AM

The release includes three distinct models, GPT-4.1, GPT-4.1 mini and GPT-4.1 nano, signaling a move toward task-specific optimizations within the large language model landscape. These models are not immediately replacing user-facing interfaces like

How to Add a Column in SQL? - Analytics Vidhya

Apr 17, 2025 am 11:43 AM

How to Add a Column in SQL? - Analytics Vidhya

Apr 17, 2025 am 11:43 AM

SQL's ALTER TABLE Statement: Dynamically Adding Columns to Your Database In data management, SQL's adaptability is crucial. Need to adjust your database structure on the fly? The ALTER TABLE statement is your solution. This guide details adding colu

New Short Course on Embedding Models by Andrew Ng

Apr 15, 2025 am 11:32 AM

New Short Course on Embedding Models by Andrew Ng

Apr 15, 2025 am 11:32 AM

Unlock the Power of Embedding Models: A Deep Dive into Andrew Ng's New Course Imagine a future where machines understand and respond to your questions with perfect accuracy. This isn't science fiction; thanks to advancements in AI, it's becoming a r

Rocket Launch Simulation and Analysis using RocketPy - Analytics Vidhya

Apr 19, 2025 am 11:12 AM

Rocket Launch Simulation and Analysis using RocketPy - Analytics Vidhya

Apr 19, 2025 am 11:12 AM

Simulate Rocket Launches with RocketPy: A Comprehensive Guide This article guides you through simulating high-power rocket launches using RocketPy, a powerful Python library. We'll cover everything from defining rocket components to analyzing simula

Google Unveils The Most Comprehensive Agent Strategy At Cloud Next 2025

Apr 15, 2025 am 11:14 AM

Google Unveils The Most Comprehensive Agent Strategy At Cloud Next 2025

Apr 15, 2025 am 11:14 AM

Gemini as the Foundation of Google’s AI Strategy Gemini is the cornerstone of Google’s AI agent strategy, leveraging its advanced multimodal capabilities to process and generate responses across text, images, audio, video and code. Developed by DeepM

Open Source Humanoid Robots That You Can 3D Print Yourself: Hugging Face Buys Pollen Robotics

Apr 15, 2025 am 11:25 AM

Open Source Humanoid Robots That You Can 3D Print Yourself: Hugging Face Buys Pollen Robotics

Apr 15, 2025 am 11:25 AM

“Super happy to announce that we are acquiring Pollen Robotics to bring open-source robots to the world,” Hugging Face said on X. “Since Remi Cadene joined us from Tesla, we’ve become the most widely used software platform for open robotics thanks to

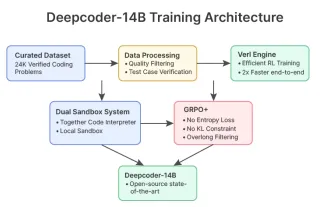

DeepCoder-14B: The Open-source Competition to o3-mini and o1

Apr 26, 2025 am 09:07 AM

DeepCoder-14B: The Open-source Competition to o3-mini and o1

Apr 26, 2025 am 09:07 AM

In a significant development for the AI community, Agentica and Together AI have released an open-source AI coding model named DeepCoder-14B. Offering code generation capabilities on par with closed-source competitors like OpenAI