6 Common LLM Customization Strategies Briefly Explained

This article explores six key strategies for customizing Large Language Models (LLMs), ranging from simple techniques to more resource-intensive methods. Choosing the right approach depends on your specific needs, resources, and technical expertise.

Why Customize LLMs?

Pre-trained LLMs, while powerful, often fall short of specific business or domain requirements. Customizing an LLM allows you to tailor its capabilities to your exact needs without the prohibitive cost of training a model from scratch. This is especially crucial for smaller teams lacking extensive resources.

Choosing the Right LLM:

Before customization, selecting the appropriate base model is critical. Factors to consider include:

- Open-source vs. Proprietary: Open-source models offer flexibility and control but demand technical skills, while proprietary models provide ease of access and often superior performance at a cost.

- Task and Metrics: Different models excel at various tasks (question answering, summarization, code generation). Benchmark metrics and domain-specific testing are essential.

- Architecture: Decoder-only models (like GPT) are strong at text generation, while encoder-decoder models (like T5) are better suited for translation. Emerging architectures like Mixture of Experts (MoE) show promise.

- Model Size: Larger models generally perform better but require more computational resources.

Six LLM Customization Strategies (Ranked by Resource Intensity):

The following strategies are presented in ascending order of resource consumption:

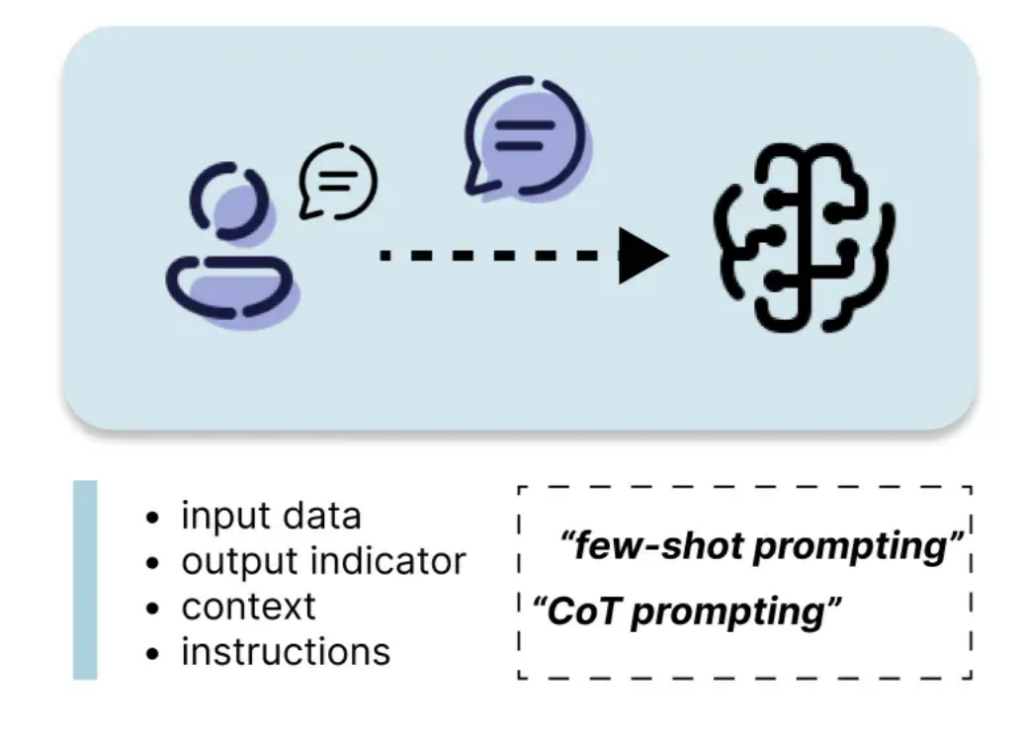

1. Prompt Engineering

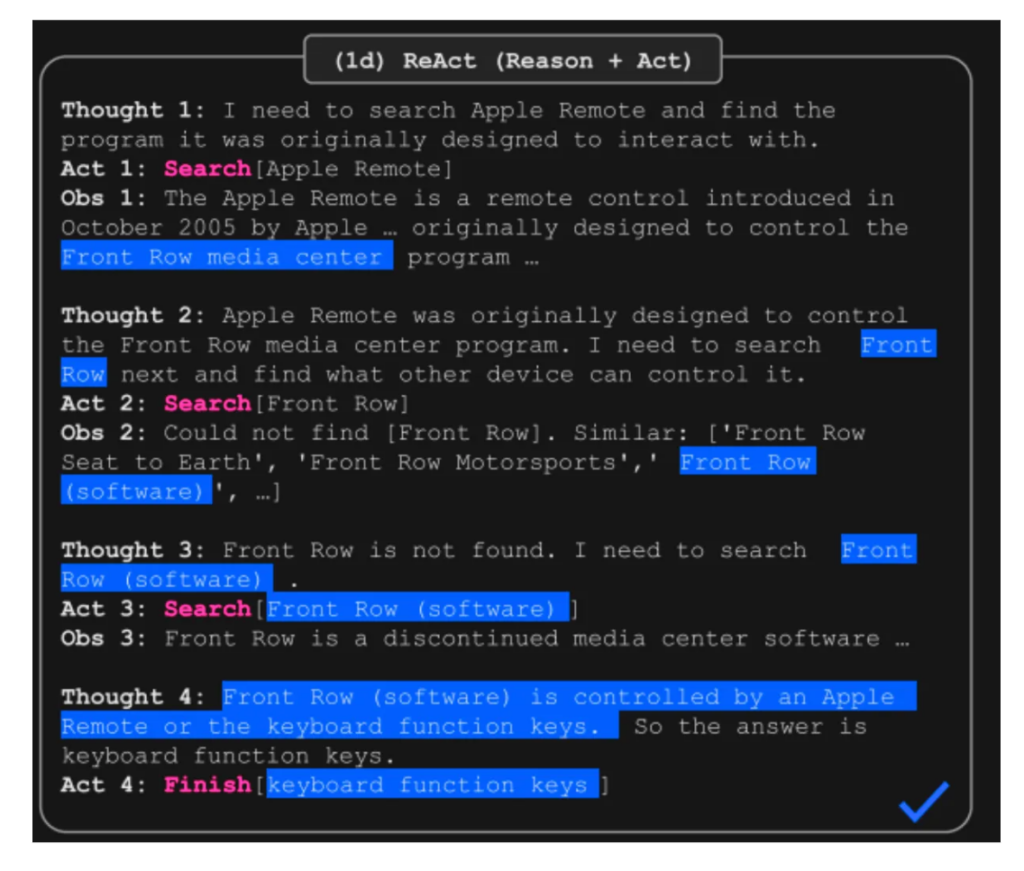

Prompt engineering involves carefully crafting the input text (prompt) to guide the LLM's response. This includes instructions, context, input data, and output indicators. Techniques like zero-shot, one-shot, and few-shot prompting, as well as more advanced methods like Chain of Thought (CoT), Tree of Thoughts, Automatic Reasoning and Tool Use (ART), and ReAct, can significantly improve performance. Prompt engineering is efficient and readily implemented.

2. Decoding and Sampling Strategies

Controlling decoding strategies (greedy search, beam search, sampling) and sampling parameters (temperature, top-k, top-p) at inference time allows you to adjust the randomness and diversity of the LLM's output. This is a low-cost method for influencing model behavior.

3. Retrieval Augmented Generation (RAG)

RAG enhances LLM responses by incorporating external knowledge. It involves retrieving relevant information from a knowledge base and feeding it to the LLM along with the user's query. This reduces hallucinations and improves accuracy, particularly for domain-specific tasks. RAG is relatively resource-efficient as it doesn't require retraining the LLM.

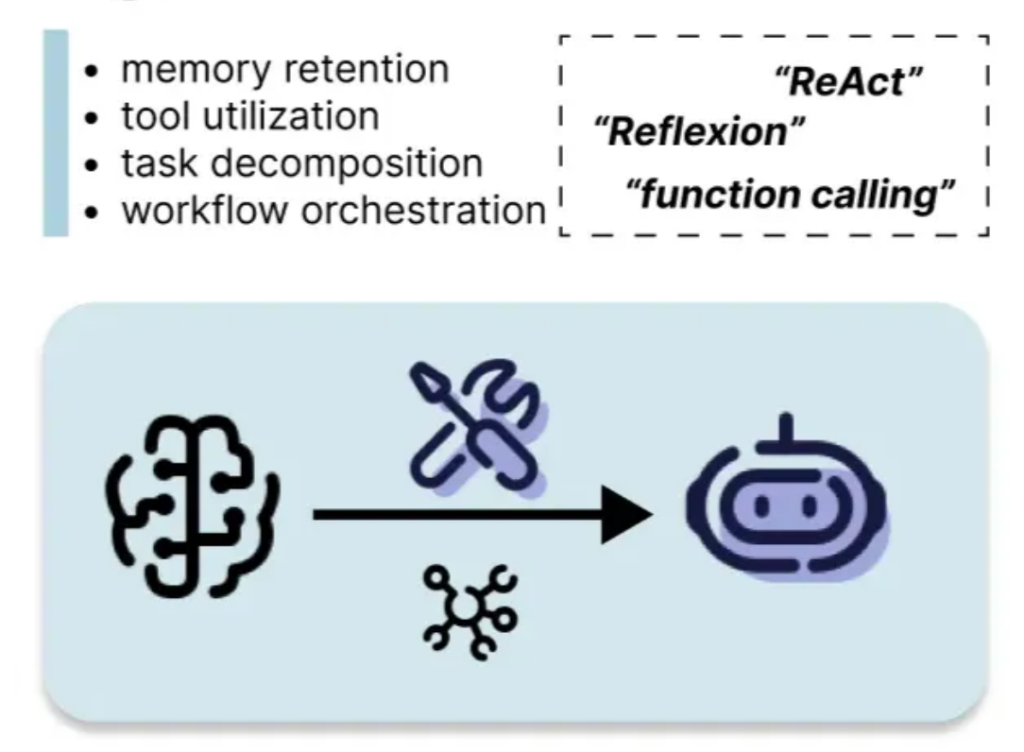

4. Agent-Based Systems

Agent-based systems enable LLMs to interact with the environment, use tools, and maintain memory. Frameworks like ReAct (Synergizing Reasoning and Acting) combine reasoning with actions and observations, improving performance on complex tasks. Agents offer significant advantages in managing complex workflows and tool utilization.

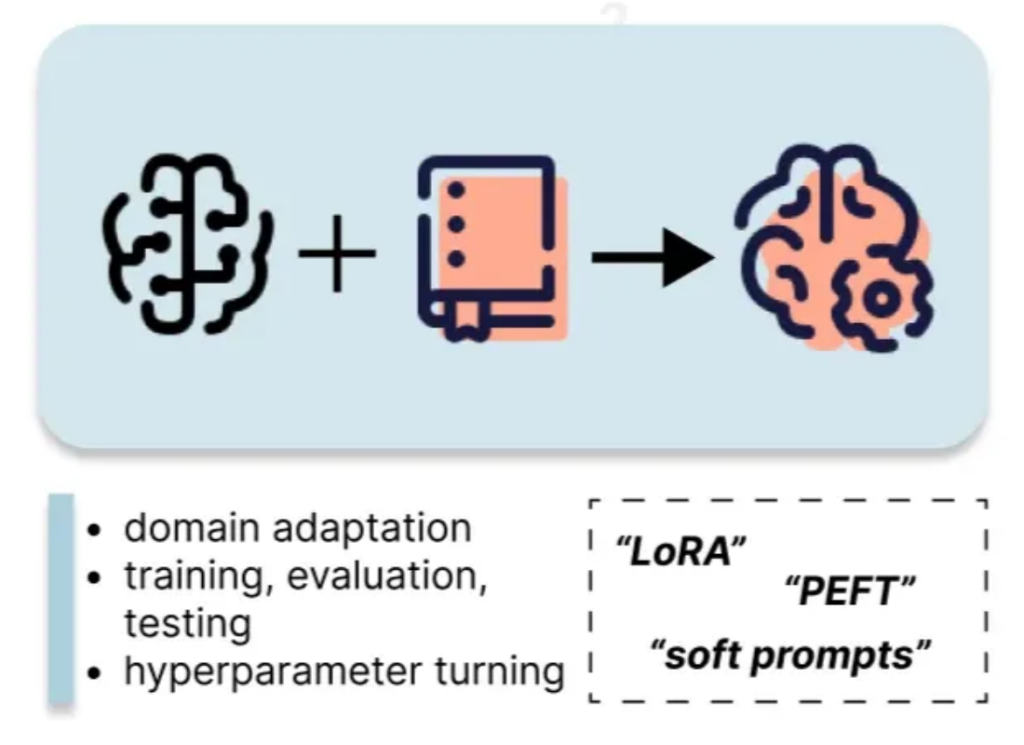

5. Fine-tuning

Fine-tuning involves updating the LLM's parameters using a custom dataset. Parameter-Efficient Fine-Tuning (PEFT) methods like LoRA significantly reduce the computational cost compared to full fine-tuning. This approach requires more resources than the previous methods but provides more substantial performance gains.

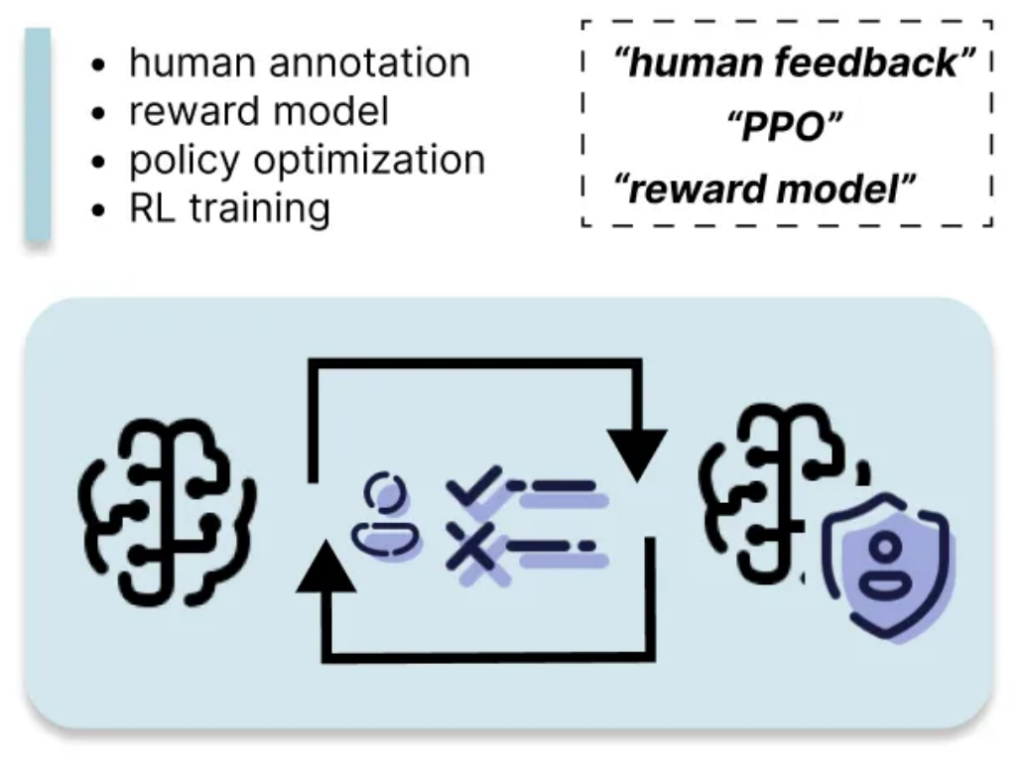

6. Reinforcement Learning from Human Feedback (RLHF)

RLHF aligns the LLM's output with human preferences by training a reward model based on human feedback. This is the most resource-intensive method, requiring significant human annotation and computational power, but it can lead to substantial improvements in response quality and alignment with desired behavior.

This overview provides a comprehensive understanding of the various LLM customization techniques, enabling you to choose the most appropriate strategy based on your specific requirements and resources. Remember to consider the trade-offs between resource consumption and performance gains when making your selection.

The above is the detailed content of 6 Common LLM Customization Strategies Briefly Explained. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1660

1660

14

14

1416

1416

52

52

1310

1310

25

25

1260

1260

29

29

1233

1233

24

24

Getting Started With Meta Llama 3.2 - Analytics Vidhya

Apr 11, 2025 pm 12:04 PM

Getting Started With Meta Llama 3.2 - Analytics Vidhya

Apr 11, 2025 pm 12:04 PM

Meta's Llama 3.2: A Leap Forward in Multimodal and Mobile AI Meta recently unveiled Llama 3.2, a significant advancement in AI featuring powerful vision capabilities and lightweight text models optimized for mobile devices. Building on the success o

10 Generative AI Coding Extensions in VS Code You Must Explore

Apr 13, 2025 am 01:14 AM

10 Generative AI Coding Extensions in VS Code You Must Explore

Apr 13, 2025 am 01:14 AM

Hey there, Coding ninja! What coding-related tasks do you have planned for the day? Before you dive further into this blog, I want you to think about all your coding-related woes—better list those down. Done? – Let’

AV Bytes: Meta's Llama 3.2, Google's Gemini 1.5, and More

Apr 11, 2025 pm 12:01 PM

AV Bytes: Meta's Llama 3.2, Google's Gemini 1.5, and More

Apr 11, 2025 pm 12:01 PM

This week's AI landscape: A whirlwind of advancements, ethical considerations, and regulatory debates. Major players like OpenAI, Google, Meta, and Microsoft have unleashed a torrent of updates, from groundbreaking new models to crucial shifts in le

Selling AI Strategy To Employees: Shopify CEO's Manifesto

Apr 10, 2025 am 11:19 AM

Selling AI Strategy To Employees: Shopify CEO's Manifesto

Apr 10, 2025 am 11:19 AM

Shopify CEO Tobi Lütke's recent memo boldly declares AI proficiency a fundamental expectation for every employee, marking a significant cultural shift within the company. This isn't a fleeting trend; it's a new operational paradigm integrated into p

GPT-4o vs OpenAI o1: Is the New OpenAI Model Worth the Hype?

Apr 13, 2025 am 10:18 AM

GPT-4o vs OpenAI o1: Is the New OpenAI Model Worth the Hype?

Apr 13, 2025 am 10:18 AM

Introduction OpenAI has released its new model based on the much-anticipated “strawberry” architecture. This innovative model, known as o1, enhances reasoning capabilities, allowing it to think through problems mor

A Comprehensive Guide to Vision Language Models (VLMs)

Apr 12, 2025 am 11:58 AM

A Comprehensive Guide to Vision Language Models (VLMs)

Apr 12, 2025 am 11:58 AM

Introduction Imagine walking through an art gallery, surrounded by vivid paintings and sculptures. Now, what if you could ask each piece a question and get a meaningful answer? You might ask, “What story are you telling?

How to Add a Column in SQL? - Analytics Vidhya

Apr 17, 2025 am 11:43 AM

How to Add a Column in SQL? - Analytics Vidhya

Apr 17, 2025 am 11:43 AM

SQL's ALTER TABLE Statement: Dynamically Adding Columns to Your Database In data management, SQL's adaptability is crucial. Need to adjust your database structure on the fly? The ALTER TABLE statement is your solution. This guide details adding colu

Newest Annual Compilation Of The Best Prompt Engineering Techniques

Apr 10, 2025 am 11:22 AM

Newest Annual Compilation Of The Best Prompt Engineering Techniques

Apr 10, 2025 am 11:22 AM

For those of you who might be new to my column, I broadly explore the latest advances in AI across the board, including topics such as embodied AI, AI reasoning, high-tech breakthroughs in AI, prompt engineering, training of AI, fielding of AI, AI re