Understanding and Solving False Sharing in Multi-threaded Applications with an actual issue I had

Recently, I was working on a multi-threaded implementation of a function to calculate the Poisson distribution (amath_pdist). The goal was to divide the workload across multiple threads to improve performance, especially for large arrays. However, instead of achieving the expected speedup, I noticed a significant slowdown as the size of the array increased.

After some investigation, I discovered the culprit: false sharing. In this post, I’ll explain what false sharing is, show the original code causing the problem, and share the fixes that led to a substantial performance improvement.

The Problem: False Sharing in Multi-threaded Code

False sharing happens when multiple threads work on different parts of a shared array, but their data resides in the same cache line. A cache line is the smallest unit of data transferred between memory and the CPU cache (typically 64 bytes). When one thread writes to any part of a cache line, the copies of that line held by other cores are invalidated, even if those threads are working on logically independent data. Each of those cores must then reload the line before it can continue, and the repeated reloading leads to significant performance degradation.
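To make the cache line idea concrete, here is a minimal sketch, assuming a 64-byte line. The names `same_cache_line`, `packed_counters`, and `padded_counters` are hypothetical and are not part of the original code. It only inspects addresses; it does not measure anything.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define CACHE_LINE 64 /* assumed line size; common on x86-64, not universal */

/* Returns 1 when both addresses fall inside the same 64-byte cache line. */
int same_cache_line(const void *a, const void *b) {
    return ((uintptr_t)a / CACHE_LINE) == ((uintptr_t)b / CACHE_LINE);
}

/* Two per-thread counters packed next to each other: prone to false sharing. */
struct packed_counters {
    _Alignas(CACHE_LINE) long a; /* thread A would write here */
    long b;                      /* thread B would write here, same line as a */
};

/* Each counter starts its own cache line, so the writers cannot collide. */
struct padded_counters {
    _Alignas(CACHE_LINE) long a;
    _Alignas(CACHE_LINE) long b;
};

/* The padded layout costs one full line per counter. */
static_assert(sizeof(struct padded_counters) == 2 * CACHE_LINE,
              "each padded counter should occupy its own cache line");
```

The padded layout trades memory for independence: each counter occupies a whole line, which is usually acceptable for a handful of per-thread values but not for every element of a large array.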

Here’s a simplified version of my original code:

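#include <math.h>
#include <pthread.h>
#include <stdlib.h>

/* Layout inferred from how the fields are used below; the post does not show it. */
struct pdist_segment {
    int *data;              /* input counts */
    double *pdist;          /* shared output array */
    double lambda;          /* Poisson rate */
    size_t interval_a;      /* first index owned by this thread */
    size_t interval_b;      /* one past the last index owned by this thread */
};

/* Worker: writes the Poisson probability of data[i] into pdist[i] for its range. */
void *calculate_pdist_segment(void *data) {
    struct pdist_segment *segment = (struct pdist_segment *)data;
    size_t interval_a = segment->interval_a, interval_b = segment->interval_b;
    double lambda = segment->lambda;
    int *d = segment->data;

    for (size_t i = interval_a; i < interval_b; i++) {
        segment->pdist[i] = pow(lambda, d[i]) * exp(-lambda) / tgamma(d[i] + 1);
    }
    return NULL;
}

double *amath_pdist(int *data, double lambda, size_t n_elements, size_t n_threads) {
    /* malloc gives no cache line alignment guarantee for the start of pdist. */
    double *pdist = malloc(sizeof(double) * n_elements);
    if (pdist == NULL) {
        return NULL;
    }

    pthread_t threads[n_threads];
    struct pdist_segment segments[n_threads];
    size_t step = n_elements / n_threads;

    for (size_t i = 0; i < n_threads; i++) {
        segments[i].data = data;
        segments[i].lambda = lambda;
        segments[i].pdist = pdist;
        segments[i].interval_a = step * i;
        /* The last thread also takes the remainder. */
        segments[i].interval_b = (i == n_threads - 1) ? n_elements : (step * (i + 1));
        pthread_create(&threads[i], NULL, calculate_pdist_segment, &segments[i]);
    }

    for (size_t i = 0; i < n_threads; i++) {
        pthread_join(threads[i], NULL);
    }
    return pdist;
}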

Where the Problem Occurs

In the above code:

- The array pdist is shared among all threads.

- Each thread writes to a specific range of indices (interval_a to interval_b).

- At segment boundaries, adjacent indices may reside in the same cache line. For example, if pdist[249999] and pdist[250000] share a cache line, Thread 1 (working on pdist[249999]) and Thread 2 (working on pdist[250000]) invalidate each other’s cache lines.

This issue scaled poorly with larger arrays. While the boundary issue might seem small, the sheer number of iterations magnified the cost of cache invalidations, leading to seconds of unnecessary overhead.
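As a rough way to check whether a given partition has this boundary problem, the sketch below counts the segment boundaries that land in the middle of a cache line. It assumes a 64-byte line and the same `step = n_elements / n_threads` split used in the code above; the function name `count_shared_boundaries` is hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define CACHE_LINE 64 /* assumed line size */

/*
 * Counts the boundaries between neighbouring segments that do not fall on a
 * cache line boundary. At each such boundary, the last element of one thread
 * and the first element of the next thread live in the same line.
 * Only addresses are computed; the array is never dereferenced.
 */
size_t count_shared_boundaries(const double *base, size_t n_elements, size_t n_threads) {
    assert(n_threads > 0);
    size_t step = n_elements / n_threads;
    size_t shared = 0;
    for (size_t t = 1; t < n_threads; t++) {
        /* Address of the first element owned by thread t. */
        uintptr_t addr = (uintptr_t)base + step * t * sizeof(double);
        if (addr % CACHE_LINE != 0) {
            shared++;
        }
    }
    return shared;
}
```

With 1,000,000 elements and 4 threads, each boundary sits at a multiple of 250,000 doubles, which is a whole number of 64-byte lines, so the count depends only on whether the array itself starts on a line boundary.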

The Solution: Align Memory to Cache Line Boundaries

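To fix the problem, I used posix_memalign to ensure that the pdist array starts on a 64-byte boundary. With the base aligned, a segment boundary falls exactly on a cache line boundary whenever the per-thread step is a multiple of 8 doubles (64 bytes / 8 bytes per double), which is the case for the 250,000-element segments in the example above. Neighbouring threads then write to separate cache lines, which removes the false sharing at the boundaries.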

Here’s the updated code:

#include <stdio.h>
#include <string.h>

double *amath_pdist(int *data, double lambda, size_t n_elements, size_t n_threads) {
    /* Start the output array on a 64-byte (assumed cache line) boundary. */
    void *mem = NULL;
    int err = posix_memalign(&mem, 64, sizeof(double) * n_elements);
    if (err != 0) {
        /* posix_memalign returns the error code and does not set errno. */
        fprintf(stderr, "Failed to allocate aligned memory: %s\n", strerror(err));
        return NULL;
    }
    double *pdist = mem;

    pthread_t threads[n_threads];
    struct pdist_segment segments[n_threads];
    size_t step = n_elements / n_threads;

    for (size_t i = 0; i < n_threads; i++) {
        segments[i].data = data;
        segments[i].lambda = lambda;
        segments[i].pdist = pdist;
        segments[i].interval_a = step * i;
        segments[i].interval_b = (i == n_threads - 1) ? n_elements : (step * (i + 1));
        pthread_create(&threads[i], NULL, calculate_pdist_segment, &segments[i]);
    }

    for (size_t i = 0; i < n_threads; i++) {
        pthread_join(threads[i], NULL);
    }
    return pdist;
}

Why Does This Work?

- Aligned Memory:

  - Using posix_memalign, the array starts on a cache line boundary.

  - When the per-thread step is a multiple of 8 doubles (64 bytes), each thread's range also starts on a cache line boundary, so ranges do not overlap within a line.

- No Cache Line Sharing:

  - Threads operate on distinct cache lines, eliminating invalidations caused by false sharing.

- Improved Cache Efficiency:

  - Sequential memory access patterns align well with CPU prefetchers, further boosting performance.
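Aligning the start of the array only helps when the per-thread step is a whole number of cache lines. One way to make that hold for any array length is to round the step up to a multiple of 8 doubles. The sketch below is an illustration of that idea, not the code from the post; `line_aligned_step` and `segment_bounds` are hypothetical names and the 64-byte line size is an assumption.

```c
#include <assert.h>
#include <stddef.h>

#define CACHE_LINE 64                                  /* assumed line size */
#define DOUBLES_PER_LINE (CACHE_LINE / sizeof(double)) /* 8 on typical platforms */

/* Per-thread step, rounded up to a whole number of cache lines. */
size_t line_aligned_step(size_t n_elements, size_t n_threads) {
    assert(n_threads > 0);
    size_t step = (n_elements + n_threads - 1) / n_threads; /* ceiling division */
    return (step + DOUBLES_PER_LINE - 1) / DOUBLES_PER_LINE * DOUBLES_PER_LINE;
}

/* Half-open range [*begin, *end) for thread i, clamped to the array length. */
void segment_bounds(size_t i, size_t n_elements, size_t n_threads,
                    size_t *begin, size_t *end) {
    size_t step = line_aligned_step(n_elements, n_threads);
    size_t a = step * i;
    size_t b = a + step;
    *begin = a < n_elements ? a : n_elements;
    *end = b < n_elements ? b : n_elements;
}
```

Because the step is rounded up, the last threads may receive a shorter range, or an empty one when the array is small relative to the thread count. For large arrays the imbalance is at most a few elements per thread.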

Results and Takeaways

After applying the fix, the runtime of the amath_pdist function dropped significantly. For a dataset I was testing, the wall clock time dropped from 10.92 seconds to 0.06 seconds.
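For reference, wall clock time for a multi-threaded call can be measured along these lines. This is a minimal sketch, not the harness used for the numbers above; `elapsed_seconds` and `time_call` are hypothetical names. It uses the C11 function timespec_get, which reads the system clock; on Linux, clock_gettime with CLOCK_MONOTONIC is a common alternative that is not affected by clock adjustments.

```c
#include <assert.h>
#include <stddef.h>
#include <time.h>

/* Seconds elapsed between two timestamps. */
double elapsed_seconds(struct timespec start, struct timespec end) {
    return (double)(end.tv_sec - start.tv_sec)
         + (double)(end.tv_nsec - start.tv_nsec) / 1e9;
}

/* Runs fn(arg) once and returns how long it took in wall clock seconds. */
double time_call(void (*fn)(void *), void *arg) {
    assert(fn != NULL);
    struct timespec start, end;
    timespec_get(&start, TIME_UTC);
    fn(arg);
    timespec_get(&end, TIME_UTC);
    return elapsed_seconds(start, end);
}
```

The standard clock() function is not a good substitute here: it reports processor time for the whole process, so time spent on several threads is added together and a parallel run can look slower than it really is.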

Key Lessons:

- False sharing is a subtle yet critical issue in multi-threaded applications. Even small overlaps at segment boundaries can degrade performance.

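- Memory alignment using posix_memalign is a simple and effective way to address false sharing at segment boundaries. It works when both the start of the array and each thread's segment size line up with cache line boundaries, so that no two threads write to the same line.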

- Always analyze your code for cache-related issues when working with large arrays or parallel processing. Tools like perf or valgrind can help pinpoint bottlenecks.
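The code in this post hard-codes 64 bytes as the cache line size. As a hedged sketch, the size can be queried at run time on glibc systems through sysconf with `_SC_LEVEL1_DCACHE_LINESIZE`, which is a glibc extension and may be missing or return 0 elsewhere, so the example falls back to 64. The helper names `cache_line_size` and `alloc_line_aligned` are hypothetical.

```c
#define _POSIX_C_SOURCE 200112L /* exposes posix_memalign under strict C modes */
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>
#include <unistd.h>

/* Returns the L1 data cache line size, or 64 when it cannot be determined. */
size_t cache_line_size(void) {
#ifdef _SC_LEVEL1_DCACHE_LINESIZE
    long size = sysconf(_SC_LEVEL1_DCACHE_LINESIZE);
    if (size > 0) {
        return (size_t)size;
    }
#endif
    return 64; /* assumed default */
}

/* Allocates `bytes` bytes starting on a cache line boundary, or returns NULL. */
void *alloc_line_aligned(size_t bytes) {
    size_t line = cache_line_size();
    /* posix_memalign requires a power-of-two alignment. */
    assert((line & (line - 1)) == 0);
    void *mem = NULL;
    if (posix_memalign(&mem, line, bytes) != 0) {
        return NULL;
    }
    assert((uintptr_t)mem % line == 0);
    return mem; /* release with free() */
}
```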

Thank you for reading!

For anyone curious about the code, you can find it here

The above is the detailed content of Understanding and Solving False Sharing in Multi-threaded Applications with an actual issue I had. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1655

1655

14

14

1413

1413

52

52

1306

1306

25

25

1252

1252

29

29

1226

1226

24

24

C language data structure: data representation and operation of trees and graphs

Apr 04, 2025 am 11:18 AM

C language data structure: data representation and operation of trees and graphs

Apr 04, 2025 am 11:18 AM

C language data structure: The data representation of the tree and graph is a hierarchical data structure consisting of nodes. Each node contains a data element and a pointer to its child nodes. The binary tree is a special type of tree. Each node has at most two child nodes. The data represents structTreeNode{intdata;structTreeNode*left;structTreeNode*right;}; Operation creates a tree traversal tree (predecision, in-order, and later order) search tree insertion node deletes node graph is a collection of data structures, where elements are vertices, and they can be connected together through edges with right or unrighted data representing neighbors.

The truth behind the C language file operation problem

Apr 04, 2025 am 11:24 AM

The truth behind the C language file operation problem

Apr 04, 2025 am 11:24 AM

The truth about file operation problems: file opening failed: insufficient permissions, wrong paths, and file occupied. Data writing failed: the buffer is full, the file is not writable, and the disk space is insufficient. Other FAQs: slow file traversal, incorrect text file encoding, and binary file reading errors.

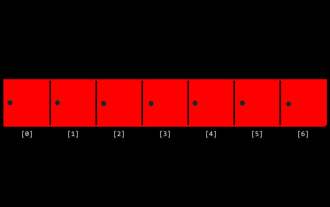

CS-Week 3

Apr 04, 2025 am 06:06 AM

CS-Week 3

Apr 04, 2025 am 06:06 AM

Algorithms are the set of instructions to solve problems, and their execution speed and memory usage vary. In programming, many algorithms are based on data search and sorting. This article will introduce several data retrieval and sorting algorithms. Linear search assumes that there is an array [20,500,10,5,100,1,50] and needs to find the number 50. The linear search algorithm checks each element in the array one by one until the target value is found or the complete array is traversed. The algorithm flowchart is as follows: The pseudo-code for linear search is as follows: Check each element: If the target value is found: Return true Return false C language implementation: #include#includeintmain(void){i

C# vs. C : History, Evolution, and Future Prospects

Apr 19, 2025 am 12:07 AM

C# vs. C : History, Evolution, and Future Prospects

Apr 19, 2025 am 12:07 AM

The history and evolution of C# and C are unique, and the future prospects are also different. 1.C was invented by BjarneStroustrup in 1983 to introduce object-oriented programming into the C language. Its evolution process includes multiple standardizations, such as C 11 introducing auto keywords and lambda expressions, C 20 introducing concepts and coroutines, and will focus on performance and system-level programming in the future. 2.C# was released by Microsoft in 2000. Combining the advantages of C and Java, its evolution focuses on simplicity and productivity. For example, C#2.0 introduced generics and C#5.0 introduced asynchronous programming, which will focus on developers' productivity and cloud computing in the future.

C language multithreaded programming: a beginner's guide and troubleshooting

Apr 04, 2025 am 10:15 AM

C language multithreaded programming: a beginner's guide and troubleshooting

Apr 04, 2025 am 10:15 AM

C language multithreading programming guide: Creating threads: Use the pthread_create() function to specify thread ID, properties, and thread functions. Thread synchronization: Prevent data competition through mutexes, semaphores, and conditional variables. Practical case: Use multi-threading to calculate the Fibonacci number, assign tasks to multiple threads and synchronize the results. Troubleshooting: Solve problems such as program crashes, thread stop responses, and performance bottlenecks.

How to output a countdown in C language

Apr 04, 2025 am 08:54 AM

How to output a countdown in C language

Apr 04, 2025 am 08:54 AM

How to output a countdown in C? Answer: Use loop statements. Steps: 1. Define the variable n and store the countdown number to output; 2. Use the while loop to continuously print n until n is less than 1; 3. In the loop body, print out the value of n; 4. At the end of the loop, subtract n by 1 to output the next smaller reciprocal.

How to define the call declaration format of c language function

Apr 04, 2025 am 06:03 AM

How to define the call declaration format of c language function

Apr 04, 2025 am 06:03 AM

C language functions include definitions, calls and declarations. Function definition specifies function name, parameters and return type, function body implements functions; function calls execute functions and provide parameters; function declarations inform the compiler of function type. Value pass is used for parameter pass, pay attention to the return type, maintain a consistent code style, and handle errors in functions. Mastering this knowledge can help write elegant, robust C code.

Integers in C: a little history

Apr 04, 2025 am 06:09 AM

Integers in C: a little history

Apr 04, 2025 am 06:09 AM

Integers are the most basic data type in programming and can be regarded as the cornerstone of programming. The job of a programmer is to give these numbers meanings. No matter how complex the software is, it ultimately comes down to integer operations, because the processor only understands integers. To represent negative numbers, we introduced two's complement; to represent decimal numbers, we created scientific notation, so there are floating-point numbers. But in the final analysis, everything is still inseparable from 0 and 1. A brief history of integers In C, int is almost the default type. Although the compiler may issue a warning, in many cases you can still write code like this: main(void){return0;} From a technical point of view, this is equivalent to the following code: intmain(void){return0;}