Updating website content on schedule via GitHub Actions

I would like to share my journey of building a self-sustaining content management system that does not require a content database in the traditional sense.

The Problem

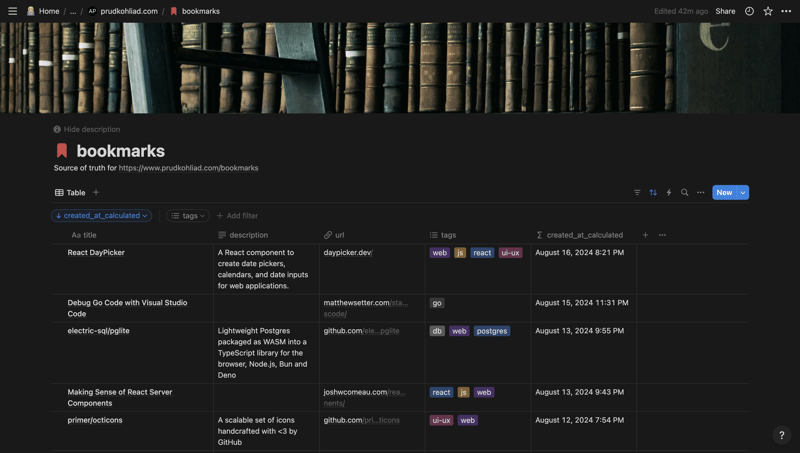

The content (blog posts and bookmarks) of this website is stored in a Notion database:

The problem I wanted to solve was having to deploy the website manually after every bookmark I add there. On top of that, I wanted to keep the hosting as cheap as possible, because it does not really matter to me how quickly the bookmarks I add to my Notion database end up online.

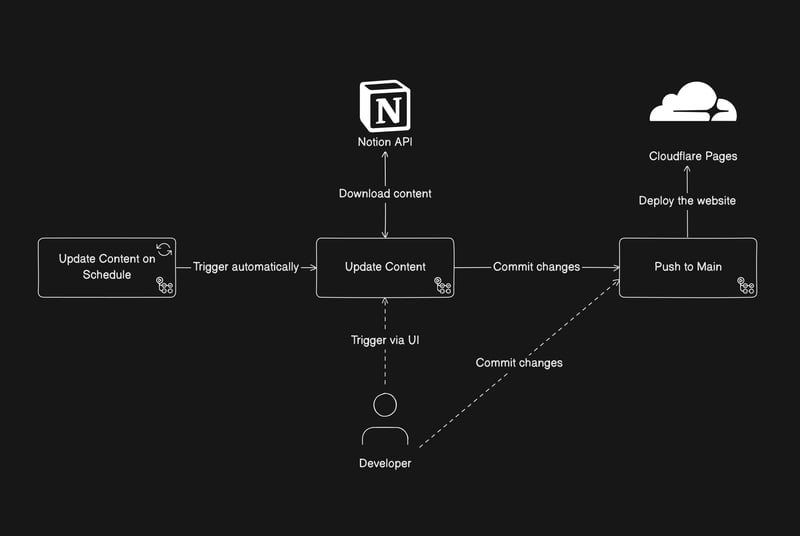

So, after some research I came up with the following setup:

The system consists of several components:

- The “Push to Main” workflow, which deploys the changes

- The “Update Content” workflow, which downloads content from the Notion API and commits the changes

- The “Update Content on Schedule” workflow, which runs on a schedule and triggers the “Update Content” workflow

Let us look at each of them in detail, from the inside out.

The “Push to Main” Workflow

There is not a lot to say here; it is a pretty standard setup. When there is a push to the main branch, this workflow builds the app and deploys it to Cloudflare Pages using the Wrangler CLI:

    name: Push to Main

    on:
      push:
        branches: [main]
      workflow_dispatch: {}

    jobs:
      deploy-cloudflare-pages:
        runs-on: ubuntu-latest
        timeout-minutes: 5
        steps:
          - name: Checkout
            uses: actions/checkout@v4

          - name: Setup pnpm
            uses: pnpm/action-setup@v4

          - name: Setup Node
            uses: actions/setup-node@v4
            with:
              node-version-file: .node-version
              cache: pnpm

          - name: Install node modules
            run: |
              pnpm --version
              pnpm install --frozen-lockfile

          - name: Build the App
            run: |
              pnpm build

          - name: Publish Cloudflare Pages
            env:
              CLOUDFLARE_ACCOUNT_ID: ${{ secrets.CLOUDFLARE_ACCOUNT_ID }}
              CLOUDFLARE_API_TOKEN: ${{ secrets.CLOUDFLARE_API_TOKEN }}
            run: |
              pnpm wrangler pages deploy ./out --project-name ${{ secrets.CLOUDFLARE_PROJECT_NAME }}

The “Update Content” Workflow

This workflow can only be triggered “manually”, that is, through a workflow dispatch. That also covers automated triggers, because a dispatch can be sent using a GitHub Personal Access Token, a.k.a. PAT. I initially wrote it because I wanted to deploy changes from my phone. It downloads the posts and bookmarks using the Notion API and then, if there are any changes to the codebase, creates a commit and pushes it. In order to function properly, this workflow must be provided with a PAT that has “Read and Write access to code” of the repository. The PAT matters because a push made with the default GITHUB_TOKEN does not trigger other workflows, so “Push to Main” would not run after the commit:

    name: Update Content

    on:
      workflow_dispatch: {}

    jobs:
      download-content:
        runs-on: ubuntu-latest
        timeout-minutes: 5
        steps:
          - name: Checkout
            uses: actions/checkout@v4
            with:
              # A GitHub Personal Access Token with access to the repository
              # that has the following permissions:
              # ✅ Read and Write access to code
              # (Secret names cannot start with GITHUB_, hence the GH_ prefix.)
              token: ${{ secrets.GH_PAT_CONTENT }}

          - name: Setup pnpm
            uses: pnpm/action-setup@v4

          - name: Setup Node
            uses: actions/setup-node@v4
            with:
              node-version-file: .node-version
              cache: pnpm

          - name: Install node modules
            run: |
              pnpm --version
              pnpm install --frozen-lockfile

          - name: Download articles content from Notion
            env:
              NOTION_KEY: ${{ secrets.NOTION_KEY }}
              NOTION_ARTICLES_DATABASE_ID: ${{ secrets.NOTION_ARTICLES_DATABASE_ID }}
            run: |
              pnpm download-articles

          - name: Download bookmarks content from Notion
            env:
              NOTION_KEY: ${{ secrets.NOTION_KEY }}
              NOTION_BOOKMARKS_DATABASE_ID: ${{ secrets.NOTION_BOOKMARKS_DATABASE_ID }}
            run: |
              pnpm download-bookmarks

          - name: Configure Git
            run: |
              git config --global user.email "${{ secrets.GIT_USER_EMAIL }}"
              git config --global user.name "${{ secrets.GIT_USER_NAME }}"

          - name: Check if anything changed
            id: check-changes
            run: |
              if [ -n "$(git status --porcelain)" ]; then
                echo "There are changes"
                echo "HAS_CHANGED=true" >> "$GITHUB_OUTPUT"
              else
                echo "There are no changes"
                echo "HAS_CHANGED=false" >> "$GITHUB_OUTPUT"
              fi

          - name: Commit changes
            if: steps.check-changes.outputs.HAS_CHANGED == 'true'
            run: |
              git add ./src/content
              git add ./public
              git commit -m "Automatic content update commit"
              git push
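One caveat with the check above: `git status --porcelain` reports changes anywhere in the working tree, while the commit step stages only `./src/content` and `./public`. If only something else changed, `git commit` would find nothing staged and the step would fail. A minimal sketch of a narrower check, assuming the same two directories; the function name `has_content_changes` is made up for illustration and is not part of the original setup:

```shell
# Hypothetical helper: succeeds (exit code 0) only if the given paths
# contain modified, deleted, or untracked files.
has_content_changes() {
  [ -n "$(git status --porcelain -- "$@")" ]
}

# Possible use inside the "Check if anything changed" step
# (paths taken from the commit step):
# if has_content_changes ./src/content ./public; then
#   echo "HAS_CHANGED=true" >> "$GITHUB_OUTPUT"
# else
#   echo "HAS_CHANGED=false" >> "$GITHUB_OUTPUT"
# fi
```

Restricting the check to the paths that are actually committed keeps the check and the commit in agreement, at the cost of silently ignoring changes elsewhere in the repository.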

The “Update Content on Schedule” Workflow

This one is pretty simple: it runs on a schedule (with the cron expression below, twice a day at 00:13 and 12:13 UTC) and triggers the workflow above. In order to function properly, this workflow must be provided with a GitHub PAT that has “Read and Write access to actions” of the repository. In my case it’s a different PAT:

    name: Update Content on Schedule

    on:
      schedule:
        - cron: "13 0,12 * * *"
      workflow_dispatch: {}

    jobs:
      trigger-update-content:
        runs-on: ubuntu-latest
        timeout-minutes: 5
        steps:
          - name: Checkout
            uses: actions/checkout@v4

          - name: Dispatch the Update Content workflow
            env:
              # A GitHub Personal Access Token with access to the repository
              # that has the following permissions:
              # ✅ Read and Write access to actions
              # (Secret names cannot start with GITHUB_, hence the GH_ prefix.)
              GH_TOKEN: ${{ secrets.GH_PAT_ACTIONS }}
            run: |
              gh workflow run "Update Content" --ref main
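To trigger “Update Content” from somewhere without the `gh` CLI (a phone shortcut, for instance), the same dispatch can be sent to the GitHub REST API directly. A rough sketch, assuming the workflow lives in a file named `update-content.yml`; that file name, the function names, and the `OWNER`/`REPO` placeholders are assumptions and do not come from the original setup:

```shell
# Build the REST endpoint for a workflow_dispatch event.
# Arguments: owner, repo, workflow file name (or numeric workflow id).
dispatch_url() {
  printf 'https://api.github.com/repos/%s/%s/actions/workflows/%s/dispatches' "$1" "$2" "$3"
}

# Send the dispatch. Expects GH_TOKEN to hold a PAT with
# "Read and Write access to actions" on the repository.
# Arguments: owner, repo, workflow file, git ref (defaults to main).
dispatch_workflow() {
  curl --fail --silent --show-error \
    -X POST \
    -H "Accept: application/vnd.github+json" \
    -H "Authorization: Bearer ${GH_TOKEN}" \
    -d "{\"ref\":\"${4:-main}\"}" \
    "$(dispatch_url "$1" "$2" "$3")"
}

# Example call (placeholders, not real values):
# GH_TOKEN=... dispatch_workflow OWNER REPO update-content.yml main
```

Keeping the URL construction in its own function makes it easy to check the endpoint without sending a request.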

Conclusion

For me this setup has proven to be really good and flexible. Because of the modular structure, the “Update Content” workflow can also be triggered manually, e.g. from my phone while travelling. To me this was another valuable experience of progressively enhancing a workflow.

Hope you find this helpful!
