How to evaluate the output quality of large language models (LLMs)? A comprehensive review of evaluation methods!

Evaluating the output quality of large language models is crucial for ensuring their reliability and effectiveness. Accuracy, coherence, fluency, and relevance are the key considerations. Human evaluation, automated metrics, task-based evaluation, and error analysis are the common methods.

How to Evaluate the Output Quality of Large Language Models (LLMs)

Evaluating the output quality of LLMs is crucial to ensure their reliability and effectiveness. Here are some key considerations:

- Accuracy: The output should be consistent with the facts and free from errors or biases.

- Coherence: The output should be logically consistent and easy to understand.

- Fluency: The output should be well-written and grammatically correct.

- Relevance: The output should be relevant to the input prompt and meet the intended purpose.
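
One way to make the four criteria above concrete is to turn them into a scoring rubric. The following is a minimal sketch, assuming each rater scores every criterion on a 1-5 scale; the scale, the function name `aggregate_ratings`, and the sample ratings are illustrative assumptions, not part of any standard.

```python
from statistics import mean

# The four criteria named in the list above.
CRITERIA = ("accuracy", "coherence", "fluency", "relevance")


def aggregate_ratings(ratings):
    """Average each criterion across raters.

    `ratings` is a list of dicts, one per rater, mapping each criterion
    to a score on a hypothetical 1-5 scale.
    """
    for rating in ratings:
        missing = [c for c in CRITERIA if c not in rating]
        if missing:
            raise ValueError(f"rating is missing criteria: {missing}")
    per_criterion = {c: mean(r[c] for r in ratings) for c in CRITERIA}
    # Unweighted average of the four criterion means.
    per_criterion["overall"] = mean(per_criterion[c] for c in CRITERIA)
    return per_criterion


# Made-up ratings from two raters for a single model output.
example_ratings = [
    {"accuracy": 4, "coherence": 5, "fluency": 5, "relevance": 4},
    {"accuracy": 2, "coherence": 4, "fluency": 5, "relevance": 4},
]
summary = aggregate_ratings(example_ratings)
```

Keeping the per-criterion averages alongside the overall score matters in practice: in the sample data the output is fluent but the raters disagree on accuracy, which a single overall number would hide.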

Common Methods for Evaluating LLM Output Quality

Several methods can be used to assess LLM output quality:

- Human Evaluation: Human raters manually evaluate the output based on predefined criteria, providing subjective but often insightful feedback.

- Automatic Evaluation Metrics: Automated metrics score specific aspects of output quality by comparing the output with reference texts, such as BLEU (originally designed for machine translation) or ROUGE (for summarization).

- Task-Based Evaluation: Output is evaluated based on its ability to perform a specific task, such as generating code or answering questions.

- Error Analysis: Identifying and analyzing errors in the output helps pinpoint areas for improvement.
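
To illustrate the automatic and task-based methods above, here is a small sketch in plain Python. The first function computes a unigram-overlap F1 score in the spirit of ROUGE-1; it is a simplified stand-in, not the official ROUGE or BLEU implementation. The second computes exact-match accuracy for a question-answering task. The function names, the whitespace tokenizer, and the sample strings are all assumptions made for illustration.

```python
from collections import Counter


def tokenize(text):
    """Lowercase and split on whitespace; real metrics use richer tokenization."""
    return text.lower().split()


def unigram_f1(candidate, reference):
    """Unigram-overlap F1 between a candidate and one reference (ROUGE-1 style)."""
    cand = Counter(tokenize(candidate))
    ref = Counter(tokenize(reference))
    overlap = sum((cand & ref).values())  # clipped count of shared tokens
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


def exact_match_accuracy(predictions, answers):
    """Fraction of predictions equal to the expected answer after normalization."""
    if len(predictions) != len(answers):
        raise ValueError("predictions and answers must have the same length")
    if not answers:
        return 0.0
    hits = sum(
        p.strip().lower() == a.strip().lower()
        for p, a in zip(predictions, answers)
    )
    return hits / len(answers)


# Automatic metric: compare a generated sentence with a reference sentence.
score = unigram_f1("the cat sat on the mat", "the cat is on the mat")

# Task-based evaluation: compare model answers with expected answers.
accuracy = exact_match_accuracy(["Paris", "4", "blue"], ["paris", "5", "Blue"])
```

Overlap metrics like this reward shared wording rather than correctness, so a high score does not guarantee a factually accurate output. That is one reason they are usually paired with task-based checks or human review.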

Choosing the Most Appropriate Evaluation Method

The choice of evaluation method depends on several factors:

- Purpose of Evaluation: Determine the specific aspects of output quality that need to be assessed.

- Data Availability: Consider whether labeled reference data (for automatic metrics) or expert annotators (for human evaluation) are available.

- Time and Resources: Assess the time and resources available for evaluation.

- Expertise: Determine the level of expertise required for manual evaluation or the interpretation of automatic metric scores.

By carefully considering these factors, researchers and practitioners can select the evaluation method best suited to assessing the output quality of LLMs.

How to Build MultiModal AI Agents Using Agno Framework?

Apr 23, 2025 am 11:30 AM

How to Build MultiModal AI Agents Using Agno Framework?

Apr 23, 2025 am 11:30 AM

While working on Agentic AI, developers often find themselves navigating the trade-offs between speed, flexibility, and resource efficiency. I have been exploring the Agentic AI framework and came across Agno (earlier it was Phi-

How to Add a Column in SQL? - Analytics Vidhya

Apr 17, 2025 am 11:43 AM

How to Add a Column in SQL? - Analytics Vidhya

Apr 17, 2025 am 11:43 AM

SQL's ALTER TABLE Statement: Dynamically Adding Columns to Your Database In data management, SQL's adaptability is crucial. Need to adjust your database structure on the fly? The ALTER TABLE statement is your solution. This guide details adding colu

OpenAI Shifts Focus With GPT-4.1, Prioritizes Coding And Cost Efficiency

Apr 16, 2025 am 11:37 AM

OpenAI Shifts Focus With GPT-4.1, Prioritizes Coding And Cost Efficiency

Apr 16, 2025 am 11:37 AM

The release includes three distinct models, GPT-4.1, GPT-4.1 mini and GPT-4.1 nano, signaling a move toward task-specific optimizations within the large language model landscape. These models are not immediately replacing user-facing interfaces like

New Short Course on Embedding Models by Andrew Ng

Apr 15, 2025 am 11:32 AM

New Short Course on Embedding Models by Andrew Ng

Apr 15, 2025 am 11:32 AM

Unlock the Power of Embedding Models: A Deep Dive into Andrew Ng's New Course Imagine a future where machines understand and respond to your questions with perfect accuracy. This isn't science fiction; thanks to advancements in AI, it's becoming a r

Rocket Launch Simulation and Analysis using RocketPy - Analytics Vidhya

Apr 19, 2025 am 11:12 AM

Rocket Launch Simulation and Analysis using RocketPy - Analytics Vidhya

Apr 19, 2025 am 11:12 AM

Simulate Rocket Launches with RocketPy: A Comprehensive Guide This article guides you through simulating high-power rocket launches using RocketPy, a powerful Python library. We'll cover everything from defining rocket components to analyzing simula

Google Unveils The Most Comprehensive Agent Strategy At Cloud Next 2025

Apr 15, 2025 am 11:14 AM

Google Unveils The Most Comprehensive Agent Strategy At Cloud Next 2025

Apr 15, 2025 am 11:14 AM

Gemini as the Foundation of Google’s AI Strategy Gemini is the cornerstone of Google’s AI agent strategy, leveraging its advanced multimodal capabilities to process and generate responses across text, images, audio, video and code. Developed by DeepM

Open Source Humanoid Robots That You Can 3D Print Yourself: Hugging Face Buys Pollen Robotics

Apr 15, 2025 am 11:25 AM

Open Source Humanoid Robots That You Can 3D Print Yourself: Hugging Face Buys Pollen Robotics

Apr 15, 2025 am 11:25 AM

“Super happy to announce that we are acquiring Pollen Robotics to bring open-source robots to the world,” Hugging Face said on X. “Since Remi Cadene joined us from Tesla, we’ve become the most widely used software platform for open robotics thanks to

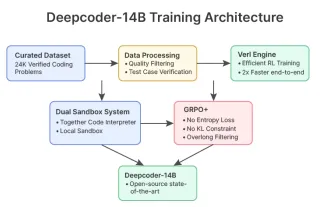

DeepCoder-14B: The Open-source Competition to o3-mini and o1

Apr 26, 2025 am 09:07 AM

DeepCoder-14B: The Open-source Competition to o3-mini and o1

Apr 26, 2025 am 09:07 AM

In a significant development for the AI community, Agentica and Together AI have released an open-source AI coding model named DeepCoder-14B. Offering code generation capabilities on par with closed-source competitors like OpenAI