NVIDIA releases 560.81 graphics driver: supports 'Hunt: Showdown 1896' and fixes game lag and other bugs

According to a report from this site on August 7, NVIDIA released a new GeForce Game Ready driver yesterday (August 6). The latest version, 560.81 WHQL, adds support for "Hunt: Showdown 1896" and also adds optimized game settings for "Chained Together", "Dungeonborne", "Once Human" and "Stormgate".

The 560.81 WHQL driver also fixes a bug present in the previous 560.70 version: with certain NVIDIA GeForce RTX 30 or RTX 40 series graphics cards, "Farming Simulator 22" could crash on the loading screen.

Other bugs fixed in the new driver include:

Fixed a bug where the GPU utilization indicator stayed at 0%, which could cause games to freeze.

Some monitors could not display all of the refresh rates they support.

The NVIDIA Container service showed unusually high CPU utilization.

Download address: https://www.nvidia.cn/geforce/drivers/results/230598/

Hot Topics

ASUS releases BIOS update to improve gaming stability on Intel 13th/14th generation processors

Apr 20, 2024 pm 05:01 PM

According to a report from this site on April 20, ASUS recently released a BIOS update that reduces instability, such as crashes, when running games on Intel 13th/14th generation processors. This site previously reported that players had run into problems: when running the PC demo of Bandai Namco's fighting game "Tekken 8", the system would crash with an error message claiming insufficient memory, even though the computer had ample system memory and video memory. Similar crashes also appeared in many other games, including "Battlefield 2042", "Remnant 2", "Fortnite", "Lords of the Fallen", "Hogwarts Legacy" and "The Finals". RAD published a long article in February this year explaining that the crashes stem from a combination of BIOS settings and the high clock frequencies and high power consumption of Intel processors.

NVIDIA's conversational model ChatQA evolves to version 2.0, extending the context length to 128K

Jul 26, 2024 am 08:40 AM

The open LLM community is in an era where a hundred flowers bloom: Llama-3-70B-Instruct, Qwen2-72B-Instruct, Nemotron-4-340B-Instruct, Mixtral-8x22B-Instruct-v0.1 and many other strong models are available. However, compared with proprietary large models represented by GPT-4-Turbo, open models still lag significantly in many areas. Besides general-purpose models, some open models specializing in key domains have been developed, such as DeepSeek-Coder-V2 for programming and mathematics and InternVL for vision-language tasks.

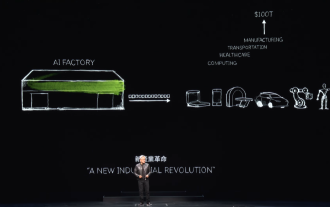

"AI factory" will drive a reshaping of the entire software stack, and NVIDIA provides Llama 3 NIM containers for users to deploy

Jun 08, 2024 pm 07:25 PM

According to a report from this site on June 2, in his keynote at Computex 2024 in Taipei, Jensen Huang said that generative AI will drive a reshaping of the full software stack, and demonstrated NVIDIA's NIM (NVIDIA Inference Microservices) cloud-native microservices. NVIDIA believes the "AI factory" will set off a new industrial revolution: taking the software industry pioneered by Microsoft as an example, Huang argued that generative AI will reshape its entire stack. To make it easier for enterprises of all sizes to deploy AI services, NVIDIA launched NIM cloud-native microservices in March this year. NIM+ is a suite of cloud-native microservices optimized to reduce time to market

Paving the way for PS5 Pro? 'No Man's Sky' update code reveals the console's development codename 'Trinity' and graphics configuration files

Jul 22, 2024 pm 01:10 PM

According to a report from this site on July 22, the foreign outlet twistedvoxel found the rumored PS5 development codename "Trinity" and related graphics configuration files in the code of the latest "Worlds Part I" update for "No Man's Sky", suggesting that Sony is expected to launch the PS5 Pro soon. Although recent updates have already improved the game's graphics, many players believe this may be Hello Games preparing for the new model in advance. According to the latest graphics presets, on PS5 Pro the game's dynamic resolution scaling rises from 0.6 to 0.8, which means a higher average resolution, and some graphical details are upgraded from "High" to "Ultra", but since each game
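To see what raising the scale from 0.6 to 0.8 means in pixels, here is a minimal Python sketch. It assumes the value is a per-axis multiplier applied to a 3840x2160 output; neither the axis convention nor the output resolution is stated in the report, and because the scaling is dynamic, the numbers describe a single operating point rather than a fixed render resolution.

```python
def scaled_resolution(width: int, height: int, scale: float) -> tuple[int, int]:
    """Internal render resolution for a per-axis scale factor (assumed convention)."""
    return round(width * scale), round(height * scale)


def pixel_ratio(old_scale: float, new_scale: float) -> float:
    """With a per-axis factor, pixel count grows with the square of the scale."""
    return (new_scale / old_scale) ** 2


# Assumption: a 4K (3840x2160) output target; the report does not give one.
for s in (0.6, 0.8):
    w, h = scaled_resolution(3840, 2160, s)
    print(f"scale {s}: {w}x{h} = {w * h:,} pixels")

print(f"pixel ratio 0.8 vs 0.6: {pixel_ratio(0.6, 0.8):.2f}x")
```

Under these assumptions, 0.6 renders at 2304x1296 and 0.8 at 3072x1728, roughly 1.78 times as many pixels per frame.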

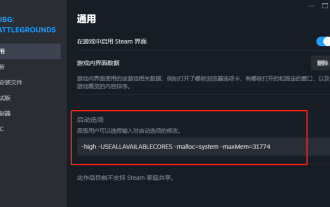

PlayerUnknown's Battlegrounds FPS optimization settings: improving PUBG frame rates

Jun 19, 2024 am 10:35 AM

Optimizing the frame rate of PlayerUnknown's Battlegrounds improves the game's smoothness and performance. Methods:

Update the graphics card driver: make sure the latest graphics driver is installed on your computer. This helps optimize game performance and fix possible compatibility issues.

Lower game settings: set the in-game graphics options to a lower level, for example by reducing resolution, special effects and shadows. This reduces the load on your computer and raises the frame rate.

Close unnecessary background programs: while the game is running, close other unneeded programs and processes to free up system resources and improve game performance.

Free up hard drive space: make sure your hard drive has enough free space. Delete unneeded files and programs, and clear temporary files and the Recycle Bin.

Turn off vertical sync (V-Sync): in game
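The "free up hard drive space" step can be checked with a short script. This is a minimal Python sketch built on the standard library's `shutil.disk_usage`; the 20 GB threshold and the helper names are hypothetical, since the article does not say how much free space counts as "enough".

```python
import shutil

# Hypothetical threshold: the article gives no figure for "enough" free space.
MIN_FREE_GB = 20


def free_space_gb(path: str = ".") -> float:
    """Free space on the drive containing `path`, in GB (1 GB = 10**9 bytes)."""
    usage = shutil.disk_usage(path)  # named tuple: (total, used, free) in bytes
    return usage.free / 10**9


def has_enough_space(path: str = ".", min_free_gb: float = MIN_FREE_GB) -> bool:
    """True if the drive holding `path` has at least `min_free_gb` GB free."""
    return free_space_gb(path) >= min_free_gb


if __name__ == "__main__":
    free = free_space_gb(".")
    status = "OK" if has_enough_space(".") else "consider clearing temporary files"
    print(f"Free space: {free:.1f} GB ({status})")
```

Point `path` at the drive where the game is installed (for example `"D:\\"` on Windows) to check that drive instead of the current one.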

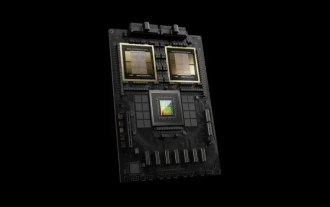

TrendForce: Nvidia's Blackwell platform products drive TSMC's CoWoS production capacity to increase by 150% this year

Apr 17, 2024 pm 08:00 PM

According to a report from this site on April 17, TrendForce recently released a report saying that demand for NVIDIA's new Blackwell platform is strong and is expected to drive TSMC's total CoWoS packaging capacity up by more than 150% in 2024. The new Blackwell platform includes B-series GPUs and the GB200 accelerator, which integrates NVIDIA's own Grace Arm CPU. TrendForce notes that the supply chain is currently very optimistic about GB200: 2025 shipments are expected to exceed one million units, accounting for 40-50% of NVIDIA's high-end GPUs. NVIDIA plans to deliver products such as GB200 and B100 in the second half of the year, but upstream wafer packaging will need to adopt more complex solutions.
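TrendForce's percentages are easier to read with the arithmetic spelled out. This Python sketch assumes the 40-50% share refers to unit shipments; since "more than 150%" and "exceed one million" are lower bounds, the outputs illustrate what those floor figures imply rather than forecast anything. The helper names are hypothetical.

```python
def after_increase(base: float, pct: float) -> float:
    """Value after a percentage increase: base * (1 + pct / 100)."""
    return base * (1 + pct / 100)


def implied_total(units: float, share: float) -> float:
    """Total market size implied when `units` make up `share` (0 to 1) of it."""
    return units / share


# A 150% increase means 2.5x the starting capacity (base normalized to 1.0).
print(f"capacity multiple: {after_increase(1.0, 150):.1f}x")

# 1,000,000 GB200 units at a 40-50% share of high-end GPU shipments.
for share in (0.5, 0.4):
    print(f"{share:.0%} share -> {implied_total(1_000_000, share):,.0f} high-end GPUs")
```

A 150% increase therefore means capacity ends the year at 2.5 times its starting level, and one million units at a 40-50% share implies roughly 2.0 to 2.5 million high-end GPUs in total.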

The computer I assembled for 300 yuan successfully runs a local large model

Apr 12, 2024 am 08:07 AM

If 2023 is recognized as the first year of AI, then 2024 is likely to be a key year for the popularization of large AI models. Over the past year, a large number of large AI models and AI applications have emerged, and companies such as Meta and Google have begun offering their own online and local large models to the public. "Artificial intelligence", once a concept that seemed out of reach, suddenly became accessible to ordinary people. Today people encounter AI more and more in daily life, but if you look carefully, you will find that almost all the AI applications you use are deployed in the "cloud". If you want a device that can run large models locally, the usual answer is a brand-new AI PC priced at more than 5,000 yuan. For ordinary people,

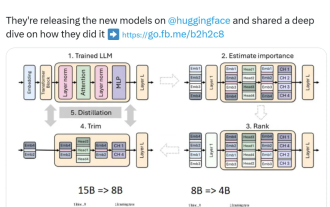

NVIDIA applies pruning and distillation: halving the parameters of Llama 3.1 8B for better performance at the same size

Aug 16, 2024 pm 04:42 PM

The rise of small models. Last month, Meta released the Llama 3.1 series, which includes Meta's largest model to date, the 405B model, and two smaller models with 70 billion and 8 billion parameters respectively. Llama 3.1 is seen as ushering in a new era of open source. However, although the new models are powerful, they still require large amounts of computing resources to deploy. As a result, another trend has emerged in the industry: developing small language models (SLMs) that perform well enough on many language tasks while being very cheap to deploy. Recently, NVIDIA research has shown that structured weight pruning combined with knowledge distillation can progressively derive smaller language models from an initially larger one. Turing Award winner, Meta Chief A
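The two techniques named above can be illustrated with a toy, dependency-free Python sketch. Structured pruning removes whole neurons (rows of a weight matrix) rather than individual weights; here each row's importance is scored by its L2 norm, a simple stand-in and not the criterion NVIDIA's research used. Knowledge distillation then trains the smaller student to match the teacher's temperature-softened output distribution, measured here with the common KL-divergence loss scaled by T². All function names, weights and logits are hypothetical.

```python
import math


def prune_neurons(weights: list[list[float]], keep: int) -> list[int]:
    """Structured pruning sketch: keep the `keep` rows (output neurons) with the
    largest L2 norm; return their indices in original order. L2-norm importance
    is a stand-in criterion, not NVIDIA's exact method."""
    norms = [math.sqrt(sum(w * w for w in row)) for row in weights]
    ranked = sorted(range(len(weights)), key=lambda i: norms[i], reverse=True)
    return sorted(ranked[:keep])


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Temperature-softened softmax; subtracts the max for numerical stability."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits: list[float], student_logits: list[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


# Toy layer with 4 output neurons, pruned down to 2.
layer = [[0.1, 0.2], [1.0, -1.5], [0.05, 0.0], [0.8, 0.9]]
kept = prune_neurons(layer, keep=2)
pruned_layer = [layer[i] for i in kept]
print("kept neurons:", kept, "->", pruned_layer)

# Toy teacher/student logits for a single 3-class prediction.
teacher = [2.0, 1.0, 0.1]
student = [1.5, 1.2, 0.3]
print(f"distillation loss: {distillation_loss(teacher, student):.4f}")
```

In a real pipeline the pruned model would be retrained by minimizing this loss over a dataset, often alongside the ordinary next-token loss; the sketch only shows the two building blocks.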