After Sora, OpenAI's Lilian Weng explains how to design a video generation diffusion model from scratch


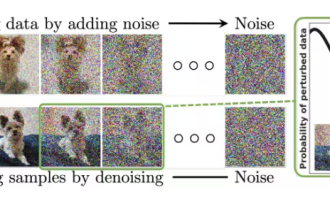

In the past few years, diffusion models have fully demonstrated their powerful image synthesis capabilities. The research community is now tackling a harder task: video generation. Recently, Lilian Weng, head of Safety Systems at OpenAI, wrote a blog post on diffusion models for video generation.

Video generation is itself a superset of image synthesis, because an image is just a single-frame video. Video synthesis is much more difficult for the following reasons:

1. It requires temporal consistency across frames, which naturally demands encoding more world knowledge into the model.

2. Compared with text or images, it is harder to collect large amounts of high-quality, high-dimensional video data, let alone paired text-video data.

To learn about the application of diffusion models to image generation, see the earlier blog post "What are Diffusion Models?" by the same author, Lilian Weng. Link: https://lilianweng.github.io/posts/2021-07-11-diffusion-models/

Modeling video generation from scratch

First, let's look at how to design and train a diffusion video model from scratch, that is, without relying on a pre-trained image generator.

Parameterization and Sampling

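The variable definitions used here differ slightly from the previous post, but the math is the same. Let $\mathbf{x} \sim q_\text{real}$ be a data point sampled from the real data distribution. Adding Gaussian noise in small amounts over time creates a sequence of noisy variants of $\mathbf{x}$, denoted $\{\mathbf{z}_t \mid t = 1, \dots, T\}$, where the noise grows as $t$ increases and finally $q(\mathbf{z}_T) \sim \mathcal{N}(\mathbf{0}, \mathbf{I})$. This noise-adding forward process is a Gaussian process. Let $\alpha_t$ and $\sigma_t$ define a differentiable noise schedule of this Gaussian process:

$$
q(\mathbf{z}_t \mid \mathbf{x}) = \mathcal{N}(\mathbf{z}_t; \alpha_t \mathbf{x}, \sigma_t^2 \mathbf{I})
$$

To represent $q(\mathbf{z}_t \mid \mathbf{z}_s)$ for $0 \le s < t \le T$, we have:

$$
\begin{aligned}
\mathbf{z}_t &= \alpha_t \mathbf{x} + \sigma_t \boldsymbol{\epsilon}_t \\
\mathbf{z}_s &= \alpha_s \mathbf{x} + \sigma_s \boldsymbol{\epsilon}_s \\
q(\mathbf{z}_t \mid \mathbf{z}_s) &= \mathcal{N}\Big(\mathbf{z}_t; \frac{\alpha_t}{\alpha_s}\mathbf{z}_s, \Big(1 - \frac{\alpha_t^2 \sigma_s^2}{\sigma_t^2 \alpha_s^2}\Big)\sigma_t^2 \mathbf{I}\Big)
\end{aligned}
$$

Let the log signal-to-noise ratio be $\lambda_t = \log[\alpha_t^2 / \sigma_t^2]$; then the DDIM update can be expressed as:

$$
q(\mathbf{z}_t \mid \mathbf{z}_s) = \mathcal{N}\Big(\mathbf{z}_t; \frac{\alpha_t}{\alpha_s}\mathbf{z}_s, \sigma_{t \mid s}^2 \mathbf{I}\Big), \quad \text{where } \sigma_{t \mid s}^2 = (1 - e^{\lambda_t - \lambda_s})\,\sigma_t^2
$$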
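As a minimal sketch of the forward process, the snippet below draws $\mathbf{z}_t$ from $q(\mathbf{z}_t \mid \mathbf{x})$ and evaluates the transition variance $\sigma_{t \mid s}^2 = (1 - e^{\lambda_t - \lambda_s})\sigma_t^2$ from the log-SNR. The cosine schedule, the continuous time range (0, 1), and all function names are assumptions made for illustration; the article does not prescribe a particular schedule.

```python
import numpy as np

def cosine_schedule(t):
    """Assumed variance-preserving schedule on t in (0, 1): alpha_t^2 + sigma_t^2 = 1."""
    return np.cos(0.5 * np.pi * t), np.sin(0.5 * np.pi * t)

def q_sample(x, t, rng):
    """Draw z_t ~ q(z_t | x) = N(alpha_t * x, sigma_t^2 * I)."""
    alpha_t, sigma_t = cosine_schedule(t)
    return alpha_t * x + sigma_t * rng.standard_normal(x.shape)

def log_snr(t):
    """lambda_t = log(alpha_t^2 / sigma_t^2)."""
    alpha_t, sigma_t = cosine_schedule(t)
    return np.log(alpha_t**2 / sigma_t**2)

def transition_variance(s, t):
    """sigma_{t|s}^2 = (1 - exp(lambda_t - lambda_s)) * sigma_t^2, for s < t."""
    _, sigma_t = cosine_schedule(t)
    return (1.0 - np.exp(log_snr(t) - log_snr(s))) * sigma_t**2

rng = np.random.default_rng(0)
x = rng.standard_normal((4, 8, 8, 3))  # toy video: frames x height x width x channels
z_t = q_sample(x, t=0.6, rng=rng)
var_ts = transition_variance(s=0.3, t=0.6)
```

The log-SNR form of the variance agrees with the direct form $\sigma_t^2 - (\alpha_t / \alpha_s)^2 \sigma_s^2$, which is a quick way to sanity-check a schedule implementation.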

Salimans & Ho (2022), in the paper "Progressive Distillation for Fast Sampling of Diffusion Models", proposed a special prediction parameterization: $\mathbf{v} = \alpha_t \boldsymbol{\epsilon} - \sigma_t \mathbf{x}$. Research shows that the $\mathbf{v}$-parameterization helps avoid color shift in video generation compared to the $\boldsymbol{\epsilon}$-parameterization.

The $\mathbf{v}$-parameterization is derived via a trick in angular coordinates. First, define $\phi_t = \arctan(\sigma_t / \alpha_t)$, from which we get $\alpha_\phi = \cos\phi$, $\sigma_\phi = \sin\phi$, and $\mathbf{z}_\phi = \cos\phi\,\mathbf{x} + \sin\phi\,\boldsymbol{\epsilon}$. The velocity of $\mathbf{z}_\phi$ can be written as:

$$
\mathbf{v}_\phi = \nabla_\phi \mathbf{z}_\phi = \frac{d\cos\phi}{d\phi}\mathbf{x} + \frac{d\sin\phi}{d\phi}\boldsymbol{\epsilon} = \cos\phi\,\boldsymbol{\epsilon} - \sin\phi\,\mathbf{x}
$$

For the model, the $\mathbf{v}$-parameterization means predicting $\mathbf{v}_\phi = \cos\phi\,\boldsymbol{\epsilon} - \sin\phi\,\mathbf{x} = \alpha_t \boldsymbol{\epsilon} - \sigma_t \mathbf{x}$.
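The following sketch illustrates why predicting the velocity is sufficient: with $\alpha = \cos\phi$ and $\sigma = \sin\phi$, both the clean data and the noise follow algebraically from $\mathbf{z}_\phi$ and $\mathbf{v}_\phi$. The array shapes and the angle value are arbitrary choices for illustration, and the true velocity stands in for a model prediction.

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.standard_normal((2, 4, 4, 3))    # clean data (toy shape)
eps = rng.standard_normal(x.shape)       # Gaussian noise

phi = 0.7                                # angle phi_t = arctan(sigma_t / alpha_t)
alpha, sigma = np.cos(phi), np.sin(phi)  # so alpha^2 + sigma^2 = 1

z = alpha * x + sigma * eps              # noisy latent z_phi
v = alpha * eps - sigma * x              # velocity target v_phi = d z_phi / d phi

# Given z and (a prediction of) v, both x and eps can be recovered:
x_rec = alpha * z - sigma * v
eps_rec = sigma * z + alpha * v
```

Expanding `alpha * z - sigma * v` gives `(alpha**2 + sigma**2) * x`, so the recovery is exact whenever the schedule is variance-preserving.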

For video generation tasks, the diffusion model needs to run multiple upsampling steps to extend the video length or increase the frame rate. This requires the ability to sample a second video $\mathbf{x}^b$ conditioned on a first video $\mathbf{x}^a$, $\mathbf{x}^b \sim p_\theta(\mathbf{x}^b \mid \mathbf{x}^a)$, where $\mathbf{x}^b$ may be an autoregressive extension of $\mathbf{x}^a$ or the missing frames in between for a low-frame-rate video $\mathbf{x}^a$.

Besides its own noisy variable, the sampling of $\mathbf{x}^b$ must also be conditioned on $\mathbf{x}^a$. Video Diffusion Models (VDM; Ho & Salimans et al., 2022) propose a reconstruction guidance method that uses an adjusted denoising model so that the sampling of $\mathbf{x}^b$ is properly conditioned on $\mathbf{x}^a$:

$$
\begin{aligned}
\mathbb{E}_q[\mathbf{x}^b \mid \mathbf{z}_t, \mathbf{x}^a] &= \mathbb{E}_q[\mathbf{x}^b \mid \mathbf{z}_t] + \frac{\sigma_t^2}{\alpha_t} \nabla_{\mathbf{z}_t^b} \log q(\mathbf{x}^a \mid \mathbf{z}_t) \\
q(\mathbf{x}^a \mid \mathbf{z}_t) &\approx \mathcal{N}\Big[\hat{\mathbf{x}}^a_\theta(\mathbf{z}_t), \frac{\sigma_t^2}{\alpha_t^2}\mathbf{I}\Big] \\
\tilde{\mathbf{x}}^b_\theta(\mathbf{z}_t) &= \hat{\mathbf{x}}^b_\theta(\mathbf{z}_t) - \frac{w_r \alpha_t}{2} \nabla_{\mathbf{z}_t^b} \big\| \mathbf{x}^a - \hat{\mathbf{x}}^a_\theta(\mathbf{z}_t) \big\|_2^2
\end{aligned}
$$

where $\hat{\mathbf{x}}^a_\theta(\mathbf{z}_t)$ and $\hat{\mathbf{x}}^b_\theta(\mathbf{z}_t)$ are the reconstructions of $\mathbf{x}^a$ and $\mathbf{x}^b$ provided by the denoising model, and $w_r$ is a weighting factor; a large $w_r > 1$ was found to improve sample quality. Note that the same reconstruction guidance method can also condition on low-resolution videos to extend samples to high resolution.
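To make the guidance step concrete, here is a toy sketch of the adjusted estimate $\tilde{\mathbf{x}}^b = \hat{\mathbf{x}}^b - \frac{w_r \alpha_t}{2} \nabla_{\mathbf{z}^b} \|\mathbf{x}^a - \hat{\mathbf{x}}^a\|_2^2$. The linear "denoiser" and its frame-mixing matrix `A` are hypothetical stand-ins chosen so the gradient has a closed form; a real model would obtain this gradient by automatic differentiation through the network.

```python
import numpy as np

def denoise(z, A, alpha_t):
    """Hypothetical linear denoiser: x_hat = (A @ z) / alpha_t, with z of shape (frames, dim)."""
    return (A @ z) / alpha_t

def reconstruction_guidance(z, x_a, A, alpha_t, w_r):
    """Return the adjusted estimate of x^b and the gradient used to build it."""
    n_a = x_a.shape[0]                       # the first n_a frames are the known video x^a
    x_hat = denoise(z, A, alpha_t)
    x_hat_a, x_hat_b = x_hat[:n_a], x_hat[n_a:]
    # Gradient of ||x_a - x_hat_a||^2 w.r.t. z^b, analytic for the linear toy model.
    grad = -2.0 * A[:n_a, n_a:].T @ (x_a - x_hat_a) / alpha_t
    x_tilde_b = x_hat_b - 0.5 * w_r * alpha_t * grad
    return x_tilde_b, grad

rng = np.random.default_rng(0)
n, n_a, dim = 5, 2, 4
A = 0.6 * np.eye(n) + 0.4 / n                # each frame mixes in a bit of every other frame
alpha_t, w_r = 0.8, 2.0
z = rng.standard_normal((n, dim))            # noisy latents for all frames (a first, then b)
x_a = rng.standard_normal((n_a, dim))        # known conditioning frames
x_tilde_b, grad = reconstruction_guidance(z, x_a, A, alpha_t, w_r)
```

The correction only has an effect because the denoiser's reconstruction of $\mathbf{x}^a$ depends on $\mathbf{z}^b$; if frames were denoised independently, the gradient would be zero.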

Model architecture: 3D U-Net and DiT


VDM uses the standard diffusion model setup but modifies the architecture to make it suitable for video modeling. It extends the 2D U-net to work on 3D data, where each feature map is a 4D tensor of frames x height x width x channels. This 3D U-net is factorized over space and time, meaning each layer operates on either the space dimensions or the time dimension, but not both.

Processing space: Each 2D convolution layer from the original 2D U-net is extended to a space-only 3D convolution; specifically, each 3x3 convolution becomes a 1x3x3 convolution. Each spatial attention block still attends over space only, with the first axis (frames) treated as the batch dimension.

Processing time: A temporal attention block is added after each spatial attention block. It attends over the first axis (frames) and treats the spatial axes as the batch dimension. Relative position embeddings are used to track the order of frames. This temporal attention block is what lets the model achieve good temporal consistency.
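The space/time factorization comes down to which axis is treated as the batch. The sketch below shows the two reshapes on a frames x height x width x channels tensor. It is a simplification under stated assumptions: single-head attention with no learned projections and no relative position embeddings, and the function names are made up for illustration.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(tokens):
    """Single-head self-attention without learned projections. tokens: (batch, length, channels)."""
    d = tokens.shape[-1]
    scores = tokens @ tokens.transpose(0, 2, 1) / np.sqrt(d)
    return softmax(scores) @ tokens

def spatial_attention(video):
    """video: (frames, height, width, channels). Frames act as the batch axis."""
    f, h, w, c = video.shape
    out = self_attention(video.reshape(f, h * w, c))
    return out.reshape(f, h, w, c)

def temporal_attention(video):
    """Spatial positions act as the batch axis; attention runs over frames."""
    f, h, w, c = video.shape
    tokens = video.reshape(f, h * w, c).transpose(1, 0, 2)   # (h*w, frames, channels)
    out = self_attention(tokens)
    return out.transpose(1, 0, 2).reshape(f, h, w, c)

rng = np.random.default_rng(0)
video = rng.standard_normal((4, 2, 3, 8))
out = temporal_attention(spatial_attention(video))
```

Because frames are a batch axis in the spatial block, changing one frame cannot affect another frame's spatial output; information only crosses frames in the temporal block.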

Figure 2: The 3D U-net architecture. The inputs to the network are the noisy video $\mathbf{z}_t$, the conditioning information $\mathbf{c}$, and the log signal-to-noise ratio (log-SNR) $\lambda_t$. The channel multipliers $M_1, \dots, M_K$ represent the channel counts across layers.

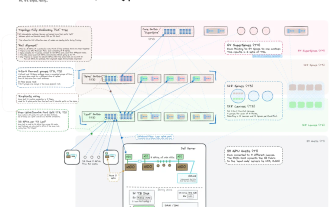

Imagen Video (Ho et al., 2022) is built on a cascade of diffusion models and consists of the following components, 7 diffusion models in total:

- A frozen T5 text encoder that provides text embeddings as conditioning input.

- A base video diffusion model.

- A cascade of interleaved spatial and temporal super-resolution diffusion models, containing 3 TSR (temporal super-resolution) and 3 SSR (spatial super-resolution) components.

Figure 3: Imagen Video's cascaded sampling pipeline. In practice, text embeddings are injected into all components, not just the base model.

The base denoising model performs spatial operations over all frames simultaneously with shared parameters, and then the temporal layers mix activations across frames to better achieve temporal consistency; this approach has been found to work better than frame-autoregressive approaches.

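Figure 4: Architecture of one space-time separable block in the Imagen Video diffusion model.

Both the SSR and TSR models are conditioned on the upsampled input, concatenated channel-wise with the noisy data $\mathbf{z}_t$. SSR upsamples by bilinear resizing, while TSR upsamples by repeating frames or filling in blank frames.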
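A rough sketch of how a TSR conditioning input could be assembled: the low-frame-rate video is upsampled in time either by repeating frames or by inserting blank frames, then concatenated with the noisy data along the channel axis. The frames x height x width x channels layout, the use of zeros for blank frames, and the function names are assumptions; bilinear resizing for SSR is omitted.

```python
import numpy as np

def tsr_upsample_repeat(video, factor):
    """Repeat each frame `factor` times along the time axis."""
    return np.repeat(video, factor, axis=0)

def tsr_upsample_blank(video, factor):
    """Place the known frames every `factor` steps and fill the rest with zeros."""
    f = video.shape[0]
    out = np.zeros((f * factor,) + video.shape[1:], dtype=video.dtype)
    out[::factor] = video
    return out

def conditioning_input(z_t, upsampled):
    """Concatenate noisy data z_t and the upsampled conditioning video channel-wise."""
    return np.concatenate([z_t, upsampled], axis=-1)

rng = np.random.default_rng(0)
low = rng.standard_normal((4, 8, 8, 3))    # low-frame-rate video
z_t = rng.standard_normal((8, 8, 8, 3))    # noisy video at the target frame rate
rep = tsr_upsample_repeat(low, 2)
blank = tsr_upsample_blank(low, 2)
cond = conditioning_input(z_t, rep)
```

Either way the denoiser sees a tensor with twice the channel count, so only its first layer needs to change to accept the conditioning signal.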

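Imagen Video also applies progressive distillation to speed up sampling; each distillation iteration halves the number of required sampling steps. In experiments, they were able to distill all 7 video diffusion models down to just 8 sampling steps per model without any noticeable loss in perceptual quality.

To scale up the model more effectively, Sora adopts the DiT (diffusion transformer) architecture, which operates on spacetime patches of video and image latent codes. Visual input is represented as a sequence of spacetime patches, which serve as the transformer's input tokens.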
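The idea of spacetime patches can be sketched as a pure reshape: a latent video is cut into small blocks spanning a few frames and a small spatial window, and each block is flattened into one token. Sora's actual patch sizes and latent layout are not given in the article, so the values and the function name below are hypothetical.

```python
import numpy as np

def to_spacetime_patches(latent, pt, ph, pw):
    """latent: (frames, height, width, channels) -> (num_tokens, pt * ph * pw * channels)."""
    f, h, w, c = latent.shape
    assert f % pt == 0 and h % ph == 0 and w % pw == 0
    x = latent.reshape(f // pt, pt, h // ph, ph, w // pw, pw, c)
    x = x.transpose(0, 2, 4, 1, 3, 5, 6)   # patch-grid axes first, within-patch axes last
    return x.reshape(-1, pt * ph * pw * c)

latent = np.arange(8 * 16 * 16 * 4, dtype=np.float32).reshape(8, 16, 16, 4)
tokens = to_spacetime_patches(latent, pt=2, ph=4, pw=4)
```

Here an 8-frame 16x16 latent with 4 channels becomes 64 tokens of length 128, and the token count scales with duration and resolution, which is what lets a transformer handle variable-size inputs.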

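Figure 5: Sora is a diffusion transformer model.

Adapting image models to generate videos

Another prominent approach to diffusion video modeling is to "inflate" a pre-trained text-to-image diffusion model by inserting temporal layers; one can then choose to fine-tune only the new layers on video data, or avoid extra training altogether. The new model inherits the prior knowledge of text-image pairs, which helps alleviate the need for text-video pair data.

Fine-tuning on video data

Make-A-Video (Singer et al., 2022) extends a pre-trained diffusion image model with a temporal dimension and consists of three key components:

1. A base text-to-image model trained on text-image pair data.

2. Spatiotemporal convolution and attention layers that extend the network to cover the temporal dimension.

3. A frame interpolation network for high frame rate generation.

Figure 6: Illustration of the Make-A-Video pipeline.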

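The final video inference scheme can be written as:

$$
\hat{\mathbf{y}}_t = \text{SR}_h \circ \text{SR}^t_l \circ \uparrow_F \circ D^t \circ P \circ (\hat{\mathbf{x}}, \text{CLIP}_\text{text}(\mathbf{x}))
$$

where:

- $\mathbf{x}$ is the input text.

- $\hat{\mathbf{x}}$ is the BPE-encoded text.

- $\text{CLIP}_\text{text}(\cdot)$ is the CLIP text encoder, $\mathbf{x}_e = \text{CLIP}_\text{text}(\mathbf{x})$.

- $P(\cdot)$ is the prior, which generates the image embedding $\mathbf{y}_e$ given the text embedding $\mathbf{x}_e$ and the BPE-encoded text $\hat{\mathbf{x}}$: $\mathbf{y}_e = P(\mathbf{x}_e, \hat{\mathbf{x}})$. This part is trained on text-image pair data and is not fine-tuned on video data.

- $D^t(\cdot)$ is the spatiotemporal decoder, which generates a series of 16 video frames, where each frame is a low-resolution 64x64 RGB image $\hat{\mathbf{y}}_l$.

- $\uparrow_F(\cdot)$ is the frame interpolation network, which raises the effective frame rate by interpolating between generated frames. It is a fine-tuned model for predicting masked frames in the video upsampling task.

- $\text{SR}_h(\cdot)$ and $\text{SR}^t_l(\cdot)$ are the spatial and spatiotemporal super-resolution models, which increase the image resolution to 256x256 and 768x768, respectively.

- $\hat{\mathbf{y}}_t$ is the final generated video.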

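The spatiotemporal super-resolution layers contain pseudo-3D convolution layers and pseudo-3D attention layers:

Pseudo-3D convolution layer: each spatial 2D convolution layer (initialized from the pre-trained image model) is followed by a temporal 1D layer (initialized as the identity function). Conceptually, the 2D convolution layer first generates multiple frames, and these frames are then reshaped into a video clip.

Pseudo-3D attention layer: a temporal attention layer is stacked after each (pre-trained) spatial attention layer, approximating a full spatiotemporal attention layer.

Figure 7: How the pseudo-3D convolution and attention layers work.

They can be expressed as:

$$
\begin{aligned}
\text{Conv}_\text{P3D}(\mathbf{h}) &= \text{Conv}_\text{1D}(\text{Conv}_\text{2D}(\mathbf{h}) \circ T) \circ T \\
\text{Attn}_\text{P3D}(\mathbf{h}) &= \text{flatten}^{-1}(\text{Attn}_\text{1D}(\text{Attn}_\text{2D}(\text{flatten}(\mathbf{h})) \circ T) \circ T)
\end{aligned}
$$

where the input tensor is $\mathbf{h} \in \mathbb{R}^{B \times C \times F \times H \times W}$ (batch size, channels, frames, height, and width); $\circ T$ swaps the temporal and spatial dimensions; $\text{flatten}(\cdot)$ is a matrix operator that converts $\mathbf{h}$ into $\mathbf{h}' \in \mathbb{R}^{B \times C \times F \times HW}$, and $\text{flatten}^{-1}(\cdot)$ reverses that process.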
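The point of initializing the temporal 1D layer as the identity can be checked numerically: right after insertion, the pseudo-3D layer reproduces the output of the pre-trained 2D layer frame by frame. This sketch uses depthwise kernels and direct axis indexing in place of the explicit transposes, both simplifying assumptions; the function names are illustrative only.

```python
import numpy as np

def spatial_conv2d(h, kernel):
    """Stand-in for a pre-trained 2D conv: one 3x3 depthwise filter applied to every frame.
    h: (B, C, F, H, W); zero padding keeps H and W unchanged."""
    H, W = h.shape[-2:]
    padded = np.pad(h, ((0, 0), (0, 0), (0, 0), (1, 1), (1, 1)))
    out = np.zeros_like(h)
    for i in range(3):
        for j in range(3):
            out += kernel[i, j] * padded[..., i:i + H, j:j + W]
    return out

def temporal_conv1d(h, kernel):
    """Depthwise 1D conv over the frame axis with a length-3 kernel and zero padding."""
    F = h.shape[2]
    padded = np.pad(h, ((0, 0), (0, 0), (1, 1), (0, 0), (0, 0)))
    return sum(kernel[k] * padded[:, :, k:k + F] for k in range(3))

def conv_p3d(h, k2d, k1d):
    """Pseudo-3D convolution: spatial 2D conv followed by temporal 1D conv."""
    return temporal_conv1d(spatial_conv2d(h, k2d), k1d)

identity_1d = np.array([0.0, 1.0, 0.0])     # identity initialization of the temporal layer

rng = np.random.default_rng(0)
h = rng.standard_normal((1, 2, 4, 5, 5))    # (B, C, F, H, W)
k2d = rng.standard_normal((3, 3))
out_identity = conv_p3d(h, k2d, identity_1d)
out_blur = conv_p3d(h, k2d, np.array([0.25, 0.5, 0.25]))
```

Starting from the identity means fine-tuning on video begins exactly at the image model's behavior and only gradually learns to mix information across frames.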

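During training, the components of the Make-A-Video pipeline are trained separately.

1. The decoder $D^t$, the prior $P$, and the two super-resolution components $\text{SR}_h$ and $\text{SR}^t_l$ are first trained on images alone, without paired text.

2. Next, new temporal layers are added, initialized as the identity function, and then fine-tuned on unlabeled video data.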

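Tune-A-Video (Wu et al., 2023) inflates a pre-trained image diffusion model to enable one-shot video tuning: given a video of $m$ frames, $\mathcal{V} = \{v_i \mid i = 1, \dots, m\}$, paired with a descriptive prompt $\tau$, the task is to generate a new video $\mathcal{V}^*$ based on a slightly edited and related text prompt $\tau^*$. For example, $\tau$ = "A man is skiing" can be extended to $\tau^*$ = "Spiderman is skiing on the beach". Tune-A-Video is intended for object editing, background change, and style transfer.

Besides inflating the 2D convolution layers, the U-Net architecture of Tune-A-Video incorporates ST-Attention (spatio-temporal attention) blocks, which achieve temporal consistency by querying relevant positions in previous frames. Given the latent features of frame $v_i$, the previous frame $v_{i-1}$, and the first frame $v_1$, projected to query $\mathbf{Q}$, key $\mathbf{K}$, and value $\mathbf{V}$, ST-Attention is defined as:

$$
\begin{aligned}
&\mathbf{Q} = \mathbf{W}^Q \mathbf{z}_{v_i}, \quad \mathbf{K} = \mathbf{W}^K [\mathbf{z}_{v_1}, \mathbf{z}_{v_{i-1}}], \quad \mathbf{V} = \mathbf{W}^V [\mathbf{z}_{v_1}, \mathbf{z}_{v_{i-1}}] \\
&\mathbf{O} = \text{softmax}\Big(\frac{\mathbf{Q}\mathbf{K}^\top}{\sqrt{d}}\Big) \cdot \mathbf{V}
\end{aligned}
$$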
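A minimal single-head sketch of this attention pattern: queries come from the current frame, while keys and values come from the concatenation of the first frame and the previous frame. The row-vector convention (`z @ W` rather than `W z`), the token and feature sizes, and the random weights are assumptions for illustration.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def st_attention(z_i, z_prev, z_first, W_q, W_k, W_v):
    """z_*: (tokens, dim) latent features of single frames."""
    q = z_i @ W_q
    context = np.concatenate([z_first, z_prev], axis=0)   # [z_{v_1}, z_{v_{i-1}}]
    k, v = context @ W_k, context @ W_v
    scores = q @ k.T / np.sqrt(q.shape[-1])
    return softmax(scores) @ v

rng = np.random.default_rng(0)
tokens, dim = 6, 8
W_q, W_k, W_v = (rng.standard_normal((dim, dim)) for _ in range(3))
z_first, z_prev, z_i = (rng.standard_normal((tokens, dim)) for _ in range(3))
out = st_attention(z_i, z_prev, z_first, W_q, W_k, W_v)
```

Each frame attends to only two other frames, so the cost stays linear in the number of frames instead of quadratic as with full spatio-temporal attention.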

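Figure 8: Overview of the Tune-A-Video architecture. Before the sampling stage, it first runs a lightweight fine-tuning stage on a single video. Note that the entire temporal self-attention (T-Attn) layers are fine-tuned because they are newly added, but only the query projections in ST-Attn and Cross-Attn are updated during fine-tuning, to preserve the prior text-to-image knowledge. ST-Attn improves spatio-temporal consistency, and Cross-Attn refines text-video alignment.

Gen-1 (Esser et al., 2023), from Runway, targets the task of editing a given video according to text input. It treats the structure and content of a video separately when conditioning generation: $p(\mathbf{x} \mid s, c)$. However, cleanly separating these two aspects is not easy.

Content $c$ refers to the appearance and semantics of the video, which is sampled from the text for conditional editing. CLIP embeddings of the video frames represent content well and stay largely orthogonal to structure traits.

Structure $s$ describes geometry and dynamics, including the shapes, locations, and temporal changes of objects; $s$ is sampled from the input video. Depth estimation or other task-specific side information (e.g., human body pose or face landmark information for human video synthesis) can be used.

The architectural changes in Gen-1 are quite standard: a 1D temporal convolution layer is added after each 2D spatial convolution layer in its residual blocks, and a 1D temporal attention block is added after each 2D spatial attention block in its attention blocks. During training, the structure variable $s$ is concatenated with the diffusion latent variable $\mathbf{z}$, while the content variable $c$ is provided in the cross-attention layers. At inference time, the CLIP embedding is converted via a prior from a CLIP text embedding into a CLIP image embedding.

Figure 9: Overview of the training pipeline of the Gen-1 model.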

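Video LDM (Blattmann et al., 2023) first trains an LDM (latent diffusion model) image generator. The model is then fine-tuned to produce videos with an added temporal dimension. Fine-tuning applies only to the newly added temporal layers, which operate on encoded image sequences. The temporal layers $\{l^i_\phi \mid i = 1, \dots, L\}$ in Video LDM (see Figure 10) are interleaved with the existing spatial layers $l^i_\theta$, which stay frozen during fine-tuning. In other words, only the new parameters $\phi$ are fine-tuned, not the pre-trained image backbone parameters $\theta$. The Video LDM pipeline first generates keyframes at a low frame rate and then raises the frame rate through a 2-step latent frame interpolation process.

An input sequence of length $T$ is interpreted as a batch of images (i.e., $B \cdot T$) for the base image model $\theta$ and then reshaped into video format for the $l^i_\phi$ temporal layers. A skip connection combines the temporal layer output $\mathbf{z}'$ with the spatial output $\mathbf{z}$ via a learned merging parameter $\alpha$. In practice, two kinds of temporal mixing layers are implemented: (1) temporal attention and (2) residual blocks based on 3D convolutions.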
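The reshape-and-merge step can be sketched as follows. The convex combination `alpha * z + (1 - alpha) * z'` is an assumed form of the learned merge (the text above only says the two outputs are combined via $\alpha$), and the frame-averaging `frame_mean` function is a hypothetical stand-in for a real temporal attention or 3D convolution layer.

```python
import numpy as np

def temporal_mix(z_spatial, temporal_layer, alpha, batch, frames):
    """z_spatial: (batch * frames, C, H, W), the output of a frozen spatial layer."""
    bt, c, h, w = z_spatial.shape
    assert bt == batch * frames
    # (b t) c h w -> b c t h w: video format for the temporal layer
    video = z_spatial.reshape(batch, frames, c, h, w).transpose(0, 2, 1, 3, 4)
    video_out = temporal_layer(video)
    # b c t h w -> (b t) c h w: back to a batch of images
    z_temporal = video_out.transpose(0, 2, 1, 3, 4).reshape(bt, c, h, w)
    return alpha * z_spatial + (1.0 - alpha) * z_temporal

def frame_mean(video):
    """Toy temporal layer: replace every frame with the mean over frames."""
    return np.broadcast_to(video.mean(axis=2, keepdims=True), video.shape)

rng = np.random.default_rng(0)
batch, frames = 2, 3
z_spatial = rng.standard_normal((batch * frames, 4, 5, 5))
out_image_only = temporal_mix(z_spatial, frame_mean, alpha=1.0, batch=batch, frames=frames)
out_temporal = temporal_mix(z_spatial, frame_mean, alpha=0.0, batch=batch, frames=frames)
```

With `alpha = 1.0` the temporal branch is skipped entirely, so the network behaves exactly like the frozen image model; lowering `alpha` blends in more cross-frame information.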

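Figure 10: A pre-trained LDM for image synthesis is extended into a video generator. $B$, $T$, $C$, $H$, $W$ are the batch size, sequence length, number of channels, height, and width, respectively. $\mathbf{c}_S$ is an optional conditioning/context frame.

However, the pre-trained autoencoder of the LDM still has a problem: it has only ever seen images, never videos. Using it directly to generate video produces flickering artifacts and poor temporal consistency. Video LDM therefore adds extra temporal layers to the decoder and fine-tunes it on video data with a patch-wise temporal discriminator built from 3D convolutions, while the encoder remains unchanged so that the pre-trained LDM can still be reused. During temporal decoder fine-tuning, the frozen encoder processes each video frame independently, and a video-aware discriminator enforces temporally consistent reconstructions across frames.

Figure 11: The training pipeline of the autoencoder in Video LDM. The decoder is fine-tuned to achieve temporal consistency using a new cross-frame discriminator, while the encoder stays frozen.

Similar to Video LDM, the architecture of Stable Video Diffusion (SVD; Blattmann et al., 2023) is also based on LDM, with temporal layers inserted after every spatial convolution and attention layer, but SVD fine-tunes the entire model. Training a video LDM has three stages:

1. Text-to-image pre-training is important; it improves both quality and prompt following.

2. Video pre-training is best done as a separate stage, ideally on a larger, curated dataset.

3. High-quality video fine-tuning uses a smaller set of captioned videos with high visual fidelity.

SVD specifically emphasizes the critical role of dataset curation in model performance. The authors applied a cut detection pipeline to obtain more clips per video and then applied three different captioning models: (1) CoCa for the mid-frame, (2) V-BLIP for a video caption, and (3) an LLM-based caption built from the previous two. They further improved the video dataset by removing clips with little motion (filtered by low optical flow scores computed at 2 fps), clips with excessive text (using optical character recognition to identify videos with a lot of text), and clips of low aesthetic value (annotating the first, middle, and last frames of each clip with CLIP embeddings and computing aesthetic scores and text-image similarities). Experiments showed that a filtered, higher-quality dataset yields better model quality, even when that dataset is much smaller.

For methods that first generate distant keyframes and then interpolate with temporal super-resolution, the key challenge is maintaining high-quality temporal consistency. Lumiere (Bar-Tal et al., 2024) instead adopts a space-time U-Net (STUNet) architecture that generates the entire temporal duration of the video at once in a single pass, removing the dependency on TSR (temporal super-resolution) components. STUNet downsamples the video in both the temporal and spatial dimensions, so the expensive computation happens in a compact space-time latent space.

Figure 12: Lumiere removes the need for TSR (temporal super-resolution) models. Due to memory constraints, the inflated SSR network can only operate on short segments of the video, so the SSR models operate on a set of shorter but overlapping video snippets.

STUNet inflates a pre-trained text-to-image U-Net so that it can downsample and upsample the video in both the temporal and spatial dimensions. Convolution-based blocks consist of pre-trained text-to-image layers followed by a factorized space-time convolution. Attention-based blocks at the coarsest U-Net level contain the pre-trained text-to-image blocks followed by temporal attention. Only the newly added layers are trained further.

Figure 13: Architecture diagram.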

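Training-free adaptation

Somewhat surprisingly, it is also possible to have a pre-trained text-to-image model output videos without any training.

If we naively sample a sequence of latent codes at random and build a video from the decoded images, there is no guarantee of temporal consistency in objects and semantics. Text2Video-Zero (Khachatryan et al., 2023) enables zero-shot, training-free video generation by equipping a pre-trained image diffusion model with two key mechanisms for temporal consistency:

1. Sampling the sequence of latent codes with motion dynamics, to keep the global scene and background temporally consistent.

2. Reprogramming frame-level self-attention with a new cross-frame attention (each frame attends to the first frame), to preserve the context, appearance, and identity of foreground objects.

Figure 14: Overview of the Text2Video-Zero pipeline.

The process of sampling a sequence of latent variables with motion information is described mathematically below: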

1. Define a direction $\boldsymbol{\delta} = (\delta_x, \delta_y) \in \mathbb{R}^2$ to control the global scene and camera motion; by default, set $\boldsymbol{\delta} = (1, 1)$. Define another hyperparameter $\lambda > 0$ to control the amount of global motion.

2. First randomly sample the latent code of the first frame, $\mathbf{x}^1_T \sim \mathcal{N}(\mathbf{0}, \mathbf{I})$.

3. Perform $\Delta t \ge 0$ DDIM backward update steps using a pre-trained image diffusion model (such as the Stable Diffusion (SD) model in the paper) to obtain the corresponding latent code $\mathbf{x}^1_{T'}$, where $T' = T - \Delta t$.

4. For each frame in the latent code sequence, apply the corresponding motion translation with a warping operation defined by $\boldsymbol{\delta}^k = \lambda(k-1)\boldsymbol{\delta}$ to obtain $\tilde{\mathbf{x}}^k_{T'}$.

5. Finally, apply DDIM forward steps to all $\tilde{\mathbf{x}}^{2:m}_{T'}$ to obtain $\mathbf{x}^{2:m}_T$.
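Step 4 of this procedure can be sketched in isolation. The snippet below translates the first frame's latent by $\boldsymbol{\delta}^k = \lambda(k-1)\boldsymbol{\delta}$ for each frame $k$. It assumes a channels x height x width latent and uses an integer circular shift as a simplified stand-in for the warping operation; the DDIM backward and forward steps are omitted, and the function names are illustrative.

```python
import numpy as np

def warp_translate(latent, shift):
    """Simplified warp: integer circular shift by (dx, dy) over the last two axes."""
    dx, dy = shift
    return np.roll(latent, shift=(dy, dx), axis=(-2, -1))

def motion_latents(x1, m, delta=(1, 1), lam=1.0):
    """Return m latents; frame k is x1 translated by delta^k = lam * (k - 1) * delta."""
    frames = []
    for k in range(1, m + 1):
        dx = int(round(lam * (k - 1) * delta[0]))
        dy = int(round(lam * (k - 1) * delta[1]))
        frames.append(warp_translate(x1, (dx, dy)))
    return np.stack(frames)

rng = np.random.default_rng(0)
x1 = rng.standard_normal((4, 8, 8))   # latent of the first frame: channels x height x width
latents = motion_latents(x1, m=5)
```

Because every frame starts from the same latent and differs only by a growing translation, the decoded frames share global structure, which is what keeps the background consistent.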

Additionally, Text2Video-Zero replaces the self-attention layers in the pre-trained SD model with a new cross-frame attention mechanism that references the first frame. The goal is to preserve the context, appearance, and identity of foreground objects throughout the generated video.

Optionally, a background mask can be used to smooth the video background and further improve its consistency. Suppose we have obtained the foreground mask $\mathbf{M}^k$ of the $k$-th frame using some existing method; background smoothing then merges the actual latent code and the warped latent code at diffusion step $t$ with respect to the background mask:

$$
\bar{\mathbf{x}}^k_t = \mathbf{M}^k \odot \mathbf{x}^k_t + (1 - \mathbf{M}^k) \odot (\alpha \tilde{\mathbf{x}}^k_t + (1 - \alpha)\mathbf{x}^k_t), \quad \text{for } k = 1, \dots, m
$$

where $\mathbf{x}^k_t$ is the actual latent code, $\tilde{\mathbf{x}}^k_t$ is the latent code warped on the background, and $\alpha$ is a hyperparameter, set to $\alpha = 0.6$ in the paper's experiments.
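As a small sketch of background smoothing, the function below keeps the actual latent inside the foreground mask and, on the background, takes a convex blend of the warped and actual latents weighted by `alpha`. The binary mask layout and the array shapes are assumptions for illustration.

```python
import numpy as np

def background_smooth(x_actual, x_warped, fg_mask, alpha=0.6):
    """fg_mask is 1 on foreground pixels and 0 on the background."""
    blended_bg = alpha * x_warped + (1.0 - alpha) * x_actual
    return fg_mask * x_actual + (1.0 - fg_mask) * blended_bg

rng = np.random.default_rng(0)
x_actual = rng.standard_normal((4, 8, 8))
x_warped = rng.standard_normal((4, 8, 8))
fg_mask = np.zeros((1, 8, 8))
fg_mask[:, 2:6, 2:6] = 1.0            # a square foreground region, broadcast over channels
x_bar = background_smooth(x_actual, x_warped, fg_mask)
```

Foreground pixels pass through unchanged, so the smoothing cannot blur the moving object; only the background is pulled toward the warped first frame.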

Text2Video-Zero can be combined with ControlNet: at each diffusion time step $t = T, \dots, 1$, the pre-trained ControlNet copy branch is applied per frame on each $\mathbf{x}^k_t$ ($k = 1, \dots, m$), and the outputs of the ControlNet branch are added to the skip connections of the main U-Net.

ControlVideo (Zhang et al., 2023) aims to generate videos conditioned on a text prompt and a motion sequence (such as depth or edge maps). The model is adapted from ControlNet, with three new mechanisms added:

1. Cross-frame attention: adds full cross-frame interaction in the self-attention modules. It introduces interactions between all frames by mapping the latent frames at all time steps into $\mathbf{Q}$, $\mathbf{K}$, $\mathbf{V}$ matrices, unlike Text2Video-Zero, which has all frames attend only to the first frame.

2. Interleaved-frame smoother: reduces the flickering effect by applying frame interpolation on alternating frames. At each time step $t$, the smoother interpolates the even or odd frames to smooth their corresponding three-frame clips. Note that the number of frames decreases over time after the smoothing steps.

3. Hierarchical sampler: ensures the temporal consistency of long videos under memory constraints. A long video is split into multiple short clips, and a keyframe is selected for each clip. The model pre-generates these keyframes using full cross-frame attention for long-term consistency, and each corresponding short clip is then synthesized sequentially, conditioned on the keyframes.
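The bookkeeping behind the hierarchical sampler can be sketched as a simple partition of frame indices. Choosing the first frame of each clip as its keyframe and using fixed-length, non-overlapping clips are assumptions made here for illustration; the text above does not specify how keyframes are selected.

```python
def plan_hierarchical_sampling(num_frames, clip_len):
    """Split frame indices into consecutive clips and pick one keyframe per clip."""
    clips = [list(range(start, min(start + clip_len, num_frames)))
             for start in range(0, num_frames, clip_len)]
    key_frames = [clip[0] for clip in clips]   # assumed choice: first frame of each clip
    return key_frames, clips

key_frames, clips = plan_hierarchical_sampling(num_frames=10, clip_len=4)
```

Under this plan the keyframes would be generated first with full cross-frame attention, and each clip would then be synthesized on its own, so peak memory depends on `clip_len` rather than on the full video length.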

Figure 15: Overview of ControlVideo.

Original article: https://lilianweng.github.io/posts/2024-04-12-diffusion-video/
