A Deep Dive into CNCF's Cloud-Native AI Whitepaper

During KubeCon EU 2024, CNCF launched its first Cloud-Native AI Whitepaper. This article provides an in-depth analysis of the content of this whitepaper.

In March 2024, during KubeCon EU, the Cloud Native Computing Foundation (CNCF) released its first detailed whitepaper on Cloud-Native Artificial Intelligence (CNAI) 1. The report explores the current state, challenges, and future directions of integrating cloud-native technologies with artificial intelligence. This article examines the core content of the whitepaper.

This article was first published on Medium as part of the Medium Partner Program (MPP). If you are a Medium user, please follow me there. Thank you very much.

What is Cloud-Native AI?

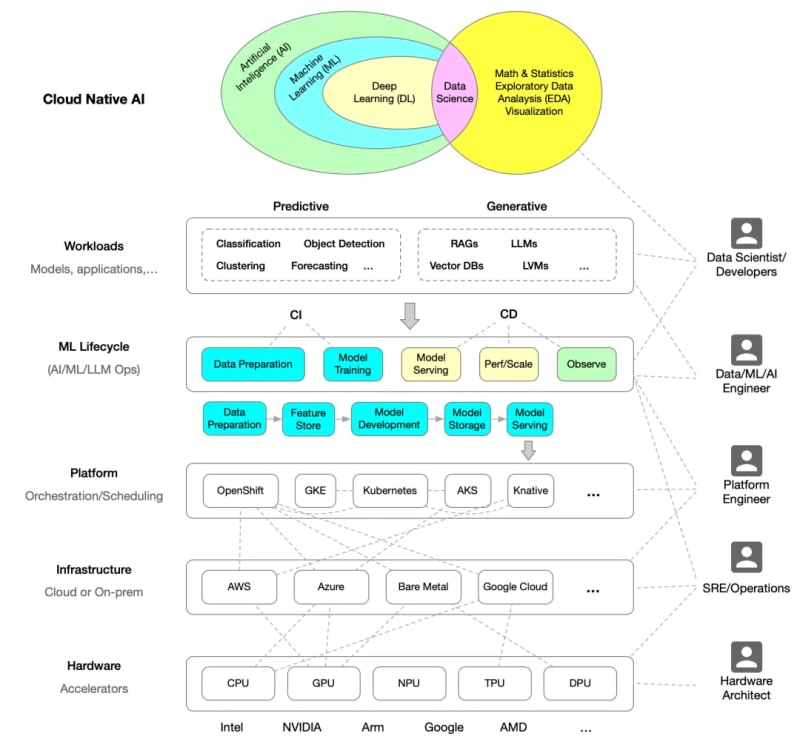

Cloud-Native AI refers to building and deploying artificial intelligence applications and workloads according to cloud-native principles. This means using microservices, containerization, declarative APIs, continuous integration/continuous deployment (CI/CD), and other cloud-native technologies to improve the scalability, reusability, and operability of AI applications.
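The declarative-API principle is easiest to see in code: you describe the desired state, and a controller repeatedly reconciles the actual state toward it. Below is a minimal Python sketch of that reconcile loop, assuming a toy in-memory "cluster" in which replicas are just names in a list. `Deployment`, `Cluster`, and `reconcile` are hypothetical names for illustration, not the Kubernetes client API.

```python
from dataclasses import dataclass, field


@dataclass
class Deployment:
    """Hypothetical declarative object: only the desired replica count."""
    name: str
    desired_replicas: int


@dataclass
class Cluster:
    """Hypothetical 'actual state': running replica names per deployment."""
    running: dict = field(default_factory=dict)


def reconcile(spec: Deployment, cluster: Cluster) -> list:
    """Drive actual state toward desired state and return the actions taken."""
    pods = cluster.running.setdefault(spec.name, [])
    actions = []
    # Scale up: create any missing replicas.
    while len(pods) < spec.desired_replicas:
        pod = f"{spec.name}-{len(pods)}"
        pods.append(pod)
        actions.append(("create", pod))
    # Scale down: delete surplus replicas, newest first.
    while len(pods) > spec.desired_replicas:
        actions.append(("delete", pods.pop()))
    return actions


if __name__ == "__main__":
    cluster = Cluster()
    server = Deployment("model-server", desired_replicas=3)
    print(reconcile(server, cluster))  # three "create" actions
    print(reconcile(server, cluster))  # already converged: []
    server.desired_replicas = 1
    print(reconcile(server, cluster))  # two "delete" actions
```

Because `reconcile` acts only on the difference between desired and actual state, calling it repeatedly is safe (idempotent). That property is what lets real controllers simply retry after failures.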

The following diagram illustrates the architecture of Cloud-Native AI, redrawn based on the whitepaper.

Relationship between Cloud-Native AI and Cloud-Native Technologies

Cloud-native technologies provide a flexible, scalable platform that makes developing and operating AI applications more efficient. With containerization and a microservices architecture, developers can iterate on and deploy AI models quickly while keeping the system highly available and scalable. Kubernetes provides core capabilities such as resource scheduling, automatic scaling, and service discovery.
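In Kubernetes, automatic scaling is typically handled by the Horizontal Pod Autoscaler (HPA). Its documented core rule is `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, and it makes no change when the ratio is within a tolerance of 1.0 (0.1 by default). The sketch below implements just that rule in Python. The function name and the use of GPU utilization as the metric are assumptions for illustration: scaling on GPU utilization requires exposing it through a custom or external metrics pipeline. The real controller also handles pod readiness, missing metrics, and stabilization windows, which are omitted here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Simplified HPA rule: scale proportionally to metric / target."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # within tolerance: leave replicas unchanged
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds (minReplicas / maxReplicas in the HPA spec).
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # Hypothetical: 4 inference replicas, target 60% average GPU utilization.
    print(desired_replicas(4, 90, 60))  # 6: load is 1.5x the target
    print(desired_replicas(4, 63, 60))  # 4: within the 10% tolerance
    print(desired_replicas(4, 20, 60))  # 2: ceil(4 * 1/3)
```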

The whitepaper gives two examples of this relationship, both involving AI running on cloud-native infrastructure:

- Hugging Face Collaborates with Microsoft to launch Hugging Face Model Catalog on Azure 2

- OpenAI Scaling Kubernetes to 7,500 nodes 3

Challenges of Cloud-Native AI

Although cloud-native technologies provide a solid foundation for AI applications, integrating AI workloads with cloud-native platforms still poses challenges. These include the complexity of data preparation, the resource requirements of model training, and maintaining model security and isolation in multi-tenant environments. In addition, resource management and scheduling in cloud-native environments are crucial for large-scale AI applications and need further optimization to support efficient model training and inference.
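A common way to address multi-tenant resource management is to give each tenant a quota and admit work only when it fits. Kubernetes `ResourceQuota` objects and Kueue's ClusterQueue quotas both follow this idea. The Python sketch below is a hypothetical in-memory version that tracks GPUs only. `TenantQuota` and `QuotaAdmitter` are illustrative names and are not part of either API.

```python
from dataclasses import dataclass, field


@dataclass
class TenantQuota:
    """Hypothetical per-tenant GPU budget."""
    gpu_limit: int
    gpu_used: int = 0


@dataclass
class QuotaAdmitter:
    """Hypothetical admission check that keeps tenants within their quotas."""
    tenants: dict = field(default_factory=dict)

    def admit(self, tenant: str, gpus: int) -> bool:
        """Admit a job only if it fits in the tenant's remaining quota."""
        quota = self.tenants.get(tenant)
        if quota is None or gpus <= 0:
            return False  # unknown tenant or invalid request
        if quota.gpu_used + gpus > quota.gpu_limit:
            return False  # over budget: reject for now (a queue could retry later)
        quota.gpu_used += gpus
        return True

    def release(self, tenant: str, gpus: int) -> None:
        """Return a finished job's GPUs to its tenant's budget."""
        quota = self.tenants[tenant]
        quota.gpu_used = max(0, quota.gpu_used - gpus)


if __name__ == "__main__":
    admitter = QuotaAdmitter({"team-a": TenantQuota(gpu_limit=8),
                              "team-b": TenantQuota(gpu_limit=4)})
    print(admitter.admit("team-a", 6))  # True
    print(admitter.admit("team-a", 4))  # False: 6 + 4 exceeds team-a's 8
    print(admitter.admit("team-b", 4))  # True: team-b's budget is separate
```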

Development Path of Cloud-Native AI

The whitepaper proposes several development paths for Cloud-Native AI, including improving resource scheduling algorithms to better support AI workloads, developing new service mesh technologies to enhance the performance and security of AI applications, and promoting innovation and standardization of Cloud-Native AI technology through open-source projects and community collaboration.

Cloud-Native AI Technology Landscape

Cloud-Native AI involves various technologies, ranging from containers and microservices to service mesh and serverless computing. Kubernetes plays a central role in deploying and managing AI applications, while service mesh technologies such as Istio and Envoy provide robust traffic management and security features. Additionally, monitoring tools like Prometheus and Grafana are crucial for maintaining the performance and reliability of AI applications.

Below is the Cloud-Native AI landscape from the whitepaper, listed by category.

- Kubernetes

- Volcano

- Armada

- KubeRay

- NVIDIA NeMo

- YuniKorn

- Kueue

- Flame
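Distributed training jobs usually need all of their workers running at the same time. If only some workers start, they can hold GPUs while waiting for peers that never get scheduled. Gang (all-or-nothing) scheduling avoids this. Volcano and YuniKorn support it, and Kueue offers a related all-or-nothing admission mode. The following Python sketch shows the idea with a simple first-fit placement. `gang_schedule` and its node/GPU model are hypothetical and far simpler than any of these schedulers.

```python
def gang_schedule(free_gpus: dict, workers: int, gpus_per_worker: int):
    """All-or-nothing placement of a distributed job's workers.

    free_gpus maps node name -> free GPU count. Returns one node name per
    worker and updates free_gpus. If the whole gang cannot be placed, it
    returns None and leaves free_gpus untouched.
    """
    tentative = dict(free_gpus)  # plan on a copy so failure has no side effects
    placement = []
    for _ in range(workers):
        # First-fit: the first node with enough free GPUs for one worker.
        node = next((n for n, free in tentative.items()
                     if free >= gpus_per_worker), None)
        if node is None:
            return None  # a partial placement could deadlock: place nothing
        tentative[node] -= gpus_per_worker
        placement.append(node)
    free_gpus.update(tentative)  # commit only after every worker fits
    return placement


if __name__ == "__main__":
    nodes = {"node-a": 4, "node-b": 2}
    print(gang_schedule(nodes, workers=3, gpus_per_worker=2))  # ['node-a', 'node-a', 'node-b']
    print(gang_schedule(nodes, workers=1, gpus_per_worker=1))  # None: no GPUs left
```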

Distributed Training

- Kubeflow Training Operator

- PyTorch DDP

- TensorFlow Distributed

- Open MPI

- DeepSpeed

- Megatron

- Horovod

- Alpa

- …
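Several of the frameworks above, including PyTorch DDP and Horovod, implement synchronous data parallelism. Each worker computes gradients on its own shard of the batch, and an all-reduce averages those gradients so that every replica applies the same update. The sketch below simulates this in plain Python for a one-parameter linear model with a mean-squared-error loss. There is no real communication, and `all_reduce_mean` is a hypothetical stand-in for a collective all-reduce.

```python
def mse_grad(w: float, xs: list, ys: list) -> float:
    """Gradient of mean squared error for the 1-D model y_hat = w * x."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def all_reduce_mean(values: list) -> list:
    """Stand-in for an all-reduce: every worker receives the mean value."""
    mean = sum(values) / len(values)
    return [mean] * len(values)


def data_parallel_step(w: float, xs: list, ys: list,
                       num_workers: int, lr: float = 0.01) -> list:
    """One synchronous data-parallel SGD step; returns each replica's new w."""
    if len(xs) % num_workers:
        raise ValueError("this sketch assumes equal-sized shards")
    shard = len(xs) // num_workers
    # Each worker computes a gradient on its own shard only.
    local_grads = [
        mse_grad(w, xs[i * shard:(i + 1) * shard], ys[i * shard:(i + 1) * shard])
        for i in range(num_workers)
    ]
    # After the all-reduce, every replica applies the same averaged gradient,
    # so the model copies stay identical.
    return [w - lr * g for g in all_reduce_mean(local_grads)]


if __name__ == "__main__":
    xs = [1, 2, 3, 4, 5, 6, 7, 8]
    ys = [3 * x for x in xs]  # toy data from y = 3x
    print(data_parallel_step(0.0, xs, ys, num_workers=4))
    print(0.0 - 0.01 * mse_grad(0.0, xs, ys))  # single-worker full-batch step
```

With equal-sized shards, the average of the per-shard gradients equals the full-batch gradient. This is why the data-parallel step produces the same result as training on a single worker, only spread across more devices.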

ML Serving

- KServe

- Seldon

- vLLM

- TGI

- SkyPilot

- …
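Serving systems such as vLLM and TGI keep accelerators busy by batching concurrent requests. Their schedulers are considerably more sophisticated than this: vLLM, for example, uses continuous batching at the level of individual decoding steps. The Python sketch below therefore shows only a simple greedy form of dynamic batching, under two assumed limits: a maximum number of requests per batch and a maximum total token count. The function name and the limit values are hypothetical.

```python
from collections import deque


def form_batches(requests, max_batch_size=4, max_batch_tokens=512):
    """Greedy dynamic batching of queued requests, in arrival order.

    `requests` is a sequence of (request_id, num_tokens) pairs. A batch is
    closed when adding the next request would exceed either limit. A single
    request larger than max_batch_tokens gets a batch of its own.
    """
    queue = deque(requests)
    batches, current, tokens = [], [], 0
    while queue:
        req_id, n = queue[0]
        fits = len(current) < max_batch_size and tokens + n <= max_batch_tokens
        if current and not fits:
            batches.append(current)  # close the batch; retry this request next
            current, tokens = [], 0
            continue
        queue.popleft()
        current.append(req_id)
        tokens += n
    if current:
        batches.append(current)
    return batches


if __name__ == "__main__":
    queued = [("r1", 100), ("r2", 200), ("r3", 300), ("r4", 50), ("r5", 600)]
    print(form_batches(queued))  # [['r1', 'r2'], ['r3', 'r4'], ['r5']]
```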

CI/CD — Delivery

- Kubeflow Pipelines

- MLflow

- TFX

- BentoML

- MLRun

- …
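Pipeline tools such as Kubeflow Pipelines and TFX model an ML workflow as a directed acyclic graph (DAG) of steps, where each step runs only after its inputs are ready. Here is a minimal Python sketch of that execution model, using the standard library's `graphlib.TopologicalSorter` (Python 3.9+). The step names and toy computations are hypothetical. Real pipelines run each step in its own container rather than as an in-process function.

```python
from graphlib import TopologicalSorter


def run_pipeline(steps: dict, deps: dict) -> dict:
    """Run pipeline steps in dependency order and collect their results.

    `steps` maps step name -> callable taking a dict of upstream results.
    `deps` maps every step name -> set of step names it depends on.
    A cycle raises graphlib.CycleError.
    """
    results = {}
    for name in TopologicalSorter(deps).static_order():
        upstream = {d: results[d] for d in deps.get(name, ())}
        results[name] = steps[name](upstream)
    return results


if __name__ == "__main__":
    # Hypothetical toy pipeline: ingest -> preprocess -> train -> evaluate -> deploy.
    steps = {
        "ingest": lambda up: list(range(10)),
        "preprocess": lambda up: [x / 9 for x in up["ingest"]],
        "train": lambda up: sum(up["preprocess"]) / len(up["preprocess"]),  # toy "model"
        "evaluate": lambda up: abs(up["train"] - 0.5) < 0.01,
        "deploy": lambda up: "deployed" if up["evaluate"] else "rejected",
    }
    deps = {"ingest": set(), "preprocess": {"ingest"}, "train": {"preprocess"},
            "evaluate": {"train"}, "deploy": {"evaluate"}}
    print(run_pipeline(steps, deps)["deploy"])
```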

Data Science

- Jupyter

- Kubeflow Notebooks

- PyTorch

- TensorFlow

- Apache Zeppelin

Workload Observability

- Prometheus

- InfluxDB

- Grafana

- Weights and Biases (wandb)

- OpenTelemetry

- …
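Prometheus collects metrics by scraping a plain-text exposition format from each workload. In practice you would use an official client library (for Python, `prometheus_client`) rather than formatting the text yourself. The sketch below renders a single labeled counter by hand only to show what an AI inference service might expose. The metric name `inference_requests_total` and its labels are hypothetical.

```python
def render_counter(name: str, help_text: str, samples: dict) -> str:
    """Render one counter metric family in Prometheus text exposition format.

    `samples` maps a tuple of (label_name, label_value) pairs to a value.
    """
    def escape_label(value: str) -> str:
        # Label values escape backslash, double quote, and line feed.
        return (value.replace("\\", "\\\\")
                .replace('"', '\\"')
                .replace("\n", "\\n"))

    # HELP text escapes backslash and line feed.
    help_escaped = help_text.replace("\\", "\\\\").replace("\n", "\\n")
    lines = [f"# HELP {name} {help_escaped}", f"# TYPE {name} counter"]
    for labels, value in sorted(samples.items()):
        label_str = ",".join(f'{k}="{escape_label(v)}"' for k, v in labels)
        series = f"{name}{{{label_str}}}" if label_str else name
        lines.append(f"{series} {value}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_counter(
        "inference_requests_total",
        "Total inference requests.",
        {(("model", "resnet50"), ("status", "ok")): 42,
         (("model", "resnet50"), ("status", "error")): 3},
    ), end="")
```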

AutoML

- Hyperopt

- Optuna

- Kubeflow Katib

- NNI

- …
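Hyperopt, Optuna, Katib, and NNI automate hyperparameter search, and random search is one of the baseline strategies they offer. The Python sketch below implements random search over a mixed continuous/discrete search space. The objective is a hypothetical stand-in for a validation loss. In practice, each evaluation would be a full training run; Katib, for example, runs each trial as a separate Kubernetes workload.

```python
import random


def random_search(objective, space: dict, trials: int = 50, seed: int = 0):
    """Minimize `objective` by sampling hyperparameters uniformly at random.

    `space` maps parameter name -> (low, high) tuple for a continuous range,
    or a list of discrete choices. Returns (best_params, best_value).
    """
    rng = random.Random(seed)  # seeded so the trials are reproducible
    best_params, best_value = None, float("inf")
    for _ in range(trials):
        params = {
            name: rng.choice(spec) if isinstance(spec, list) else rng.uniform(*spec)
            for name, spec in space.items()
        }
        value = objective(params)
        if value < best_value:
            best_params, best_value = params, value
    return best_params, best_value


if __name__ == "__main__":
    # Hypothetical objective standing in for validation loss after training.
    def objective(p):
        return (p["lr"] - 0.01) ** 2 * 1e4 + (0 if p["layers"] == 3 else 1)

    space = {"lr": (0.0001, 0.1), "layers": [2, 3, 4]}
    print(random_search(objective, space, trials=100, seed=42))
```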

Governance & Policy

- Kyverno

- Kyverno-JSON

- OPA/Gatekeeper

- StackRox Minder

- …
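Kyverno and OPA/Gatekeeper enforce rules on Kubernetes resources at admission time, using YAML policies (Kyverno) or Rego (Gatekeeper). The Python sketch below shows only the decision logic for two hypothetical rules that mirror common example policies. First, container images must be pinned to a tag other than `latest` or to a digest. Second, every container must declare CPU and memory limits. This is not Kyverno or Rego syntax.

```python
def check_pod(pod: dict) -> list:
    """Return policy violations for a Pod manifest parsed into a dict.

    Hypothetical rules:
      1. images must be pinned (a tag other than 'latest', or a digest);
      2. every container must set CPU and memory limits.
    """
    violations = []
    for c in pod.get("spec", {}).get("containers", []):
        name, image = c.get("name", "?"), c.get("image", "")
        # The tag follows the last ':' in the final path segment, so a
        # registry port such as 'localhost:5000/model' is not mistaken for one.
        last_segment = image.rsplit("/", 1)[-1]
        tag = last_segment.split(":", 1)[1] if ":" in last_segment else ""
        if "@" not in image and tag in ("", "latest"):
            violations.append(f"{name}: image '{image}' must use a pinned tag or digest")
        limits = c.get("resources", {}).get("limits", {})
        missing = [r for r in ("cpu", "memory") if r not in limits]
        if missing:
            violations.append(f"{name}: missing limits for {', '.join(missing)}")
    return violations


if __name__ == "__main__":
    pod = {"spec": {"containers": [
        {"name": "notebook", "image": "jupyter/base-notebook:latest"},
    ]}}
    for v in check_pod(pod):
        print(v)
```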

Data Architecture

- ClickHouse

- Apache Pinot

- Apache Druid

- Cassandra

- ScyllaDB

- Hadoop HDFS

- Apache HBase

- Presto

- Trino

- Apache Spark

- Apache Flink

- Kafka

- Pulsar

- Fluid

- Memcached

- Redis

- Alluxio

- Apache Superset

- …

Vector Databases

- Chroma

- Weaviate

- Qdrant

- Pinecone

- Extensions

  - Redis

  - PostgreSQL

  - Elasticsearch

- …
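At their core, vector databases store embeddings and answer nearest-neighbour queries. The Python sketch below performs exact, brute-force top-k search by cosine similarity over a hypothetical in-memory index of toy 3-dimensional embeddings. Production systems instead use approximate indexes such as HNSW to stay fast at scale, so treat this as an illustration of the query semantics only.

```python
import math


def cosine(a, b) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query, index: dict, k: int = 2) -> list:
    """Exact k-nearest-neighbour search by cosine similarity.

    `index` maps document id -> embedding. Returns [(id, score), ...],
    best match first.
    """
    scored = [(doc_id, cosine(query, vec)) for doc_id, vec in index.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


if __name__ == "__main__":
    index = {  # hypothetical toy embeddings
        "gpu-scheduling": [0.9, 0.1, 0.0],
        "model-serving": [0.1, 0.9, 0.1],
        "data-lake": [0.0, 0.2, 0.9],
    }
    print(top_k([1.0, 0.2, 0.0], index, k=2))
```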

Model/LLM Observability

- TruLens

- Langfuse

- Deepchecks

- OpenLLMetry

- …
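LLM observability tools such as Langfuse and OpenLLMetry record a trace for each model call, typically including latency, token usage, and errors; OpenLLMetry builds on OpenTelemetry. The decorator below is a hypothetical, dependency-free Python sketch of that pattern. It appends a span-like record to an in-memory list and counts tokens by whitespace splitting, as a placeholder for a real tokenizer. It wraps a toy `generate` function that stands in for an actual LLM call, and it does not use either tool's API.

```python
import functools
import time

TRACES = []  # in-memory sink; a real setup would export spans to a backend


def traced(model_name: str):
    """Decorator that records a span-like record for each LLM call."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(prompt, *args, **kwargs):
            start = time.perf_counter()
            # Whitespace token counts are a placeholder for a real tokenizer.
            record = {"name": fn.__name__, "model": model_name,
                      "prompt_tokens": len(prompt.split())}
            try:
                output = fn(prompt, *args, **kwargs)
                record["completion_tokens"] = len(output.split())
                record["status"] = "ok"
                return output
            except Exception as exc:
                record["status"] = f"error: {type(exc).__name__}"
                raise
            finally:
                # Runs on success and on failure, so every call is recorded.
                record["latency_s"] = time.perf_counter() - start
                TRACES.append(record)
        return wrapper
    return decorator


@traced(model_name="toy-echo-model")
def generate(prompt: str) -> str:
    """Hypothetical stand-in for a call to a hosted LLM."""
    return "echo: " + prompt


if __name__ == "__main__":
    generate("what is kueue")
    print(TRACES[-1])
```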

Conclusion

To conclude, here are the key points:

- Role of the Open-Source Community: The whitepaper highlights the role of the open-source community in advancing Cloud-Native AI, including accelerating innovation and reducing costs through open-source projects and broad collaboration.

- Importance of Cloud-Native Technologies: Cloud-Native AI, built on cloud-native principles, emphasizes repeatability and scalability. Cloud-native technologies provide an efficient development and operations environment for AI applications, especially for resource scheduling and service scalability.

- Existing Challenges: Despite its many advantages, Cloud-Native AI still faces challenges in data preparation, model training resource requirements, and model security and isolation.

- Future Development Directions: The whitepaper proposes optimizing resource scheduling algorithms for AI workloads, developing new service mesh technologies to improve performance and security, and promoting innovation and standardization through open-source projects and community collaboration.

- Key Technological Components: Key technologies in Cloud-Native AI include containers, microservices, service mesh, and serverless computing. Kubernetes plays a central role in deploying and managing AI applications, while service mesh technologies such as Istio and Envoy provide the necessary traffic management and security.

For more details, please download the Cloud-Native AI whitepaper 4.

Reference Links

1. Whitepaper

2. Hugging Face Collaborates with Microsoft to launch Hugging Face Model Catalog on Azure

3. OpenAI Scaling Kubernetes to 7,500 nodes

4. Cloud-Native AI Whitepaper
