ICLR 2024 Oral | To handle distribution shift over time, the University of Western Ontario and collaborators propose a trajectory learning method

The AIxiv column is a column where this site publishes academic and technical content. In the past few years, the AIxiv column of this site has received more than 2,000 reports, covering top laboratories from major universities and companies around the world, effectively promoting academic exchanges and dissemination. If you have excellent work that you want to share, please feel free to contribute or contact us for reporting. Submission email: liyazhou@jiqizhixin.com; zhaoyunfeng@jiqizhixin.com

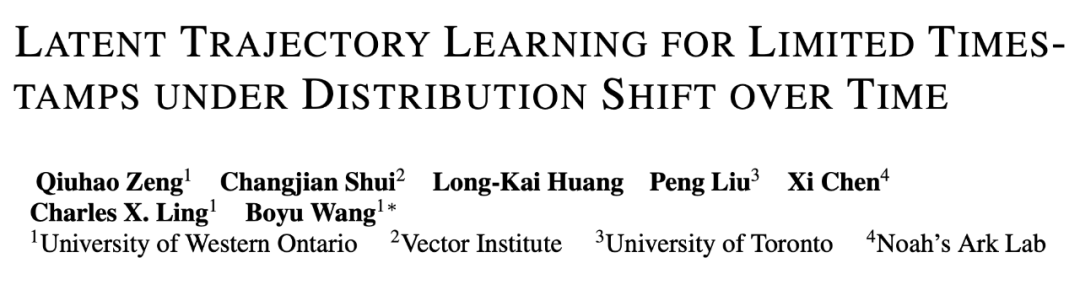

The author, Zeng Qiulin, graduated from Harbin Institute of Technology and received a master's degree from the National University of Singapore. During his doctoral studies, under the guidance of Professor Wang Bo and Academician Ling Xiaofeng, he has mainly worked on theory, methods, and applications for distributions that change over time. He has published papers in ICLR, AAAI, and IEEE TNNLS.

Personal homepage: https://hardworkingpearl.github.io/

Distribution shift over time is a common problem in real-world machine learning applications. This setting is framed as evolving domain generalization (EDG), where the goal is to enable a model to generalize well to unseen target domains in a time-varying system by learning the underlying evolving patterns across domains and exploiting them. However, because EDG datasets contain only a limited number of timestamps, existing methods struggle to capture the evolving dynamics and to avoid overfitting to sparse timestamps, which limits their generalization and adaptability to new tasks. To solve this problem, we propose a new method, SDE-EDG, which collects the Infinitely Fined-Grid Evolving Trajectory (IFGET) of the data distribution through continuous interpolated samples to overcome overfitting. Furthermore, exploiting the inherent ability of stochastic differential equations (SDEs) to capture continuous trajectories, we propose to align the SDE-modeled trajectories with those of IFGET through maximum likelihood estimation, thereby capturing the trend of distribution evolution.

- Paper title: Latent Trajectory Learning for Limited Timestamps under Distribution Shift over Time

- Paper link: https://openreview.net/pdf?id=bTMMNT7IdW

- Project link: https://github.com/HardworkingPearl/SDE-EDG-iclr2024

Method

Core Idea

To overcome this challenge, SDE-EDG constructs an Infinitely Fined-Grid Evolving Trajectory (IFGET), creating continuous interpolated samples in the latent representation space to bridge the gaps between timestamps. In addition, SDE-EDG uses the inherent ability of stochastic differential equations (SDEs) to capture continuous trajectory dynamics, and aligns the SDE-modeled trajectories with IFGET through a path alignment regularizer to capture evolving distribution trends across domains.

Method details

1. Construct IFGET:

First, SDE-EDG establishes a sample-to-sample correspondence in the latent representation space and collects the evolving trajectory of each individual sample. For any sample of class k at a given timestamp, we search among the samples of the next domain for the one closest to it in the feature space and take it as the corresponding sample. The distance is computed between the two feature vectors, and the candidates are a set of samples drawn from the next domain.

This correspondence is then used to generate continuous interpolated samples, which bridge the gaps between timestamps and prevent overfitting to sparse timestamps. The interpolation coefficient is sampled from a Beta distribution. Collecting the time-ordered trajectories of the samples generated this way yields IFGET.
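As a rough illustration of the two steps above (nearest-neighbor correspondence, then Beta-weighted interpolation), the following is a minimal pure-Python sketch. It is not the authors' implementation: the function names, the Euclidean distance, the linear interpolation form (1 - lam) * z + lam * z_next, and the Beta parameters are all assumptions made for this example.

```python
import math
import random


def nearest_neighbor(z, candidates):
    """Return the candidate closest to z (Euclidean distance is an assumption)."""
    return min(candidates, key=lambda c: math.dist(z, c))


def interpolate(z_now, z_next, lam):
    """Linear interpolation: lam=0 gives z_now, lam=1 gives z_next."""
    return [(1.0 - lam) * a + lam * b for a, b in zip(z_now, z_next)]


def build_ifget_pairs(domain_now, domain_next, beta_a=1.0, beta_b=1.0, rng=random):
    """For each (feature, label) in domain_now, find its same-class nearest
    neighbor in domain_next and emit one interpolated sample between them.

    Returns a list of (lam, interpolated_feature, label) tuples.
    beta_a and beta_b are hypothetical Beta parameters chosen for illustration.
    """
    out = []
    for z, label in domain_now:
        same_class = [c for c, y in domain_next if y == label]
        if not same_class:
            continue  # no counterpart of this class in the next domain
        match = nearest_neighbor(z, same_class)
        lam = rng.betavariate(beta_a, beta_b)  # coefficient in [0, 1]
        out.append((lam, interpolate(z, match, lam), label))
    return out


# Toy latent features: two classes drifting to the right between timestamps.
domain_t0 = [([0.0, 0.0], 0), ([0.0, 5.0], 1)]
domain_t1 = [([1.0, 0.0], 0), ([1.0, 5.0], 1), ([9.0, 9.0], 0)]
pairs = build_ifget_pairs(domain_t0, domain_t1, rng=random.Random(0))
```

Each tuple in `pairs` holds a fractional time offset `lam` and a latent point lying on the segment between a sample and its match in the next domain. Drawing many such points fills in the gap between two timestamps.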

2. Model trajectories using SDE:

SDE-EDG adopts a neural SDE to model the continuous-time trajectory of the data in the latent space. Unlike traditional models based on discrete timestamps, an SDE is naturally suited to modeling continuous-time trajectories. SDE-EDG models the time-series trajectory and can predict a sample at any future time from a sample at an earlier timestamp. The latent variable at the future time is predicted from the sample at the earlier timestamp using a drift function and a diffusion function.
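To make the roles of the drift and diffusion functions concrete, here is a minimal sketch that simulates a generic SDE of the standard form dz = f(z, t) dt + g(z, t) dW with the Euler-Maruyama scheme. The solver choice, the diagonal noise, and the toy `toy_drift` and `toy_diffusion` functions are assumptions for illustration; in SDE-EDG the drift and diffusion are learned networks, and the paper's solver may differ.

```python
import math
import random


def euler_maruyama(z0, t0, t1, drift, diffusion, n_steps=100, rng=random):
    """Simulate dz = drift(z, t) dt + diffusion(z, t) dW from t0 to t1.

    z0 is a list of floats; drift and diffusion return lists of the same
    length (diagonal noise: one independent Brownian motion per coordinate).
    """
    dt = (t1 - t0) / n_steps
    z, t = list(z0), t0
    for _ in range(n_steps):
        f = drift(z, t)
        g = diffusion(z, t)
        # Deterministic move along the drift plus Gaussian noise scaled by sqrt(dt).
        z = [zi + fi * dt + gi * math.sqrt(dt) * rng.gauss(0.0, 1.0)
             for zi, fi, gi in zip(z, f, g)]
        t += dt
    return z


# Hypothetical stand-ins for the learned networks: constant drift, small noise.
def toy_drift(z, t):
    return [1.0 for _ in z]


def toy_diffusion(z, t):
    return [0.1 for _ in z]


# Predict the latent sample at time 2.0 from a sample observed at time 0.0.
z_future = euler_maruyama([0.0, 0.0], 0.0, 2.0, toy_drift, toy_diffusion,
                          rng=random.Random(0))
```

Because the end time `t1` is a free argument, the same function can produce a prediction at any time after `t0`, not only at the observed timestamps.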

3. Path alignment and maximum likelihood estimation:

SDE-EDG trains the model by maximizing the likelihood of IFGET under the trajectories modeled by the SDE. The final training objective combines two terms: the first is the classification loss for the prediction task, and the second is the maximum likelihood (path alignment) loss, weighted by a regularization weight α.
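The structure of such an objective can be sketched as follows. This is an assumption-laden illustration, not the paper's exact loss: it approximates the SDE transition by a single Euler-Maruyama step, so an IFGET sample a short time `dt` ahead is treated as Gaussian with mean z + f·dt and variance g²·dt, and it uses cross-entropy as the classification term. All function names are hypothetical.

```python
import math


def gaussian_step_nll(z_start, z_target, dt, drift, diffusion, t=0.0):
    """Negative log-likelihood of z_target under one Euler-Maruyama step from
    z_start: z_target ~ Normal(z_start + f*dt, g^2 * dt) per coordinate."""
    f = drift(z_start, t)
    g = diffusion(z_start, t)
    nll = 0.0
    for zs, zt, fi, gi in zip(z_start, z_target, f, g):
        mean = zs + fi * dt
        var = gi * gi * dt
        nll += 0.5 * (math.log(2.0 * math.pi * var) + (zt - mean) ** 2 / var)
    return nll


def cross_entropy(probs, label):
    """Classification loss for one sample given predicted class probabilities."""
    return -math.log(probs[label])


def total_loss(probs, label, z_start, z_target, dt, drift, diffusion, alpha=1.0):
    """Classification term plus alpha times the path alignment (NLL) term."""
    return cross_entropy(probs, label) + alpha * gaussian_step_nll(
        z_start, z_target, dt, drift, diffusion)


# Toy drift and diffusion (hypothetical stand-ins for the learned networks).
def unit_drift(z, t):
    return [1.0 for _ in z]


def unit_diffusion(z, t):
    return [1.0 for _ in z]


# A target on the drift path is more likely than one far away from it.
on_path = gaussian_step_nll([0.0], [0.5], 0.5, unit_drift, unit_diffusion)
off_path = gaussian_step_nll([0.0], [3.0], 0.5, unit_drift, unit_diffusion)
```

Minimizing the second term pulls the SDE's predicted path toward the interpolated IFGET samples, while `alpha` controls how strongly that alignment competes with the classification term.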

Experiments

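The table below compares the classification accuracy of SDE-EDG with other baseline methods on several datasets: Rotated Gaussian (RG), Circle (Cir), Rotated MNIST (RM), Portraits (Por), Caltran (Cal), PowerSupply (PS), and Ocular Disease (OD). The results show that SDE-EDG's average accuracy across all datasets is higher than that of the other methods.

The figure below gives an intuitive comparison of the feature representations learned by SDE-EDG (left) and by the traditional DG method IRM (right). Visualizing the feature space shows that the representations learned by SDE-EDG have a clear decision boundary: data points of different classes, drawn with different shapes, are clearly separated, and data from different domains are distinguished by the colors of the rainbow bar. This indicates that SDE-EDG captures the dynamics of the data as it evolves over time while keeping the classes separable in the feature space. By contrast, IRM's feature representations tend to collapse the data points onto a single direction, leaving the decision boundary unclear, which reflects IRM's weakness in capturing time-varying distribution trends.

The figure below uses a series of subplots to show SDE-EDG's advantage in capturing how the data evolve over time. Subplot (a) shows the ground-truth label distribution of the Sine dataset, with positive and negative examples drawn as points of different colors, as a reference for the comparisons that follow. Subplots (b) and (c) show the predictions of a traditional ERM-based method and of SDE-EDG on the same dataset; the comparison shows SDE-EDG's clear advantage in capturing the evolving pattern of the data. Subplots (d) and (e) show the evolution paths learned by SDE-EDG: (d) with the path alignment loss (the maximum likelihood loss) applied, and (e) without it. This comparison shows how important the path alignment loss is for the model to capture and represent the changes in the data over time correctly.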


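Subplot (a) of the figure below shows the accuracy convergence trajectories on the Portraits dataset when training with different algorithms. It gives an intuitive view for comparing how the performance of SDE-EDG and other baselines (such as ERM, MLDG, and GI) changes during training. The growth of training accuracy over time indicates each algorithm's learning ability and convergence speed. The convergence trajectory of SDE-EDG is particularly notable, because it reflects the algorithm's efficiency and stability when adapting to an evolving data distribution.

Subplots (b) and (c) show results on the RMNIST and Circle datasets, respectively. SDE-EDG's performance on these datasets shows its advantage in handling time-varying distributions: it maintains high accuracy even on target domains that lie farther ahead in time, which indicates a strong ability to capture and adapt to the evolving pattern of the data.

Subplots (d) and (e) examine the effect of the maximum likelihood loss on SDE-EDG's performance on the RMNIST and PowerSupply datasets. By varying the regularization weight α, the two subplots show how different α settings affect model performance. The results indicate that a suitable α can significantly improve SDE-EDG's performance on a given dataset, which underlines the importance of tuning hyperparameters to the dataset and the task in practice.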

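Conclusion

The authors propose a new method, SDE-EDG, for modeling the evolving domain generalization (EDG) problem. The method constructs IFGET by identifying sample-to-sample correspondences and generating continuous interpolated samples. The authors then use a stochastic differential equation (SDE) and train it by aligning it with IFGET. The paper's contribution is to show the importance of capturing evolving patterns by collecting the temporal trajectories of individual samples, and of interpolating between timestamps to mitigate the limited number of source timestamps, which effectively prevents SDE-EDG from overfitting to those limited timestamps.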
