3x the generation speed with reduced memory costs: an efficient decoding framework that surpasses Medusa2 is finally here

Efficiently decoding n-token sequences: the CLLMs + Jacobi decoding framework.

Traditionally, large language models (LLMs) are thought of as sequential decoders, decoding each token one by one.

A research team from Shanghai Jiao Tong University and the University of California shows that pre-trained LLMs can easily be taught to become efficient parallel decoders. The team introduces a new family of parallel decoders, Consistency Large Language Models (CLLMs), which reduce inference latency by efficiently decoding an n-token sequence at each inference step.

In the paper, the researchers show that "the cognitive process humans use of first forming a complete sentence in their heads and then expressing it word by word can be effectively learned by simply fine-tuning pre-trained LLMs."

Specifically, CLLMs are trained to map any randomly initialized n-token sequence to the same result as autoregressive (AR) decoding in as few steps as possible. This is how they learn to decode in parallel.

Experimental results show that CLLMs obtained with the team's method are highly effective: they improve generation speed by 2.4x to 3.4x, which is comparable to other fast-inference techniques such as Medusa2 and Eagle, while requiring no additional memory to host auxiliary model components during inference.

Paper name: "CLLMs: Consistency Large Language Models"

Paper link: https://arxiv.org/pdf/2403.00835

Figure 1: Demonstration of CLLM-ABEL-7B-001 reaching roughly 3x the speed of the baseline ABEL-7B-001 when using Jacobi decoding on GSM8K.

Jacobi Decoding

Large language models (LLMs) are changing many aspects of human life, from programming to providing legal and health advice. However, during inference, LLMs use autoregressive decoding to generate responses token by token, as shown in Figure 1, which leads to high latency for long responses. Speeding up inference by generating multiple tokens at once usually requires architectural modifications, auxiliary components, or draft models.

Figure 2: Schematic of traditional autoregressive (AR) decoding: one token is generated at a time.

Jacobi decoding is derived from the Jacobi and Gauss-Seidel fixed-point iteration methods for solving nonlinear equations, and has been proven to produce exactly the same output as autoregressive generation with greedy decoding.

Jacobi decoding reformulates the sequential generation process as a system of n nonlinear equations in n variables, which can be solved in parallel with Jacobi iteration.

Each iteration step may predict multiple correct tokens ("correct" meaning that they match the autoregressive decoding result under greedy sampling), which can potentially accelerate autoregressive decoding.


Specifically, Jacobi decoding first randomly guesses the next n tokens that follow the input prompt (hereafter called the n-token sequence, unless otherwise stated).

The n-token sequence is then fed into the LLM together with the prompt and updated iteratively. This process continues until the n-token sequence stabilizes, no longer changes, and reaches a fixed point.

It is worth noting that Jacobi decoding does not require any more queries to the LLM than autoregressive (AR) decoding. Eventually, the n-token sequence converges to the output generated by AR decoding under the greedy strategy. The path from the initial random guess to the final AR-generated result is called the "Jacobi trajectory."
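The iteration described above can be illustrated with a small, self-contained Python sketch. It is a toy under stated assumptions, not the authors' implementation: the hypothetical `GreedyFn` stands in for an LLM's greedy next-token choice, and the n parallel predictions that a real model would compute in a single forward pass are simulated with a list comprehension. The sketch lets you check that the Jacobi fixed point equals greedy AR decoding.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical stand-in for an LLM under greedy decoding: given the full
# context (prompt + preceding tokens), return the argmax next token.
GreedyFn = Callable[[Sequence[int]], int]


def ar_decode(next_token: GreedyFn, prompt: Sequence[int], n: int) -> List[int]:
    """Greedy autoregressive decoding: one model query per generated token."""
    out: List[int] = []
    for _ in range(n):
        out.append(next_token(list(prompt) + out))
    return out


def jacobi_decode(next_token: GreedyFn, prompt: Sequence[int],
                  init: Sequence[int]) -> Tuple[List[int], List[List[int]]]:
    """Jacobi iteration on an n-token guess.

    Every position i is updated in parallel from the previous iterate:
        y_i^(j+1) = argmax_y p(y | x, y_1^(j), ..., y_{i-1}^(j))
    Returns the fixed point and the trajectory (initial guess included).
    """
    y = list(init)
    trajectory = [list(y)]
    # Position i is guaranteed correct after i passes, so n + 1 passes
    # always suffice (the last one only confirms the fixed point).
    for _ in range(len(y) + 1):
        # A real LLM would produce all n predictions in one forward pass.
        new_y = [next_token(list(prompt) + y[:i]) for i in range(len(y))]
        if new_y == y:  # nothing changed: fixed point reached
            break
        y = new_y
        trajectory.append(list(y))
    return y, trajectory


if __name__ == "__main__":
    def toy_model(ctx: Sequence[int]) -> int:
        # Arbitrary deterministic rule, used only for illustration.
        return (7 * sum(ctx) + len(ctx)) % 11

    prompt = [1, 2, 3]
    fixed, traj = jacobi_decode(toy_model, prompt, [0] * 6)
    print("AR output:    ", ar_decode(toy_model, prompt, 6))
    print("Jacobi output:", fixed)
    print("Jacobi passes:", len(traj))
```

Each pass costs one model forward pass, so any speedup comes entirely from how many tokens become correct per pass; for an unmodified LLM that is often just one.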

An example of the Jacobi decoding iteration process and the Jacobi trajectory is illustrated in Figure 2.

Limitations of Jacobi decoding:

In practice, however, vanilla Jacobi decoding only slightly accelerates LLMs; for example, the average speedup is only 1.05x. This is because an LLM struggles to generate correct tokens when earlier tokens contain errors. As a result, most Jacobi iterations correct only one token of the n-token sequence, producing the longer trajectory shown on the left side of Figure 3.

Lookahead decoding and speculative decoding try to alleviate the inefficiency of Jacobi decoding and conventional autoregressive decoding, but they incur additional memory costs during inference.

CLLMs do not require these additional memory costs.

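Consistency Large Language Models (CLLMs)

Preliminaries on Jacobi decoding:

Given a prompt $x$ and a pre-trained LLM $p(\cdot \mid x)$, researchers usually obtain the model's response with standard autoregressive (AR) decoding under the greedy strategy, that is:

$$y_i = \arg\max_{y} p(y \mid \mathbf{y}_{:i}, x), \quad i = 1, \dots, n,$$

where $\mathbf{y}_{:i}$ denotes $(y_1, \dots, y_{i-1})$.

Jacobi decoding recasts LLM inference as solving a system of nonlinear equations, which turns decoding into a form that can be computed in parallel. Consider:

$$f(y_i, \mathbf{y}_{:i}, x) := y_i - \arg\max_{y} p(y \mid \mathbf{y}_{:i}, x).$$

The AR decoding equation above can then be rewritten as a system of nonlinear equations:

$$f(y_i, \mathbf{y}_{:i}, x) = 0, \quad i = 1, \dots, n.$$

Starting from an initial guess $\mathbf{y}^{(0)}$, the system is solved by Jacobi iteration, in which all $n$ positions are updated in parallel at each step $j$:

$$
\begin{cases}
y_1^{(j+1)} = \arg\max_{y} p(y \mid x) \\
y_2^{(j+1)} = \arg\max_{y} p(y \mid \mathbf{y}^{(j)}_{:2}, x) \\
\quad \vdots \\
y_n^{(j+1)} = \arg\max_{y} p(y \mid \mathbf{y}^{(j)}_{:n}, x)
\end{cases}
$$

The process exits at some $k$ such that:

$$\mathbf{y}^{(k)} = \mathbf{y}^{(k-1)}.$$

Then $\mathbf{y}^* := \mathbf{y}^{(k)}$ is defined as the fixed point, and $\mathcal{J} := \{\mathbf{y}^{(1)}, \dots, \mathbf{y}^{(k)}\}$ as the Jacobi trajectory.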

To address the slow convergence of vanilla Jacobi decoding, the research team proposes fine-tuning pre-trained LLMs so that they consistently map any point $\mathbf{y}$ on the Jacobi trajectory $\mathcal{J}$ to the fixed point $\mathbf{y}^*$.

Surprisingly, they found that this objective is similar to that of consistency models, a leading acceleration method for diffusion models.

In the team's method, the model is trained on Jacobi trajectories collected from the target model, using a loss function that encourages single-step convergence during Jacobi iteration.

For each target model $p$ to be adapted into a CLLM, training consists of two parts:

(1) Jacobi trajectory preparation:

For each prompt, the authors sequentially perform Jacobi decoding on each n-token truncation of the response until the entire response sequence $l$ has been generated; $l$ is therefore the concatenation of all consecutive fixed points.

Each sequence generated along the trajectory counts as one data entry.

Note that for long responses $l$ containing $N$ ($N \gg n$) tokens, this truncation avoids slow model evaluation on long inputs.
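A minimal sketch of this data-preparation step follows. It assumes a hypothetical greedy next-token function as a stand-in for the target LLM; the helper names, the seeded random initialization, and the `(context, state, fixed_point)` entry format are illustrative choices, not the authors' code.

```python
import random
from typing import Callable, List, Sequence, Tuple

GreedyFn = Callable[[Sequence[int]], int]  # hypothetical greedy "LLM"
Entry = Tuple[Tuple[int, ...], Tuple[int, ...], Tuple[int, ...]]


def jacobi_trajectory(next_token: GreedyFn, context: Sequence[int],
                      init: Sequence[int]) -> List[List[int]]:
    """Jacobi-iterate one n-token block; the last element is the fixed point."""
    y, traj = list(init), [list(init)]
    for _ in range(len(y) + 1):
        new_y = [next_token(list(context) + y[:i]) for i in range(len(y))]
        if new_y == y:
            break
        y = new_y
        traj.append(list(y))
    return traj


def collect_entries(next_token: GreedyFn, prompt: Sequence[int], n: int,
                    response_len: int, vocab_size: int,
                    seed: int = 0) -> Tuple[List[int], List[Entry]]:
    """Decode the response block by block, n tokens at a time.

    Every state on every block's trajectory becomes one training entry,
    paired with that block's fixed point. Concatenating the fixed points
    yields the full response l.
    """
    rng = random.Random(seed)
    context: List[int] = list(prompt)
    response: List[int] = []
    entries: List[Entry] = []
    while len(response) < response_len:
        block = min(n, response_len - len(response))
        init = [rng.randrange(vocab_size) for _ in range(block)]  # random guess
        traj = jacobi_trajectory(next_token, context, init)
        fixed = traj[-1]
        for state in traj:
            entries.append((tuple(context), tuple(state), tuple(fixed)))
        # The next block is decoded conditioned on everything generated so far.
        context += fixed
        response += fixed
    return response, entries
```

Because each Jacobi run only iterates over n tokens, the per-iteration work stays small even when the full response has N ≫ n tokens.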

(2) Training with consistency and AR losses:

The authors jointly optimize the two losses to tune CLLMs. The consistency loss ensures that multiple tokens are predicted at once, while the AR loss prevents the CLLM from deviating from the target LLM, preserving generation quality.

Figure 4: Illustration of consistency training for one-step convergence: the target LLM is tuned to always predict the fixed point when given any state on the Jacobi trajectory as input.

Consistency and AR losses:

(1) Consistency loss

Let $p$ denote the target LLM.

Let $q_\theta(\cdot \mid x)$ denote the CLLM with parameters $\theta$ initialized from $p$.

For a prompt $x$ and its Jacobi trajectory $\mathcal{J}$, let $\mathbf{y}$ and $\mathbf{y}^*$ denote a random state on the trajectory and the fixed point, respectively.

The CLLM can be trained to output $\mathbf{y}^*$ when the input is $\mathbf{y}$ by minimizing the following loss, called the global consistency (GC) loss:

$$\mathcal{L}_{\text{GC}} = \mathbb{E}_{(x, \mathcal{J}) \sim \mathcal{D},\, \mathbf{y} \sim \mathcal{J}} \left[ \sum_{i=1}^{n} D\big( q_{\theta^-}(\cdot \mid \mathbf{y}^*_{:i}, x) \,\|\, q_{\theta}(\cdot \mid \mathbf{y}_{:i}, x) \big) \right]$$

In this formula, $\theta^- = \text{stopgrad}(\theta)$, and the authors slightly abuse notation, using $\sim$ to denote uniform sampling from the dataset $\mathcal{D}$.

$D(\cdot \| \cdot)$ denotes the distance between two distributions; possible choices are discussed in the GKD method, and this article mainly uses forward KL.
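To make the GC objective concrete, here is a framework-free Python sketch for a single sample. It assumes the per-position next-token distributions have already been computed: `teacher[i]` is the distribution predicted from the fixed-point prefix (stop-gradient side) and `student[i]` the one predicted from the trajectory-state prefix. With no autodiff here, the stop-gradient is only noted in a comment; real training would use a deep learning framework.

```python
import math
from typing import Sequence

Dist = Sequence[float]  # probability vector over a (toy) vocabulary


def forward_kl(p: Dist, q: Dist, eps: float = 1e-12) -> float:
    """Forward KL(p || q) = sum_v p(v) * log(p(v) / q(v)).

    Terms where p(v) == 0 contribute nothing; eps guards against q(v) == 0.
    """
    return sum(pv * math.log(pv / max(qv, eps)) for pv, qv in zip(p, q) if pv > 0)


def global_consistency_loss(teacher: Sequence[Dist], student: Sequence[Dist]) -> float:
    """GC loss for one (x, y, y*) sample, summed over the n positions.

    teacher[i]: distribution predicted from the fixed-point prefix y*_{:i}
                (a constant target, i.e. stop-gradient in real training).
    student[i]: distribution predicted from the trajectory-state prefix y_{:i}.
    """
    if len(teacher) != len(student):
        raise ValueError("teacher and student must cover the same n positions")
    return sum(forward_kl(p, q) for p, q in zip(teacher, student))
```

Averaging this quantity over trajectory states sampled uniformly from the dataset gives a Monte Carlo estimate of the expectation in the GC formula.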

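Alternatively, following the formulation of consistency models, a local consistency (LC) loss can be used, in which adjacent states $(\mathbf{y}^{(j)}, \mathbf{y}^{(j+1)})$ in the Jacobi trajectory $\mathcal{J}$ are driven to produce the same output:

$$\mathcal{L}_{\text{LC}} = \mathbb{E}_{(x, \mathcal{J}) \sim \mathcal{D},\, (\mathbf{y}^{(j)}, \mathbf{y}^{(j+1)}) \sim \mathcal{J}} \left[ \sum_{i=1}^{n} D\big( q_{\theta^-}(\cdot \mid \mathbf{y}^{(j+1)}_{:i}, x) \,\|\, q_{\theta}(\cdot \mid \mathbf{y}^{(j)}_{:i}, x) \big) \right]$$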
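The GC and LC objectives differ only in which state supplies the target distribution. Assuming a trajectory is stored as a list of states ending at the fixed point, a minimal sketch of how the (input state, target state) pairs would be formed for each objective:

```python
from typing import List, Sequence, Tuple

State = Sequence[int]


def gc_pairs(trajectory: List[State]) -> List[Tuple[State, State]]:
    """GC: every state y on the trajectory is paired with the fixed point y*."""
    fixed_point = trajectory[-1]
    return [(state, fixed_point) for state in trajectory]


def lc_pairs(trajectory: List[State]) -> List[Tuple[State, State]]:
    """LC: each state y^(j) is paired with its successor y^(j+1)."""
    return list(zip(trajectory[:-1], trajectory[1:]))
```

In both cases the first element of a pair is the input to the model being trained, and the second, with gradients stopped, provides the target distribution.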

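(2) AR loss:

To avoid deviating from the distribution of the target LLM, the authors add the conventional AR loss on the generation $l$ of the target LLM $p$:

$$\mathcal{L}_{\text{AR}} = \mathbb{E}_{(x, l) \sim \mathcal{D}} \left[ - \sum_{i=1}^{N} \log q_{\theta}(l_i \mid l_{:i}, x) \right]$$

Combining the two with a weight $\omega$, the total loss for training the CLLM is:

$$\mathcal{L}(\theta) = \mathcal{L}_{\text{consistency}} + \omega \, \mathcal{L}_{\text{AR}}$$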
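A minimal sketch of how the two terms combine for a single sample. It assumes the probabilities the CLLM assigns to each target-LLM token have already been computed; the weight ω (written `w`) is treated as a free hyperparameter, since this article does not report its value.

```python
import math
from typing import Sequence


def ar_loss(target_token_probs: Sequence[float]) -> float:
    """Negative log-likelihood of the target LLM's generation l under the CLLM.

    target_token_probs[i] is the probability the CLLM assigns to token l_i
    given the prompt x and the preceding tokens l_{:i}.
    """
    return -sum(math.log(p) for p in target_token_probs)


def total_loss(consistency_loss: float, ar: float, w: float) -> float:
    """L(theta) = L_consistency + w * L_AR, with w a tunable weight."""
    return consistency_loss + w * ar
```

Here `consistency_loss` would be either the GC or the LC value; the AR term anchors the CLLM to the target model's own outputs.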

Experiments

Results:

In total, the experiments cover three domain-specific tasks, (1) Spider (text-to-SQL), (2) Human-Eval (Python code completion), and (3) GSM8k (math), as well as the broader open-domain conversational challenge MT-bench. Depending on the task, the reported experiments use a fine-tuned coder LLM, Deepseek-coder-7B-instruct, LLaMA-2-7B, or ABEL-7B-001 as the target model. Training and evaluation are performed on NVIDIA A100 40GB servers.

Figure 5: Speedup of CLLMs on different downstream tasks. The results show that "CLLMs are significantly faster than the pre-trained model and achieve speedups comparable to Medusa, but at no additional cost during inference."

Figure 6: Comparison of CLLMs with other baselines on domain-specific tasks (Spider, CSN-Python, GSM8k) and MT-bench. CLLMs achieve similar or even better speedups than Medusa2 while introducing no additional inference cost (measured by FLOPS and memory consumption).

Domain-specific tasks:

Figure 5 shows that, compared with the other baselines (the original target model, Medusa2, and speculative decoding), CLLMs achieve the most significant speedup.

Open-domain conversational challenge (MT-bench):

When a CLLM trained from LLaMA2-7B on the ShareGPT dataset is combined with lookahead decoding, it achieves roughly the same speedup as Medusa2 and comparable scores on MT-bench. However, the CLLM is more adaptable and memory efficient, because it requires neither modifications to the target model's original architecture nor auxiliary components.

Training cost:

The fine-tuning cost of CLLMs is modest. For example, for LLaMA-7B, training on only about 1M tokens is enough to reach a 3.4x speedup on the Spider dataset. When the dataset is large (for example, CodeSearchNet-Python), only 10% of it needs to be used to generate Jacobi trajectories for training CLLMs, yielding a speedup of roughly 2.5x. The total number of training tokens can be estimated as: N = average number of trajectories per prompt × average trajectory length × number of prompts.
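The estimate above is a plain product; a tiny helper makes the units explicit. The inputs in the usage line are purely hypothetical and are not figures reported for CLLMs.

```python
def estimate_training_tokens(avg_trajectories_per_prompt: float,
                             avg_trajectory_length: float,
                             num_prompts: int) -> float:
    """N = avg. trajectories per prompt x avg. trajectory length x number of prompts."""
    return avg_trajectories_per_prompt * avg_trajectory_length * num_prompts


if __name__ == "__main__":
    # Hypothetical inputs for illustration only.
    print(estimate_training_tokens(8, 32, 2_000))
```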

Figure 7: Comparison of the Jacobi trajectories of the target LLM and the CLLM on Spider. Each point on a Jacobi trajectory is a color-coded sequence: tokens that correctly match the AR result are marked in blue, and inaccurate ones in red. The CLLM is more efficient, converging to the fixed point 2x faster than the target LLM. This efficiency can be attributed to the consistency loss, which helps the model learn the structure of the n-token sequence for each given prefix.

The left side of Figure 6 shows that the target LLM usually generates only one correct token per iteration. In contrast, in CLLMs the authors observe a fast-forwarding phenomenon, where multiple consecutive tokens are correctly predicted in a single Jacobi iteration.

In addition, in the target LLM, tokens that are correctly generated early (such as "country" and "H" at indexes 6 and 7 on the left side of Figure 7) are often incorrectly replaced in subsequent iterations.

CLLMs, on the other hand, show the ability to predict correct tokens preemptively and keep them unchanged even when they are preceded by incorrect tokens.

The authors call such tokens "fixed tokens". Together, these two phenomena contribute to the fast convergence of CLLMs in Jacobi decoding, resulting in considerable improvements in generation speed.
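Both phenomena can be measured from a recorded trajectory. The sketch below is a hypothetical analysis helper, not the authors' tooling; it assumes a trajectory is a list of token states ending at the fixed point. A prefix gain above 1 in one iteration corresponds to fast-forwarding, and a fixed token is taken here to mean a correct token that sits behind a still-incorrect position and never changes afterward.

```python
from typing import List, Sequence


def correct_prefix_len(state: Sequence[int], fixed_point: Sequence[int]) -> int:
    """Length of the longest prefix of `state` that already matches the fixed point."""
    k = 0
    while k < len(fixed_point) and state[k] == fixed_point[k]:
        k += 1
    return k


def prefix_gains(trajectory: List[Sequence[int]]) -> List[int]:
    """How far the correct prefix advances at each Jacobi iteration."""
    fixed_point = trajectory[-1]
    lengths = [correct_prefix_len(s, fixed_point) for s in trajectory]
    return [after - before for before, after in zip(lengths, lengths[1:])]


def fixed_token_positions(trajectory: List[Sequence[int]]) -> List[int]:
    """Positions that are correct while an earlier position is still wrong,
    and that stay unchanged in every later iterate."""
    fixed_point = trajectory[-1]
    found = set()
    for j, state in enumerate(trajectory):
        first_wrong = correct_prefix_len(state, fixed_point)
        for i in range(first_wrong + 1, len(fixed_point)):
            if all(later[i] == fixed_point[i] for later in trajectory[j:]):
                found.add(i)
    return sorted(found)
```

For an unmodified target LLM, most gains would be expected to equal 1; larger gains and non-empty fixed-token lists are the signatures of the behavior described above.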

The research team also observed that, through training, CLLMs acquire a key linguistic concept, collocation: "a series of words or terms that co-occur more frequently than would be expected by chance."

Language is not only made up of isolated words, but also relies heavily on specific word pairs. Examples of collocations are abundant in both natural and programming languages.

They include:

Verb + preposition combination (such as "talk to", "remind ... of ...")

Verb + noun structures (e.g. "make a decision", "catch a cold")

Many domain-specific syntactic structures (e.g. "SELECT ... FROM ..." and "if ... else" in programming).

The consistency generation objective enables CLLMs to infer such structures from any point on the Jacobi trajectory, which helps them master a large number of collocations and thus predict multiple words simultaneously, minimizing the number of iteration steps.

Reference link:

https://hao-ai-lab.github.io/blogs/cllm/

The above is the detailed content of 3 times the generation speed and reduced memory costs, an efficient decoding framework that surpasses Medusa2 is finally here. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1665

1665

14

14

1424

1424

52

52

1322

1322

25

25

1270

1270

29

29

1249

1249

24

24

DeepMind robot plays table tennis, and its forehand and backhand slip into the air, completely defeating human beginners

Aug 09, 2024 pm 04:01 PM

DeepMind robot plays table tennis, and its forehand and backhand slip into the air, completely defeating human beginners

Aug 09, 2024 pm 04:01 PM

But maybe he can’t defeat the old man in the park? The Paris Olympic Games are in full swing, and table tennis has attracted much attention. At the same time, robots have also made new breakthroughs in playing table tennis. Just now, DeepMind proposed the first learning robot agent that can reach the level of human amateur players in competitive table tennis. Paper address: https://arxiv.org/pdf/2408.03906 How good is the DeepMind robot at playing table tennis? Probably on par with human amateur players: both forehand and backhand: the opponent uses a variety of playing styles, and the robot can also withstand: receiving serves with different spins: However, the intensity of the game does not seem to be as intense as the old man in the park. For robots, table tennis

The first mechanical claw! Yuanluobao appeared at the 2024 World Robot Conference and released the first chess robot that can enter the home

Aug 21, 2024 pm 07:33 PM

The first mechanical claw! Yuanluobao appeared at the 2024 World Robot Conference and released the first chess robot that can enter the home

Aug 21, 2024 pm 07:33 PM

On August 21, the 2024 World Robot Conference was grandly held in Beijing. SenseTime's home robot brand "Yuanluobot SenseRobot" has unveiled its entire family of products, and recently released the Yuanluobot AI chess-playing robot - Chess Professional Edition (hereinafter referred to as "Yuanluobot SenseRobot"), becoming the world's first A chess robot for the home. As the third chess-playing robot product of Yuanluobo, the new Guoxiang robot has undergone a large number of special technical upgrades and innovations in AI and engineering machinery. For the first time, it has realized the ability to pick up three-dimensional chess pieces through mechanical claws on a home robot, and perform human-machine Functions such as chess playing, everyone playing chess, notation review, etc.

Claude has become lazy too! Netizen: Learn to give yourself a holiday

Sep 02, 2024 pm 01:56 PM

Claude has become lazy too! Netizen: Learn to give yourself a holiday

Sep 02, 2024 pm 01:56 PM

The start of school is about to begin, and it’s not just the students who are about to start the new semester who should take care of themselves, but also the large AI models. Some time ago, Reddit was filled with netizens complaining that Claude was getting lazy. "Its level has dropped a lot, it often pauses, and even the output becomes very short. In the first week of release, it could translate a full 4-page document at once, but now it can't even output half a page!" https:// www.reddit.com/r/ClaudeAI/comments/1by8rw8/something_just_feels_wrong_with_claude_in_the/ in a post titled "Totally disappointed with Claude", full of

At the World Robot Conference, this domestic robot carrying 'the hope of future elderly care' was surrounded

Aug 22, 2024 pm 10:35 PM

At the World Robot Conference, this domestic robot carrying 'the hope of future elderly care' was surrounded

Aug 22, 2024 pm 10:35 PM

At the World Robot Conference being held in Beijing, the display of humanoid robots has become the absolute focus of the scene. At the Stardust Intelligent booth, the AI robot assistant S1 performed three major performances of dulcimer, martial arts, and calligraphy in one exhibition area, capable of both literary and martial arts. , attracted a large number of professional audiences and media. The elegant playing on the elastic strings allows the S1 to demonstrate fine operation and absolute control with speed, strength and precision. CCTV News conducted a special report on the imitation learning and intelligent control behind "Calligraphy". Company founder Lai Jie explained that behind the silky movements, the hardware side pursues the best force control and the most human-like body indicators (speed, load) etc.), but on the AI side, the real movement data of people is collected, allowing the robot to become stronger when it encounters a strong situation and learn to evolve quickly. And agile

ACL 2024 Awards Announced: One of the Best Papers on Oracle Deciphering by HuaTech, GloVe Time Test Award

Aug 15, 2024 pm 04:37 PM

ACL 2024 Awards Announced: One of the Best Papers on Oracle Deciphering by HuaTech, GloVe Time Test Award

Aug 15, 2024 pm 04:37 PM

At this ACL conference, contributors have gained a lot. The six-day ACL2024 is being held in Bangkok, Thailand. ACL is the top international conference in the field of computational linguistics and natural language processing. It is organized by the International Association for Computational Linguistics and is held annually. ACL has always ranked first in academic influence in the field of NLP, and it is also a CCF-A recommended conference. This year's ACL conference is the 62nd and has received more than 400 cutting-edge works in the field of NLP. Yesterday afternoon, the conference announced the best paper and other awards. This time, there are 7 Best Paper Awards (two unpublished), 1 Best Theme Paper Award, and 35 Outstanding Paper Awards. The conference also awarded 3 Resource Paper Awards (ResourceAward) and Social Impact Award (

Li Feifei's team proposed ReKep to give robots spatial intelligence and integrate GPT-4o

Sep 03, 2024 pm 05:18 PM

Li Feifei's team proposed ReKep to give robots spatial intelligence and integrate GPT-4o

Sep 03, 2024 pm 05:18 PM

Deep integration of vision and robot learning. When two robot hands work together smoothly to fold clothes, pour tea, and pack shoes, coupled with the 1X humanoid robot NEO that has been making headlines recently, you may have a feeling: we seem to be entering the age of robots. In fact, these silky movements are the product of advanced robotic technology + exquisite frame design + multi-modal large models. We know that useful robots often require complex and exquisite interactions with the environment, and the environment can be represented as constraints in the spatial and temporal domains. For example, if you want a robot to pour tea, the robot first needs to grasp the handle of the teapot and keep it upright without spilling the tea, then move it smoothly until the mouth of the pot is aligned with the mouth of the cup, and then tilt the teapot at a certain angle. . this

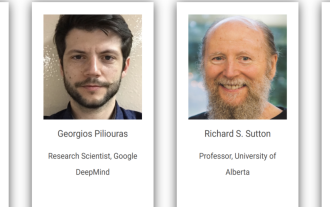

Distributed Artificial Intelligence Conference DAI 2024 Call for Papers: Agent Day, Richard Sutton, the father of reinforcement learning, will attend! Yan Shuicheng, Sergey Levine and DeepMind scientists will give keynote speeches

Aug 22, 2024 pm 08:02 PM

Distributed Artificial Intelligence Conference DAI 2024 Call for Papers: Agent Day, Richard Sutton, the father of reinforcement learning, will attend! Yan Shuicheng, Sergey Levine and DeepMind scientists will give keynote speeches

Aug 22, 2024 pm 08:02 PM

Conference Introduction With the rapid development of science and technology, artificial intelligence has become an important force in promoting social progress. In this era, we are fortunate to witness and participate in the innovation and application of Distributed Artificial Intelligence (DAI). Distributed artificial intelligence is an important branch of the field of artificial intelligence, which has attracted more and more attention in recent years. Agents based on large language models (LLM) have suddenly emerged. By combining the powerful language understanding and generation capabilities of large models, they have shown great potential in natural language interaction, knowledge reasoning, task planning, etc. AIAgent is taking over the big language model and has become a hot topic in the current AI circle. Au

Hongmeng Smart Travel S9 and full-scenario new product launch conference, a number of blockbuster new products were released together

Aug 08, 2024 am 07:02 AM

Hongmeng Smart Travel S9 and full-scenario new product launch conference, a number of blockbuster new products were released together

Aug 08, 2024 am 07:02 AM

This afternoon, Hongmeng Zhixing officially welcomed new brands and new cars. On August 6, Huawei held the Hongmeng Smart Xingxing S9 and Huawei full-scenario new product launch conference, bringing the panoramic smart flagship sedan Xiangjie S9, the new M7Pro and Huawei novaFlip, MatePad Pro 12.2 inches, the new MatePad Air, Huawei Bisheng With many new all-scenario smart products including the laser printer X1 series, FreeBuds6i, WATCHFIT3 and smart screen S5Pro, from smart travel, smart office to smart wear, Huawei continues to build a full-scenario smart ecosystem to bring consumers a smart experience of the Internet of Everything. Hongmeng Zhixing: In-depth empowerment to promote the upgrading of the smart car industry Huawei joins hands with Chinese automotive industry partners to provide