Yann LeCun: ViT is slow and inefficient. Real-time image processing still depends on convolution.

In an era when Transformers dominate everything, is it still worth studying CNNs for computer vision?

At the beginning of this year, OpenAI's large video model Sora made the Vision Transformer (ViT) architecture popular. Since then, there has been an ongoing debate about which is more powerful: ViT or traditional convolutional neural networks (CNNs).

Recently, Yann LeCun, Turing Award winner and Meta chief scientist, who is very active on social media, joined the ViT versus CNN debate.

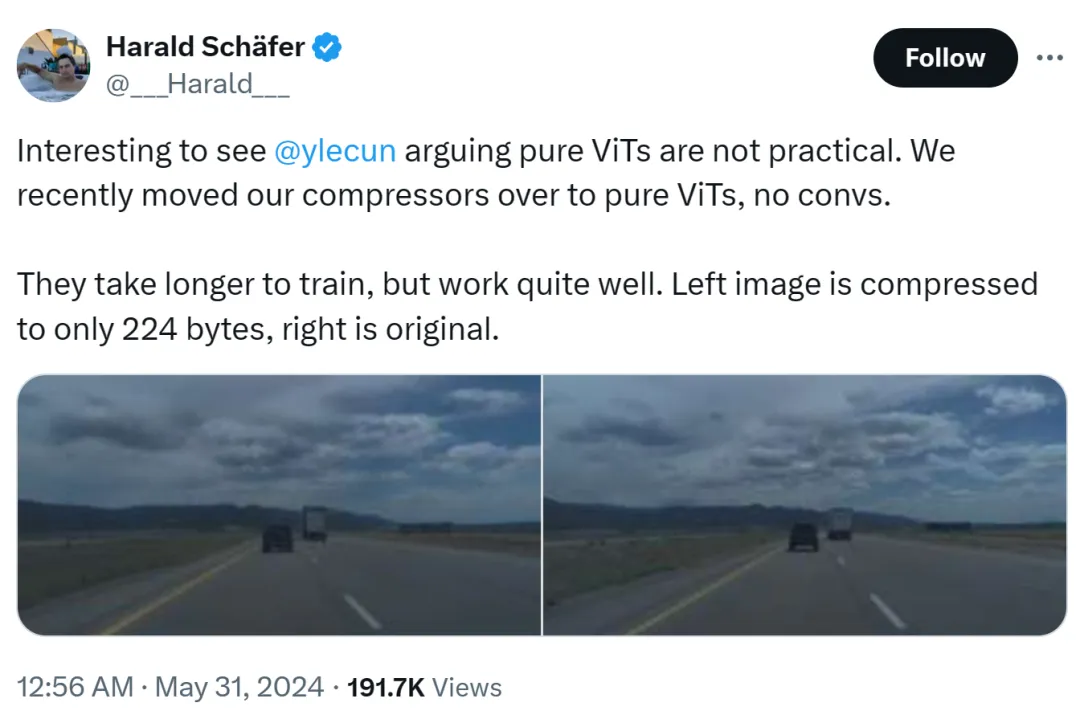

It started when Harald Schäfer, CTO of Comma.ai, showed off his latest research. Like many AI researchers lately, he tagged Yann LeCun, writing that although the Turing Award winner believes pure ViT is not practical, his team recently switched their compressor to pure ViT. There were no quick gains and training takes longer, but the results are very good.

For example, the image on the left is compressed to only 224 bytes, and the right is the original image.

That is only 14×128, which matters a great deal for the world model used for autonomous driving, because it means a large amount of data can be fed in for training. Training in a virtual environment is less expensive than in a real environment, where agents need to be trained according to policies to work properly. Higher resolutions would make virtual training work better, but the simulator would become very slow, so compression is necessary for now.
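The two figures quoted above, 224 bytes and 14×128, agree if 14×128 is read as 128 tokens of 14 bits each. That reading is an assumption made here for illustration; the source does not say what the two factors stand for. A minimal sketch of the arithmetic, with hypothetical constant names:

```python
# Assumption (not stated in the source): "14×128" means 128 tokens,
# each stored as a 14-bit code.
BITS_PER_TOKEN = 14      # hypothetical name
TOKENS_PER_FRAME = 128   # hypothetical name


def compressed_size_bytes(bits_per_token: int, tokens: int) -> int:
    """Return the payload size in bytes, rounding up to whole bytes."""
    total_bits = bits_per_token * tokens
    return (total_bits + 7) // 8


size = compressed_size_bytes(BITS_PER_TOKEN, TOKENS_PER_FRAME)
print(size)
```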

His demonstration sparked discussion in the AI circle, and Eric Jang, vice president of artificial intelligence at 1X, replied that the results were amazing.

Harald continued to praise ViT: It is a very beautiful architecture.

At this point some commenters piled on: even masters like LeCun sometimes fail to keep up with the pace of innovation.

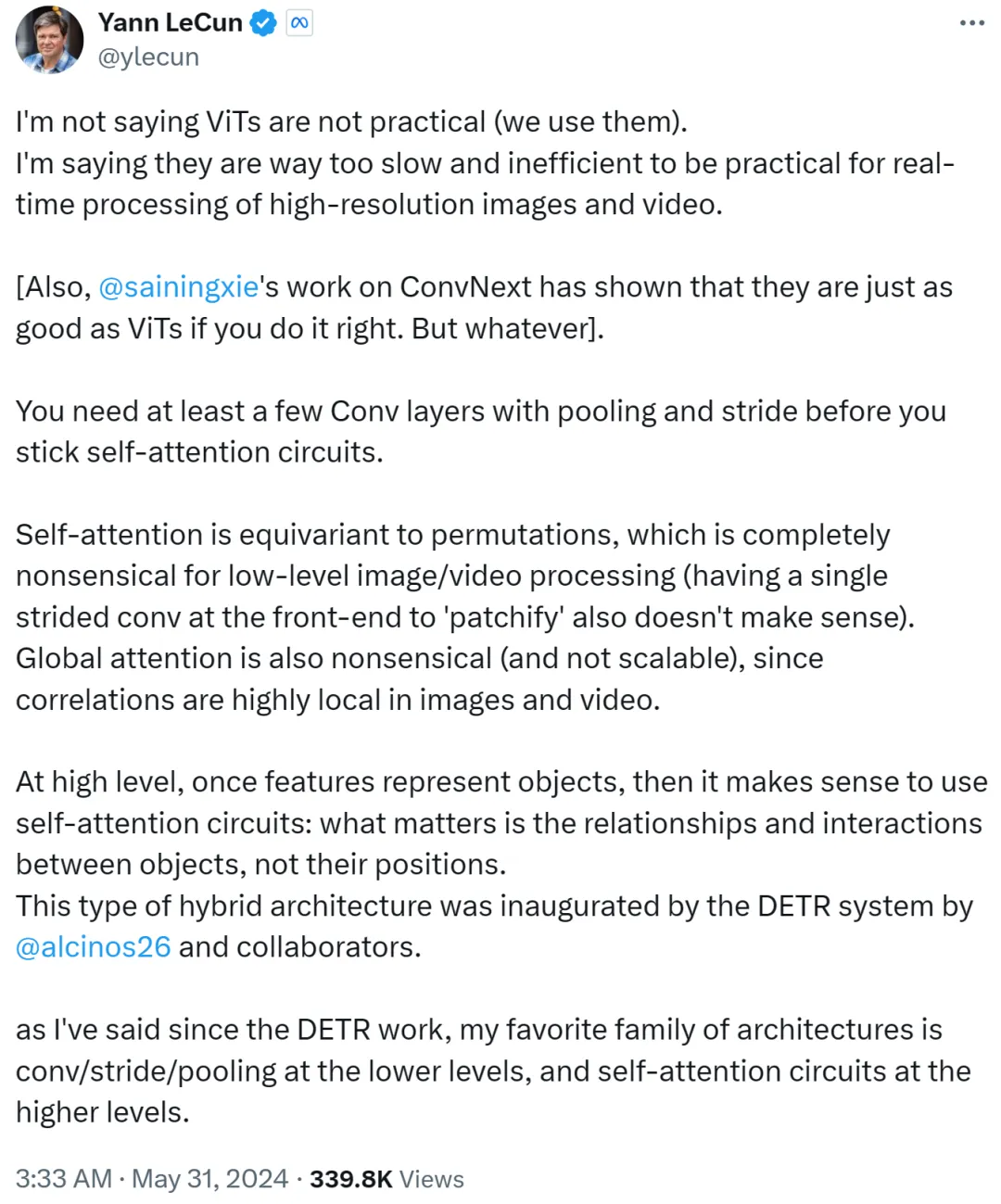

However, Yann LeCun quickly replied that he was not saying ViT is impractical; everyone is using it now. His point is that ViT is too slow and inefficient for real-time processing of high-resolution images and video.

Yann LeCun also mentioned Xie Saining, an assistant professor at New York University, whose ConvNeXt work showed that CNNs can be as good as ViT if done right.

He went on to say that you need at least a few convolutional layers with pooling and stride before adding self-attention circuits.

Self-attention is equivariant to permutations, which makes no sense at all for low-level image or video processing; neither does using a single strided convolution on the front end to "patchify". In addition, since correlations in images and video are highly local, global attention is meaningless and does not scale.
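The permutation property can be checked numerically: without positional encodings, shuffling the input tokens of a self-attention layer shuffles its outputs in exactly the same way, so the layer by itself has no notion of where a pixel or patch sits. The following is a minimal pure-Python sketch under that assumption (single head, random weights, no positional encoding); the helper names are illustrative and not taken from any library.

```python
import math
import random


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens, wq, wk, wv):
    """Single-head self-attention with no positional encoding."""
    q, k, v = matmul(tokens, wq), matmul(tokens, wk), matmul(tokens, wv)
    scale = math.sqrt(len(q[0]))
    scores = [[sum(a * b for a, b in zip(qi, kj)) / scale for kj in k] for qi in q]
    return matmul([softmax(r) for r in scores], v)


def rand_matrix(rows, cols):
    return [[random.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


random.seed(0)
dim, n_tokens = 4, 6
tokens = rand_matrix(n_tokens, dim)
wq, wk, wv = rand_matrix(dim, dim), rand_matrix(dim, dim), rand_matrix(dim, dim)

perm = [3, 0, 5, 1, 4, 2]  # an arbitrary fixed reordering of the tokens

out = self_attention(tokens, wq, wk, wv)
out_shuffled = self_attention([tokens[i] for i in perm], wq, wk, wv)

# Equivariance: permuting the inputs permutes the outputs the same way.
max_diff = max(
    abs(a - b)
    for row_a, row_b in zip([out[i] for i in perm], out_shuffled)
    for a, b in zip(row_a, row_b)
)
print(max_diff < 1e-9)
```

In practice ViT adds positional embeddings to break this symmetry; the sketch only illustrates the property of the bare attention operation.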

At a higher level, once features represent objects, using self-attention circuits makes sense: what matters is the relationships and interactions between objects, not their locations. This hybrid architecture was pioneered by the DETR system from Meta research scientist Nicolas Carion and co-authors.

Yann LeCun said that ever since DETR, his favorite architecture has been convolution/stride/pooling at the low levels and self-attention circuits at the higher levels.

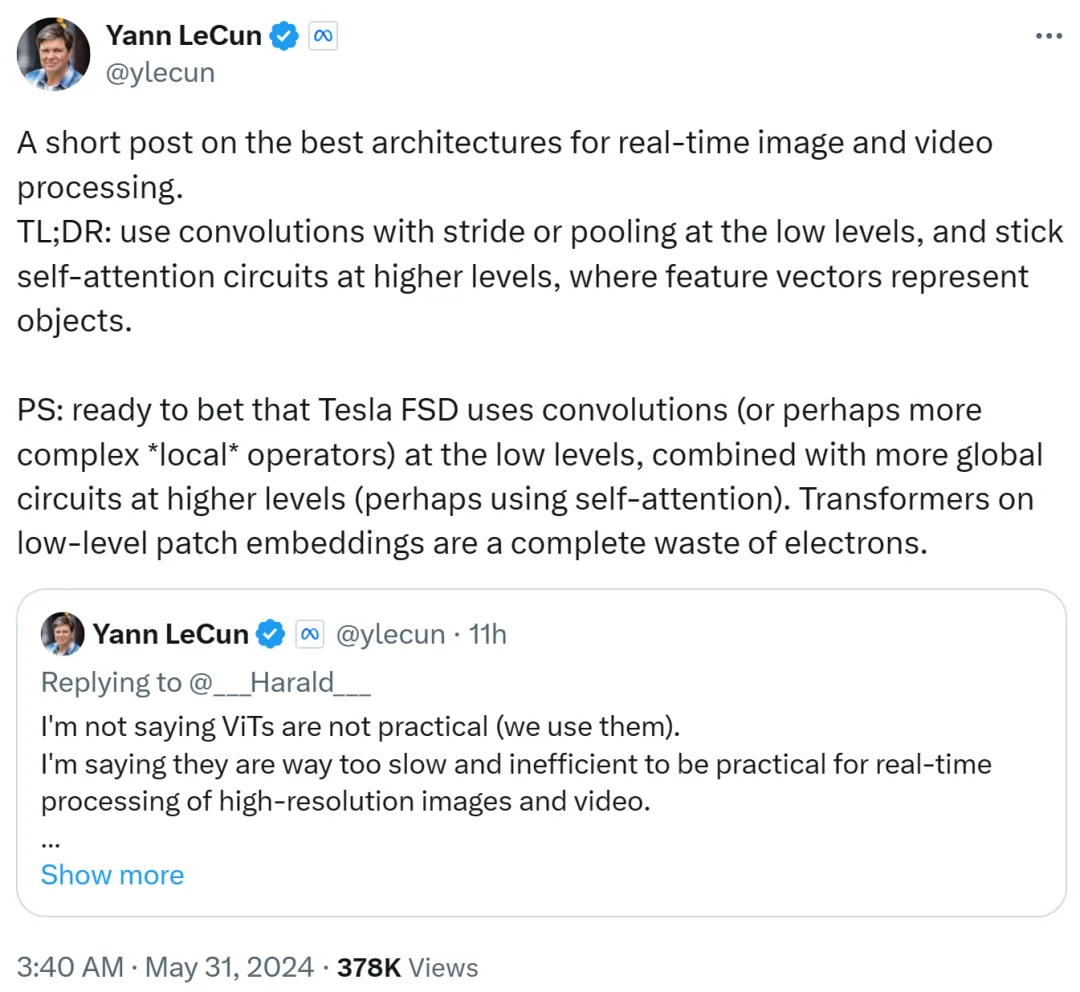

Yann LeCun summed it up in a second post: use convolution with stride or pooling at the low levels, and at the high levels, where feature vectors represent objects, use self-attention circuits.
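A rough way to see why downsampling before attention matters is to count the token pairs that global self-attention has to compare. The sketch below is only a back-of-the-envelope illustration: the strides of 16 (a patchify front end) and 32 (convolution and pooling stages before attention) are hypothetical values chosen for the example, not figures from the discussion.

```python
def token_count(height: int, width: int, total_stride: int) -> int:
    """Number of feature vectors left after downsampling by total_stride."""
    return (height // total_stride) * (width // total_stride)


def attention_pairs(tokens: int) -> int:
    """Global self-attention compares every token with every token."""
    return tokens * tokens


PATCH_STRIDE = 16   # hypothetical: patchify directly on pixels
HYBRID_STRIDE = 32  # hypothetical: conv/stride/pooling stages first

for side in (224, 448, 896):
    patch_tokens = token_count(side, side, PATCH_STRIDE)
    hybrid_tokens = token_count(side, side, HYBRID_STRIDE)
    print(side, attention_pairs(patch_tokens), attention_pairs(hybrid_tokens))
```

Doubling the image side quadruples the token count and therefore multiplies the number of attention pairs by 16, while halving the resolution before attention divides it by 16.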

He also bets that Tesla's Full Self-Driving (FSD) uses convolutions (or more complex local operators) at the low levels and combines them with more global circuits (possibly using self-attention) at the higher levels. In his view, using Transformers on low-level patch embeddings is therefore a complete waste.

In other words, he guesses that even his archrival Musk still takes the convolution route.

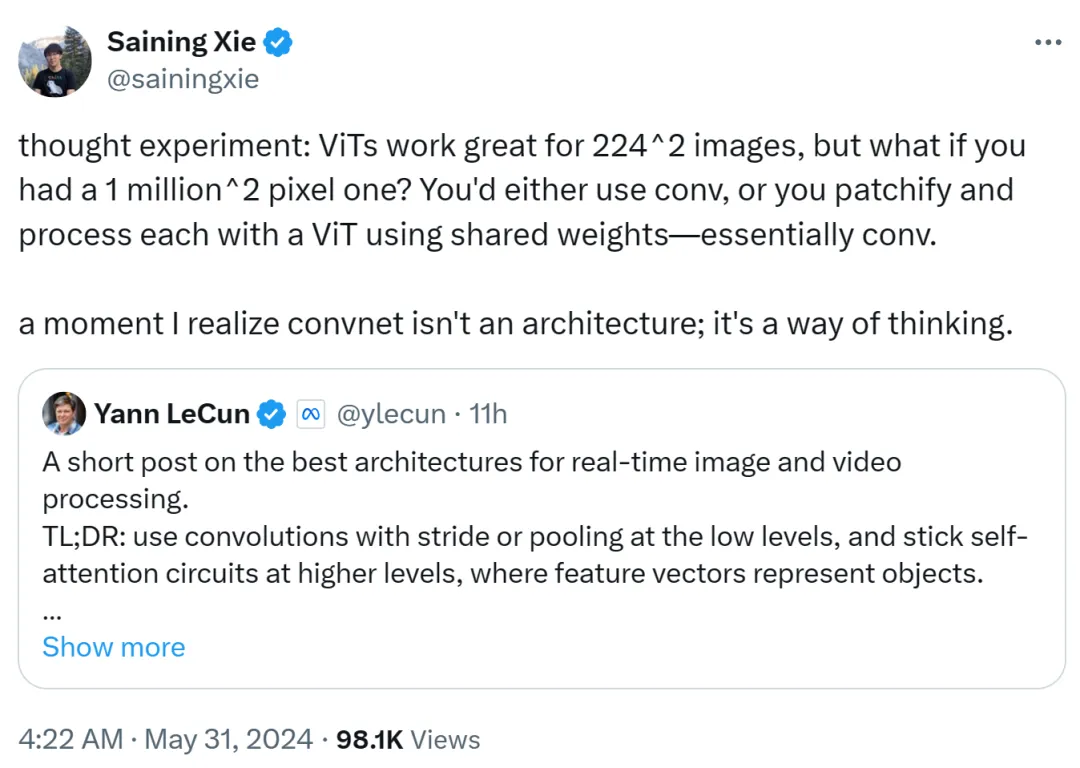

Xie Saining also weighed in. He believes ViT is well suited to low-resolution images of 224x224, but what if the image resolution reaches 1 million x 1 million? At that point you either use convolution, or you split the image into patches and process them with a ViT using shared weights, which is still convolution in nature.

Therefore, Xie Saining said that there was a moment when he realized that the convolutional network was not an architecture, but a way of thinking.
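The remark that patch-wise processing with shared weights is still convolution can be made concrete. In the minimal sketch below, projecting every non-overlapping patch with the same weight vector gives exactly the same result as a convolution whose kernel size and stride both equal the patch size. The function names are illustrative, and a single input and output channel is assumed to keep the example small.

```python
def patch_embed(image, weight, patch):
    """Flatten each non-overlapping patch and project it with shared weights."""
    h, w = len(image), len(image[0])
    out = []
    for top in range(0, h, patch):
        row = []
        for left in range(0, w, patch):
            flat = [image[top + i][left + j] for i in range(patch) for j in range(patch)]
            row.append(sum(x * wt for x, wt in zip(flat, weight)))
        out.append(row)
    return out


def strided_conv2d(image, kernel, stride):
    """2D cross-correlation without padding, with the given stride."""
    k = len(kernel)
    h, w = len(image), len(image[0])
    return [
        [
            sum(image[top + i][left + j] * kernel[i][j] for i in range(k) for j in range(k))
            for left in range(0, w - k + 1, stride)
        ]
        for top in range(0, h - k + 1, stride)
    ]


image = [[4 * r + c for c in range(4)] for r in range(4)]  # 4x4 image, values 0..15
weight = [1, 2, 3, 4]          # shared projection for a flattened 2x2 patch
kernel = [[1, 2], [3, 4]]      # the same weights laid out as a 2x2 kernel

embedded = patch_embed(image, weight, patch=2)
convolved = strided_conv2d(image, kernel, stride=2)
print(embedded == convolved)
```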

Yann LeCun agreed with this view.

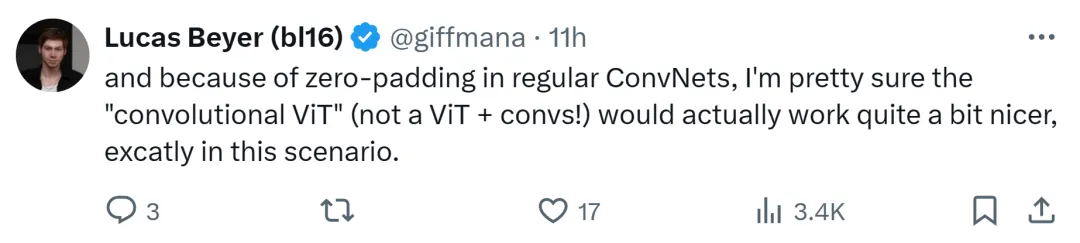

Google DeepMind researcher Lucas Beyer also said that, thanks to the zero padding of conventional convolutional networks, he is quite sure that a "convolutional ViT" (rather than ViT plus convolution) would work well.

It is foreseeable that this debate between ViT and CNN will continue until another, more powerful architecture emerges.
