Write a simple web crawler in Python to capture videos

Judging from the comments on the previous article, many readers are most interested in the crawler's source code. So this article records in detail how to write a simple web crawler in Python that captures video download resources, walking through almost every step. I hope it is helpful.

I first came into contact with crawlers in May of this year, when I wrote a blog search engine. The crawler used there was fairly intelligent, at least much more so than the one used by the website Here Comes the Movie!

Back to the topic of writing crawlers in Python.

Python has always been my main scripting language, bar none. The language is simple and flexible, and its standard library is powerful. It can serve as a calculator and handle text encoding conversion, image processing, batch downloading, batch text processing, and so on. In short, I like it very much, and the more I use it, the more fluent I become. I don't tell most people about such a useful tool...

Thanks to its powerful string handling and to modules such as urllib2, cookielib, re, and threading, writing a crawler in Python is really easy. How easy? I once told a classmate that the crawlers and assorted scripts I used to organize the movie data totaled no more than 1,000 lines, and the website Here Comes the Movie itself had only about 150 lines of code. Because the crawler code is on another machine, a 64-bit Hackintosh, I will not list it here. The website code, which lives on the VPS, is written with the tornadoweb framework.

Let's go straight to the process of writing the crawler. The following content is for communication and learning purposes only and has no other meaning.

Take the latest video download resources of a certain bay as an example. The URL is

http://piratebay.se/browse/200

Because this page carries a lot of advertisements, I will only show part of its main content.

For a Python crawler, one line of code is enough to download the source of this page. The urllib2 library is used here.
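As a minimal sketch of that download step: urllib2 exists only in Python 2, so this version uses its Python 3 successor, `urllib.request`. The helper name `fetch_page`, the 10-second timeout, and the UTF-8 decoding are assumptions made for illustration, not part of the original script.

```python
from urllib.request import urlopen


def fetch_page(url, timeout=10):
    """Download a page and return its body as text."""
    with urlopen(url, timeout=timeout) as response:
        raw = response.read()
    # Assumption: the page is UTF-8; undecodable bytes are replaced
    # instead of raising an error.
    return raw.decode("utf-8", errors="replace")
```

Calling `fetch_page("http://piratebay.se/browse/200")` would return the page source as one string, provided the site is reachable from your network.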

Of course, you can also call the wget command through the system function in the os module to download page content, which is very convenient for anyone already familiar with wget or curl.

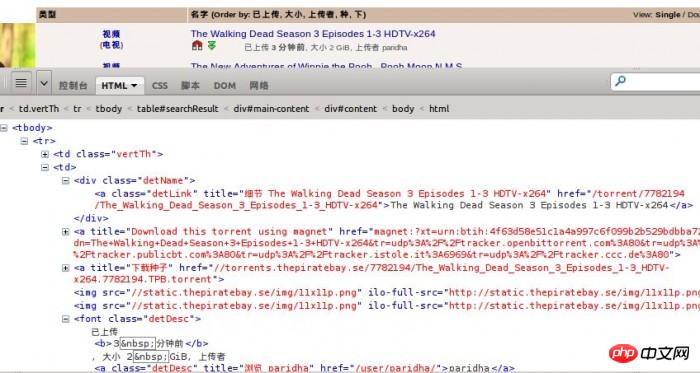

Inspecting the page structure with Firebug shows that the main content is an HTML table, and each resource is a tr tag.

For each resource, the information that needs to be extracted is:

1. Video category

2. Resource name

3. Resource link

4. Resource size

5. Upload time

That is enough; you can add more fields if necessary.

First, pull out the code of one tr tag and look at it.

The following uses regular expressions to extract content from the HTML code. Readers who are not familiar with regular expressions can learn more at http://docs.python.org/2/library/re.html.

There is a reason I use regular expressions instead of a tool that parses HTML or builds a DOM tree. I tried extracting the content with BeautifulSoup3 before, but it turned out to be really slow: processing 100 items per second was already the limit of my computer... After switching to regular expressions, compiled once before processing the content, the speed was in a different league!

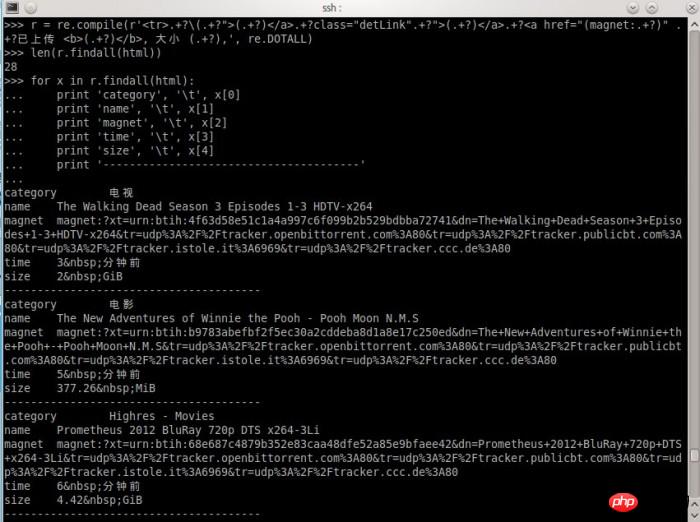

How do I write a regular expression that extracts this much content?

In my experience, ".*?" or ".+?" is very useful. But there are a few small pitfalls to watch for; you will discover them once you actually use it.
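To illustrate why the non-greedy form matters, here is a small sketch on a made-up two-link snippet (the HTML is hypothetical, not taken from the page). It also shows one common pitfall, which may or may not be the one the author had in mind: "." does not match a newline unless the `re.S` flag is set.

```python
import re

# Hypothetical snippet, invented for this illustration.
html = '<a href="/x">TV</a> <a href="/y">Movies</a>'

# Greedy: ".*" runs to the LAST '">' it can reach, swallowing both links.
greedy = re.findall(r'<a href="(.*)">', html)

# Non-greedy: ".*?" stops at the FIRST '">', giving one match per link.
lazy = re.findall(r'<a href="(.*?)">', html)

# Pitfall: "." skips newlines by default, so a cell that spans several
# lines is missed unless re.S (DOTALL) is passed.
multiline = '<td>\nTV\n</td>'
without_flag = re.findall(r'<td>(.*?)</td>', multiline)
with_flag = re.findall(r'<td>(.*?)</td>', multiline, re.S)
```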

For the tr tag code above, I first make the expression match the opening `<tr>` tag, which marks the beginning of the content. It could be anything else, as long as the required content is not skipped. The next thing to match is the following, which gives the video category:

(TV)

Then I match the resource link, and then the other resource information:

<font class="detDesc">Uploaded 3 minutes ago, size 2 GiB, uploaded by

Finally, match the closing `</tr>` tag, and it's done!

Of course, the final match does not need to be written into the regular expression. As long as the starting position is anchored correctly, the positions of the fields captured after it will also be correct.

Readers who know regular expressions well probably already see how to write it. Here is the expression and the processing I wrote.

It's that simple: the results came out, and I was quite pleased with myself.
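The following is a minimal sketch of this kind of extraction, not the author's original expression. `SAMPLE_ROW` is a hypothetical tr fragment loosely modelled on the pieces quoted above (the category link, a `detLink` anchor, and the `detDesc` line); the real page markup may differ, so the pattern would need adjusting against the actual HTML.

```python
import re

# Hypothetical row, invented for illustration; not copied from the site.
SAMPLE_ROW = '''<tr>
<td class="vertTh"><a href="/browse/205" title="More from this category">TV</a></td>
<td><a href="/torrent/123/Example.Show.S01E01" class="detLink">Example.Show.S01E01</a>
<font class="detDesc">Uploaded 3 minutes ago, size 2 GiB, uploaded by someone</font></td>
</tr>'''

# Compiled once and reused for every row. Each ".*?" skips ahead to the
# next landmark; re.S lets "." cross the line breaks inside a row.
ROW_RE = re.compile(
    r'<tr>.*?'
    r'<a href="/browse/\d+"[^>]*>(?P<category>.*?)</a>.*?'
    r'<a href="(?P<link>[^"]+)" class="detLink"[^>]*>(?P<name>.*?)</a>.*?'
    r'<font class="detDesc">Uploaded (?P<uploaded>.*?), size (?P<size>.*?),',
    re.S,
)


def parse_rows(html):
    """Return one dict of the five fields per matching table row."""
    return [m.groupdict() for m in ROW_RE.finditer(html)]


rows = parse_rows(SAMPLE_ROW)
```

As the text notes, the closing `</tr>` is left out of the pattern: once the start of a row is anchored, the fields that follow are found in order.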

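Of course, a crawler designed this way is targeted: it crawls the content of one specific site. And no crawler can get away without filtering the links it collects. Typically, BFS (breadth-first search) is used to crawl all the page links of a website.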
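A minimal sketch of BFS crawling with link filtering, under stated assumptions: `fetch` is any callable that returns a page's HTML for a URL, `allow` is the filter deciding which collected links are kept, and the simple `href` pattern stands in for real link extraction. All of these names are hypothetical and not from the original crawler.

```python
import re
from collections import deque
from urllib.parse import urljoin

# Deliberately simple link pattern; real pages may need something sturdier.
LINK_RE = re.compile(r'href="([^"]+)"')


def bfs_crawl(start_url, fetch, allow, max_pages=100):
    """Visit pages breadth-first and return the URLs in visit order."""
    seen = {start_url}
    queue = deque([start_url])
    order = []
    while queue and len(order) < max_pages:
        url = queue.popleft()          # FIFO queue gives breadth-first order
        order.append(url)
        for href in LINK_RE.findall(fetch(url)):
            link = urljoin(url, href)  # resolve relative links
            if link not in seen and allow(link):
                seen.add(link)
                queue.append(link)
    return order
```

The `seen` set keeps each page from being queued twice, and `max_pages` bounds the crawl so that it terminates even on a very large site.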

The complete Python crawler code, which crawls the latest 10 pages of video resources from a certain bay:

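The code above only demonstrates the idea. The version I actually run uses a MongoDB database, and you may not get normal results if the site of a certain bay cannot be reached.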
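As a rough stand-in for the same idea (not the author's original listing, and without the MongoDB storage), the sketch below loops over 10 listing pages and collects the parsed rows in a list. The paging scheme `BASE/N`, the row pattern, and the names `fetch_page` and `crawl` are assumptions for illustration; it is written for Python 3, so `urllib.request` replaces urllib2.

```python
import re
from urllib.request import urlopen

# Hypothetical paging scheme: page N of the listing is assumed to be BASE/N.
BASE = "http://piratebay.se/browse/200"

# Assumed row layout; adjust against the real page markup.
ROW_RE = re.compile(
    r'<tr>.*?<a href="/browse/\d+"[^>]*>(?P<category>.*?)</a>.*?'
    r'<a href="(?P<link>[^"]+)" class="detLink"[^>]*>(?P<name>.*?)</a>.*?'
    r'<font class="detDesc">Uploaded (?P<uploaded>.*?), size (?P<size>.*?),',
    re.S,
)


def fetch_page(url):
    """Download one page as text (UTF-8 assumed)."""
    with urlopen(url, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")


def crawl(pages=10, fetch=fetch_page):
    """Collect the resources listed on the first `pages` listing pages."""
    resources = []
    for page in range(pages):
        url = "%s/%d" % (BASE, page)
        try:
            html = fetch(url)
        except OSError as exc:  # URLError and socket timeouts are OSErrors
            print("skipping", url, exc)
            continue
        resources.extend(m.groupdict() for m in ROW_RE.finditer(html))
    return resources
```

Passing `fetch` as a parameter keeps the loop testable without network access; a storage step such as a database insert could replace the list append.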

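So the crawler behind the Here Comes the Movie website is not hard to write. The hard part is organizing the data once you have it and pulling out the useful information: for example, how to match a film's information with a resource, and how to link the film information database with the video links. All of this takes repeated experiments with different methods before settling on the most reliable one.

A reader once emailed me, willing to pay for my crawler's source code.

If I had really handed it over, just a few hundred lines of code on a single sheet of A4 paper, wouldn't he have said, "What a rip-off!!!"...

Everyone says this is the age of information explosion, so what counts is who has the stronger data mining ability.

All right, so here comes the question: which school is the best place to learn excavator (data mining) technology?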
