Beyond JavaScript - Why + doesn&#t equal in programming

JavaScript is frequently ridiculed when developers first encounter this seemingly baffling result:

0.1 + 0.2 == 0.30000000000000004

Memes about JavaScript's handling of numbers are widespread, often leading many to believe that this behaviour is unique to the language.

However, this quirk isn't just limited to JavaScript. It is a consequence of how most programming languages handle floating-point arithmetic.

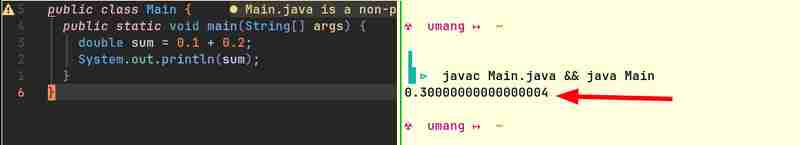

For instance, here are code snippets from Java and Go that produce similar results:

Computers can natively only store integers. They don't understand fractions. (How will they? The only way computers can do arithmetic is by turning some lights on or off. The light can either be on or off. It can't be "half" on!) They need some way of representing floating point numbers. Since this representation is not perfectly accurate, more often than not, 0.1 + 0.2 does not equal 0.3.

All fractions whose denominators are made of prime factors of the number system's base can be cleanly expressed while any other fractions would have repeating decimals. For example, in the number system with base 10, fractions like 1/2, 1/4, 1/5, 1/10 are cleanly represented because the denominators in each case are made up of 2 or 5 - the prime factors of 10. However, fractions like 1/3, 1/6, 1/7 all have recurring decimals.

Similarly, in the binary system fractions like 1/2, 1/4, 1/8 are cleanly expressed while all other fractions have recurring decimals. When you perform arithmetic on these recurring decimals, you end up with leftovers which carry over when you convert the computer's binary representation of numbers to a human readable base-10 representation. This is what leads to approximately correct results.

Now that we've established that this problem is not exclusive to JavaScript, let's explore how floating-point numbers are represented and processed under the hood to understand why this behaviour occurs.

In order to understand how floating point numbers are represented and processed under the hood, we would first have to understand the IEEE 754 floating point standard.

IEEE 754 standard is a widely used specification for representing and performing arithmetic on floating-point numbers in computer systems. It was created to guarantee consistency when using floating-point arithmetic on various computing platforms. Most programming languages and hardware implementations (CPUs, GPUs, etc.) adhere to this standard.

This is how a number is denoted in IEEE 754 format:

Here s is the sign bit (0 for positive, 1 for negative), M is the mantissa (holds the digits of the number) and E is the exponent which determines the scale of the number.

You would not be able to find any integer values for M and E that can exactly represent numbers like 0.1, 0.2 or 0.3 in this format. We can only pick values for M and E that give the closest result.

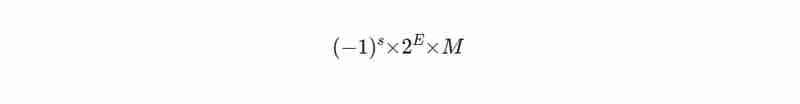

Here is a tool you could use to determine the IEEE 754 notations of decimal numbers: https://www.h-schmidt.net/FloatConverter/IEEE754.html

IEEE 754 notation of 0.25:

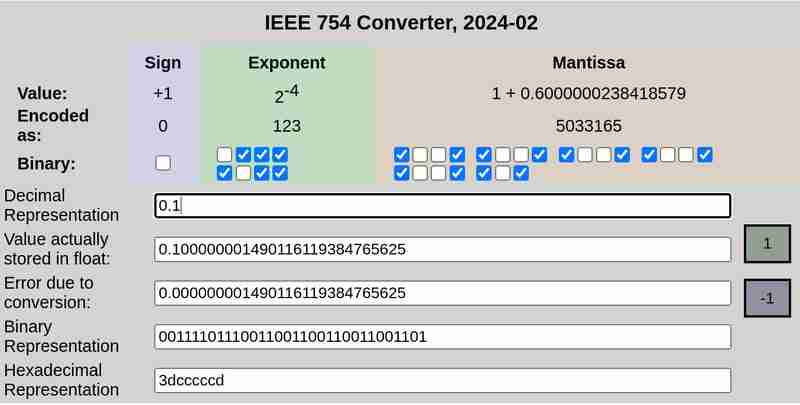

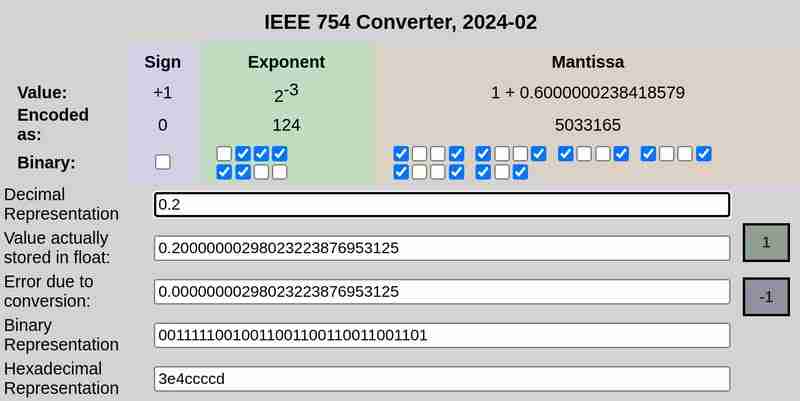

IEEE 754 notation of 0.1 and 0.2 respectively:

Please note that the error due to conversion in case of 0.25 was 0, while 0.1 and 0.2 had non-zero errors.

IEEE 754 defines the following formats for representing floating-point numbers:

Single-precision (32-bit): 1 bit for sign, 8 bits for exponent, 23 bits for mantissa

Double-precision (64-bit): 1 bit for sign, 11 bits for exponent, 52 bits for mantissa

For the sake of simplicity, let us consider the single-precision format that uses 32 bits.

The 32 bit representation of 0.1 is:

0 01111011 10011001100110011001101

Here the first bit represents the sign (0 which means positive in this case), the next 8 bits (01111011) represent the exponent and the final 23 bits (10011001100110011001101) represent the mantissa.

This is not an exact representation. It represents ≈ 0.100000001490116119384765625

Similarly, the 32 bit representation of 0.2 is:

0 01111100 10011001100110011001101

This is not an exact representation either. It represents ≈ 0.20000000298023223876953125

When added, this results in:

0 01111101 11001101010011001100110

which is ≈ 0.30000001192092896 in decimal representation.

In conclusion, the seemingly perplexing result of 0.1 + 0.2 not yielding 0.3 is not an anomaly specific to JavaScript, but a consequence of the limitations of floating-point arithmetic across programming languages. The roots of this behaviour lie in the binary representation of numbers, which inherently leads to precision errors when handling certain fractions.

以上是Beyond JavaScript - Why + doesn&#t equal in programming的详细内容。更多信息请关注PHP中文网其他相关文章!

热AI工具

Undresser.AI Undress

人工智能驱动的应用程序,用于创建逼真的裸体照片

AI Clothes Remover

用于从照片中去除衣服的在线人工智能工具。

Undress AI Tool

免费脱衣服图片

Clothoff.io

AI脱衣机

Video Face Swap

使用我们完全免费的人工智能换脸工具轻松在任何视频中换脸!

热门文章

热工具

记事本++7.3.1

好用且免费的代码编辑器

SublimeText3汉化版

中文版,非常好用

禅工作室 13.0.1

功能强大的PHP集成开发环境

Dreamweaver CS6

视觉化网页开发工具

SublimeText3 Mac版

神级代码编辑软件(SublimeText3)

神秘的JavaScript:它的作用以及为什么重要

Apr 09, 2025 am 12:07 AM

神秘的JavaScript:它的作用以及为什么重要

Apr 09, 2025 am 12:07 AM

JavaScript是现代Web开发的基石,它的主要功能包括事件驱动编程、动态内容生成和异步编程。1)事件驱动编程允许网页根据用户操作动态变化。2)动态内容生成使得页面内容可以根据条件调整。3)异步编程确保用户界面不被阻塞。JavaScript广泛应用于网页交互、单页面应用和服务器端开发,极大地提升了用户体验和跨平台开发的灵活性。

JavaScript的演变:当前的趋势和未来前景

Apr 10, 2025 am 09:33 AM

JavaScript的演变:当前的趋势和未来前景

Apr 10, 2025 am 09:33 AM

JavaScript的最新趋势包括TypeScript的崛起、现代框架和库的流行以及WebAssembly的应用。未来前景涵盖更强大的类型系统、服务器端JavaScript的发展、人工智能和机器学习的扩展以及物联网和边缘计算的潜力。

JavaScript引擎:比较实施

Apr 13, 2025 am 12:05 AM

JavaScript引擎:比较实施

Apr 13, 2025 am 12:05 AM

不同JavaScript引擎在解析和执行JavaScript代码时,效果会有所不同,因为每个引擎的实现原理和优化策略各有差异。1.词法分析:将源码转换为词法单元。2.语法分析:生成抽象语法树。3.优化和编译:通过JIT编译器生成机器码。4.执行:运行机器码。V8引擎通过即时编译和隐藏类优化,SpiderMonkey使用类型推断系统,导致在相同代码上的性能表现不同。

Python vs. JavaScript:学习曲线和易用性

Apr 16, 2025 am 12:12 AM

Python vs. JavaScript:学习曲线和易用性

Apr 16, 2025 am 12:12 AM

Python更适合初学者,学习曲线平缓,语法简洁;JavaScript适合前端开发,学习曲线较陡,语法灵活。1.Python语法直观,适用于数据科学和后端开发。2.JavaScript灵活,广泛用于前端和服务器端编程。

JavaScript:探索网络语言的多功能性

Apr 11, 2025 am 12:01 AM

JavaScript:探索网络语言的多功能性

Apr 11, 2025 am 12:01 AM

JavaScript是现代Web开发的核心语言,因其多样性和灵活性而广泛应用。1)前端开发:通过DOM操作和现代框架(如React、Vue.js、Angular)构建动态网页和单页面应用。2)服务器端开发:Node.js利用非阻塞I/O模型处理高并发和实时应用。3)移动和桌面应用开发:通过ReactNative和Electron实现跨平台开发,提高开发效率。

如何使用Next.js(前端集成)构建多租户SaaS应用程序

Apr 11, 2025 am 08:22 AM

如何使用Next.js(前端集成)构建多租户SaaS应用程序

Apr 11, 2025 am 08:22 AM

本文展示了与许可证确保的后端的前端集成,并使用Next.js构建功能性Edtech SaaS应用程序。 前端获取用户权限以控制UI的可见性并确保API要求遵守角色库

使用Next.js(后端集成)构建多租户SaaS应用程序

Apr 11, 2025 am 08:23 AM

使用Next.js(后端集成)构建多租户SaaS应用程序

Apr 11, 2025 am 08:23 AM

我使用您的日常技术工具构建了功能性的多租户SaaS应用程序(一个Edtech应用程序),您可以做同样的事情。 首先,什么是多租户SaaS应用程序? 多租户SaaS应用程序可让您从唱歌中为多个客户提供服务

从C/C到JavaScript:所有工作方式

Apr 14, 2025 am 12:05 AM

从C/C到JavaScript:所有工作方式

Apr 14, 2025 am 12:05 AM

从C/C 转向JavaScript需要适应动态类型、垃圾回收和异步编程等特点。1)C/C 是静态类型语言,需手动管理内存,而JavaScript是动态类型,垃圾回收自动处理。2)C/C 需编译成机器码,JavaScript则为解释型语言。3)JavaScript引入闭包、原型链和Promise等概念,增强了灵活性和异步编程能力。