Sqoop安装配置及演示

Sqoop是一个用来将Hadoop(Hive、HBase)和关系型数据库中的数据相互转移的工具,可以将一个关系型数据库(例如:MySQL ,Oracle ,Postgres等)中的数据导入到Hadoop的HDFS中,也可以将HDFS的数据导入到关系型数据库中。Sqoop目前已经是Apache的顶级项目了,

Sqoop是一个用来将Hadoop(Hive、HBase)和关系型数据库中的数据相互转移的工具,可以将一个关系型数据库(例如:MySQL ,Oracle ,Postgres等)中的数据导入到Hadoop的HDFS中,也可以将HDFS的数据导入到关系型数据库中。 Sqoop目前已经是Apache的顶级项目了,目前版本是1.4.4 和 Sqoop2 1.99.3,本文以1.4.4的版本为例讲解基本的安装配置和简单应用的演示。- 安装配置

- 准备测试数据

- 导入数据到HDFS

- 导入数据到Hive

- 导入数据到HBase

sqoop-1.4.4.bin__hadoop-2.0.4-alpha.tar.gz

1.1、下载后解压配置:

tar -zxvf sqoop-1.4.4.bin__hadoop-2.0.4-alpha.tar.gz /usr/local/ cd /usr/local ln -s sqoop-1.4.4.bin__hadoop-2.0.4-alpha sqoop

vi ~/.bash_profile :

#Sqoop add by micmiu.com export SQOOP_HOME=/usr/local/sqoop export PATH=$SQOOP_HOME/bin:$PATH

vi ?<sqoop_home>/conf/sqoop-env.sh</sqoop_home>

# 指定各环境变量的实际配置 # Set Hadoop-specific environment variables here. #Set path to where bin/hadoop is available #export HADOOP_COMMON_HOME= #Set path to where hadoop-*-core.jar is available #export HADOOP_MAPRED_HOME= #set the path to where bin/hbase is available #export HBASE_HOME= #Set the path to where bin/hive is available #export HIVE_HOME=

# Hadoop

export HADOOP_PREFIX="/usr/local/hadoop"

export HADOOP_HOME=${HADOOP_PREFIX}

export PATH=$PATH:$HADOOP_PREFIX/bin:$HADOOP_PREFIX/sbin

export HADOOP_COMMON_HOME=${HADOOP_PREFIX}

export HADOOP_HDFS_HOME=${HADOOP_PREFIX}

export HADOOP_MAPRED_HOME=${HADOOP_PREFIX}

export HADOOP_YARN_HOME=${HADOOP_PREFIX}

# Native Path

export HADOOP_COMMON_LIB_NATIVE_DIR=${HADOOP_PREFIX}/lib/native

export HADOOP_OPTS="-Djava.library.path=$HADOOP_PREFIX/lib/native"

# Hadoop end

#Hive

export HIVE_HOME=/usr/local/hive

export PATH=$HIVE_HOME/bin:$PATH

#HBase

export HBASE_HOME=/usr/local/hbase

export PATH=$HBASE

#add by micmiu.com<sqoop_home>/lib</sqoop_home> 目录下。

[二]、测试数据准备

以MySQL 为例:

- 192.168.6.77(hostname:Master.Hadoop)

- database: test

- 用户:root 密码:micmiu

CREATE TABLE `demo_blog` ( `id` int(11) NOT NULL AUTO_INCREMENT, `blog` varchar(100) NOT NULL, PRIMARY KEY (`id`) ) ENGINE=MyISAM DEFAULT CHARSET=utf8;

CREATE TABLE `demo_log` ( `operator` varchar(16) NOT NULL, `log` varchar(100) NOT NULL ) ENGINE=MyISAM DEFAULT CHARSET=utf8;

insert into demo_blog (id, blog) values (1, "micmiu.com");

insert into demo_blog (id, blog) values (2, "ctosun.com");

insert into demo_blog (id, blog) values (3, "baby.micmiu.com");

insert into demo_log (operator, log) values ("micmiu", "create");

insert into demo_log (operator, log) values ("micmiu", "update");

insert into demo_log (operator, log) values ("michael", "edit");

insert into demo_log (operator, log) values ("michael", "delete");sqoop import --connect jdbc:mysql://192.168.6.77/test --username root --password micmiu --table demo_blog

$ sqoop import --connect jdbc:mysql://192.168.6.77/test --username root --password micmiu --table demo_blog Warning: /usr/lib/hcatalog does not exist! HCatalog jobs will fail. Please set $HCAT_HOME to the root of your HCatalog installation. 14/04/09 09:58:43 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead. 14/04/09 09:58:43 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset. 14/04/09 09:58:43 INFO tool.CodeGenTool: Beginning code generation 14/04/09 09:58:43 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `demo_blog` AS t LIMIT 1 14/04/09 09:58:43 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `demo_blog` AS t LIMIT 1 14/04/09 09:58:43 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /usr/local/hadoop Note: /tmp/sqoop-hadoop/compile/e8fd26a5bca5b7f51cdb03bf847ce389/demo_blog.java uses or overrides a deprecated API. Note: Recompile with -Xlint:deprecation for details. 14/04/09 09:58:44 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-hadoop/compile/e8fd26a5bca5b7f51cdb03bf847ce389/demo_blog.jar 14/04/09 09:58:44 WARN manager.MySQLManager: It looks like you are importing from mysql. 14/04/09 09:58:44 WARN manager.MySQLManager: This transfer can be faster! Use the --direct 14/04/09 09:58:44 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path. 14/04/09 09:58:44 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql) 14/04/09 09:58:44 INFO mapreduce.ImportJobBase: Beginning import of demo_blog SLF4J: Class path contains multiple SLF4J bindings. SLF4J: Found binding in [jar:file:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/usr/local/hbase-0.98.0-hadoop2/lib/slf4j-log4j12-1.6.4.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation. SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory] 14/04/09 09:58:44 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar 14/04/09 09:58:45 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps 14/04/09 09:58:45 INFO client.RMProxy: Connecting to ResourceManager at Master.Hadoop/192.168.6.77:8032 14/04/09 09:58:47 INFO db.DataDrivenDBInputFormat: BoundingValsQuery: SELECT MIN(`id`), MAX(`id`) FROM `demo_blog` 14/04/09 09:58:47 INFO mapreduce.JobSubmitter: number of splits:3 14/04/09 09:58:47 INFO Configuration.deprecation: mapred.job.classpath.files is deprecated. Instead, use mapreduce.job.classpath.files 14/04/09 09:58:47 INFO Configuration.deprecation: user.name is deprecated. Instead, use mapreduce.job.user.name 14/04/09 09:58:47 INFO Configuration.deprecation: mapred.cache.files.filesizes is deprecated. Instead, use mapreduce.job.cache.files.filesizes 14/04/09 09:58:47 INFO Configuration.deprecation: mapred.cache.files is deprecated. Instead, use mapreduce.job.cache.files 14/04/09 09:58:47 INFO Configuration.deprecation: mapred.reduce.tasks is deprecated. Instead, use mapreduce.job.reduces 14/04/09 09:58:47 INFO Configuration.deprecation: mapred.output.value.class is deprecated. Instead, use mapreduce.job.output.value.class 14/04/09 09:58:47 INFO Configuration.deprecation: mapreduce.map.class is deprecated. Instead, use mapreduce.job.map.class 14/04/09 09:58:47 INFO Configuration.deprecation: mapred.job.name is deprecated. Instead, use mapreduce.job.name 14/04/09 09:58:47 INFO Configuration.deprecation: mapreduce.inputformat.class is deprecated. Instead, use mapreduce.job.inputformat.class 14/04/09 09:58:47 INFO Configuration.deprecation: mapred.output.dir is deprecated. Instead, use mapreduce.output.fileoutputformat.outputdir 14/04/09 09:58:47 INFO Configuration.deprecation: mapreduce.outputformat.class is deprecated. Instead, use mapreduce.job.outputformat.class 14/04/09 09:58:47 INFO Configuration.deprecation: mapred.cache.files.timestamps is deprecated. Instead, use mapreduce.job.cache.files.timestamps 14/04/09 09:58:47 INFO Configuration.deprecation: mapred.output.key.class is deprecated. Instead, use mapreduce.job.output.key.class 14/04/09 09:58:47 INFO Configuration.deprecation: mapred.working.dir is deprecated. Instead, use mapreduce.job.working.dir 14/04/09 09:58:47 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1396936838233_0001 14/04/09 09:58:47 INFO impl.YarnClientImpl: Submitted application application_1396936838233_0001 to ResourceManager at Master.Hadoop/192.168.6.77:8032 14/04/09 09:58:47 INFO mapreduce.Job: The url to track the job: http://Master.Hadoop:8088/proxy/application_1396936838233_0001/ 14/04/09 09:58:47 INFO mapreduce.Job: Running job: job_1396936838233_0001 14/04/09 09:59:00 INFO mapreduce.Job: Job job_1396936838233_0001 running in uber mode : false 14/04/09 09:59:00 INFO mapreduce.Job: map 0% reduce 0% 14/04/09 09:59:14 INFO mapreduce.Job: map 33% reduce 0% 14/04/09 09:59:16 INFO mapreduce.Job: map 67% reduce 0% 14/04/09 09:59:19 INFO mapreduce.Job: map 100% reduce 0% 14/04/09 09:59:19 INFO mapreduce.Job: Job job_1396936838233_0001 completed successfully 14/04/09 09:59:19 INFO mapreduce.Job: Counters: 27 File System Counters FILE: Number of bytes read=0 FILE: Number of bytes written=271866 FILE: Number of read operations=0 FILE: Number of large read operations=0 FILE: Number of write operations=0 HDFS: Number of bytes read=295 HDFS: Number of bytes written=44 HDFS: Number of read operations=12 HDFS: Number of large read operations=0 HDFS: Number of write operations=6 Job Counters Launched map tasks=3 Other local map tasks=3 Total time spent by all maps in occupied slots (ms)=43032 Total time spent by all reduces in occupied slots (ms)=0 Map-Reduce Framework Map input records=3 Map output records=3 Input split bytes=295 Spilled Records=0 Failed Shuffles=0 Merged Map outputs=0 GC time elapsed (ms)=590 CPU time spent (ms)=6330 Physical memory (bytes) snapshot=440934400 Virtual memory (bytes) snapshot=3882573824 Total committed heap usage (bytes)=160563200 File Input Format Counters Bytes Read=0 File Output Format Counters Bytes Written=44 14/04/09 09:59:19 INFO mapreduce.ImportJobBase: Transferred 44 bytes in 34.454 seconds (1.2771 bytes/sec) 14/04/09 09:59:19 INFO mapreduce.ImportJobBase: Retrieved 3 records.

$ hdfs dfs -ls /user/hadoop/demo_blog Found 4 items -rw-r--r-- 3 hadoop supergroup 0 2014-04-09 09:59 /user/hadoop/demo_blog/_SUCCESS -rw-r--r-- 3 hadoop supergroup 13 2014-04-09 09:59 /user/hadoop/demo_blog/part-m-00000 -rw-r--r-- 3 hadoop supergroup 13 2014-04-09 09:59 /user/hadoop/demo_blog/part-m-00001 -rw-r--r-- 3 hadoop supergroup 18 2014-04-09 09:59 /user/hadoop/demo_blog/part-m-00002 [hadoop@Master ~]$ hdfs dfs -cat /user/hadoop/demo_blog/part-m-0000* 1,micmiu.com 2,ctosun.com 3,baby.micmiu.com

/user/username/tablename/(files),比如我的当前用户是hadoop,那么实际路径即:?/user/hadoop/demo_blog/(files)。

如果要自定义路径需要增加参数:--warehouse-dir 比如:

sqoop import --connect jdbc:mysql://Master.Hadoop/test --username root --password micmiu --table demo_blog --warehouse-dir /user/micmiu/sqoop

sqoop import --connect jdbc:mysql://192.168.6.77/test --username root --password micmiu --table demo_log --warehouse-dir /user/micmiu/sqoop --split-by operator

--split-by xxx ?或者 -m 1

执行过程:

$ sqoop import --connect jdbc:mysql://192.168.6.77/test --username root --password micmiu --table demo_log --warehouse-dir /user/micmiu/sqoop --split-by operator Warning: /usr/lib/hcatalog does not exist! HCatalog jobs will fail. Please set $HCAT_HOME to the root of your HCatalog installation. 14/04/09 15:02:06 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead. 14/04/09 15:02:06 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset. 14/04/09 15:02:06 INFO tool.CodeGenTool: Beginning code generation 14/04/09 15:02:06 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `demo_log` AS t LIMIT 1 14/04/09 15:02:06 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `demo_log` AS t LIMIT 1 14/04/09 15:02:06 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /usr/local/hadoop Note: /tmp/sqoop-hadoop/compile/dddc1bcdba30515f95a2d604f22e4fe9/demo_log.java uses or overrides a deprecated API. Note: Recompile with -Xlint:deprecation for details. 14/04/09 15:02:07 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-hadoop/compile/dddc1bcdba30515f95a2d604f22e4fe9/demo_log.jar 14/04/09 15:02:07 WARN manager.MySQLManager: It looks like you are importing from mysql. 14/04/09 15:02:07 WARN manager.MySQLManager: This transfer can be faster! Use the --direct 14/04/09 15:02:07 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path. 14/04/09 15:02:07 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql) 14/04/09 15:02:07 INFO mapreduce.ImportJobBase: Beginning import of demo_log SLF4J: Class path contains multiple SLF4J bindings. SLF4J: Found binding in [jar:file:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/usr/local/hbase-0.98.0-hadoop2/lib/slf4j-log4j12-1.6.4.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation. SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory] 14/04/09 15:02:07 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar 14/04/09 15:02:08 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps 14/04/09 15:02:08 INFO client.RMProxy: Connecting to ResourceManager at Master.Hadoop/192.168.6.77:8032 14/04/09 15:02:10 INFO db.DataDrivenDBInputFormat: BoundingValsQuery: SELECT MIN(`operator`), MAX(`operator`) FROM `demo_log` 14/04/09 15:02:10 WARN db.TextSplitter: Generating splits for a textual index column. 14/04/09 15:02:10 WARN db.TextSplitter: If your database sorts in a case-insensitive order, this may result in a partial import or duplicate records. 14/04/09 15:02:10 WARN db.TextSplitter: You are strongly encouraged to choose an integral split column. 14/04/09 15:02:10 INFO mapreduce.JobSubmitter: number of splits:4 14/04/09 15:02:10 INFO Configuration.deprecation: mapred.job.classpath.files is deprecated. Instead, use mapreduce.job.classpath.files 14/04/09 15:02:10 INFO Configuration.deprecation: user.name is deprecated. Instead, use mapreduce.job.user.name 14/04/09 15:02:10 INFO Configuration.deprecation: mapred.cache.files.filesizes is deprecated. Instead, use mapreduce.job.cache.files.filesizes 14/04/09 15:02:10 INFO Configuration.deprecation: mapred.cache.files is deprecated. Instead, use mapreduce.job.cache.files 14/04/09 15:02:10 INFO Configuration.deprecation: mapred.reduce.tasks is deprecated. Instead, use mapreduce.job.reduces 14/04/09 15:02:10 INFO Configuration.deprecation: mapred.output.value.class is deprecated. Instead, use mapreduce.job.output.value.class 14/04/09 15:02:10 INFO Configuration.deprecation: mapreduce.map.class is deprecated. Instead, use mapreduce.job.map.class 14/04/09 15:02:10 INFO Configuration.deprecation: mapred.job.name is deprecated. Instead, use mapreduce.job.name 14/04/09 15:02:10 INFO Configuration.deprecation: mapreduce.inputformat.class is deprecated. Instead, use mapreduce.job.inputformat.class 14/04/09 15:02:10 INFO Configuration.deprecation: mapred.output.dir is deprecated. Instead, use mapreduce.output.fileoutputformat.outputdir 14/04/09 15:02:10 INFO Configuration.deprecation: mapreduce.outputformat.class is deprecated. Instead, use mapreduce.job.outputformat.class 14/04/09 15:02:10 INFO Configuration.deprecation: mapred.cache.files.timestamps is deprecated. Instead, use mapreduce.job.cache.files.timestamps 14/04/09 15:02:10 INFO Configuration.deprecation: mapred.output.key.class is deprecated. Instead, use mapreduce.job.output.key.class 14/04/09 15:02:10 INFO Configuration.deprecation: mapred.working.dir is deprecated. Instead, use mapreduce.job.working.dir 14/04/09 15:02:10 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1396936838233_0003 14/04/09 15:02:10 INFO impl.YarnClientImpl: Submitted application application_1396936838233_0003 to ResourceManager at Master.Hadoop/192.168.6.77:8032 14/04/09 15:02:10 INFO mapreduce.Job: The url to track the job: http://Master.Hadoop:8088/proxy/application_1396936838233_0003/ 14/04/09 15:02:10 INFO mapreduce.Job: Running job: job_1396936838233_0003 14/04/09 15:02:17 INFO mapreduce.Job: Job job_1396936838233_0003 running in uber mode : false 14/04/09 15:02:17 INFO mapreduce.Job: map 0% reduce 0% 14/04/09 15:02:28 INFO mapreduce.Job: map 25% reduce 0% 14/04/09 15:02:30 INFO mapreduce.Job: map 50% reduce 0% 14/04/09 15:02:33 INFO mapreduce.Job: map 100% reduce 0% 14/04/09 15:02:33 INFO mapreduce.Job: Job job_1396936838233_0003 completed successfully 14/04/09 15:02:33 INFO mapreduce.Job: Counters: 27 File System Counters FILE: Number of bytes read=0 FILE: Number of bytes written=362536 FILE: Number of read operations=0 FILE: Number of large read operations=0 FILE: Number of write operations=0 HDFS: Number of bytes read=516 HDFS: Number of bytes written=56 HDFS: Number of read operations=16 HDFS: Number of large read operations=0 HDFS: Number of write operations=8 Job Counters Launched map tasks=4 Other local map tasks=4 Total time spent by all maps in occupied slots (ms)=44481 Total time spent by all reduces in occupied slots (ms)=0 Map-Reduce Framework Map input records=4 Map output records=4 Input split bytes=516 Spilled Records=0 Failed Shuffles=0 Merged Map outputs=0 GC time elapsed (ms)=429 CPU time spent (ms)=6650 Physical memory (bytes) snapshot=587669504 Virtual memory (bytes) snapshot=5219356672 Total committed heap usage (bytes)=205848576 File Input Format Counters Bytes Read=0 File Output Format Counters Bytes Written=56 14/04/09 15:02:33 INFO mapreduce.ImportJobBase: Transferred 56 bytes in 25.2746 seconds (2.2157 bytes/sec) 14/04/09 15:02:33 INFO mapreduce.ImportJobBase: Retrieved 4 records.

$ hdfs dfs -ls /user/micmiu/sqoop/demo_log Found 5 items -rw-r--r-- 3 hadoop supergroup 0 2014-04-09 15:02 /user/micmiu/sqoop/demo_log/_SUCCESS -rw-r--r-- 3 hadoop supergroup 28 2014-04-09 15:02 /user/micmiu/sqoop/demo_log/part-m-00000 -rw-r--r-- 3 hadoop supergroup 0 2014-04-09 15:02 /user/micmiu/sqoop/demo_log/part-m-00001 -rw-r--r-- 3 hadoop supergroup 0 2014-04-09 15:02 /user/micmiu/sqoop/demo_log/part-m-00002 -rw-r--r-- 3 hadoop supergroup 28 2014-04-09 15:02 /user/micmiu/sqoop/demo_log/part-m-00003 $ hdfs dfs -cat /user/micmiu/sqoop/demo_log/part-m-0000* michael,edit michael,delete micmiu,create micmiu,update

sqoop import --connect jdbc:mysql://192.168.6.77/test --username root --password micmiu --table demo_blog --warehouse-dir /user/sqoop --hive-import --create-hive-table

$ sqoop import --connect jdbc:mysql://192.168.6.77/test --username root --password micmiu --table demo_blog --warehouse-dir /user/sqoop --hive-import --create-hive-table Warning: /usr/lib/hcatalog does not exist! HCatalog jobs will fail. Please set $HCAT_HOME to the root of your HCatalog installation. 14/04/09 10:44:21 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead. 14/04/09 10:44:21 INFO tool.BaseSqoopTool: Using Hive-specific delimiters for output. You can override 14/04/09 10:44:21 INFO tool.BaseSqoopTool: delimiters with --fields-terminated-by, etc. 14/04/09 10:44:21 WARN tool.BaseSqoopTool: It seems that you've specified at least one of following: 14/04/09 10:44:21 WARN tool.BaseSqoopTool: --hive-home 14/04/09 10:44:21 WARN tool.BaseSqoopTool: --hive-overwrite 14/04/09 10:44:21 WARN tool.BaseSqoopTool: --create-hive-table 14/04/09 10:44:21 WARN tool.BaseSqoopTool: --hive-table 14/04/09 10:44:21 WARN tool.BaseSqoopTool: --hive-partition-key 14/04/09 10:44:21 WARN tool.BaseSqoopTool: --hive-partition-value 14/04/09 10:44:21 WARN tool.BaseSqoopTool: --map-column-hive 14/04/09 10:44:21 WARN tool.BaseSqoopTool: Without specifying parameter --hive-import. Please note that 14/04/09 10:44:21 WARN tool.BaseSqoopTool: those arguments will not be used in this session. Either 14/04/09 10:44:21 WARN tool.BaseSqoopTool: specify --hive-import to apply them correctly or remove them 14/04/09 10:44:21 WARN tool.BaseSqoopTool: from command line to remove this warning. 14/04/09 10:44:21 INFO tool.BaseSqoopTool: Please note that --hive-home, --hive-partition-key, 14/04/09 10:44:21 INFO tool.BaseSqoopTool: hive-partition-value and --map-column-hive options are 14/04/09 10:44:21 INFO tool.BaseSqoopTool: are also valid for HCatalog imports and exports 14/04/09 10:44:21 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset. 14/04/09 10:44:21 INFO tool.CodeGenTool: Beginning code generation 14/04/09 10:44:21 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `demo_blog` AS t LIMIT 1 14/04/09 10:44:21 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `demo_blog` AS t LIMIT 1 14/04/09 10:44:21 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /usr/local/hadoop Note: /tmp/sqoop-hadoop/compile/c071f02ecad006293202fd2c2fad0dce/demo_blog.java uses or overrides a deprecated API. Note: Recompile with -Xlint:deprecation for details. 14/04/09 10:44:22 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-hadoop/compile/c071f02ecad006293202fd2c2fad0dce/demo_blog.jar 14/04/09 10:44:22 WARN manager.MySQLManager: It looks like you are importing from mysql. 14/04/09 10:44:22 WARN manager.MySQLManager: This transfer can be faster! Use the --direct 14/04/09 10:44:22 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path. 14/04/09 10:44:22 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql) 14/04/09 10:44:22 INFO mapreduce.ImportJobBase: Beginning import of demo_blog SLF4J: Class path contains multiple SLF4J bindings. SLF4J: Found binding in [jar:file:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/usr/local/hbase-0.98.0-hadoop2/lib/slf4j-log4j12-1.6.4.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation. SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory] 14/04/09 10:44:22 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar 14/04/09 10:44:23 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps 14/04/09 10:44:23 INFO client.RMProxy: Connecting to ResourceManager at Master.Hadoop/192.168.6.77:8032 14/04/09 10:44:25 INFO db.DataDrivenDBInputFormat: BoundingValsQuery: SELECT MIN(`id`), MAX(`id`) FROM `demo_blog` 14/04/09 10:44:25 INFO mapreduce.JobSubmitter: number of splits:3 14/04/09 10:44:25 INFO Configuration.deprecation: mapred.job.classpath.files is deprecated. Instead, use mapreduce.job.classpath.files 14/04/09 10:44:25 INFO Configuration.deprecation: user.name is deprecated. Instead, use mapreduce.job.user.name 14/04/09 10:44:25 INFO Configuration.deprecation: mapred.cache.files.filesizes is deprecated. Instead, use mapreduce.job.cache.files.filesizes 14/04/09 10:44:25 INFO Configuration.deprecation: mapred.cache.files is deprecated. Instead, use mapreduce.job.cache.files 14/04/09 10:44:25 INFO Configuration.deprecation: mapred.reduce.tasks is deprecated. Instead, use mapreduce.job.reduces 14/04/09 10:44:25 INFO Configuration.deprecation: mapred.output.value.class is deprecated. Instead, use mapreduce.job.output.value.class 14/04/09 10:44:25 INFO Configuration.deprecation: mapreduce.map.class is deprecated. Instead, use mapreduce.job.map.class 14/04/09 10:44:25 INFO Configuration.deprecation: mapred.job.name is deprecated. Instead, use mapreduce.job.name 14/04/09 10:44:25 INFO Configuration.deprecation: mapreduce.inputformat.class is deprecated. Instead, use mapreduce.job.inputformat.class 14/04/09 10:44:25 INFO Configuration.deprecation: mapred.output.dir is deprecated. Instead, use mapreduce.output.fileoutputformat.outputdir 14/04/09 10:44:25 INFO Configuration.deprecation: mapreduce.outputformat.class is deprecated. Instead, use mapreduce.job.outputformat.class 14/04/09 10:44:25 INFO Configuration.deprecation: mapred.cache.files.timestamps is deprecated. Instead, use mapreduce.job.cache.files.timestamps 14/04/09 10:44:25 INFO Configuration.deprecation: mapred.output.key.class is deprecated. Instead, use mapreduce.job.output.key.class 14/04/09 10:44:25 INFO Configuration.deprecation: mapred.working.dir is deprecated. Instead, use mapreduce.job.working.dir 14/04/09 10:44:25 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1396936838233_0002 14/04/09 10:44:25 INFO impl.YarnClientImpl: Submitted application application_1396936838233_0002 to ResourceManager at Master.Hadoop/192.168.6.77:8032 14/04/09 10:44:25 INFO mapreduce.Job: The url to track the job: http://Master.Hadoop:8088/proxy/application_1396936838233_0002/ 14/04/09 10:44:25 INFO mapreduce.Job: Running job: job_1396936838233_0002 14/04/09 10:44:33 INFO mapreduce.Job: Job job_1396936838233_0002 running in uber mode : false 14/04/09 10:44:33 INFO mapreduce.Job: map 0% reduce 0% 14/04/09 10:44:46 INFO mapreduce.Job: map 67% reduce 0% 14/04/09 10:44:48 INFO mapreduce.Job: map 100% reduce 0% 14/04/09 10:44:49 INFO mapreduce.Job: Job job_1396936838233_0002 completed successfully 14/04/09 10:44:49 INFO mapreduce.Job: Counters: 27 File System Counters FILE: Number of bytes read=0 FILE: Number of bytes written=271860 FILE: Number of read operations=0 FILE: Number of large read operations=0 FILE: Number of write operations=0 HDFS: Number of bytes read=295 HDFS: Number of bytes written=44 HDFS: Number of read operations=12 HDFS: Number of large read operations=0 HDFS: Number of write operations=6 Job Counters Launched map tasks=3 Other local map tasks=3 Total time spent by all maps in occupied slots (ms)=34047 Total time spent by all reduces in occupied slots (ms)=0 Map-Reduce Framework Map input records=3 Map output records=3 Input split bytes=295 Spilled Records=0 Failed Shuffles=0 Merged Map outputs=0 GC time elapsed (ms)=505 CPU time spent (ms)=5350 Physical memory (bytes) snapshot=427388928 Virtual memory (bytes) snapshot=3881439232 Total committed heap usage (bytes)=171638784 File Input Format Counters Bytes Read=0 File Output Format Counters Bytes Written=44 14/04/09 10:44:49 INFO mapreduce.ImportJobBase: Transferred 44 bytes in 26.0401 seconds (1.6897 bytes/sec) 14/04/09 10:44:49 INFO mapreduce.ImportJobBase: Retrieved 3 records. 14/04/09 10:44:49 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `demo_blog` AS t LIMIT 1 14/04/09 10:44:49 INFO hive.HiveImport: Loading uploaded data into Hive 14/04/09 10:44:52 INFO hive.HiveImport: 14/04/09 10:44:52 INFO Configuration.deprecation: mapred.reduce.tasks is deprecated. Instead, use mapreduce.job.reduces 14/04/09 10:44:52 INFO hive.HiveImport: 14/04/09 10:44:52 INFO Configuration.deprecation: mapred.min.split.size is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize 14/04/09 10:44:52 INFO hive.HiveImport: 14/04/09 10:44:52 INFO Configuration.deprecation: mapred.reduce.tasks.speculative.execution is deprecated. Instead, use mapreduce.reduce.speculative 14/04/09 10:44:52 INFO hive.HiveImport: 14/04/09 10:44:52 INFO Configuration.deprecation: mapred.min.split.size.per.node is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize.per.node 14/04/09 10:44:52 INFO hive.HiveImport: 14/04/09 10:44:52 INFO Configuration.deprecation: mapred.input.dir.recursive is deprecated. Instead, use mapreduce.input.fileinputformat.input.dir.recursive 14/04/09 10:44:52 INFO hive.HiveImport: 14/04/09 10:44:52 INFO Configuration.deprecation: mapred.min.split.size.per.rack is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize.per.rack 14/04/09 10:44:52 INFO hive.HiveImport: 14/04/09 10:44:52 INFO Configuration.deprecation: mapred.max.split.size is deprecated. Instead, use mapreduce.input.fileinputformat.split.maxsize 14/04/09 10:44:52 INFO hive.HiveImport: 14/04/09 10:44:52 INFO Configuration.deprecation: mapred.committer.job.setup.cleanup.needed is deprecated. Instead, use mapreduce.job.committer.setup.cleanup.needed 14/04/09 10:44:53 INFO hive.HiveImport: 14/04/09 10:44:53 WARN conf.HiveConf: DEPRECATED: hive.metastore.ds.retry.* no longer has any effect. Use hive.hmshandler.retry.* instead 14/04/09 10:44:53 INFO hive.HiveImport: 14/04/09 10:44:53 INFO hive.HiveImport: Logging initialized using configuration in file:/usr/local/hive-0.13.0-bin/conf/hive-log4j.properties 14/04/09 10:44:53 INFO hive.HiveImport: SLF4J: Class path contains multiple SLF4J bindings. 14/04/09 10:44:53 INFO hive.HiveImport: SLF4J: Found binding in [jar:file:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class] 14/04/09 10:44:53 INFO hive.HiveImport: SLF4J: Found binding in [jar:file:/usr/local/hbase-0.98.0-hadoop2/lib/slf4j-log4j12-1.6.4.jar!/org/slf4j/impl/StaticLoggerBinder.class] 14/04/09 10:44:53 INFO hive.HiveImport: SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation. 14/04/09 10:44:53 INFO hive.HiveImport: SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory] 14/04/09 10:44:57 INFO hive.HiveImport: OK 14/04/09 10:44:57 INFO hive.HiveImport: Time taken: 0.773 seconds 14/04/09 10:44:57 INFO hive.HiveImport: Loading data to table default.demo_blog 14/04/09 10:44:57 INFO hive.HiveImport: Table default.demo_blog stats: [numFiles=4, numRows=0, totalSize=44, rawDataSize=0] 14/04/09 10:44:57 INFO hive.HiveImport: OK 14/04/09 10:44:57 INFO hive.HiveImport: Time taken: 0.25 seconds 14/04/09 10:44:57 INFO hive.HiveImport: Hive import complete. 14/04/09 10:44:57 INFO hive.HiveImport: Export directory is empty, removing it

hive> show tables; OK demo_blog hbase_table_1 hbase_table_2 hbase_table_3 micmiu_blog micmiu_hx_master pokes xflow_dstip Time taken: 0.073 seconds, Fetched: 8 row(s) hive> select * from demo_blog; OK 1 micmiu.com 2 ctosun.com 3 baby.micmiu.com Time taken: 0.506 seconds, Fetched: 3 row(s)

sqoop import --connect jdbc:mysql://192.168.6.77/test --username root --password micmiu --table demo_blog --hbase-table demo_sqoop2hbase --hbase-create-table --hbase-row-key id --column-family url

$ sqoop import --connect jdbc:mysql://192.168.6.77/test --username root --password micmiu --table demo_blog --hbase-table demo_sqoop2hbase --hbase-create-table --hbase-row-key id --column-family url Warning: /usr/lib/hcatalog does not exist! HCatalog jobs will fail. Please set $HCAT_HOME to the root of your HCatalog installation. 14/04/09 16:23:38 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead. 14/04/09 16:23:38 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset. 14/04/09 16:23:38 INFO tool.CodeGenTool: Beginning code generation 14/04/09 16:23:39 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `demo_blog` AS t LIMIT 1 14/04/09 16:23:39 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `demo_blog` AS t LIMIT 1 14/04/09 16:23:39 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /usr/local/hadoop Note: /tmp/sqoop-hadoop/compile/85408c854ee8fba75bbb2458e5e25093/demo_blog.java uses or overrides a deprecated API. Note: Recompile with -Xlint:deprecation for details. 14/04/09 16:23:40 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-hadoop/compile/85408c854ee8fba75bbb2458e5e25093/demo_blog.jar 14/04/09 16:23:40 WARN manager.MySQLManager: It looks like you are importing from mysql. 14/04/09 16:23:40 WARN manager.MySQLManager: This transfer can be faster! Use the --direct 14/04/09 16:23:40 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path. 14/04/09 16:23:40 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql) 14/04/09 16:23:40 INFO mapreduce.ImportJobBase: Beginning import of demo_blog SLF4J: Class path contains multiple SLF4J bindings. SLF4J: Found binding in [jar:file:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/usr/local/hbase-0.98.0-hadoop2/lib/slf4j-log4j12-1.6.4.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation. SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory] 14/04/09 16:23:40 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar 14/04/09 16:23:40 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:zookeeper.version=3.4.5-1392090, built on 09/30/2012 17:52 GMT 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:host.name=Master.Hadoop 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:java.version=1.6.0_20 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:java.vendor=Sun Microsystems Inc. 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:java.home=/java/jdk1.6.0_20/jre 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:java.class.path=/usr/local/hadoop/etc/hadoop: ....... 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:java.library.path=/usr/local/hadoop/lib/native 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:java.io.tmpdir=/tmp 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:java.compiler= 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:os.name=Linux 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:os.arch=amd64 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:os.version=2.6.32-71.el6.x86_64 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:user.name=hadoop 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:user.home=/home/hadoop 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:user.dir=/home/hadoop 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Initiating client connection, connectString=Slave6.Hadoop:2181,Slave5.Hadoop:2181,Slave7.Hadoop:2181 sessionTimeout=90000 watcher=hconnection-0x57c8b24d, quorum=Slave6.Hadoop:2181,Slave5.Hadoop:2181,Slave7.Hadoop:2181, baseZNode=/hbase 14/04/09 16:23:41 INFO zookeeper.ClientCnxn: Opening socket connection to server Slave5.Hadoop/192.168.8.205:2181. Will not attempt to authenticate using SASL (Unable to locate a login configuration) 14/04/09 16:23:41 INFO zookeeper.RecoverableZooKeeper: Process identifier=hconnection-0x57c8b24d connecting to ZooKeeper ensemble=Slave6.Hadoop:2181,Slave5.Hadoop:2181,Slave7.Hadoop:2181 14/04/09 16:23:41 INFO zookeeper.ClientCnxn: Socket connection established to Slave5.Hadoop/192.168.8.205:2181, initiating session 14/04/09 16:23:41 INFO zookeeper.ClientCnxn: Session establishment complete on server Slave5.Hadoop/192.168.8.205:2181, sessionid = 0x453fecb6c50009, negotiated timeout = 90000 14/04/09 16:23:41 INFO Configuration.deprecation: hadoop.native.lib is deprecated. Instead, use io.native.lib.available 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Initiating client connection, connectString=Slave6.Hadoop:2181,Slave5.Hadoop:2181,Slave7.Hadoop:2181 sessionTimeout=90000 watcher=catalogtracker-on-hconnection-0x57c8b24d, quorum=Slave6.Hadoop:2181,Slave5.Hadoop:2181,Slave7.Hadoop:2181, baseZNode=/hbase 14/04/09 16:23:41 INFO zookeeper.ClientCnxn: Opening socket connection to server Slave7.Hadoop/192.168.8.207:2181. Will not attempt to authenticate using SASL (Unable to locate a login configuration) 14/04/09 16:23:41 INFO zookeeper.RecoverableZooKeeper: Process identifier=catalogtracker-on-hconnection-0x57c8b24d connecting to ZooKeeper ensemble=Slave6.Hadoop:2181,Slave5.Hadoop:2181,Slave7.Hadoop:2181 14/04/09 16:23:41 INFO zookeeper.ClientCnxn: Socket connection established to Slave7.Hadoop/192.168.8.207:2181, initiating session 14/04/09 16:23:41 INFO zookeeper.ClientCnxn: Session establishment complete on server Slave7.Hadoop/192.168.8.207:2181, sessionid = 0x2453fecb6f50008, negotiated timeout = 90000 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Session: 0x2453fecb6f50008 closed 14/04/09 16:23:41 INFO zookeeper.ClientCnxn: EventThread shut down 14/04/09 16:23:41 INFO mapreduce.HBaseImportJob: Creating missing HBase table demo_sqoop2hbase 14/04/09 16:23:42 INFO zookeeper.ZooKeeper: Initiating client connection, connectString=Slave6.Hadoop:2181,Slave5.Hadoop:2181,Slave7.Hadoop:2181 sessionTimeout=90000 watcher=catalogtracker-on-hconnection-0x57c8b24d, quorum=Slave6.Hadoop:2181,Slave5.Hadoop:2181,Slave7.Hadoop:2181, baseZNode=/hbase 14/04/09 16:23:42 INFO zookeeper.RecoverableZooKeeper: Process identifier=catalogtracker-on-hconnection-0x57c8b24d connecting to ZooKeeper ensemble=Slave6.Hadoop:2181,Slave5.Hadoop:2181,Slave7.Hadoop:2181 14/04/09 16:23:42 INFO zookeeper.ClientCnxn: Opening socket connection to server Slave7.Hadoop/192.168.8.207:2181. Will not attempt to authenticate using SASL (Unable to locate a login configuration) 14/04/09 16:23:42 INFO zookeeper.ClientCnxn: Socket connection established to Slave7.Hadoop/192.168.8.207:2181, initiating session 14/04/09 16:23:42 INFO zookeeper.ClientCnxn: Session establishment complete on server Slave7.Hadoop/192.168.8.207:2181, sessionid = 0x2453fecb6f50009, negotiated timeout = 90000 14/04/09 16:23:42 INFO zookeeper.ZooKeeper: Session: 0x2453fecb6f50009 closed 14/04/09 16:23:42 INFO zookeeper.ClientCnxn: EventThread shut down 14/04/09 16:23:42 INFO client.RMProxy: Connecting to ResourceManager at Master.Hadoop/192.168.6.77:8032 14/04/09 16:23:47 INFO db.DataDrivenDBInputFormat: BoundingValsQuery: SELECT MIN(`id`), MAX(`id`) FROM `demo_blog` 14/04/09 16:23:47 INFO mapreduce.JobSubmitter: number of splits:3 14/04/09 16:23:47 INFO Configuration.deprecation: mapred.job.classpath.files is deprecated. Instead, use mapreduce.job.classpath.files 14/04/09 16:23:47 INFO Configuration.deprecation: user.name is deprecated. Instead, use mapreduce.job.user.name 14/04/09 16:23:47 INFO Configuration.deprecation: mapred.cache.files.filesizes is deprecated. Instead, use mapreduce.job.cache.files.filesizes 14/04/09 16:23:47 INFO Configuration.deprecation: mapred.cache.files is deprecated. Instead, use mapreduce.job.cache.files 14/04/09 16:23:47 INFO Configuration.deprecation: mapred.reduce.tasks is deprecated. Instead, use mapreduce.job.reduces 14/04/09 16:23:47 INFO Configuration.deprecation: mapred.output.value.class is deprecated. Instead, use mapreduce.job.output.value.class 14/04/09 16:23:47 INFO Configuration.deprecation: mapreduce.map.class is deprecated. Instead, use mapreduce.job.map.class 14/04/09 16:23:47 INFO Configuration.deprecation: mapred.job.name is deprecated. Instead, use mapreduce.job.name 14/04/09 16:23:47 INFO Configuration.deprecation: mapreduce.inputformat.class is deprecated. Instead, use mapreduce.job.inputformat.class 14/04/09 16:23:47 INFO Configuration.deprecation: mapreduce.outputformat.class is deprecated. Instead, use mapreduce.job.outputformat.class 14/04/09 16:23:47 INFO Configuration.deprecation: mapred.cache.files.timestamps is deprecated. Instead, use mapreduce.job.cache.files.timestamps 14/04/09 16:23:47 INFO Configuration.deprecation: mapred.output.key.class is deprecated. Instead, use mapreduce.job.output.key.class 14/04/09 16:23:47 INFO Configuration.deprecation: mapred.working.dir is deprecated. Instead, use mapreduce.job.working.dir 14/04/09 16:23:47 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1396936838233_0005 14/04/09 16:23:47 INFO impl.YarnClientImpl: Submitted application application_1396936838233_0005 to ResourceManager at Master.Hadoop/192.168.6.77:8032 14/04/09 16:23:47 INFO mapreduce.Job: The url to track the job: http://Master.Hadoop:8088/proxy/application_1396936838233_0005/ 14/04/09 16:23:47 INFO mapreduce.Job: Running job: job_1396936838233_0005 14/04/09 16:23:55 INFO mapreduce.Job: Job job_1396936838233_0005 running in uber mode : false 14/04/09 16:23:55 INFO mapreduce.Job: map 0% reduce 0% 14/04/09 16:24:05 INFO mapreduce.Job: map 33% reduce 0% 14/04/09 16:24:12 INFO mapreduce.Job: map 100% reduce 0% 14/04/09 16:24:12 INFO mapreduce.Job: Job job_1396936838233_0005 completed successfully 14/04/09 16:24:12 INFO mapreduce.Job: Counters: 27 File System Counters FILE: Number of bytes read=0 FILE: Number of bytes written=354636 FILE: Number of read operations=0 FILE: Number of large read operations=0 FILE: Number of write operations=0 HDFS: Number of bytes read=295 HDFS: Number of bytes written=0 HDFS: Number of read operations=3 HDFS: Number of large read operations=0 HDFS: Number of write operations=0 Job Counters Launched map tasks=3 Other local map tasks=3 Total time spent by all maps in occupied slots (ms)=35297 Total time spent by all reduces in occupied slots (ms)=0 Map-Reduce Framework Map input records=3 Map output records=3 Input split bytes=295 Spilled Records=0 Failed Shuffles=0 Merged Map outputs=0 GC time elapsed (ms)=381 CPU time spent (ms)=11050 Physical memory (bytes) snapshot=543367168 Virtual memory (bytes) snapshot=3918925824 Total committed heap usage (bytes)=156958720 File Input Format Counters Bytes Read=0 File Output Format Counters Bytes Written=0 14/04/09 16:24:12 INFO mapreduce.ImportJobBase: Transferred 0 bytes in 29.7126 seconds (0 bytes/sec) 14/04/09 16:24:12 INFO mapreduce.ImportJobBase: Retrieved 3 records.

hbase(main):009:0> list

TABLE

demo_sqoop2hbase

table_02

table_03

test_table

xyz

5 row(s) in 0.0310 seconds

=> ["demo_sqoop2hbase", "table_02", "table_03", "test_table", "xyz"]

hbase(main):010:0> scan "demo_sqoop2hbase"

ROW COLUMN+CELL

1 column=url:blog, timestamp=1397031850700, value=micmiu.com

2 column=url:blog, timestamp=1397031844106, value=ctosun.com

3 column=url:blog, timestamp=1397031849888, value=baby.micmiu.com

3 row(s) in 0.0730 seconds

hbase(main):011:0> describe "demo_sqoop2hbase"

DESCRIPTION ENABLED

'demo_sqoop2hbase', {NAME => 'url', DATA_BLOCK_ENCODING => 'NONE', BL true

OOMFILTER => 'ROW', REPLICATION_SCOPE => '0', VERSIONS => '1', COMPRE

SSION => 'NONE', MIN_VERSIONS => '0', TTL => '2147483647', KEEP_DELET

ED_CELLS => 'false', BLOCKSIZE => '65536', IN_MEMORY => 'false', BLOC

KCACHE => 'true'}

1 row(s) in 0.0580 seconds

hbase(main):012:0>- http://sqoop.apache.org/docs/1.4.4/SqoopUserGuide.html

原文地址:Sqoop安装配置及演示, 感谢原作者分享。

熱AI工具

Undresser.AI Undress

人工智慧驅動的應用程序,用於創建逼真的裸體照片

AI Clothes Remover

用於從照片中去除衣服的線上人工智慧工具。

Undress AI Tool

免費脫衣圖片

Clothoff.io

AI脫衣器

Video Face Swap

使用我們完全免費的人工智慧換臉工具,輕鬆在任何影片中換臉!

熱門文章

熱工具

記事本++7.3.1

好用且免費的程式碼編輯器

SublimeText3漢化版

中文版,非常好用

禪工作室 13.0.1

強大的PHP整合開發環境

Dreamweaver CS6

視覺化網頁開發工具

SublimeText3 Mac版

神級程式碼編輯軟體(SublimeText3)

Win11系統無法安裝中文語言套件的解決方法

Mar 09, 2024 am 09:48 AM

Win11系統無法安裝中文語言套件的解決方法

Mar 09, 2024 am 09:48 AM

Win11系統無法安裝中文語言包的解決方法隨著Windows11系統的推出,許多用戶開始升級他們的作業系統以體驗新的功能和介面。然而,一些用戶在升級後發現他們無法安裝中文語言包,這給他們的使用體驗帶來了困擾。在本文中,我們將探討Win11系統無法安裝中文語言套件的原因,並提供一些解決方法,幫助使用者解決這個問題。原因分析首先,讓我們來分析一下Win11系統無法

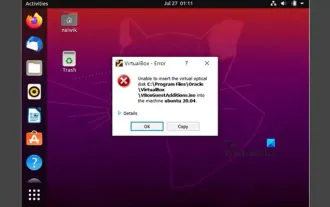

無法在VirtualBox中安裝來賓添加

Mar 10, 2024 am 09:34 AM

無法在VirtualBox中安裝來賓添加

Mar 10, 2024 am 09:34 AM

您可能無法在OracleVirtualBox中將來賓新增安裝到虛擬機器。當我們點擊Devices>;InstallGuestAdditionsCDImage時,它只會拋出一個錯誤,如下所示:VirtualBox-錯誤:無法插入虛擬光碟C:將FilesOracleVirtualBoxVBoxGuestAdditions.iso編程到ubuntu機器中在這篇文章中,我們將了解當您無法在VirtualBox中安裝來賓新增元件時該怎麼辦。無法在VirtualBox中安裝來賓添加如果您無法在Virtua

百度網盤下載成功但是安裝不了怎麼辦?

Mar 13, 2024 pm 10:22 PM

百度網盤下載成功但是安裝不了怎麼辦?

Mar 13, 2024 pm 10:22 PM

如果你已經成功下載了百度網盤的安裝文件,但是無法正常安裝,可能是軟體文件的完整性發生了錯誤或者是殘留文件和註冊表項的問題,下面就讓本站來為用戶們來仔細的介紹一下百度網盤下載成功但是安裝不了問題解析吧。 百度網盤下載成功但是安裝不了問題解析 1、檢查安裝檔完整性:確保下載的安裝檔完整且沒有損壞。你可以重新下載一次,或者嘗試使用其他可信任的來源下載安裝檔。 2、關閉防毒軟體和防火牆:某些防毒軟體或防火牆程式可能會阻止安裝程式的正常運作。嘗試將防毒軟體和防火牆停用或退出,然後重新執行安裝

了解Linux Bashrc:功能、設定與使用方法

Mar 20, 2024 pm 03:30 PM

了解Linux Bashrc:功能、設定與使用方法

Mar 20, 2024 pm 03:30 PM

了解LinuxBashrc:功能、配置與使用方法在Linux系統中,Bashrc(BourneAgainShellruncommands)是一個非常重要的配置文件,其中包含了系統啟動時自動運行的各種命令和設定。 Bashrc文件通常位於使用者的家目錄下,是一個隱藏文件,它的作用是為使用者自訂設定Bashshell的環境。一、Bashrc的功能設定環境

如何在Linux上安裝安卓應用程式?

Mar 19, 2024 am 11:15 AM

如何在Linux上安裝安卓應用程式?

Mar 19, 2024 am 11:15 AM

在Linux上安裝安卓應用程式一直是許多用戶所關心的問題,尤其是對於喜歡使用安卓應用程式的Linux用戶來說,掌握如何在Linux系統上安裝安卓應用程式是非常重要的。雖然在Linux系統上直接運行安卓應用程式並不像在Android平台上那麼簡單,但是透過使用模擬器或第三方工具,我們依然可以在Linux上愉快地享受安卓應用程式的樂趣。以下將為大家介紹在Linux系統上安裝安卓應

如何在Ubuntu 24.04上安裝Podman

Mar 22, 2024 am 11:26 AM

如何在Ubuntu 24.04上安裝Podman

Mar 22, 2024 am 11:26 AM

如果您使用過Docker,則必須了解守護程式、容器及其功能。守護程序是在容器已在任何系統中使用時在背景執行的服務。 Podman是一個免費的管理工具,用於管理和建立容器,而不依賴任何守護程序,例如Docker。因此,它在管理貨櫃方面具有優勢,而不需要長期的後台服務。此外,Podman不需要使用根級別的權限。本指南詳細討論如何在Ubuntu24上安裝Podman。更新系統我們先進行系統更新,開啟Ubuntu24的Terminalshell。在安裝和升級過程中,我們都需要使用命令列。一種簡單的

在Ubuntu 24.04上安裝和執行Ubuntu筆記應用程式的方法

Mar 22, 2024 pm 04:40 PM

在Ubuntu 24.04上安裝和執行Ubuntu筆記應用程式的方法

Mar 22, 2024 pm 04:40 PM

在高中學習的時候,有些學生做的筆記非常清晰準確,比同一個班級的其他人都做得更多。對某些人來說,記筆記是一種愛好,而對其他人來說,當他們很容易忘記任何重要事情的小資訊時,則是一種必需品。 Microsoft的NTFS應用程式對於那些希望保存常規講座以外的重要筆記的學生特別有用。在這篇文章中,我們將描述Ubuntu24上的Ubuntu應用程式的安裝。更新Ubuntu系統在安裝Ubuntu安裝程式之前,在Ubuntu24上我們需要確保新設定的系統已經更新。我們可以使用Ubuntu系統中最著名的「a

Win7系統下如何安裝Go語言?

Mar 27, 2024 pm 01:42 PM

Win7系統下如何安裝Go語言?

Mar 27, 2024 pm 01:42 PM

在Win7系統下安裝Go語言是一項相對簡單的操作,只需按照以下步驟進行操作即可成功安裝。以下將詳細介紹在Win7系統下安裝Go語言的方法。第一步:下載Go語言安裝包首先,開啟Go語言官方網站(https://golang.org/),進入下載頁面。在下載頁面中,選擇與Win7系統相容的安裝套件版本進行下載。點擊下載按鈕,等待安裝包下載完成。第二步:安裝Go語言下