Sqoop安装配置及演示

Sqoop是一个用来将Hadoop(Hive、HBase)和关系型数据库中的数据相互转移的工具,可以将一个关系型数据库(例如:MySQL ,Oracle ,Postgres等)中的数据导入到Hadoop的HDFS中,也可以将HDFS的数据导入到关系型数据库中。Sqoop目前已经是Apache的顶级项目了,

Sqoop是一个用来将Hadoop(Hive、HBase)和关系型数据库中的数据相互转移的工具,可以将一个关系型数据库(例如:MySQL ,Oracle ,Postgres等)中的数据导入到Hadoop的HDFS中,也可以将HDFS的数据导入到关系型数据库中。 Sqoop目前已经是Apache的顶级项目了,目前版本是1.4.4 和 Sqoop2 1.99.3,本文以1.4.4的版本为例讲解基本的安装配置和简单应用的演示。- 安装配置

- 准备测试数据

- 导入数据到HDFS

- 导入数据到Hive

- 导入数据到HBase

sqoop-1.4.4.bin__hadoop-2.0.4-alpha.tar.gz

1.1、下载后解压配置:

tar -zxvf sqoop-1.4.4.bin__hadoop-2.0.4-alpha.tar.gz /usr/local/ cd /usr/local ln -s sqoop-1.4.4.bin__hadoop-2.0.4-alpha sqoop

vi ~/.bash_profile :

#Sqoop add by micmiu.com export SQOOP_HOME=/usr/local/sqoop export PATH=$SQOOP_HOME/bin:$PATH

vi ?<sqoop_home>/conf/sqoop-env.sh</sqoop_home>

# 指定各环境变量的实际配置 # Set Hadoop-specific environment variables here. #Set path to where bin/hadoop is available #export HADOOP_COMMON_HOME= #Set path to where hadoop-*-core.jar is available #export HADOOP_MAPRED_HOME= #set the path to where bin/hbase is available #export HBASE_HOME= #Set the path to where bin/hive is available #export HIVE_HOME=

# Hadoop

export HADOOP_PREFIX="/usr/local/hadoop"

export HADOOP_HOME=${HADOOP_PREFIX}

export PATH=$PATH:$HADOOP_PREFIX/bin:$HADOOP_PREFIX/sbin

export HADOOP_COMMON_HOME=${HADOOP_PREFIX}

export HADOOP_HDFS_HOME=${HADOOP_PREFIX}

export HADOOP_MAPRED_HOME=${HADOOP_PREFIX}

export HADOOP_YARN_HOME=${HADOOP_PREFIX}

# Native Path

export HADOOP_COMMON_LIB_NATIVE_DIR=${HADOOP_PREFIX}/lib/native

export HADOOP_OPTS="-Djava.library.path=$HADOOP_PREFIX/lib/native"

# Hadoop end

#Hive

export HIVE_HOME=/usr/local/hive

export PATH=$HIVE_HOME/bin:$PATH

#HBase

export HBASE_HOME=/usr/local/hbase

export PATH=$HBASE

#add by micmiu.com<sqoop_home>/lib</sqoop_home> 目录下。

[二]、测试数据准备

以MySQL 为例:

- 192.168.6.77(hostname:Master.Hadoop)

- database: test

- 用户:root 密码:micmiu

CREATE TABLE `demo_blog` ( `id` int(11) NOT NULL AUTO_INCREMENT, `blog` varchar(100) NOT NULL, PRIMARY KEY (`id`) ) ENGINE=MyISAM DEFAULT CHARSET=utf8;

CREATE TABLE `demo_log` ( `operator` varchar(16) NOT NULL, `log` varchar(100) NOT NULL ) ENGINE=MyISAM DEFAULT CHARSET=utf8;

insert into demo_blog (id, blog) values (1, "micmiu.com");

insert into demo_blog (id, blog) values (2, "ctosun.com");

insert into demo_blog (id, blog) values (3, "baby.micmiu.com");

insert into demo_log (operator, log) values ("micmiu", "create");

insert into demo_log (operator, log) values ("micmiu", "update");

insert into demo_log (operator, log) values ("michael", "edit");

insert into demo_log (operator, log) values ("michael", "delete");sqoop import --connect jdbc:mysql://192.168.6.77/test --username root --password micmiu --table demo_blog

$ sqoop import --connect jdbc:mysql://192.168.6.77/test --username root --password micmiu --table demo_blog Warning: /usr/lib/hcatalog does not exist! HCatalog jobs will fail. Please set $HCAT_HOME to the root of your HCatalog installation. 14/04/09 09:58:43 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead. 14/04/09 09:58:43 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset. 14/04/09 09:58:43 INFO tool.CodeGenTool: Beginning code generation 14/04/09 09:58:43 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `demo_blog` AS t LIMIT 1 14/04/09 09:58:43 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `demo_blog` AS t LIMIT 1 14/04/09 09:58:43 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /usr/local/hadoop Note: /tmp/sqoop-hadoop/compile/e8fd26a5bca5b7f51cdb03bf847ce389/demo_blog.java uses or overrides a deprecated API. Note: Recompile with -Xlint:deprecation for details. 14/04/09 09:58:44 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-hadoop/compile/e8fd26a5bca5b7f51cdb03bf847ce389/demo_blog.jar 14/04/09 09:58:44 WARN manager.MySQLManager: It looks like you are importing from mysql. 14/04/09 09:58:44 WARN manager.MySQLManager: This transfer can be faster! Use the --direct 14/04/09 09:58:44 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path. 14/04/09 09:58:44 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql) 14/04/09 09:58:44 INFO mapreduce.ImportJobBase: Beginning import of demo_blog SLF4J: Class path contains multiple SLF4J bindings. SLF4J: Found binding in [jar:file:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/usr/local/hbase-0.98.0-hadoop2/lib/slf4j-log4j12-1.6.4.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation. SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory] 14/04/09 09:58:44 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar 14/04/09 09:58:45 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps 14/04/09 09:58:45 INFO client.RMProxy: Connecting to ResourceManager at Master.Hadoop/192.168.6.77:8032 14/04/09 09:58:47 INFO db.DataDrivenDBInputFormat: BoundingValsQuery: SELECT MIN(`id`), MAX(`id`) FROM `demo_blog` 14/04/09 09:58:47 INFO mapreduce.JobSubmitter: number of splits:3 14/04/09 09:58:47 INFO Configuration.deprecation: mapred.job.classpath.files is deprecated. Instead, use mapreduce.job.classpath.files 14/04/09 09:58:47 INFO Configuration.deprecation: user.name is deprecated. Instead, use mapreduce.job.user.name 14/04/09 09:58:47 INFO Configuration.deprecation: mapred.cache.files.filesizes is deprecated. Instead, use mapreduce.job.cache.files.filesizes 14/04/09 09:58:47 INFO Configuration.deprecation: mapred.cache.files is deprecated. Instead, use mapreduce.job.cache.files 14/04/09 09:58:47 INFO Configuration.deprecation: mapred.reduce.tasks is deprecated. Instead, use mapreduce.job.reduces 14/04/09 09:58:47 INFO Configuration.deprecation: mapred.output.value.class is deprecated. Instead, use mapreduce.job.output.value.class 14/04/09 09:58:47 INFO Configuration.deprecation: mapreduce.map.class is deprecated. Instead, use mapreduce.job.map.class 14/04/09 09:58:47 INFO Configuration.deprecation: mapred.job.name is deprecated. Instead, use mapreduce.job.name 14/04/09 09:58:47 INFO Configuration.deprecation: mapreduce.inputformat.class is deprecated. Instead, use mapreduce.job.inputformat.class 14/04/09 09:58:47 INFO Configuration.deprecation: mapred.output.dir is deprecated. Instead, use mapreduce.output.fileoutputformat.outputdir 14/04/09 09:58:47 INFO Configuration.deprecation: mapreduce.outputformat.class is deprecated. Instead, use mapreduce.job.outputformat.class 14/04/09 09:58:47 INFO Configuration.deprecation: mapred.cache.files.timestamps is deprecated. Instead, use mapreduce.job.cache.files.timestamps 14/04/09 09:58:47 INFO Configuration.deprecation: mapred.output.key.class is deprecated. Instead, use mapreduce.job.output.key.class 14/04/09 09:58:47 INFO Configuration.deprecation: mapred.working.dir is deprecated. Instead, use mapreduce.job.working.dir 14/04/09 09:58:47 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1396936838233_0001 14/04/09 09:58:47 INFO impl.YarnClientImpl: Submitted application application_1396936838233_0001 to ResourceManager at Master.Hadoop/192.168.6.77:8032 14/04/09 09:58:47 INFO mapreduce.Job: The url to track the job: http://Master.Hadoop:8088/proxy/application_1396936838233_0001/ 14/04/09 09:58:47 INFO mapreduce.Job: Running job: job_1396936838233_0001 14/04/09 09:59:00 INFO mapreduce.Job: Job job_1396936838233_0001 running in uber mode : false 14/04/09 09:59:00 INFO mapreduce.Job: map 0% reduce 0% 14/04/09 09:59:14 INFO mapreduce.Job: map 33% reduce 0% 14/04/09 09:59:16 INFO mapreduce.Job: map 67% reduce 0% 14/04/09 09:59:19 INFO mapreduce.Job: map 100% reduce 0% 14/04/09 09:59:19 INFO mapreduce.Job: Job job_1396936838233_0001 completed successfully 14/04/09 09:59:19 INFO mapreduce.Job: Counters: 27 File System Counters FILE: Number of bytes read=0 FILE: Number of bytes written=271866 FILE: Number of read operations=0 FILE: Number of large read operations=0 FILE: Number of write operations=0 HDFS: Number of bytes read=295 HDFS: Number of bytes written=44 HDFS: Number of read operations=12 HDFS: Number of large read operations=0 HDFS: Number of write operations=6 Job Counters Launched map tasks=3 Other local map tasks=3 Total time spent by all maps in occupied slots (ms)=43032 Total time spent by all reduces in occupied slots (ms)=0 Map-Reduce Framework Map input records=3 Map output records=3 Input split bytes=295 Spilled Records=0 Failed Shuffles=0 Merged Map outputs=0 GC time elapsed (ms)=590 CPU time spent (ms)=6330 Physical memory (bytes) snapshot=440934400 Virtual memory (bytes) snapshot=3882573824 Total committed heap usage (bytes)=160563200 File Input Format Counters Bytes Read=0 File Output Format Counters Bytes Written=44 14/04/09 09:59:19 INFO mapreduce.ImportJobBase: Transferred 44 bytes in 34.454 seconds (1.2771 bytes/sec) 14/04/09 09:59:19 INFO mapreduce.ImportJobBase: Retrieved 3 records.

$ hdfs dfs -ls /user/hadoop/demo_blog Found 4 items -rw-r--r-- 3 hadoop supergroup 0 2014-04-09 09:59 /user/hadoop/demo_blog/_SUCCESS -rw-r--r-- 3 hadoop supergroup 13 2014-04-09 09:59 /user/hadoop/demo_blog/part-m-00000 -rw-r--r-- 3 hadoop supergroup 13 2014-04-09 09:59 /user/hadoop/demo_blog/part-m-00001 -rw-r--r-- 3 hadoop supergroup 18 2014-04-09 09:59 /user/hadoop/demo_blog/part-m-00002 [hadoop@Master ~]$ hdfs dfs -cat /user/hadoop/demo_blog/part-m-0000* 1,micmiu.com 2,ctosun.com 3,baby.micmiu.com

/user/username/tablename/(files),比如我的当前用户是hadoop,那么实际路径即:?/user/hadoop/demo_blog/(files)。

如果要自定义路径需要增加参数:--warehouse-dir 比如:

sqoop import --connect jdbc:mysql://Master.Hadoop/test --username root --password micmiu --table demo_blog --warehouse-dir /user/micmiu/sqoop

sqoop import --connect jdbc:mysql://192.168.6.77/test --username root --password micmiu --table demo_log --warehouse-dir /user/micmiu/sqoop --split-by operator

--split-by xxx ?或者 -m 1

执行过程:

$ sqoop import --connect jdbc:mysql://192.168.6.77/test --username root --password micmiu --table demo_log --warehouse-dir /user/micmiu/sqoop --split-by operator Warning: /usr/lib/hcatalog does not exist! HCatalog jobs will fail. Please set $HCAT_HOME to the root of your HCatalog installation. 14/04/09 15:02:06 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead. 14/04/09 15:02:06 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset. 14/04/09 15:02:06 INFO tool.CodeGenTool: Beginning code generation 14/04/09 15:02:06 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `demo_log` AS t LIMIT 1 14/04/09 15:02:06 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `demo_log` AS t LIMIT 1 14/04/09 15:02:06 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /usr/local/hadoop Note: /tmp/sqoop-hadoop/compile/dddc1bcdba30515f95a2d604f22e4fe9/demo_log.java uses or overrides a deprecated API. Note: Recompile with -Xlint:deprecation for details. 14/04/09 15:02:07 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-hadoop/compile/dddc1bcdba30515f95a2d604f22e4fe9/demo_log.jar 14/04/09 15:02:07 WARN manager.MySQLManager: It looks like you are importing from mysql. 14/04/09 15:02:07 WARN manager.MySQLManager: This transfer can be faster! Use the --direct 14/04/09 15:02:07 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path. 14/04/09 15:02:07 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql) 14/04/09 15:02:07 INFO mapreduce.ImportJobBase: Beginning import of demo_log SLF4J: Class path contains multiple SLF4J bindings. SLF4J: Found binding in [jar:file:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/usr/local/hbase-0.98.0-hadoop2/lib/slf4j-log4j12-1.6.4.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation. SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory] 14/04/09 15:02:07 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar 14/04/09 15:02:08 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps 14/04/09 15:02:08 INFO client.RMProxy: Connecting to ResourceManager at Master.Hadoop/192.168.6.77:8032 14/04/09 15:02:10 INFO db.DataDrivenDBInputFormat: BoundingValsQuery: SELECT MIN(`operator`), MAX(`operator`) FROM `demo_log` 14/04/09 15:02:10 WARN db.TextSplitter: Generating splits for a textual index column. 14/04/09 15:02:10 WARN db.TextSplitter: If your database sorts in a case-insensitive order, this may result in a partial import or duplicate records. 14/04/09 15:02:10 WARN db.TextSplitter: You are strongly encouraged to choose an integral split column. 14/04/09 15:02:10 INFO mapreduce.JobSubmitter: number of splits:4 14/04/09 15:02:10 INFO Configuration.deprecation: mapred.job.classpath.files is deprecated. Instead, use mapreduce.job.classpath.files 14/04/09 15:02:10 INFO Configuration.deprecation: user.name is deprecated. Instead, use mapreduce.job.user.name 14/04/09 15:02:10 INFO Configuration.deprecation: mapred.cache.files.filesizes is deprecated. Instead, use mapreduce.job.cache.files.filesizes 14/04/09 15:02:10 INFO Configuration.deprecation: mapred.cache.files is deprecated. Instead, use mapreduce.job.cache.files 14/04/09 15:02:10 INFO Configuration.deprecation: mapred.reduce.tasks is deprecated. Instead, use mapreduce.job.reduces 14/04/09 15:02:10 INFO Configuration.deprecation: mapred.output.value.class is deprecated. Instead, use mapreduce.job.output.value.class 14/04/09 15:02:10 INFO Configuration.deprecation: mapreduce.map.class is deprecated. Instead, use mapreduce.job.map.class 14/04/09 15:02:10 INFO Configuration.deprecation: mapred.job.name is deprecated. Instead, use mapreduce.job.name 14/04/09 15:02:10 INFO Configuration.deprecation: mapreduce.inputformat.class is deprecated. Instead, use mapreduce.job.inputformat.class 14/04/09 15:02:10 INFO Configuration.deprecation: mapred.output.dir is deprecated. Instead, use mapreduce.output.fileoutputformat.outputdir 14/04/09 15:02:10 INFO Configuration.deprecation: mapreduce.outputformat.class is deprecated. Instead, use mapreduce.job.outputformat.class 14/04/09 15:02:10 INFO Configuration.deprecation: mapred.cache.files.timestamps is deprecated. Instead, use mapreduce.job.cache.files.timestamps 14/04/09 15:02:10 INFO Configuration.deprecation: mapred.output.key.class is deprecated. Instead, use mapreduce.job.output.key.class 14/04/09 15:02:10 INFO Configuration.deprecation: mapred.working.dir is deprecated. Instead, use mapreduce.job.working.dir 14/04/09 15:02:10 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1396936838233_0003 14/04/09 15:02:10 INFO impl.YarnClientImpl: Submitted application application_1396936838233_0003 to ResourceManager at Master.Hadoop/192.168.6.77:8032 14/04/09 15:02:10 INFO mapreduce.Job: The url to track the job: http://Master.Hadoop:8088/proxy/application_1396936838233_0003/ 14/04/09 15:02:10 INFO mapreduce.Job: Running job: job_1396936838233_0003 14/04/09 15:02:17 INFO mapreduce.Job: Job job_1396936838233_0003 running in uber mode : false 14/04/09 15:02:17 INFO mapreduce.Job: map 0% reduce 0% 14/04/09 15:02:28 INFO mapreduce.Job: map 25% reduce 0% 14/04/09 15:02:30 INFO mapreduce.Job: map 50% reduce 0% 14/04/09 15:02:33 INFO mapreduce.Job: map 100% reduce 0% 14/04/09 15:02:33 INFO mapreduce.Job: Job job_1396936838233_0003 completed successfully 14/04/09 15:02:33 INFO mapreduce.Job: Counters: 27 File System Counters FILE: Number of bytes read=0 FILE: Number of bytes written=362536 FILE: Number of read operations=0 FILE: Number of large read operations=0 FILE: Number of write operations=0 HDFS: Number of bytes read=516 HDFS: Number of bytes written=56 HDFS: Number of read operations=16 HDFS: Number of large read operations=0 HDFS: Number of write operations=8 Job Counters Launched map tasks=4 Other local map tasks=4 Total time spent by all maps in occupied slots (ms)=44481 Total time spent by all reduces in occupied slots (ms)=0 Map-Reduce Framework Map input records=4 Map output records=4 Input split bytes=516 Spilled Records=0 Failed Shuffles=0 Merged Map outputs=0 GC time elapsed (ms)=429 CPU time spent (ms)=6650 Physical memory (bytes) snapshot=587669504 Virtual memory (bytes) snapshot=5219356672 Total committed heap usage (bytes)=205848576 File Input Format Counters Bytes Read=0 File Output Format Counters Bytes Written=56 14/04/09 15:02:33 INFO mapreduce.ImportJobBase: Transferred 56 bytes in 25.2746 seconds (2.2157 bytes/sec) 14/04/09 15:02:33 INFO mapreduce.ImportJobBase: Retrieved 4 records.

$ hdfs dfs -ls /user/micmiu/sqoop/demo_log Found 5 items -rw-r--r-- 3 hadoop supergroup 0 2014-04-09 15:02 /user/micmiu/sqoop/demo_log/_SUCCESS -rw-r--r-- 3 hadoop supergroup 28 2014-04-09 15:02 /user/micmiu/sqoop/demo_log/part-m-00000 -rw-r--r-- 3 hadoop supergroup 0 2014-04-09 15:02 /user/micmiu/sqoop/demo_log/part-m-00001 -rw-r--r-- 3 hadoop supergroup 0 2014-04-09 15:02 /user/micmiu/sqoop/demo_log/part-m-00002 -rw-r--r-- 3 hadoop supergroup 28 2014-04-09 15:02 /user/micmiu/sqoop/demo_log/part-m-00003 $ hdfs dfs -cat /user/micmiu/sqoop/demo_log/part-m-0000* michael,edit michael,delete micmiu,create micmiu,update

sqoop import --connect jdbc:mysql://192.168.6.77/test --username root --password micmiu --table demo_blog --warehouse-dir /user/sqoop --hive-import --create-hive-table

$ sqoop import --connect jdbc:mysql://192.168.6.77/test --username root --password micmiu --table demo_blog --warehouse-dir /user/sqoop --hive-import --create-hive-table Warning: /usr/lib/hcatalog does not exist! HCatalog jobs will fail. Please set $HCAT_HOME to the root of your HCatalog installation. 14/04/09 10:44:21 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead. 14/04/09 10:44:21 INFO tool.BaseSqoopTool: Using Hive-specific delimiters for output. You can override 14/04/09 10:44:21 INFO tool.BaseSqoopTool: delimiters with --fields-terminated-by, etc. 14/04/09 10:44:21 WARN tool.BaseSqoopTool: It seems that you've specified at least one of following: 14/04/09 10:44:21 WARN tool.BaseSqoopTool: --hive-home 14/04/09 10:44:21 WARN tool.BaseSqoopTool: --hive-overwrite 14/04/09 10:44:21 WARN tool.BaseSqoopTool: --create-hive-table 14/04/09 10:44:21 WARN tool.BaseSqoopTool: --hive-table 14/04/09 10:44:21 WARN tool.BaseSqoopTool: --hive-partition-key 14/04/09 10:44:21 WARN tool.BaseSqoopTool: --hive-partition-value 14/04/09 10:44:21 WARN tool.BaseSqoopTool: --map-column-hive 14/04/09 10:44:21 WARN tool.BaseSqoopTool: Without specifying parameter --hive-import. Please note that 14/04/09 10:44:21 WARN tool.BaseSqoopTool: those arguments will not be used in this session. Either 14/04/09 10:44:21 WARN tool.BaseSqoopTool: specify --hive-import to apply them correctly or remove them 14/04/09 10:44:21 WARN tool.BaseSqoopTool: from command line to remove this warning. 14/04/09 10:44:21 INFO tool.BaseSqoopTool: Please note that --hive-home, --hive-partition-key, 14/04/09 10:44:21 INFO tool.BaseSqoopTool: hive-partition-value and --map-column-hive options are 14/04/09 10:44:21 INFO tool.BaseSqoopTool: are also valid for HCatalog imports and exports 14/04/09 10:44:21 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset. 14/04/09 10:44:21 INFO tool.CodeGenTool: Beginning code generation 14/04/09 10:44:21 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `demo_blog` AS t LIMIT 1 14/04/09 10:44:21 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `demo_blog` AS t LIMIT 1 14/04/09 10:44:21 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /usr/local/hadoop Note: /tmp/sqoop-hadoop/compile/c071f02ecad006293202fd2c2fad0dce/demo_blog.java uses or overrides a deprecated API. Note: Recompile with -Xlint:deprecation for details. 14/04/09 10:44:22 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-hadoop/compile/c071f02ecad006293202fd2c2fad0dce/demo_blog.jar 14/04/09 10:44:22 WARN manager.MySQLManager: It looks like you are importing from mysql. 14/04/09 10:44:22 WARN manager.MySQLManager: This transfer can be faster! Use the --direct 14/04/09 10:44:22 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path. 14/04/09 10:44:22 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql) 14/04/09 10:44:22 INFO mapreduce.ImportJobBase: Beginning import of demo_blog SLF4J: Class path contains multiple SLF4J bindings. SLF4J: Found binding in [jar:file:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/usr/local/hbase-0.98.0-hadoop2/lib/slf4j-log4j12-1.6.4.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation. SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory] 14/04/09 10:44:22 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar 14/04/09 10:44:23 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps 14/04/09 10:44:23 INFO client.RMProxy: Connecting to ResourceManager at Master.Hadoop/192.168.6.77:8032 14/04/09 10:44:25 INFO db.DataDrivenDBInputFormat: BoundingValsQuery: SELECT MIN(`id`), MAX(`id`) FROM `demo_blog` 14/04/09 10:44:25 INFO mapreduce.JobSubmitter: number of splits:3 14/04/09 10:44:25 INFO Configuration.deprecation: mapred.job.classpath.files is deprecated. Instead, use mapreduce.job.classpath.files 14/04/09 10:44:25 INFO Configuration.deprecation: user.name is deprecated. Instead, use mapreduce.job.user.name 14/04/09 10:44:25 INFO Configuration.deprecation: mapred.cache.files.filesizes is deprecated. Instead, use mapreduce.job.cache.files.filesizes 14/04/09 10:44:25 INFO Configuration.deprecation: mapred.cache.files is deprecated. Instead, use mapreduce.job.cache.files 14/04/09 10:44:25 INFO Configuration.deprecation: mapred.reduce.tasks is deprecated. Instead, use mapreduce.job.reduces 14/04/09 10:44:25 INFO Configuration.deprecation: mapred.output.value.class is deprecated. Instead, use mapreduce.job.output.value.class 14/04/09 10:44:25 INFO Configuration.deprecation: mapreduce.map.class is deprecated. Instead, use mapreduce.job.map.class 14/04/09 10:44:25 INFO Configuration.deprecation: mapred.job.name is deprecated. Instead, use mapreduce.job.name 14/04/09 10:44:25 INFO Configuration.deprecation: mapreduce.inputformat.class is deprecated. Instead, use mapreduce.job.inputformat.class 14/04/09 10:44:25 INFO Configuration.deprecation: mapred.output.dir is deprecated. Instead, use mapreduce.output.fileoutputformat.outputdir 14/04/09 10:44:25 INFO Configuration.deprecation: mapreduce.outputformat.class is deprecated. Instead, use mapreduce.job.outputformat.class 14/04/09 10:44:25 INFO Configuration.deprecation: mapred.cache.files.timestamps is deprecated. Instead, use mapreduce.job.cache.files.timestamps 14/04/09 10:44:25 INFO Configuration.deprecation: mapred.output.key.class is deprecated. Instead, use mapreduce.job.output.key.class 14/04/09 10:44:25 INFO Configuration.deprecation: mapred.working.dir is deprecated. Instead, use mapreduce.job.working.dir 14/04/09 10:44:25 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1396936838233_0002 14/04/09 10:44:25 INFO impl.YarnClientImpl: Submitted application application_1396936838233_0002 to ResourceManager at Master.Hadoop/192.168.6.77:8032 14/04/09 10:44:25 INFO mapreduce.Job: The url to track the job: http://Master.Hadoop:8088/proxy/application_1396936838233_0002/ 14/04/09 10:44:25 INFO mapreduce.Job: Running job: job_1396936838233_0002 14/04/09 10:44:33 INFO mapreduce.Job: Job job_1396936838233_0002 running in uber mode : false 14/04/09 10:44:33 INFO mapreduce.Job: map 0% reduce 0% 14/04/09 10:44:46 INFO mapreduce.Job: map 67% reduce 0% 14/04/09 10:44:48 INFO mapreduce.Job: map 100% reduce 0% 14/04/09 10:44:49 INFO mapreduce.Job: Job job_1396936838233_0002 completed successfully 14/04/09 10:44:49 INFO mapreduce.Job: Counters: 27 File System Counters FILE: Number of bytes read=0 FILE: Number of bytes written=271860 FILE: Number of read operations=0 FILE: Number of large read operations=0 FILE: Number of write operations=0 HDFS: Number of bytes read=295 HDFS: Number of bytes written=44 HDFS: Number of read operations=12 HDFS: Number of large read operations=0 HDFS: Number of write operations=6 Job Counters Launched map tasks=3 Other local map tasks=3 Total time spent by all maps in occupied slots (ms)=34047 Total time spent by all reduces in occupied slots (ms)=0 Map-Reduce Framework Map input records=3 Map output records=3 Input split bytes=295 Spilled Records=0 Failed Shuffles=0 Merged Map outputs=0 GC time elapsed (ms)=505 CPU time spent (ms)=5350 Physical memory (bytes) snapshot=427388928 Virtual memory (bytes) snapshot=3881439232 Total committed heap usage (bytes)=171638784 File Input Format Counters Bytes Read=0 File Output Format Counters Bytes Written=44 14/04/09 10:44:49 INFO mapreduce.ImportJobBase: Transferred 44 bytes in 26.0401 seconds (1.6897 bytes/sec) 14/04/09 10:44:49 INFO mapreduce.ImportJobBase: Retrieved 3 records. 14/04/09 10:44:49 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `demo_blog` AS t LIMIT 1 14/04/09 10:44:49 INFO hive.HiveImport: Loading uploaded data into Hive 14/04/09 10:44:52 INFO hive.HiveImport: 14/04/09 10:44:52 INFO Configuration.deprecation: mapred.reduce.tasks is deprecated. Instead, use mapreduce.job.reduces 14/04/09 10:44:52 INFO hive.HiveImport: 14/04/09 10:44:52 INFO Configuration.deprecation: mapred.min.split.size is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize 14/04/09 10:44:52 INFO hive.HiveImport: 14/04/09 10:44:52 INFO Configuration.deprecation: mapred.reduce.tasks.speculative.execution is deprecated. Instead, use mapreduce.reduce.speculative 14/04/09 10:44:52 INFO hive.HiveImport: 14/04/09 10:44:52 INFO Configuration.deprecation: mapred.min.split.size.per.node is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize.per.node 14/04/09 10:44:52 INFO hive.HiveImport: 14/04/09 10:44:52 INFO Configuration.deprecation: mapred.input.dir.recursive is deprecated. Instead, use mapreduce.input.fileinputformat.input.dir.recursive 14/04/09 10:44:52 INFO hive.HiveImport: 14/04/09 10:44:52 INFO Configuration.deprecation: mapred.min.split.size.per.rack is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize.per.rack 14/04/09 10:44:52 INFO hive.HiveImport: 14/04/09 10:44:52 INFO Configuration.deprecation: mapred.max.split.size is deprecated. Instead, use mapreduce.input.fileinputformat.split.maxsize 14/04/09 10:44:52 INFO hive.HiveImport: 14/04/09 10:44:52 INFO Configuration.deprecation: mapred.committer.job.setup.cleanup.needed is deprecated. Instead, use mapreduce.job.committer.setup.cleanup.needed 14/04/09 10:44:53 INFO hive.HiveImport: 14/04/09 10:44:53 WARN conf.HiveConf: DEPRECATED: hive.metastore.ds.retry.* no longer has any effect. Use hive.hmshandler.retry.* instead 14/04/09 10:44:53 INFO hive.HiveImport: 14/04/09 10:44:53 INFO hive.HiveImport: Logging initialized using configuration in file:/usr/local/hive-0.13.0-bin/conf/hive-log4j.properties 14/04/09 10:44:53 INFO hive.HiveImport: SLF4J: Class path contains multiple SLF4J bindings. 14/04/09 10:44:53 INFO hive.HiveImport: SLF4J: Found binding in [jar:file:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class] 14/04/09 10:44:53 INFO hive.HiveImport: SLF4J: Found binding in [jar:file:/usr/local/hbase-0.98.0-hadoop2/lib/slf4j-log4j12-1.6.4.jar!/org/slf4j/impl/StaticLoggerBinder.class] 14/04/09 10:44:53 INFO hive.HiveImport: SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation. 14/04/09 10:44:53 INFO hive.HiveImport: SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory] 14/04/09 10:44:57 INFO hive.HiveImport: OK 14/04/09 10:44:57 INFO hive.HiveImport: Time taken: 0.773 seconds 14/04/09 10:44:57 INFO hive.HiveImport: Loading data to table default.demo_blog 14/04/09 10:44:57 INFO hive.HiveImport: Table default.demo_blog stats: [numFiles=4, numRows=0, totalSize=44, rawDataSize=0] 14/04/09 10:44:57 INFO hive.HiveImport: OK 14/04/09 10:44:57 INFO hive.HiveImport: Time taken: 0.25 seconds 14/04/09 10:44:57 INFO hive.HiveImport: Hive import complete. 14/04/09 10:44:57 INFO hive.HiveImport: Export directory is empty, removing it

hive> show tables; OK demo_blog hbase_table_1 hbase_table_2 hbase_table_3 micmiu_blog micmiu_hx_master pokes xflow_dstip Time taken: 0.073 seconds, Fetched: 8 row(s) hive> select * from demo_blog; OK 1 micmiu.com 2 ctosun.com 3 baby.micmiu.com Time taken: 0.506 seconds, Fetched: 3 row(s)

sqoop import --connect jdbc:mysql://192.168.6.77/test --username root --password micmiu --table demo_blog --hbase-table demo_sqoop2hbase --hbase-create-table --hbase-row-key id --column-family url

$ sqoop import --connect jdbc:mysql://192.168.6.77/test --username root --password micmiu --table demo_blog --hbase-table demo_sqoop2hbase --hbase-create-table --hbase-row-key id --column-family url Warning: /usr/lib/hcatalog does not exist! HCatalog jobs will fail. Please set $HCAT_HOME to the root of your HCatalog installation. 14/04/09 16:23:38 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead. 14/04/09 16:23:38 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset. 14/04/09 16:23:38 INFO tool.CodeGenTool: Beginning code generation 14/04/09 16:23:39 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `demo_blog` AS t LIMIT 1 14/04/09 16:23:39 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `demo_blog` AS t LIMIT 1 14/04/09 16:23:39 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /usr/local/hadoop Note: /tmp/sqoop-hadoop/compile/85408c854ee8fba75bbb2458e5e25093/demo_blog.java uses or overrides a deprecated API. Note: Recompile with -Xlint:deprecation for details. 14/04/09 16:23:40 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-hadoop/compile/85408c854ee8fba75bbb2458e5e25093/demo_blog.jar 14/04/09 16:23:40 WARN manager.MySQLManager: It looks like you are importing from mysql. 14/04/09 16:23:40 WARN manager.MySQLManager: This transfer can be faster! Use the --direct 14/04/09 16:23:40 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path. 14/04/09 16:23:40 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql) 14/04/09 16:23:40 INFO mapreduce.ImportJobBase: Beginning import of demo_blog SLF4J: Class path contains multiple SLF4J bindings. SLF4J: Found binding in [jar:file:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/usr/local/hbase-0.98.0-hadoop2/lib/slf4j-log4j12-1.6.4.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation. SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory] 14/04/09 16:23:40 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar 14/04/09 16:23:40 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:zookeeper.version=3.4.5-1392090, built on 09/30/2012 17:52 GMT 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:host.name=Master.Hadoop 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:java.version=1.6.0_20 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:java.vendor=Sun Microsystems Inc. 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:java.home=/java/jdk1.6.0_20/jre 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:java.class.path=/usr/local/hadoop/etc/hadoop: ....... 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:java.library.path=/usr/local/hadoop/lib/native 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:java.io.tmpdir=/tmp 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:java.compiler= 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:os.name=Linux 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:os.arch=amd64 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:os.version=2.6.32-71.el6.x86_64 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:user.name=hadoop 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:user.home=/home/hadoop 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:user.dir=/home/hadoop 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Initiating client connection, connectString=Slave6.Hadoop:2181,Slave5.Hadoop:2181,Slave7.Hadoop:2181 sessionTimeout=90000 watcher=hconnection-0x57c8b24d, quorum=Slave6.Hadoop:2181,Slave5.Hadoop:2181,Slave7.Hadoop:2181, baseZNode=/hbase 14/04/09 16:23:41 INFO zookeeper.ClientCnxn: Opening socket connection to server Slave5.Hadoop/192.168.8.205:2181. Will not attempt to authenticate using SASL (Unable to locate a login configuration) 14/04/09 16:23:41 INFO zookeeper.RecoverableZooKeeper: Process identifier=hconnection-0x57c8b24d connecting to ZooKeeper ensemble=Slave6.Hadoop:2181,Slave5.Hadoop:2181,Slave7.Hadoop:2181 14/04/09 16:23:41 INFO zookeeper.ClientCnxn: Socket connection established to Slave5.Hadoop/192.168.8.205:2181, initiating session 14/04/09 16:23:41 INFO zookeeper.ClientCnxn: Session establishment complete on server Slave5.Hadoop/192.168.8.205:2181, sessionid = 0x453fecb6c50009, negotiated timeout = 90000 14/04/09 16:23:41 INFO Configuration.deprecation: hadoop.native.lib is deprecated. Instead, use io.native.lib.available 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Initiating client connection, connectString=Slave6.Hadoop:2181,Slave5.Hadoop:2181,Slave7.Hadoop:2181 sessionTimeout=90000 watcher=catalogtracker-on-hconnection-0x57c8b24d, quorum=Slave6.Hadoop:2181,Slave5.Hadoop:2181,Slave7.Hadoop:2181, baseZNode=/hbase 14/04/09 16:23:41 INFO zookeeper.ClientCnxn: Opening socket connection to server Slave7.Hadoop/192.168.8.207:2181. Will not attempt to authenticate using SASL (Unable to locate a login configuration) 14/04/09 16:23:41 INFO zookeeper.RecoverableZooKeeper: Process identifier=catalogtracker-on-hconnection-0x57c8b24d connecting to ZooKeeper ensemble=Slave6.Hadoop:2181,Slave5.Hadoop:2181,Slave7.Hadoop:2181 14/04/09 16:23:41 INFO zookeeper.ClientCnxn: Socket connection established to Slave7.Hadoop/192.168.8.207:2181, initiating session 14/04/09 16:23:41 INFO zookeeper.ClientCnxn: Session establishment complete on server Slave7.Hadoop/192.168.8.207:2181, sessionid = 0x2453fecb6f50008, negotiated timeout = 90000 14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Session: 0x2453fecb6f50008 closed 14/04/09 16:23:41 INFO zookeeper.ClientCnxn: EventThread shut down 14/04/09 16:23:41 INFO mapreduce.HBaseImportJob: Creating missing HBase table demo_sqoop2hbase 14/04/09 16:23:42 INFO zookeeper.ZooKeeper: Initiating client connection, connectString=Slave6.Hadoop:2181,Slave5.Hadoop:2181,Slave7.Hadoop:2181 sessionTimeout=90000 watcher=catalogtracker-on-hconnection-0x57c8b24d, quorum=Slave6.Hadoop:2181,Slave5.Hadoop:2181,Slave7.Hadoop:2181, baseZNode=/hbase 14/04/09 16:23:42 INFO zookeeper.RecoverableZooKeeper: Process identifier=catalogtracker-on-hconnection-0x57c8b24d connecting to ZooKeeper ensemble=Slave6.Hadoop:2181,Slave5.Hadoop:2181,Slave7.Hadoop:2181 14/04/09 16:23:42 INFO zookeeper.ClientCnxn: Opening socket connection to server Slave7.Hadoop/192.168.8.207:2181. Will not attempt to authenticate using SASL (Unable to locate a login configuration) 14/04/09 16:23:42 INFO zookeeper.ClientCnxn: Socket connection established to Slave7.Hadoop/192.168.8.207:2181, initiating session 14/04/09 16:23:42 INFO zookeeper.ClientCnxn: Session establishment complete on server Slave7.Hadoop/192.168.8.207:2181, sessionid = 0x2453fecb6f50009, negotiated timeout = 90000 14/04/09 16:23:42 INFO zookeeper.ZooKeeper: Session: 0x2453fecb6f50009 closed 14/04/09 16:23:42 INFO zookeeper.ClientCnxn: EventThread shut down 14/04/09 16:23:42 INFO client.RMProxy: Connecting to ResourceManager at Master.Hadoop/192.168.6.77:8032 14/04/09 16:23:47 INFO db.DataDrivenDBInputFormat: BoundingValsQuery: SELECT MIN(`id`), MAX(`id`) FROM `demo_blog` 14/04/09 16:23:47 INFO mapreduce.JobSubmitter: number of splits:3 14/04/09 16:23:47 INFO Configuration.deprecation: mapred.job.classpath.files is deprecated. Instead, use mapreduce.job.classpath.files 14/04/09 16:23:47 INFO Configuration.deprecation: user.name is deprecated. Instead, use mapreduce.job.user.name 14/04/09 16:23:47 INFO Configuration.deprecation: mapred.cache.files.filesizes is deprecated. Instead, use mapreduce.job.cache.files.filesizes 14/04/09 16:23:47 INFO Configuration.deprecation: mapred.cache.files is deprecated. Instead, use mapreduce.job.cache.files 14/04/09 16:23:47 INFO Configuration.deprecation: mapred.reduce.tasks is deprecated. Instead, use mapreduce.job.reduces 14/04/09 16:23:47 INFO Configuration.deprecation: mapred.output.value.class is deprecated. Instead, use mapreduce.job.output.value.class 14/04/09 16:23:47 INFO Configuration.deprecation: mapreduce.map.class is deprecated. Instead, use mapreduce.job.map.class 14/04/09 16:23:47 INFO Configuration.deprecation: mapred.job.name is deprecated. Instead, use mapreduce.job.name 14/04/09 16:23:47 INFO Configuration.deprecation: mapreduce.inputformat.class is deprecated. Instead, use mapreduce.job.inputformat.class 14/04/09 16:23:47 INFO Configuration.deprecation: mapreduce.outputformat.class is deprecated. Instead, use mapreduce.job.outputformat.class 14/04/09 16:23:47 INFO Configuration.deprecation: mapred.cache.files.timestamps is deprecated. Instead, use mapreduce.job.cache.files.timestamps 14/04/09 16:23:47 INFO Configuration.deprecation: mapred.output.key.class is deprecated. Instead, use mapreduce.job.output.key.class 14/04/09 16:23:47 INFO Configuration.deprecation: mapred.working.dir is deprecated. Instead, use mapreduce.job.working.dir 14/04/09 16:23:47 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1396936838233_0005 14/04/09 16:23:47 INFO impl.YarnClientImpl: Submitted application application_1396936838233_0005 to ResourceManager at Master.Hadoop/192.168.6.77:8032 14/04/09 16:23:47 INFO mapreduce.Job: The url to track the job: http://Master.Hadoop:8088/proxy/application_1396936838233_0005/ 14/04/09 16:23:47 INFO mapreduce.Job: Running job: job_1396936838233_0005 14/04/09 16:23:55 INFO mapreduce.Job: Job job_1396936838233_0005 running in uber mode : false 14/04/09 16:23:55 INFO mapreduce.Job: map 0% reduce 0% 14/04/09 16:24:05 INFO mapreduce.Job: map 33% reduce 0% 14/04/09 16:24:12 INFO mapreduce.Job: map 100% reduce 0% 14/04/09 16:24:12 INFO mapreduce.Job: Job job_1396936838233_0005 completed successfully 14/04/09 16:24:12 INFO mapreduce.Job: Counters: 27 File System Counters FILE: Number of bytes read=0 FILE: Number of bytes written=354636 FILE: Number of read operations=0 FILE: Number of large read operations=0 FILE: Number of write operations=0 HDFS: Number of bytes read=295 HDFS: Number of bytes written=0 HDFS: Number of read operations=3 HDFS: Number of large read operations=0 HDFS: Number of write operations=0 Job Counters Launched map tasks=3 Other local map tasks=3 Total time spent by all maps in occupied slots (ms)=35297 Total time spent by all reduces in occupied slots (ms)=0 Map-Reduce Framework Map input records=3 Map output records=3 Input split bytes=295 Spilled Records=0 Failed Shuffles=0 Merged Map outputs=0 GC time elapsed (ms)=381 CPU time spent (ms)=11050 Physical memory (bytes) snapshot=543367168 Virtual memory (bytes) snapshot=3918925824 Total committed heap usage (bytes)=156958720 File Input Format Counters Bytes Read=0 File Output Format Counters Bytes Written=0 14/04/09 16:24:12 INFO mapreduce.ImportJobBase: Transferred 0 bytes in 29.7126 seconds (0 bytes/sec) 14/04/09 16:24:12 INFO mapreduce.ImportJobBase: Retrieved 3 records.

hbase(main):009:0> list

TABLE

demo_sqoop2hbase

table_02

table_03

test_table

xyz

5 row(s) in 0.0310 seconds

=> ["demo_sqoop2hbase", "table_02", "table_03", "test_table", "xyz"]

hbase(main):010:0> scan "demo_sqoop2hbase"

ROW COLUMN+CELL

1 column=url:blog, timestamp=1397031850700, value=micmiu.com

2 column=url:blog, timestamp=1397031844106, value=ctosun.com

3 column=url:blog, timestamp=1397031849888, value=baby.micmiu.com

3 row(s) in 0.0730 seconds

hbase(main):011:0> describe "demo_sqoop2hbase"

DESCRIPTION ENABLED

'demo_sqoop2hbase', {NAME => 'url', DATA_BLOCK_ENCODING => 'NONE', BL true

OOMFILTER => 'ROW', REPLICATION_SCOPE => '0', VERSIONS => '1', COMPRE

SSION => 'NONE', MIN_VERSIONS => '0', TTL => '2147483647', KEEP_DELET

ED_CELLS => 'false', BLOCKSIZE => '65536', IN_MEMORY => 'false', BLOC

KCACHE => 'true'}

1 row(s) in 0.0580 seconds

hbase(main):012:0>- http://sqoop.apache.org/docs/1.4.4/SqoopUserGuide.html

原文地址:Sqoop安装配置及演示, 感谢原作者分享。

핫 AI 도구

Undresser.AI Undress

사실적인 누드 사진을 만들기 위한 AI 기반 앱

AI Clothes Remover

사진에서 옷을 제거하는 온라인 AI 도구입니다.

Undress AI Tool

무료로 이미지를 벗다

Clothoff.io

AI 옷 제거제

Video Face Swap

완전히 무료인 AI 얼굴 교환 도구를 사용하여 모든 비디오의 얼굴을 쉽게 바꾸세요!

인기 기사

뜨거운 도구

메모장++7.3.1

사용하기 쉬운 무료 코드 편집기

SublimeText3 중국어 버전

중국어 버전, 사용하기 매우 쉽습니다.

스튜디오 13.0.1 보내기

강력한 PHP 통합 개발 환경

드림위버 CS6

시각적 웹 개발 도구

SublimeText3 Mac 버전

신 수준의 코드 편집 소프트웨어(SublimeText3)

Win11 시스템에서 중국어 언어 팩을 설치할 수 없는 문제에 대한 해결 방법

Mar 09, 2024 am 09:48 AM

Win11 시스템에서 중국어 언어 팩을 설치할 수 없는 문제에 대한 해결 방법

Mar 09, 2024 am 09:48 AM

Win11 시스템에서 중국어 언어 팩을 설치할 수 없는 문제 해결 Windows 11 시스템이 출시되면서 많은 사용자들이 새로운 기능과 인터페이스를 경험하기 위해 운영 체제를 업그레이드하기 시작했습니다. 그러나 일부 사용자는 업그레이드 후 중국어 언어 팩을 설치할 수 없어 경험에 문제가 있다는 사실을 발견했습니다. 이 기사에서는 Win11 시스템이 중국어 언어 팩을 설치할 수 없는 이유에 대해 논의하고 사용자가 이 문제를 해결하는 데 도움이 되는 몇 가지 솔루션을 제공합니다. 원인 분석 먼저 Win11 시스템의 무능력을 분석해 보겠습니다.

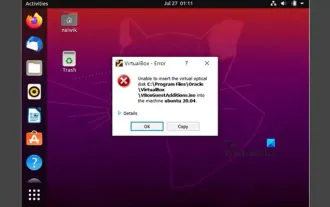

VirtualBox에 게스트 추가 기능을 설치할 수 없습니다

Mar 10, 2024 am 09:34 AM

VirtualBox에 게스트 추가 기능을 설치할 수 없습니다

Mar 10, 2024 am 09:34 AM

OracleVirtualBox의 가상 머신에 게스트 추가 기능을 설치하지 못할 수도 있습니다. Devices>InstallGuestAdditionsCDImage를 클릭하면 아래와 같이 오류가 발생합니다. VirtualBox - 오류: 가상 디스크를 삽입할 수 없습니다. C: 우분투 시스템에 FilesOracleVirtualBoxVBoxGuestAdditions.iso 프로그래밍 이 게시물에서는 어떤 일이 발생하는지 이해합니다. VirtualBox에 게스트 추가 기능을 설치할 수 없습니다. VirtualBox에 게스트 추가 기능을 설치할 수 없습니다. Virtua에 설치할 수 없는 경우

Baidu Netdisk를 성공적으로 다운로드했지만 설치할 수 없는 경우 어떻게 해야 합니까?

Mar 13, 2024 pm 10:22 PM

Baidu Netdisk를 성공적으로 다운로드했지만 설치할 수 없는 경우 어떻게 해야 합니까?

Mar 13, 2024 pm 10:22 PM

바이두 넷디스크 설치 파일을 성공적으로 다운로드 받았으나 정상적으로 설치가 되지 않는 경우, 소프트웨어 파일의 무결성에 문제가 있거나, 잔여 파일 및 레지스트리 항목에 문제가 있을 수 있으므로, 본 사이트에서 사용자들이 주의깊게 확인해 보도록 하겠습니다. Baidu Netdisk가 성공적으로 다운로드되었으나 설치가 되지 않는 문제에 대한 분석입니다. 바이두 넷디스크 다운로드에 성공했지만 설치가 되지 않는 문제 분석 1. 설치 파일의 무결성 확인: 다운로드한 설치 파일이 완전하고 손상되지 않았는지 확인하세요. 다시 다운로드하거나 신뢰할 수 있는 다른 소스에서 설치 파일을 다운로드해 보세요. 2. 바이러스 백신 소프트웨어 및 방화벽 끄기: 일부 바이러스 백신 소프트웨어 또는 방화벽 프로그램은 설치 프로그램이 제대로 실행되지 않도록 할 수 있습니다. 바이러스 백신 소프트웨어와 방화벽을 비활성화하거나 종료한 후 설치를 다시 실행해 보세요.

Linux Bashrc 이해: 기능, 구성 및 사용법

Mar 20, 2024 pm 03:30 PM

Linux Bashrc 이해: 기능, 구성 및 사용법

Mar 20, 2024 pm 03:30 PM

Linux Bashrc 이해: 기능, 구성 및 사용법 Linux 시스템에서 Bashrc(BourneAgainShellruncommands)는 시스템 시작 시 자동으로 실행되는 다양한 명령과 설정이 포함된 매우 중요한 구성 파일입니다. Bashrc 파일은 일반적으로 사용자의 홈 디렉토리에 있으며 숨겨진 파일입니다. 해당 기능은 사용자를 위해 Bashshell 환경을 사용자 정의하는 것입니다. 1. Bashrc 기능 설정 환경

Linux에 Android 앱을 설치하는 방법은 무엇입니까?

Mar 19, 2024 am 11:15 AM

Linux에 Android 앱을 설치하는 방법은 무엇입니까?

Mar 19, 2024 am 11:15 AM

Linux에 Android 애플리케이션을 설치하는 것은 항상 많은 사용자의 관심사였습니다. 특히 Android 애플리케이션을 사용하려는 Linux 사용자의 경우 Linux 시스템에 Android 애플리케이션을 설치하는 방법을 익히는 것이 매우 중요합니다. Linux에서 직접 Android 애플리케이션을 실행하는 것은 Android 플랫폼에서만큼 간단하지는 않지만 에뮬레이터나 타사 도구를 사용하면 여전히 Linux에서 Android 애플리케이션을 즐겁게 즐길 수 있습니다. 다음은 Linux 시스템에 Android 애플리케이션을 설치하는 방법을 소개합니다.

Ubuntu 24.04에 Podman을 설치하는 방법

Mar 22, 2024 am 11:26 AM

Ubuntu 24.04에 Podman을 설치하는 방법

Mar 22, 2024 am 11:26 AM

Docker를 사용해 본 적이 있다면 데몬, 컨테이너 및 해당 기능을 이해해야 합니다. 데몬은 컨테이너가 시스템에서 이미 사용 중일 때 백그라운드에서 실행되는 서비스입니다. Podman은 Docker와 같은 데몬에 의존하지 않고 컨테이너를 관리하고 생성하기 위한 무료 관리 도구입니다. 따라서 장기적인 백엔드 서비스 없이도 컨테이너를 관리할 수 있는 장점이 있습니다. 또한 Podman을 사용하려면 루트 수준 권한이 필요하지 않습니다. 이 가이드에서는 Ubuntu24에 Podman을 설치하는 방법을 자세히 설명합니다. 시스템을 업데이트하려면 먼저 시스템을 업데이트하고 Ubuntu24의 터미널 셸을 열어야 합니다. 설치 및 업그레이드 프로세스 중에 명령줄을 사용해야 합니다. 간단한

Ubuntu 24.04에서 Ubuntu Notes 앱을 설치하고 실행하는 방법

Mar 22, 2024 pm 04:40 PM

Ubuntu 24.04에서 Ubuntu Notes 앱을 설치하고 실행하는 방법

Mar 22, 2024 pm 04:40 PM

고등학교에서 공부하는 동안 일부 학생들은 매우 명확하고 정확한 필기를 하며, 같은 수업을 받는 다른 학생들보다 더 많은 필기를 합니다. 어떤 사람들에게는 노트 필기가 취미인 반면, 어떤 사람들에게는 중요한 것에 대한 작은 정보를 쉽게 잊어버릴 때 필수입니다. Microsoft의 NTFS 응용 프로그램은 정규 강의 외에 중요한 메모를 저장하려는 학생들에게 특히 유용합니다. 이 기사에서는 Ubuntu24에 Ubuntu 애플리케이션을 설치하는 방법을 설명합니다. Ubuntu 시스템 업데이트 Ubuntu 설치 프로그램을 설치하기 전에 Ubuntu24에서 새로 구성된 시스템이 업데이트되었는지 확인해야 합니다. 우분투 시스템에서 가장 유명한 "a"를 사용할 수 있습니다

Win7 시스템에서 Go 언어를 설치하는 방법은 무엇입니까?

Mar 27, 2024 pm 01:42 PM

Win7 시스템에서 Go 언어를 설치하는 방법은 무엇입니까?

Mar 27, 2024 pm 01:42 PM

Win7 시스템에서 Go 언어를 설치하는 것은 비교적 간단한 작업입니다. 성공적으로 설치하려면 다음 단계를 따르세요. 다음은 Win7 시스템에서 Go 언어를 설치하는 방법을 자세히 소개합니다. 1단계: Go 언어 설치 패키지를 다운로드합니다. 먼저 Go 언어 공식 웹사이트(https://golang.org/)를 열고 다운로드 페이지로 들어갑니다. 다운로드 페이지에서 Win7 시스템과 호환되는 설치 패키지 버전을 선택하여 다운로드하세요. 다운로드 버튼을 클릭하고 설치 패키지가 다운로드될 때까지 기다립니다. 2단계: Go 언어 설치